2168-7161 (c) 2015 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for more information.

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI 10.1109/TCC.2015.2511738, IEEE

Transactions on Cloud Computing


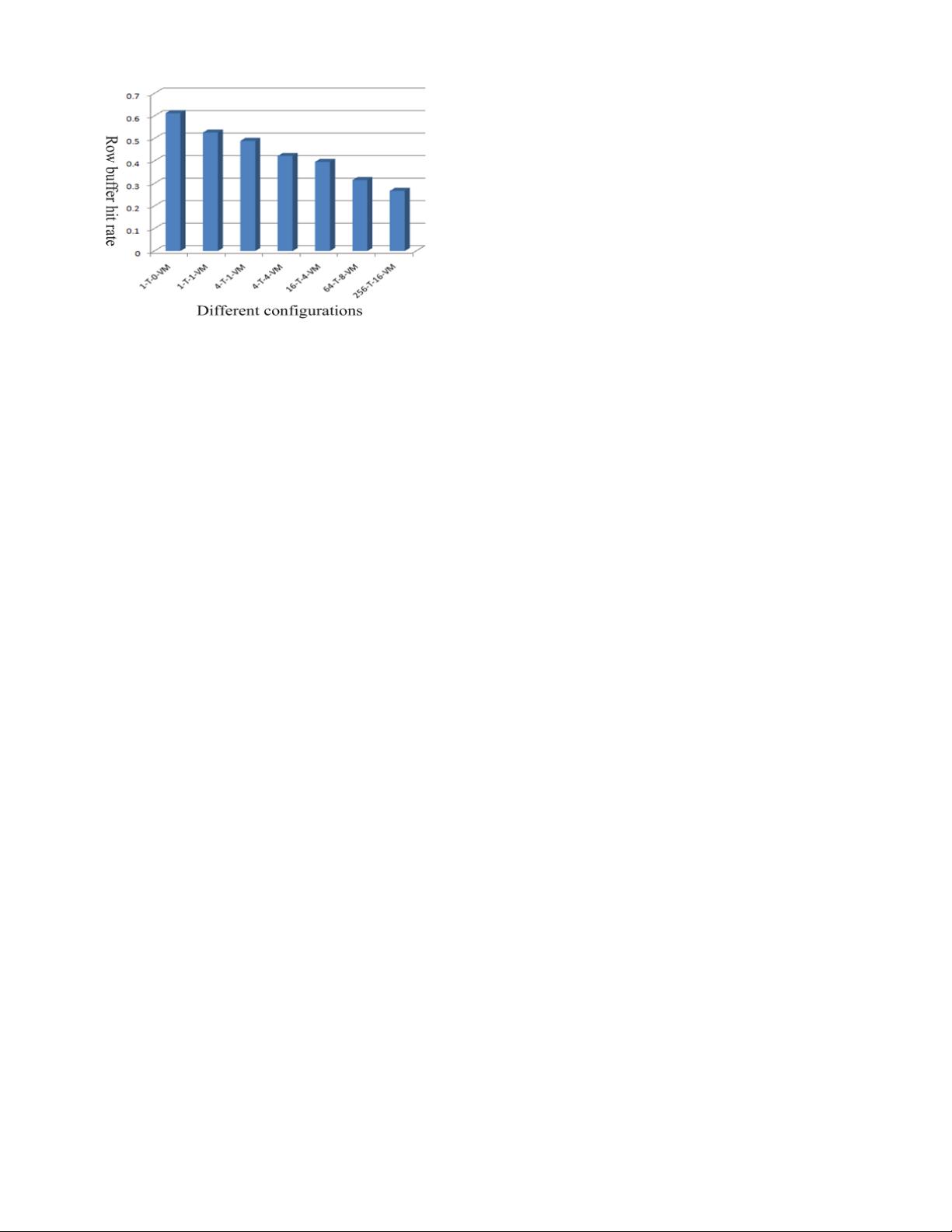

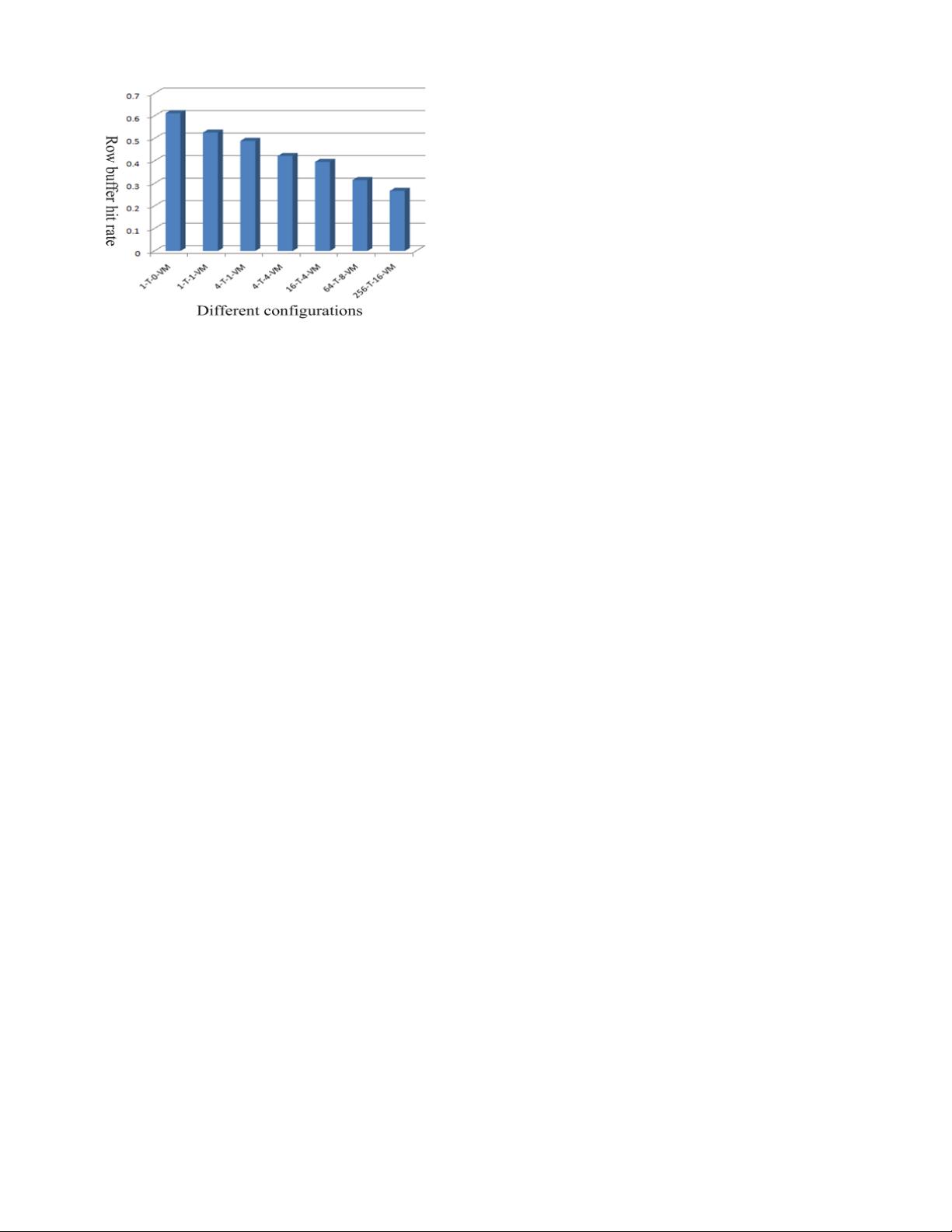

Fig. 2. Row buffer hit rate under different running configurations

each time an array is accessed, an entire multi-KB row

is transferred to a row buffer. This operation is called an

“activation” or a “row opening”. Then, any column of the row

can be read/written over the channel in one burst. Because

the activation is destructive, the corresponding row eventually

needs to be “pre-charged”, that is, written back to the array.
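The activation and pre-charge behavior described above can be illustrated with a minimal sketch of a single DRAM bank with one row buffer. The 8 KB row size, the open-row policy, and the names `Bank`, `access`, and `hit_rate` are assumptions made for illustration; the text only states that a row is several KB.

```python
from dataclasses import dataclass
from typing import Optional

ROW_SIZE = 8 * 1024  # assumed row size in bytes (the text only says "multi-KB")


@dataclass
class Bank:
    """Hypothetical model of one DRAM bank with a single row buffer."""
    open_row: Optional[int] = None  # row currently held in the row buffer
    hits: int = 0
    misses: int = 0

    def access(self, addr: int) -> bool:
        """Access one address; return True on a row buffer hit."""
        row = addr // ROW_SIZE
        if row == self.open_row:
            self.hits += 1
            return True
        # Miss: the open row (if any) is pre-charged, i.e. written back,
        # and the requested row is activated into the row buffer.
        self.open_row = row
        self.misses += 1
        return False

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0
```

In this model, a sequential scan that touches every 64-byte block of two consecutive rows causes only two activations; every other access is served from the row buffer.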

B. Profiling of Interference

As the number of cores and VMs in a server keeps increasing, interference becomes one of the major challenges for the memory system. A single thread's memory requests usually have good locality and therefore exhibit a high row buffer hit rate. However, this locality is significantly reduced in a multi-core server that runs many VMs in parallel. As a result, the row buffer hit rate decreases sharply, leading to poor overall system performance.

Figure 2 demonstrates that the row buffer hit rate decreases significantly as the number of threads and VMs increases. The y-axis shows the row buffer hit rate, and the x-axis shows the different running configurations. The label n-T-m-VM denotes a server running m VMs with n threads in total, so that every VM has n/m threads.
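The configuration labels can be decoded mechanically. The following sketch parses a label of the form n-T-m-VM and derives the threads per VM; it also accepts the plain n-T form that Figure 2 uses for a run without a hypervisor. The names `RunConfig` and `parse_config`, and the convention of using 0 VMs for the native case, are assumptions made for illustration.

```python
import re
from typing import NamedTuple, Optional


class RunConfig(NamedTuple):
    threads: int                   # n: total threads on the server
    vms: int                       # m: number of VMs (0 = native, assumed convention)
    threads_per_vm: Optional[int]  # n/m, or None for a native run


_LABEL = re.compile(r"^(\d+)-T(?:-(\d+)-VM)?$")


def parse_config(label: str) -> RunConfig:
    """Parse a label such as '64-T-8-VM' or '1-T'."""
    match = _LABEL.match(label)
    if match is None:
        raise ValueError(f"unrecognized configuration label: {label!r}")
    threads = int(match.group(1))
    if match.group(2) is None:
        return RunConfig(threads, 0, None)
    vms = int(match.group(2))
    if vms == 0 or threads % vms != 0:
        raise ValueError(f"threads must divide evenly among VMs: {label!r}")
    return RunConfig(threads, vms, threads // vms)
```

For example, `parse_config("256-T-16-VM")` yields 16 VMs with 16 threads each.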

The interference mainly derives from three aspects:

1) The hypervisor interferes with VMs. The hypervisor presents the guest operating systems with a virtual operating platform and manages their execution. Multiple instances of a variety of operating systems may share the virtualized hardware resources. The hypervisor is responsible for resource management. Therefore, VMs need to request physical resources, such as memory, from the hypervisor during their lifetime. In the process of memory allocation, the hypervisor may disturb the original memory access stream of the VMs, which is one source of memory interference.

This effect is visible in Figure 2. The 1-T configuration, which represents one thread running on an operating system without a hypervisor, achieves a higher row buffer hit rate than 1-T-1-VM, which represents one thread running on one VM with a hypervisor. The advantage comes mainly from the absence of hypervisor interference.

Moreover, to analyze the interference from the hypervisor in detail, we count the proportion of VM row buffer misses that is caused by the hypervisor. Figure 3 shows this proportion in different configurations. The x-axis shows the different PARSEC benchmarks running on the VM. The figure shows that the hypervisor contributes a large share of the row buffer misses.

2) The VM interferes with the applications running on it. To obtain services from the guest operating system (OS), applications need to invoke system calls frequently during their lifetime. To access the guest OS's address space, an application has to switch to kernel mode. After the service finishes, execution returns to user mode, that is, to the application's own address space. Even for a simple system call, in which the kernel uses only a small part of a page, these steps must be completed, which may lead to two additional row buffer misses. Although guest OS invocations are usually short-lived, they occur frequently [18], which leads to frequent switches between kernel mode and user mode and intensifies the interference. Figure 4 shows the proportion of row buffer misses caused by the guest OS for different benchmarks. For this figure, we run different benchmarks on a VM and count the proportion of misses caused by system calls and other guest OS interference. The figure clearly shows that the guest OS contributes most of the row buffer misses experienced by applications.

3) Interference among VMs and among threads on one VM. VMs, and threads on one VM, that run concurrently contend for shared memory in both CMP systems and virtual CMP (VCMP) systems. Therefore, the memory streams of different VMs and threads are interleaved and interfere with each other in DRAM and in the virtual memory address space, respectively. The results in Figure 2 show this interference. The 1-T-1-VM configuration, which represents one thread running on one VM, achieves a higher row buffer hit rate than 4-T-4-VM, which represents four threads running on four VMs with one thread per VM. In short, one VM has a lower row buffer miss rate than four VMs running simultaneously. Moreover, as the number of threads per VM increases from one to 16 across 1-T-1-VM, 4-T-1-VM, 64-T-8-VM, and 256-T-16-VM, the row buffer hit rate decreases severely. Interference among VMs and among threads on one VM therefore needs to be alleviated to improve memory performance.
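Two of the effects listed above can be reproduced with a toy model: a short kernel excursion that adds two row buffer misses, and interleaved streams that destroy row locality. The sketch below is an illustration only, assuming a single bank, in-order service, an 8 KB row, 64-byte accesses, and streams placed in different rows; real memory controllers use multiple banks and reorder requests, so the numbers are an extreme case rather than a measurement. All function names are hypothetical.

```python
ROW_SIZE = 8 * 1024  # assumed row size in bytes
STRIDE = 64          # assumed access granularity in bytes


def row_misses(addresses):
    """Count row buffer misses for an in-order stream on one bank."""
    open_row = None
    misses = 0
    for addr in addresses:
        row = addr // ROW_SIZE
        if row != open_row:
            misses += 1
            open_row = row
    return misses


def hit_rate(addresses):
    addresses = list(addresses)
    return 1 - row_misses(addresses) / len(addresses) if addresses else 0.0


def stream(base, count):
    """A sequential stream of `count` accesses starting at `base`."""
    return [base + i * STRIDE for i in range(count)]


def interleave(*streams):
    """Round-robin merge of equally long streams."""
    return [addr for group in zip(*streams) for addr in group]


# A single thread scanning one row misses only on the first access.
single = stream(0, 128)

# Four streams in four different rows, served round-robin, never hit.
mixed = interleave(*[stream(i << 20, 128) for i in range(4)])

# A user stream interrupted by one access to a kernel row: the kernel
# access misses, and so does the first user access after the return.
user = stream(0, 4)
with_syscall = user[:2] + [1 << 20] + user[2:]
```

Here `row_misses(with_syscall) - row_misses(user)` evaluates to 2, matching the two additional misses attributed to a simple system call, while `hit_rate(mixed)` drops to zero in this worst-case interleaving.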

C. Profiling of Memory Sharing

Memory deduplication reduces memory requirements based on the assumption that a system holds many pages with identical contents. In a virtualized environment, a physical server usually hosts multiple VMs that run simultaneously to provide different services. The software as well as the data used in these VMs can be similar [19]. Therefore, by merging identical pages, the physical system can free additional pages.
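The idea of merging identical pages can be sketched as follows. This is a simplified illustration, not the mechanism evaluated in this paper: pages are compared by their full contents, the 4 KB page size is an assumption, and the function name `merge_identical_pages` and the `(vm, page number)` identifiers are hypothetical.

```python
PAGE_SIZE = 4096  # assumed page size in bytes


def merge_identical_pages(pages):
    """Map every page to one canonical page with the same contents.

    `pages` maps a page identifier to its contents (bytes).
    Returns (mapping, freed): `mapping` sends each page identifier to the
    identifier of the page that keeps the physical frame, and `freed` is
    the number of frames that can be released.
    """
    canonical = {}  # contents -> identifier of the first page seen
    mapping = {}
    for page_id, contents in pages.items():
        mapping[page_id] = canonical.setdefault(contents, page_id)
    freed = len(pages) - len(canonical)
    return mapping, freed


# Two VMs that each hold a zero-filled page and one private page.
pages = {
    ("vm1", 0): bytes(PAGE_SIZE),
    ("vm1", 1): b"A" * PAGE_SIZE,
    ("vm2", 0): bytes(PAGE_SIZE),
    ("vm2", 1): b"B" * PAGE_SIZE,
}
mapping, freed = merge_identical_pages(pages)
```

In this example the two zero-filled pages share one frame, so one of the four frames is freed. A real implementation would also need copy-on-write protection so that a later write to a shared page does not affect the other VM.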

Moreover, the guest OSes running on the VMs also share many identical contents. Figure 5 shows the proportion of identical pages between two VMs with no applications running on them. In the figure, the x-axis presents the two guest