
Research on Parallel Computing Performance Visualization Based on MPI

ZHANG Fan¹,²

1. School of Geophysics and Information Technology, China University of Geosciences (Beijing)

2. Institute of Geophysics, University of Mining and Technology Freiberg, Germany

Beijing, P.R. China

E-mail: 68kilo@163.com

Abstract—With the development of parallel technology and its applications, performance analysis and visualization have become one of the most important parts of parallel computing. This paper presents a parallel computing performance visualization system developed to visualize and analyze the speedup and efficiency of parallel computing. We visualize and analyze the results of an MPI-based magnetotelluric parallel forward modeling program that uses the finite element method. The system successfully collects program execution time and communication time, and visualizes and analyzes the speedup and efficiency.

Keywords-Parallel Computing; Performance; Speedup; Efficiency; MPI

I. INTRODUCTION

Parallel computing means processing many tasks, instructions and data at the same time. It is realized on computer clusters or on multi-core computers. The aims of parallel computing are to improve calculation speed and to solve problems that cannot be solved by traditional computers. Performance is becoming more and more important in the development of parallel programs. However, it is hard to attend to both parallel programming and performance at the same time. To help program designers easily find and analyze the factors that influence program execution, performance visualization tools have been developed.

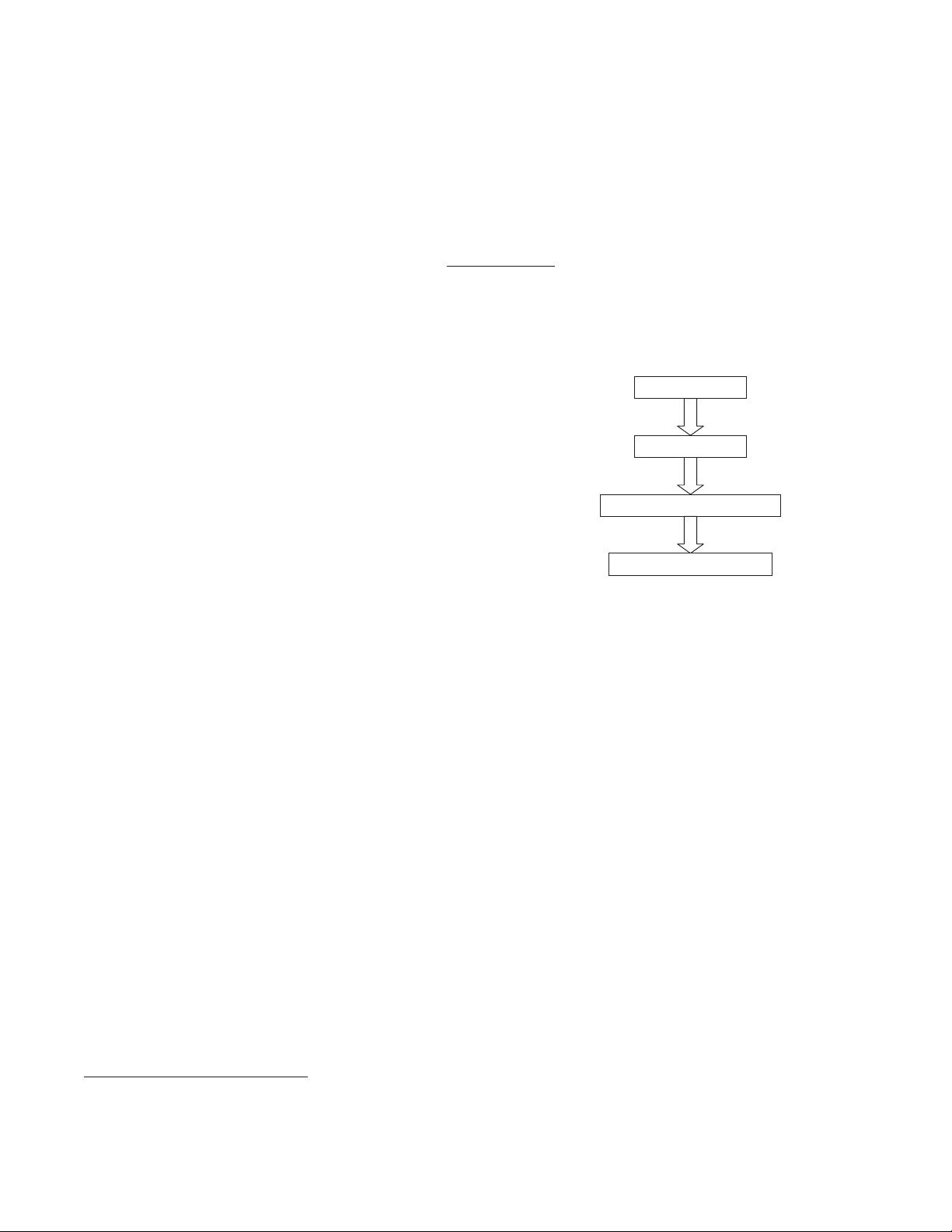

Generally, performance visualization records the execution time and other factors that influence performance, and then renders this information as 2D or 3D graphics that are shown directly to programmers. Performance visualization involves three processes.

(1) Data generation. This process generates performance data and event trace files. Visualization tools can be classified according to when the data are generated and how the data files are stored.

(2) Data display. There are many types of data, such as processor, communication, memory, operating system and communication protocol data. An appropriate visualization tool should be chosen for each type of data.

978-1-4244-5848-6/10/$26.00 ©2010 IEEE

(3) Data analysis and user interaction. In this process, users can choose among the analysis methods provided by the visualization tools and use different interactions, such as replay and animation.

Figure 1. The Realization Processes for Performance Visualization (stages: data generation, data display, data analysis and user interaction)
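The data generation and display stages can be illustrated with a small sketch. It assumes a hypothetical `TraceRecorder` that timestamps code regions labeled "compute" or "comm" for each process rank and totals them per rank, plus a crude text renderer standing in for the display stage. This is not the author's system. In a real MPI program the timestamps would typically come from `MPI_Wtime` rather than Python's `time.perf_counter`.

```python
import time
from collections import defaultdict
from contextlib import contextmanager
from dataclasses import dataclass


@dataclass
class Event:
    rank: int      # process rank that produced the event
    kind: str      # hypothetical labels, e.g. "compute" or "comm"
    start: float   # timestamp in seconds
    end: float


class TraceRecorder:
    """Hypothetical recorder for the data generation stage."""

    def __init__(self, clock=time.perf_counter):
        self.clock = clock   # injectable so the sketch can be tested deterministically
        self.events = []     # in-memory event trace

    @contextmanager
    def region(self, rank, kind):
        """Time the enclosed block and append it to the trace."""
        start = self.clock()
        try:
            yield
        finally:
            self.events.append(Event(rank, kind, start, self.clock()))

    def summary(self):
        """Total time per rank and per event kind."""
        totals = defaultdict(lambda: defaultdict(float))
        for e in self.events:
            totals[e.rank][e.kind] += e.end - e.start
        return {rank: dict(kinds) for rank, kinds in totals.items()}


def render_text(summary, scale=10):
    """Crude stand-in for the display stage: one '#' per 1/scale seconds."""
    lines = []
    for rank in sorted(summary):
        for kind, t in sorted(summary[rank].items()):
            lines.append(f"rank {rank} {kind:<8}{'#' * round(t * scale)} {t:.3f}s")
    return lines


if __name__ == "__main__":
    rec = TraceRecorder()
    with rec.region(0, "compute"):
        sum(i * i for i in range(100_000))  # stand-in for real work
    with rec.region(0, "comm"):
        time.sleep(0.01)                    # stand-in for a message exchange
    for line in render_text(rec.summary()):
        print(line)
```

The clock is injectable, which keeps measurement separate from display. The same trace could therefore feed a 2D chart instead of the text renderer.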

MPI (Message Passing Interface) was released in May 1994. It provides a widely used standard for writing message-passing programs that is independent of programming language and platform. Strictly speaking, MPI is a standard specification for a message-passing function library, so it is a library rather than a language. It can be called from FORTRAN77, C, Fortran90 and C++. MPI is freely available and is supported by all parallel computer manufacturers, so MPI programs can run on all of these parallel computers without modification. As a message-passing programming model, MPI aims to provide services for communication between processes.

II. TWO INDEXES OF PARALLEL COMPUTING PERFORMANCE: SPEEDUP AND EFFICIENCY

A. Speedup

Speedup is often used to measure the performance of parallel algorithms. It is the ratio of the time needed to solve a problem on a single processor to the time needed to solve the same problem on multiple processors.

$$S = \frac{T_s}{T_p}$$

where $T_s$ is the serial computing time and $T_p$ is the parallel computing time on $p$ processors.
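The speedup formula can be applied to a set of runs with a minimal sketch. The helper names `speedup` and `speedup_table` are hypothetical, and the timings in the example are made-up placeholders. They are not measurements from the magnetotelluric program.

```python
def speedup(serial_time: float, parallel_time: float) -> float:
    """Speedup = serial computing time / parallel computing time."""
    if serial_time <= 0 or parallel_time <= 0:
        raise ValueError("times must be positive")
    return serial_time / parallel_time


def speedup_table(serial_time: float, parallel_times: dict[int, float]) -> dict[int, float]:
    """Map each processor count p to the speedup T_s / T_p, ordered by p."""
    return {p: speedup(serial_time, t) for p, t in sorted(parallel_times.items())}


if __name__ == "__main__":
    # Hypothetical wall-clock times in seconds (illustration only).
    t_serial = 120.0
    t_parallel = {2: 64.0, 4: 35.0, 8: 20.0}
    for p, s in speedup_table(t_serial, t_parallel).items():
        print(f"p = {p}: speedup = {s:.2f}")
```

Comparing each speedup with its processor count $p$ gives a quick check against the theoretical bound $p$.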

In theory, speedup cannot exceed the number of processors $p$. If the time needed to solve a problem with the best serial algorithm is $T_s$, and the time needed to solve the same problem on $p$ processors is not more than $T_s / p$, it means that the time cost of every processor is not more than