650 IEEE TRANSACTIONS ON IMAGE PROCESSING, VOL. 26, NO. 2, FEBRUARY 2017

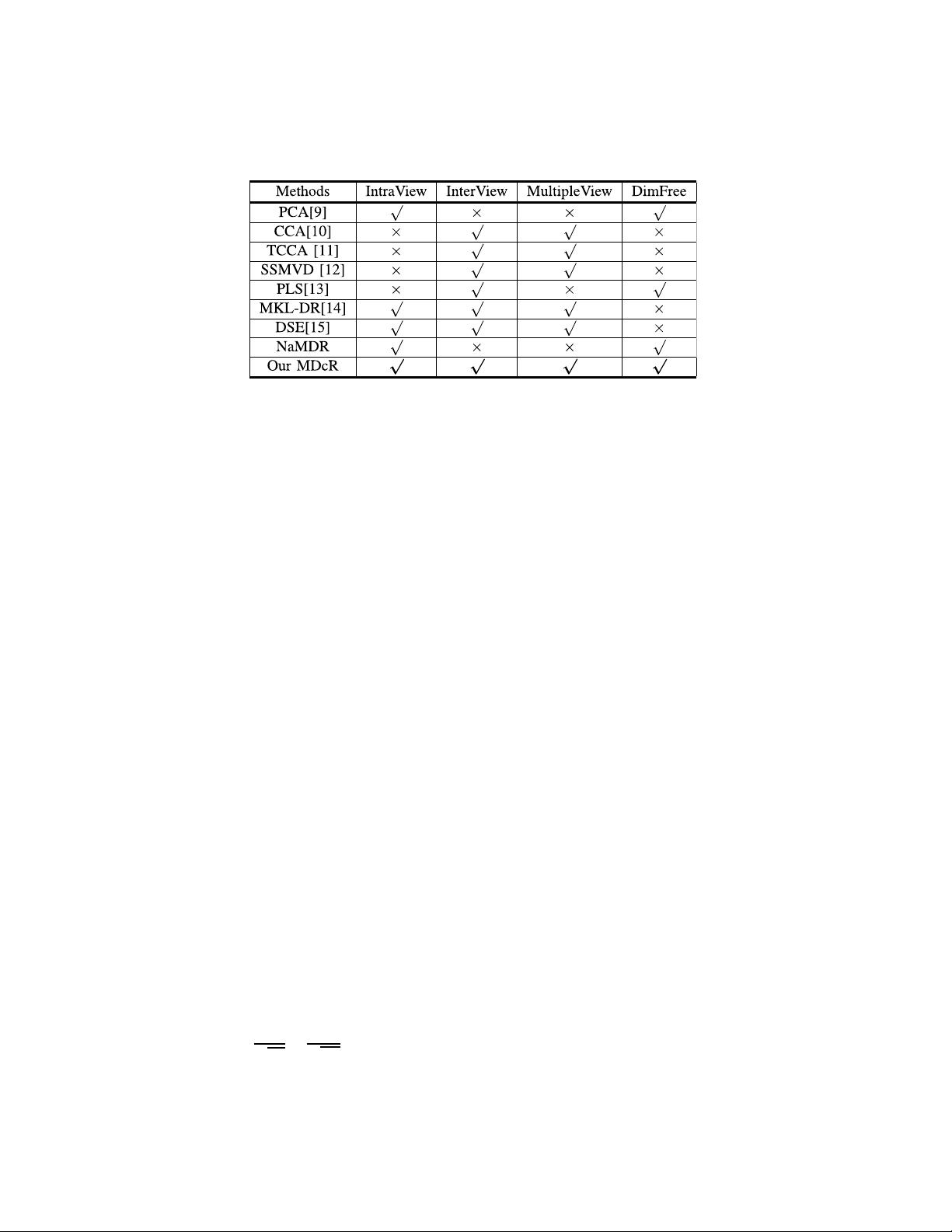

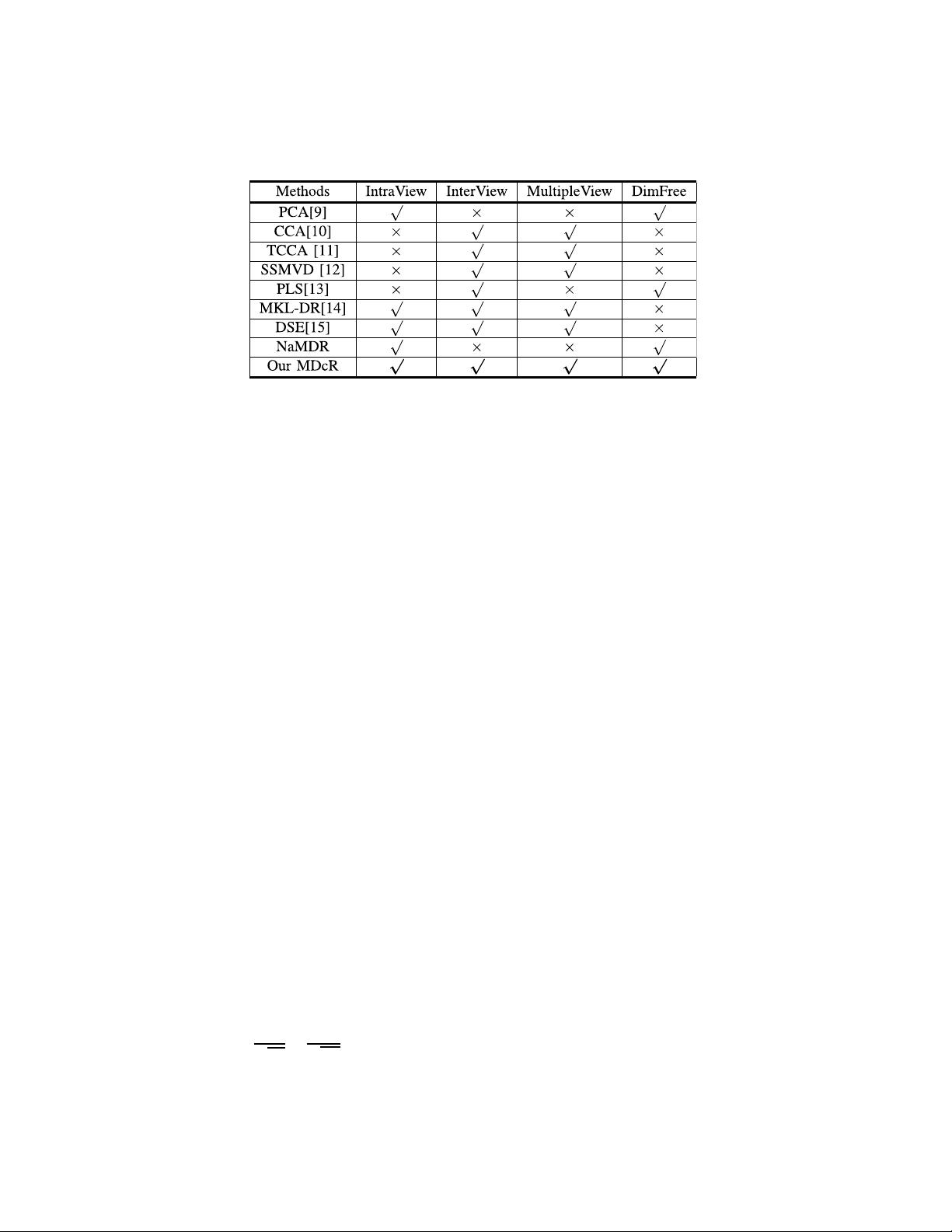

TABLE I

COMPARISON OF UNSUPERVISED MULTI-VIEW DIMENSIONALITY REDUCTION METHODS. INTRAVIEW: EXPLORING THE CORRELATIONS WITHIN EACH VIEW; INTERVIEW: EXPLORING THE CORRELATIONS ACROSS THE DIFFERENT VIEWS; MULTIPLEVIEW: APPLIED FOR THE DATA WITH MORE THAN 2 VIEWS; DIMFREE: FREE OF REDUCING EACH VIEW TO A COMMON SPACE ('√' AND '×' INDICATE PRESENCE AND ABSENCE OF PROPERTY, RESPECTIVELY)

is measured by HSIC. It employs the inner product kernel as well as two nonlinear kernels. However, the optimization for the nonlinear case is not very efficient. In [35], the authors exploit the HSIC criterion in a semi-supervised manner to map pairs of instances to each other without requiring exact correspondence. The work in [32] performs self-based subspace clustering with multiple views, using HSIC as a diversity measure. In our work, the goal is to perform multi-view dimensionality reduction jointly. To this end, we learn a low-dimensional projection for each view by maximizing HSIC over the kernel matrices to explore the correlations across the multiple views.

III. THE PROPOSED METHOD

We first give a brief introduction to graph embedding dimensionality reduction [39]. We then detail HSIC for kernel matching and introduce the proposed MDcR method.

A. Graph Embedding Dimensionality Reduction

Graph embedding dimensionality reduction [39] aims to perform dimensionality reduction while preserving the local relationships among points. Given $X = [x_1, x_2, \ldots, x_N] \in \mathbb{R}^{D \times N}$ with each column being a sample vector, the goal of dimensionality reduction is to obtain the corresponding low-dimensional representation $Z = [z_1, z_2, \ldots, z_N] \in \mathbb{R}^{K \times N}$, where $K \ll D$ and $z_i$ is the low-dimensional representation of $x_i$. For the graph embedding based dimensionality reduction method [39], the objective is designed to ensure sufficient smoothness on the data manifold. The intuitive explanation is that if two data points $x_i$ and $x_j$ are close, then their low-dimensional representations $z_i$ and $z_j$ should be similar to each other. The distance between two low-dimensional representations $z_i$ and $z_j$ is defined as

$$d(z_i, z_j) = \left\| \frac{z_i}{\sqrt{d_{ii}}} - \frac{z_j}{\sqrt{d_{jj}}} \right\|^2, \tag{1}$$

where the distance is normalized by $d_{ii}$ and $d_{jj}$ in order to reduce the impact of the popularity of nodes, as in traditional graph-based learning [40], [41], and $D$ is a diagonal matrix with elements $d_{ii} = \sum_{j=1}^{N} w_{ij}$. $W = (w_{ij})$ is the affinity matrix, which is often constructed from the original data matrix $X$. Then, the graph-regularized representation can be learned by the following objective function

$$\min_{Z \in \mathbb{R}^{K \times N}} \sum_{ij} \|z_i - z_j\|^2 w_{ij} = \max_{Z \in \mathbb{R}^{K \times N}} \mathrm{tr}(Z L Z^T), \tag{2}$$

where $L = D^{-1/2} W D^{-1/2}$ is the normalized graph Laplacian matrix, and $\mathrm{tr}(\cdot)$ denotes the trace of a matrix.
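The link between the pairwise form and the trace form can be checked numerically. The following is a minimal sketch, not the authors' implementation: it assumes a dense Gaussian affinity for $W$ (the text only says $W$ is often built from $X$), and the function names and the bandwidth `sigma` are hypothetical. With the normalized distance of Eq. (1), the weighted sum of pairwise distances equals $2\,\mathrm{tr}(ZZ^T) - 2\,\mathrm{tr}(ZLZ^T)$, so minimizing it matches maximizing $\mathrm{tr}(ZLZ^T)$ whenever $\mathrm{tr}(ZZ^T)$ is held fixed.

```python
import numpy as np

def gaussian_affinity(X, sigma=1.0):
    """Dense Gaussian affinity between the columns of X (D x N).
    Assumption: this is one common way to build W, chosen for illustration."""
    sq = np.sum(X**2, axis=0)
    dist2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X.T @ X, 0.0)
    W = np.exp(-dist2 / (2.0 * sigma**2))
    np.fill_diagonal(W, 0.0)  # no self-loops
    return W

def normalized_affinity(W):
    """Return L = D^{-1/2} W D^{-1/2} and the degree vector d (d_ii = sum_j w_ij)."""
    d = W.sum(axis=1)
    inv_sqrt = 1.0 / np.sqrt(d)
    return inv_sqrt[:, None] * W * inv_sqrt[None, :], d

def normalized_distance_sum(Z, W, d):
    """sum_ij w_ij * || z_i / sqrt(d_ii) - z_j / sqrt(d_jj) ||^2 for Z of shape K x N."""
    A = Z / np.sqrt(d)  # scale column i by 1 / sqrt(d_ii)
    sq = np.sum(A**2, axis=0)
    dist2 = sq[:, None] + sq[None, :] - 2.0 * A.T @ A
    return float(np.sum(W * dist2))

rng = np.random.default_rng(0)
X = rng.standard_normal((5, 30))  # D = 5 features, N = 30 samples
Z = rng.standard_normal((2, 30))  # an arbitrary K = 2 representation
W = gaussian_affinity(X, sigma=2.0)
L, d = normalized_affinity(W)

lhs = normalized_distance_sum(Z, W, d)
rhs = 2.0 * np.trace(Z @ Z.T) - 2.0 * np.trace(Z @ L @ Z.T)
print(np.isclose(lhs, rhs))
```

The check holds for any symmetric affinity with positive degrees; the Gaussian kernel is used here only because it guarantees both properties.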

For the multi-view setting, a naive approach is to incorporate the multiple views directly as

$$\max_{Z^{(v)} \in \mathbb{R}^{K^{(v)} \times N}} \sum_{v=1}^{V} \mathrm{tr}\left(Z^{(v)} L^{(v)} Z^{(v)T}\right), \tag{3}$$

where $v$ denotes the view index, and $Z^{(v)}$ and $L^{(v)}$ denote the learned low-dimensional representation and the normalized graph Laplacian matrix corresponding to the $v$th view, respectively. To explicitly learn the projections between the high-dimensional and low-dimensional spaces, the projection $P^{(v)}$ is introduced as $Z^{(v)} = P^{(v)} X^{(v)}$ for the $v$th view. Accordingly, the objective function in Eq. (3) becomes

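$$\max_{P^{(v)} \in \mathbb{R}^{K^{(v)} \times D^{(v)}}} \sum_{v=1}^{V} \mathrm{tr}\left(P^{(v)} X^{(v)} L^{(v)} X^{(v)T} P^{(v)T}\right) \quad \text{s.t.} \quad P^{(v)} P^{(v)T} = I, \; v = 1, \ldots, V, \tag{4}$$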

where $D^{(v)}$ and $K^{(v)}$ are the dimensionalities of the original (high-dimensional) space and the corresponding reduced (low-dimensional) subspace, respectively. The constraint $P^{(v)} P^{(v)T} = I$ prevents the trivial solution. Intuitively, this naive approach reduces the dimensionality of each view independently and does not exploit the correlations among the multiple views.
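Because the views in Eq. (4) are decoupled, each $P^{(v)}$ can be found on its own. The sketch below illustrates this under stated assumptions and is not presented as the authors' solver: it relies on the standard result that, for a symmetric matrix $M$, $\mathrm{tr}(PMP^T)$ subject to $PP^T = I$ is maximized by taking the rows of $P$ as the top-$K$ eigenvectors of $M$. The Gaussian affinity, the bandwidth `sigma`, the random data, and all function names are hypothetical choices for illustration.

```python
import numpy as np

def normalized_affinity(X, sigma):
    """L = D^{-1/2} W D^{-1/2} from a dense Gaussian affinity on the columns of X.
    Assumption: the affinity construction is an illustrative choice."""
    sq = np.sum(X**2, axis=0)
    dist2 = np.maximum(sq[:, None] + sq[None, :] - 2.0 * X.T @ X, 0.0)
    W = np.exp(-dist2 / (2.0 * sigma**2))
    np.fill_diagonal(W, 0.0)
    inv_sqrt = 1.0 / np.sqrt(W.sum(axis=1))
    return inv_sqrt[:, None] * W * inv_sqrt[None, :]

def naive_view_projection(X, L, K):
    """Maximize tr(P X L X^T P^T) s.t. P P^T = I for a single view.
    Returns P (K x D) and the attained objective value."""
    M = X @ L @ X.T
    M = 0.5 * (M + M.T)                   # symmetrize against round-off
    eigvals, eigvecs = np.linalg.eigh(M)  # eigenvalues in ascending order
    P = eigvecs[:, -K:][:, ::-1].T        # rows = top-K eigenvectors, largest first
    objective = float(np.trace(P @ M @ P.T))
    return P, objective

rng = np.random.default_rng(0)
# Two synthetic views of the same N = 40 samples with different dimensionalities.
views = [rng.standard_normal((6, 40)), rng.standard_normal((9, 40))]
dims = [2, 3]  # K^(v) may differ per view

projections, objectives = [], []
for X_v, K_v in zip(views, dims):
    L_v = normalized_affinity(X_v, sigma=3.0)
    P_v, obj_v = naive_view_projection(X_v, L_v, K_v)
    projections.append(P_v)
    objectives.append(obj_v)

total_objective = sum(objectives)  # value of the summed objective in Eq. (4)
```

Each view is processed in isolation, so nothing in this loop ties the reduced representations of different views together, which is the limitation the text points out.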

B. Kernel Matching via HSIC

In this work, we introduce the Hilbert-Schmidt Independence Criterion (HSIC) [36] to explore the correlations among different views, which has the following advantages [38]. First, HSIC measures the dependence of the reduced subspaces of different views by mapping variables into a reproducing kernel Hilbert space (RKHS), so that these views need not depend on a common low-dimensional subspace, which is critical to