P1

B1

P2

B2

Exec%T1

Commit

Exec%T2%(A)

Exec%T2%(B)

Commit

Commit

Exec%T1

Commit

Exec%T2%(B)

Exec%T2%(A)

Commit

Commit

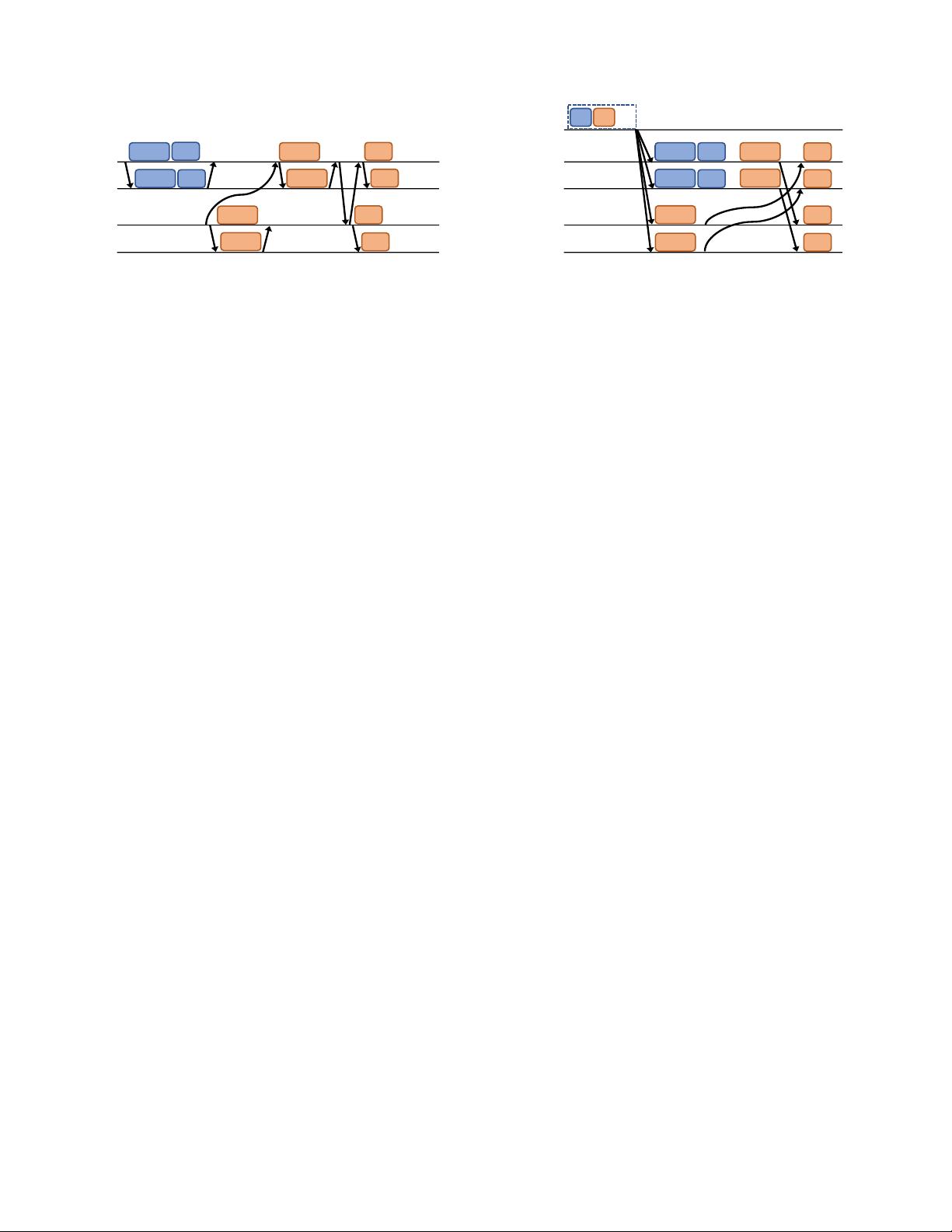

Figure 2: Active-active replication in H-store/VoltDB

all replicas of the database in the same deterministic order, such

that all the replicas end up with identical states once the batch is

executed. Replicas in an active-active database only coordinate to

determine the batches, but do not coordinate during the execution

of transactions, which significantly reduces the network traffic be-

tween replicas. Two prominent examples of databases which use

active-active replication are H-store [16] (and its commercial suc-

cessor VoltDB [35]) and Calvin [37].

H-Store’s replication protocol is illustrated in Figure 2. In H-

Store, all transactions have to be registered in advance as stored

procedures. The system is optimized to execute each transaction

from the beginning to completion with minimum overhead. In H-

Store, transactions do not get record locks, and instead only lock

the partition they need. Transactions are executed in each parti-

tion sequentially, without getting pre-empted by other concurrent

transactions. For a single-partition transaction such as T 1, the pri-

mary replicates the ID of the invoked stored procedure along with

its parameters to all its backup replicas. All replicas, including the

primary, start executing the transaction code in parallel. Unlike in

log shipping, here the replicas do not need to coordinate, as they ex-

ecute the same sequence of transactions and make deterministic de-

cisions (commit or abort) for each transaction. For multi-partition

transactions, one of the primaries acts as the transaction coordina-

tor and sends the stored procedure and its parameters to the other

participating partitions. At each partition, an exclusive lock is ac-

quired, so that no other transaction is allowed to be executed on that

partition. Each partition sends the stored procedure to all its backup

replicas so they run the same transaction and build their write-set.

Finally, the coordinator initiates a 2PC to ensure that all the other

primaries are able to commit. H-Store performs extremely well if

the workload consists of mostly single-partition transactions. How-

ever, its performance quickly degrades in the presence of multi-

partition transactions, since all participating partitions are blocked

for the entire duration of such transactions.

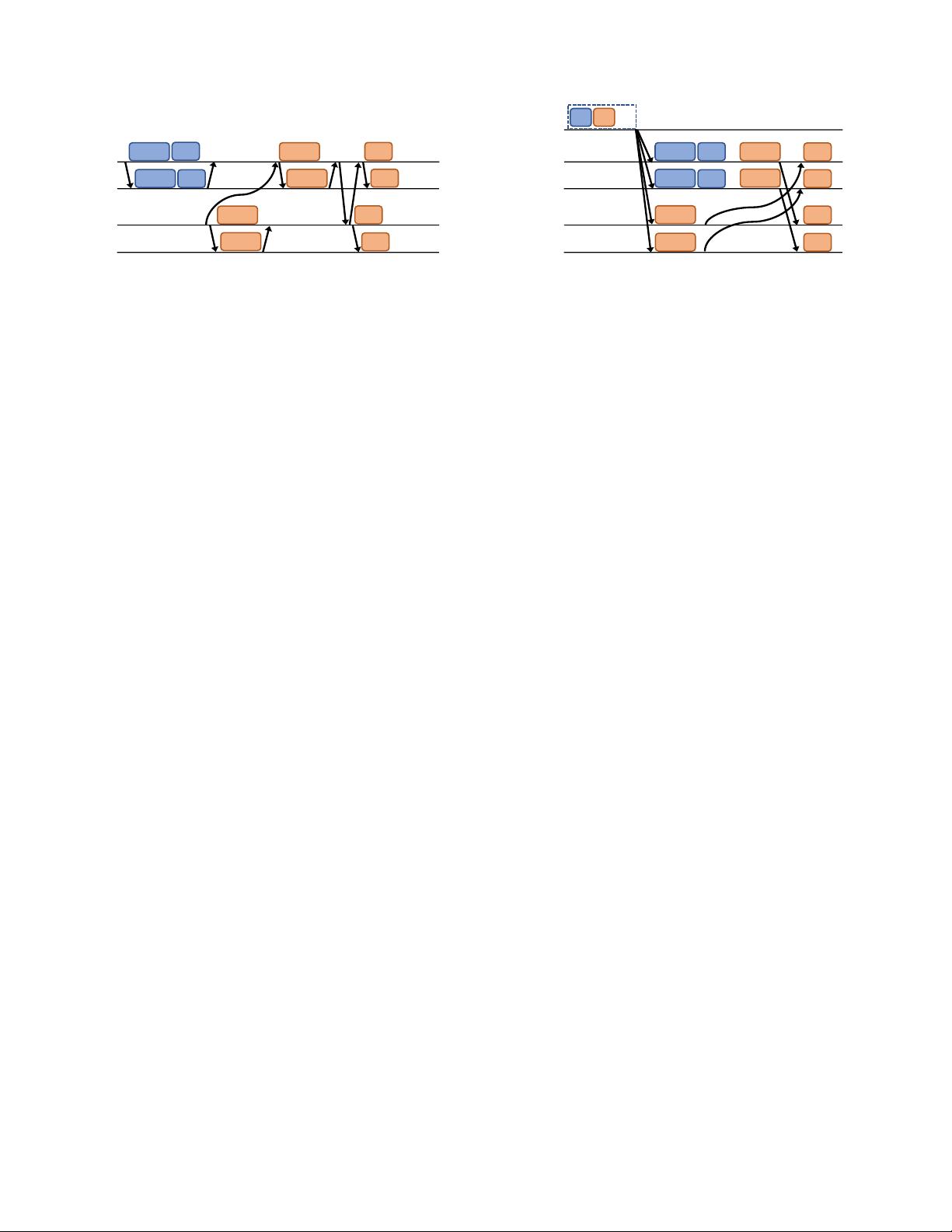

Calvin [37] is another active-active system that takes a differ-

ent approach than H-Store to enforce determinism in execution and

replication (Figure 3). All transactions first arrive at the sequencer

which orders all the incoming transactions in one single serial his-

tory. The inputs of the transactions are logged and replicated to all

the replicas. Then, a single lock manager thread in each partition

scans the serial history generated by the sequencer and acquires all

the locks for each transaction, if possible. If the lock is already

held, the transaction has to be queued for that lock. Therefore,

Calvin requires that the read-set and write-set of transactions are

known upfront, so that the lock manager would know what locks

to get before even executing the transaction (This assumption may

be too strict for a large category of workloads, where the set of

records that a transaction is read or modified is known throughout

executing the transaction). Those transactions which all their locks

are acquired by the lock manager are then executed by the worker

Sequencer

P1

B1

P2

Sequencing

B2

!"

!#

…

$%&'(!"()*+

$%&'(!"()*+

$%&'(!#(),+

$%&'(!#(),+

$%&'(!#()*+

$%&'(!#()*+

-.//01

-.//01

-.//01

-.//01

-.//01

-.//01

Figure 3: Active-active replication in Calvin

threads in each replica without any coordination between replicas.

For multi-partition transactions, the participating partitions com-

municate their results to each other in a push-based manner (instead

of pull-based, which is common in the other execution schemes).

Compared to Calvin with its sequencing overhead, H-store has

a much lower overhead for single-partitioned transactions. Calvin,

on the other hand, benefits from its global sequencing for multi-

partition transactions.

3. THE CASE FOR REPLICATION WITH

RDMA NETWORKS

With the fast advancement of network technologies, conventional

log-shipping and active-active schemes are no longer the best fits.

In this section, we revisit the design trade-offs that conventional

schemes made and demonstrate why the next-generation networks

call for a new design of high availability protocol in Section 3.1.

We then provide some background on RDMA in Section 3.2.

3.1 Bottleneck Analysis

The replication schemes described in the previous section were

designed in a time that network communication was the obvious

bottleneck in a distributed main-memory data store by a large mar-

gin. Reducing the need for accessing the network was therefore a

common principle in designing efficient algorithms. Both classes

of techniques approach this design principle by exchanging high

network demand with more processing redundancy, each to a dif-

ferent degree. This idea is illustrated in Figure 4a. In log ship-

ping, the logs have to be replayed at each replica, which may not

be much cheaper than redoing the transaction itself for some work-

loads. Active-active techniques reduce the need for network com-

munication even further and thus improve performance when the

network is the bottleneck but impose even more redundancy for

computation.

In these networks, communication during replication is consid-

ered expensive mainly due to three factors. (1) Limited bandwidth

of these networks would be easily saturated and become the bottle-

neck. (2) The message processing overhead by the operating sys-

tem proved to be substantial [3], especially in the context of many

OLTP workloads which contain simple transactions that read and

modify only a few records. (3) High latency of network communi-

cation increases the transaction latency, contributing to contention

and therefore impacts throughput.

With the emergence of the next-generation of RDMA-enabled

networks, such as InfiniBand, these assumptions need to be re-

evaluated. (1) Network bandwidth has increased significantly, and

its increase rate does not seem to be slowing down [13]. For exam-

ple, a Mellanox ConnectX-4 EDR card offers 100× bandwidth of a

typical 1Gb/sec Ethernet found in many public cluster offerings (in-

cluding our own private cluster). (2) The RDMA feature open new

1639