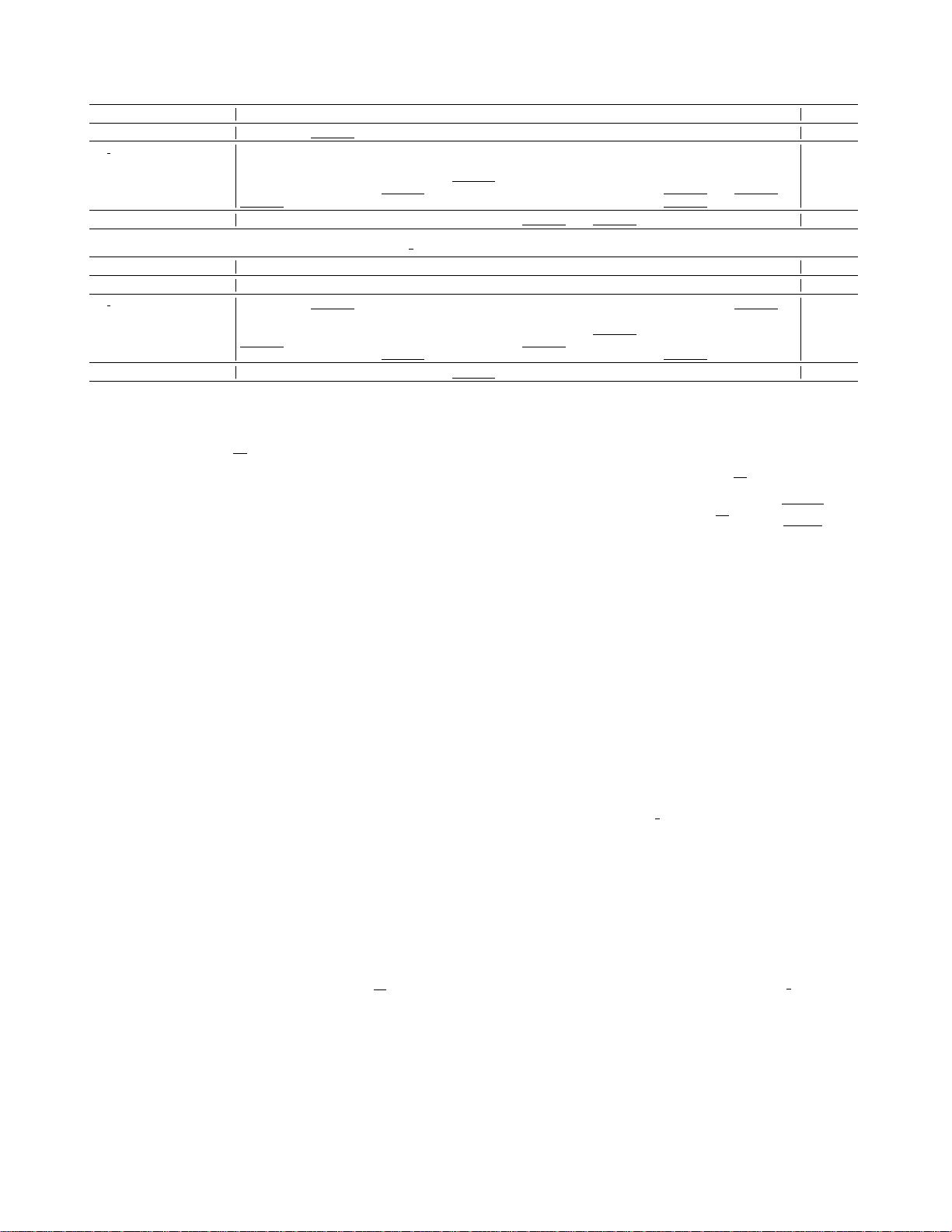

Table 1: Test ROC-AUC (%) of GIN (contextpred) on downstream molecular property prediction benchmarks. ('↑' and '↓' denote performance improvement and decrease compared to the Fine-Tuning baseline.)

| Methods | BBBP | Tox21 | ToxCast | SIDER | ClinTox | MUV | HIV | BACE | Average |
|---|---|---|---|---|---|---|---|---|---|
| Fine-Tuning (baseline) | 68.0±2.0 | 75.7±0.7 | 63.9±0.6 | 60.9±0.6 | 65.9±3.8 | 75.8±1.7 | 77.3±1.0 | 79.6±1.2 | 70.85 |
| L2-SP [Xuhong et al., 2018] | 68.2±0.7 | 73.6±0.8 | 62.4±0.3 | 61.1±0.7 | 68.1±3.7 | 76.7±0.9 | 75.7±1.5 | 82.2±2.4 | 70.25 |
| DELTA [Li et al., 2018b] | 67.8±0.8 | 75.2±0.5 | 63.3±0.5 | 62.2±0.4 | 73.4±3.0 | 80.2±1.1 | 77.5±0.9 | 81.8±1.1 | 72.68 |
| Feature (DELTA w/o ATT) | 61.4±0.8 | 71.1±0.1 | 61.5±0.2 | 62.4±0.3 | 64.0±3.4 | 78.4±1.1 | 74.0±0.5 | 76.3±1.1 | 68.64 |
| BSS [Chen et al., 2019] | 68.1±1.4 | 75.9±0.8 | 63.9±0.4 | 60.9±0.8 | 70.9±5.1 | 78.0±2.0 | 77.6±0.8 | 82.4±1.8 | 72.21 |
| StochNorm [Kou et al., 2020] | 69.3±1.6 | 74.9±0.6 | 63.4±0.5 | 61.0±1.1 | 65.5±4.2 | 76.0±1.6 | 77.6±0.8 | 80.5±2.7 | 71.03 |
| GTOT-Tuning (Ours) | 70.0±2.3 ↑2.0 | 75.6±0.7 ↓0.1 | 64.0±0.3 ↑0.1 | 63.5±0.6 ↑2.6 | 72.0±5.4 ↑6.1 | 80.0±1.8 ↑4.2 | 78.2±0.7 ↑0.9 | 83.4±1.9 ↑3.8 | 73.34 ↑2.49 |
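The arrows in the GTOT-Tuning row are per-dataset differences between its mean test ROC-AUC and the Fine-Tuning baseline mean. The short Python sketch below recomputes them from the means reported in Table 1; the list and function names are illustrative only, and a positive value corresponds to '↑', a negative one to '↓'.

```python
# Mean test ROC-AUC (%) per dataset, copied from Table 1 (GIN (contextpred)).
DATASETS = ["BBBP", "Tox21", "ToxCast", "SIDER", "ClinTox", "MUV", "HIV", "BACE"]
BASELINE = [68.0, 75.7, 63.9, 60.9, 65.9, 75.8, 77.3, 79.6]  # Fine-Tuning (baseline)
GTOT = [70.0, 75.6, 64.0, 63.5, 72.0, 80.0, 78.2, 83.4]      # GTOT-Tuning (Ours)


def improvements(method, baseline, ndigits=1):
    """Signed per-dataset difference (method mean - baseline mean) in ROC-AUC points."""
    # Rounding removes floating-point noise such as 63.5 - 60.9 = 2.6000000000000014.
    return [round(m - b, ndigits) for m, b in zip(method, baseline)]


for name, delta in zip(DATASETS, improvements(GTOT, BASELINE)):
    print(f"{name}: {delta:+.1f}")  # '+' plays the role of an up arrow, '-' of a down arrow
```

These differences compare means only; several of them (e.g. Tox21 and ToxCast) are smaller than the reported standard deviations.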

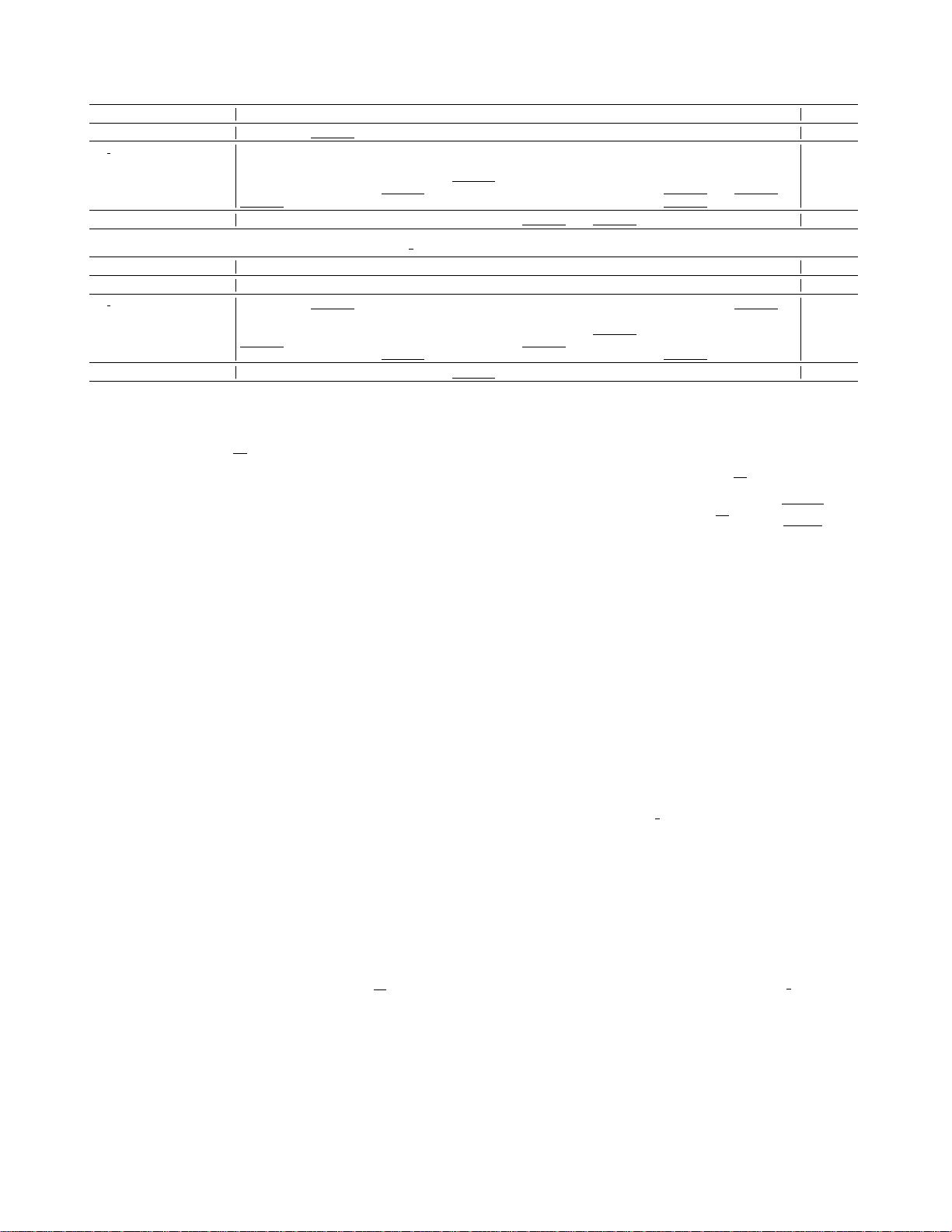

Table 2: Test ROC-AUC (%) of GIN (supervised contextpred) on downstream molecular property prediction benchmarks.

| Methods | BBBP | Tox21 | ToxCast | SIDER | ClinTox | MUV | HIV | BACE | Average |
|---|---|---|---|---|---|---|---|---|---|
| Fine-Tuning (baseline) | 68.7±1.3 | 78.1±0.6 | 65.7±0.6 | 62.7±0.8 | 72.6±1.5 | 81.3±2.1 | 79.9±0.7 | 84.5±0.7 | 74.19 |
| L2-SP [Xuhong et al., 2018] | 68.5±1.0 | 78.7±0.3 | 65.7±0.4 | 63.8±0.3 | 71.8±1.6 | 85.0±1.1 | 77.5±0.9 | 84.5±0.9 | 74.44 |
| DELTA [Li et al., 2018b] | 68.4±1.2 | 77.9±0.2 | 65.6±0.2 | 62.9±0.8 | 72.7±1.9 | 85.9±1.3 | 75.6±0.4 | 79.0±1.1 | 73.50 |
| Feature (DELTA w/o ATT) | 68.6±0.9 | 77.9±0.2 | 65.7±0.2 | 63.0±0.6 | 72.7±1.5 | 85.6±1.0 | 75.7±0.3 | 78.4±0.7 | 73.45 |
| BSS [Chen et al., 2019] | 70.0±1.0 | 78.3±0.4 | 65.8±0.3 | 62.8±0.6 | 73.7±1.3 | 78.6±2.1 | 79.9±1.4 | 84.2±1.0 | 74.16 |
| StochNorm [Kou et al., 2020] | 69.8±0.9 | 78.4±0.3 | 66.1±0.4 | 62.2±0.7 | 73.2±2.1 | 82.5±2.6 | 80.2±0.7 | 84.2±2.3 | 74.58 |
| GTOT-Tuning (Ours) | 71.5±0.8 ↑2.8 | 78.6±0.3 ↑0.5 | 66.6±0.4 ↑0.9 | 63.3±0.6 ↑0.6 | 77.9±3.2 ↑5.3 | 85.0±0.9 ↑3.7 | 81.1±0.5 ↑1.2 | 85.3±1.5 ↑0.8 | 76.16 ↑1.97 |

Tuning is to minimize the following loss:

$$\mathcal{L} = \frac{1}{N} \sum_{i=1}^{N} l(f, G_i, y_i) \tag{6}$$

where $l(f, G_i, y_i) := \phi(f(G_i), y_i) + \lambda \mathcal{L}_{mw}(A^{(i)}, q^{(i)}, q^{(i)})$, $f$ denotes a given GNN backbone, $\lambda$ is a hyperparameter that balances the regularization term against the main loss, and $\phi(\cdot)$ is the cross-entropy loss.
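To make Eq. (6) concrete, the following Python sketch evaluates the objective over a batch of graphs. It assumes one binary label per graph (a simplification, since some MoleculeNet datasets are multi-task), uses binary cross-entropy on a raw logit as $\phi$, and takes the per-graph masked Wasserstein terms $\mathcal{L}_{mw}$ as precomputed inputs. The function names are hypothetical.

```python
import math


def binary_cross_entropy_with_logit(logit, y):
    """phi: cross-entropy for a single binary label y in {0, 1}, given a raw score (logit)."""
    # Numerically stable form of -[y*log(sigmoid(z)) + (1 - y)*log(1 - sigmoid(z))].
    return max(logit, 0.0) - logit * y + math.log1p(math.exp(-abs(logit)))


def gtot_objective(logits, labels, mw_values, lam):
    """Eq. (6): (1/N) * sum_i [phi(f(G_i), y_i) + lam * L_mw for graph i].

    logits[i] stands for the backbone output f(G_i); mw_values[i] is ASSUMED to be
    the already-computed masked Wasserstein term for graph i (not computed here).
    """
    n = len(logits)
    if n == 0 or not (n == len(labels) == len(mw_values)):
        raise ValueError("logits, labels and mw_values must be non-empty and equally long")
    total = 0.0
    for z, y, mw in zip(logits, labels, mw_values):
        total += binary_cross_entropy_with_logit(z, y) + lam * mw
    return total / n


# Two toy graphs with zero logits: each cross-entropy term equals log(2).
print(gtot_objective([0.0, 0.0], [1, 0], [0.5, 1.5], lam=0.1))
```

Because $\lambda$ multiplies the regularizer inside the per-graph loss $l$, averaging the per-graph losses is the same as adding $\lambda$ times the mean regularizer to the mean cross-entropy.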

6 Theoretical Analysis

We provide a theoretical analysis of GTOT-Tuning.

Related to Graph Laplacian. Given a graph signal $s \in \mathbb{R}^{n \times 1}$, if one defines $C_{ij} := (s_i - s_j)^2$, then $\mathcal{L}_{mw} = \min_{P \in U(A,a,b)} \sum_{ij} P_{ij} A_{ij} (s_i - s_j)^2$. As is well known, for a symmetric adjacency matrix $A$, $2 s^\top L_a s = \sum_{ij} A_{ij} (s_i - s_j)^2$, where $L_a = D - A$ is the Laplacian matrix and $D$ is the diagonal degree matrix. Therefore, our distance can be viewed as a smoothness measure of the graph signal in which the edges $A_{ij}$ are additionally re-weighted by an optimized transport plan $P$.
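The Laplacian identity above can be checked numerically. The NumPy sketch below builds a small undirected graph (an arbitrary example), evaluates $2 s^\top L_a s$ and $\sum_{ij} A_{ij}(s_i - s_j)^2$, and exposes an optional weight matrix `P` to mimic the re-weighting inside $\mathcal{L}_{mw}$. Here `P` is any non-negative matrix supplied by the caller; the sketch does not solve for the optimal plan over $U(A,a,b)$.

```python
import numpy as np


def dirichlet_sum(A, s, P=None):
    """sum_ij W_ij * (s_i - s_j)^2 with W = A, or W = P * A (elementwise) if P is given."""
    diff2 = (s[:, None] - s[None, :]) ** 2  # C_ij = (s_i - s_j)^2 via broadcasting
    W = A if P is None else P * A           # P re-weights only existing edges of A
    return float(np.sum(W * diff2))


# Small undirected graph with edges (0,1), (0,2), (1,2), (2,3).
A = np.array([[0, 1, 1, 0],
              [1, 0, 1, 0],
              [1, 1, 0, 1],
              [0, 0, 1, 0]], dtype=float)
s = np.array([0.5, -1.0, 2.0, 0.0])  # one scalar signal value per node

L_a = np.diag(A.sum(axis=1)) - A     # L_a = D - A
lhs = 2.0 * (s @ L_a @ s)
rhs = dirichlet_sum(A, s)
print(lhs, rhs)
```

With `P` set to all ones the weighted sum reduces to the plain Laplacian quadratic form, which is the sense in which $\mathcal{L}_{mw}$ generalizes graph-signal smoothness.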

Algorithm Stability and Generalization Bound. We analyze the generalization bound of GTOT-Tuning to identify the key factors that affect its generalization ability. We first establish its uniform stability.

Lemma 1 (Uniform stability for GTOT-Tuning). Let $S := \{z_1 = (G_1, y_1), z_2 = (G_2, y_2), \cdots, z_{i-1} = (G_{i-1}, y_{i-1}), z_i = (G_i, y_i), z_{i+1} = (G_{i+1}, y_{i+1}), \cdots, z_N = (G_N, y_N)\}$ be a training set with $N$ graphs, and let $S^i := \{G_1, G_2, \ldots, G_{i-1}, G'_i, G_{i+1}, \ldots, G_N\}$ be the training set in which graph $i$ has been replaced. Assume that the number of vertices satisfies $|V_{G_j}| \le B$ for all $j$ and that $0 \le \phi(f_S, z) \le M$. Then

$$|l(f_S, z) - l(f_{S^i}, z)| \le 2M + \lambda\sqrt{B}, \tag{7}$$

where $\lambda$ is the hyperparameter used in Eq. (6).
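The right-hand side of Eq. (7) is easy to evaluate. The Python sketch below uses a hypothetical helper name and illustrative constants that are not taken from the paper; it shows that the regularization part of the stability constant grows with $\sqrt{B}$, so quadrupling the vertex limit doubles it.

```python
import math


def stability_bound(M, lam, B):
    """Right-hand side of Eq. (7): beta = 2*M + lam * sqrt(B)."""
    if M < 0 or lam < 0 or B < 0:
        raise ValueError("M, lam and B must be non-negative")
    return 2.0 * M + lam * math.sqrt(B)


# Illustrative values only: M = 1 (bound on the task loss), lam = 0.1.
for B in (25, 100, 400):
    print(B, stability_bound(1.0, 0.1, B))
```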

Based on Lemma 1 and the results of [Bousquet and Elisseeff, 2002], the generalization error of GTOT-Tuning is bounded as follows.

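Proposition 2. Assume that a GNN with GTOT regularization satisfies $0 \le l(f_S, z) \le Q$. For any $\delta \in (0, 1)$, the following bound holds with probability at least $1 - \delta$ over the random draw of the sample $S$: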

$$R(f_S) \le R_m(f_S) + 4M + 2\lambda\sqrt{B} + \left(8NM + 4N\lambda\sqrt{B} + Q\right)\sqrt{\frac{\ln(1/\delta)}{2N}} \tag{8}$$

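where $R(f_S)$ denotes the generalization error and $R_m(f_S)$ denotes the empirical error. The proof is given in the Appendix. This result shows that the generalization bound of a GNN with the GTOT regularizer is affected by the maximum number of vertices $B$ in the training dataset: the bound grows with $\sqrt{B}$ through the $\lambda\sqrt{B}$ terms.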
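Eq. (8) has the form of the stability bound of [Bousquet and Elisseeff, 2002] with the uniform stability constant $\beta = 2M + \lambda\sqrt{B}$ from Eq. (7) plugged in, since $2\beta = 4M + 2\lambda\sqrt{B}$ and $4N\beta = 8NM + 4N\lambda\sqrt{B}$. The Python sketch below evaluates the right-hand side for given constants; all inputs are assumed to be known, and the function name is hypothetical.

```python
import math


def gtot_generalization_bound(emp_risk, M, lam, B, Q, N, delta):
    """Right-hand side of Eq. (8), written in terms of beta from Eq. (7)."""
    if not 0.0 < delta < 1.0:
        raise ValueError("delta must lie in (0, 1)")
    if N <= 0:
        raise ValueError("N must be positive")
    beta = 2.0 * M + lam * math.sqrt(B)  # uniform stability constant, Eq. (7)
    slack = (4.0 * N * beta + Q) * math.sqrt(math.log(1.0 / delta) / (2.0 * N))
    return emp_risk + 2.0 * beta + slack


# Illustrative constants only (not from the paper).
print(gtot_generalization_bound(0.2, 1.0, 0.1, 25, 5.0, 1000, 0.05))
print(gtot_generalization_bound(0.2, 1.0, 0.1, 100, 5.0, 1000, 0.05))
```

For fixed $N$ and $\delta$ the bound increases monotonically with $B$. Because $\beta$ in Eq. (7) does not depend on $N$, the last term scales as $\sqrt{N}$ for fixed $\delta$.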

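7 Experiments

We conduct experiments on graph classification tasks to evaluate our method.

7.1 Comparison of Different Fine-Tuning Strategies

Settings. We reuse two pre-trained models released by [Hu et al., 2020] as backbones: GIN (contextpred) [Xu et al., 2018], which is pre-trained only with the self-supervised Context Prediction task, and GIN (supervised contextpred), an architecture pre-trained with a multi-task strategy that combines Context Prediction with graph-level supervision. Both networks are pre-trained on a chemistry dataset containing 2 million molecules. Eight binary classification datasets from MoleculeNet [Wu et al., 2018] are used to evaluate the fine-tuning strategies, and each dataset is split with the scaffold split scheme. More details can be found in the Appendix.

Baselines. Since we did not find related work on fine-tuning GNNs, we extend several typical baselines designed for convolutional networks to GNNs, including L2-SP [Xuhong et al., 2018], DELTA [Li et al., 2018b], BSS [Chen et al., 2019], and StochNorm [Kou et al., 2020].

Results. The results of the different fine-tuning strategies are shown in Tables 1 and 2. Observation (1): GTOT-Tuning achieves competitive performance across datasets and outperforms the other methods on average. Observation (2): weight regularization (L2-SP) fails to improve performance when the backbone is pre-trained purely with a self-supervised task (Table 1), which suggests that L2-SP may require the pre-training task to be similar to the downstream task. Fortunately, our method consistently improves the average performance of both supervised and self-supervised pre-trained models. Observation (3): Euclidean distance regularization (Feature (DELTA w/o ATT)) performs worse than vanilla fine-tuning, indicating that directly regularizing node representations may lead to negative transfer.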
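The scaffold split used in the settings groups molecules by their molecular scaffold so that test molecules have scaffolds not seen during training. Below is a minimal Python sketch of one common deterministic variant (largest scaffold groups go to training first, then validation, then test, with 80/10/10 fractions by default). The function name is hypothetical, the sketch assumes each molecule's scaffold key (e.g. a Bemis-Murcko scaffold SMILES) is precomputed, and it is not necessarily the exact splitter used in these experiments.

```python
from collections import defaultdict


def scaffold_split(scaffolds, frac_train=0.8, frac_valid=0.1):
    """Assign whole scaffold groups to train/valid/test; scaffolds[i] is molecule i's key."""
    groups = defaultdict(list)
    for idx, key in enumerate(scaffolds):
        groups[key].append(idx)
    # Largest groups first; ties broken by each group's first index (descending)
    # so the ordering is deterministic.
    ordered = sorted(groups.values(), key=lambda g: (len(g), g[0]), reverse=True)

    n = len(scaffolds)
    train_cut = frac_train * n
    valid_cut = (frac_train + frac_valid) * n
    train, valid, test = [], [], []
    for group in ordered:
        if len(train) + len(group) > train_cut:
            if len(train) + len(valid) + len(group) > valid_cut:
                test.extend(group)   # neither train nor valid has room left
            else:
                valid.extend(group)
        else:
            train.extend(group)
    return train, valid, test


# Toy example: 10 molecules sharing four scaffolds.
train_idx, valid_idx, test_idx = scaffold_split(["a"] * 5 + ["b"] * 3 + ["c", "d"])
print(train_idx, valid_idx, test_idx)
```

Because whole groups are assigned at once, no scaffold appears in more than one split, which makes this evaluation harder than a random split.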
