6846 IEEE TRANSACTIONS ON GEOSCIENCE AND REMOTE SENSING, VOL. 52, NO. 11, NOVEMBER 2014

be imposed to be zero for those x

j

that are not among them;

second, the rows of the weight matrix should be summed to

be one. By the two constraints, the reconstruction errors are

invariant to rotations, rescalings, and translations of that data

pixel and its neighboring pixels. From (1), the invariance to

rotations and rescalings follows immediately; the invariance to

translations is enforced by the sum-to-one constraint on the

rows of the weight matrix. The optimal ω

(i)

j

to the cost error

then becomes a constrained optimization problem. Detailed

information on the solution is included in Appendix I.

Suppose that the data set also lies on or near a smooth

nonlinear manifold of dimensionality d(d L). There would

then be a proper linear mapping, which employs translation, ro-

tation, and rescaling, to map the high-dimensional coordinates

of each neighborhood into the global internal coordinates on

the manifold [29]. These corresponding reconstruction weights

should directly reflect the intrinsic geometric properties of the

data set, so that it would not be variant to these transforma-

tions. In other words, each locally linear patch from the high-

dimensional feature space can be placed in a low-dimensional

embedding space, where the same weight ω

ij

that reconstructs

the ith pixel in the L dimensions should also reconstruct its

embedded manifold coordinates in d dimensions.

The key to the low-dimensional embedding is then a neigh-

borhood preserving mapping. Therefore, each pixel x

i

is

mapped into a low-dimensional vector z

i

to minimize the

embedding cost function:

ε(z)=

N

i=1

z

i

−

x

j

∈H

i

ω

ij

z

j

2

. (3)

The aforementioned cost function is much like the previous

one, which is also computed from the locally linear reconstruc-

tion errors. The weight ω

ij

is fixed this time, and the embedding

cost in the aforementioned equation constitutes a minimization

problem. The solution actually becomes a sparse eigenvec-

tor problem. The final z

i

constitutes the bottom d nonzero

eigenvectors, which constitute an ordered set of orthogonal

coordinates centered on the origin. Further details are listed in

Appendix II.

In the aforementioned way, each pixel’s reconstruction

weight is retrieved by only its local neighborhood pixels. In

other words, each patch is independent of the other patches

in the mapping into the low-dimensional feature space. By the

weight matrix, however, a global operation coupling all of the

pixels in the connected components of the graph of the matrix

is constructed, and an N

∗

N eigensolver is computed to get the

embedding coordinates. In addition, the bottom eigenvectors

from (3) are figured out one at a time. In this way, all of the local

patch information is exploited to discover the global structure,

as all of the pixels’ patches are overlapping.

We use the reconstruction error in (3) as the separation rule,

with adaptive thresholding [2], which is quick and increases the

flexibility, so as not to omit the probable anomalies. Further-

more, the anomalies have a low probability of occurrence in the

image, and the percentage of target pixels versus background

is under 1%. In most cases, we can safely use an empirical

probability of 0.05% for separating the most probable anomaly

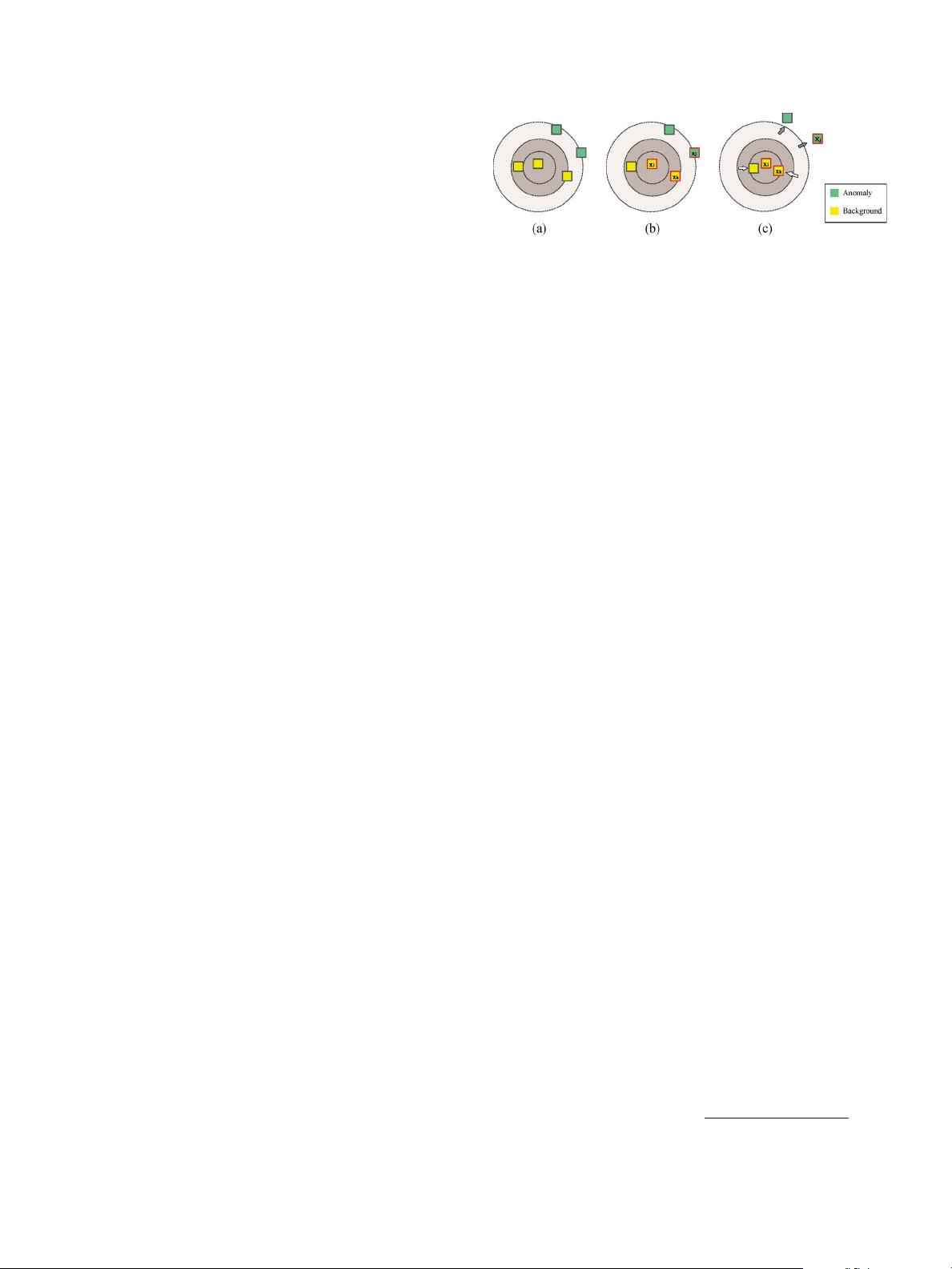

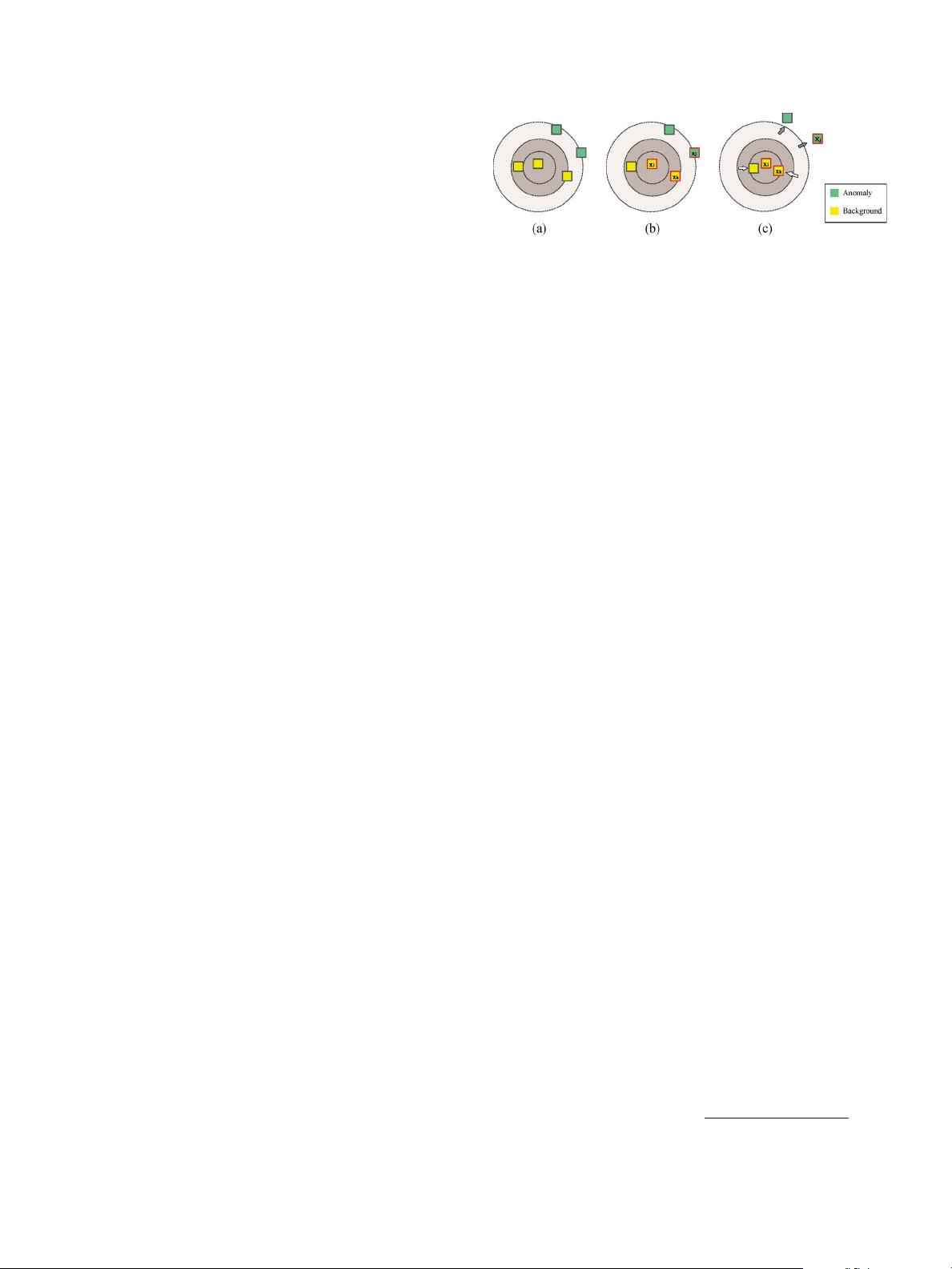

Fig. 2. Schematic illustration of the proposed method. The purpose is to

enlarge the separability between anomalies and background pixels, while the

distance of background pixels is reduced. (A) Original sample. (B) Triplewise

discriminative information labeling. (C) Projecting by anomaly metric.

pixels. The adaptive thresholding method then calculates the

threshold for segmenting anomalies by setting an accumulat-

ing distribution probability percentage of 99.95% from the

histogram of the detection results. The determined probable

anomaly areas are not used directly as the detection results but

as the discriminative label information.

We define two sets named A (anomaly) and B (background)

to denote these labels. For each anomaly pixel, two of its

surrounding nearest neighbor background pixels are picked up

to construct the two data sets. The two background pixels refer

to those among the observed anomaly pixel’s neighbors but are

also the ones with low reconstruction errors to be classified

as background pixels. Therefore, the number of B is twice

of that of A(N

B

=2N

A

). We can then define the dissimilar

data set D and the similar data set S from these two sets:

D = {(x

i

, x

j

)}, x

i

, x

j

=1,...,N

A

, where x

i

belongs to B

and x

j

belongs to A, with x

i

being the nearest neighbor of

x

j

in B; S = {(x

i

, x

k

)}, x

i

, x

k

=1,...,N

B

, where x

i

and

x

k

belong to B. Furthermore, a guard interval is set between

the labeled anomaly pixels and the labeled background pixels,

excluding the labeled background pixels with the largest 10%

reconstruction errors, so as to make S and D immune to weak

anomalies on the boundary of anomaly and background.

III. A

NOMALY METRIC LEARNING

AND

ANOMALY DETECTION

The main idea behind our method can be represented as

follows. From Fig. 2(a), the anomaly pixels appear to be not

particularly differentiable from the background ones. Then, in

Fig. 2(b), the pixels are labeled as probable anomaly and prob-

able background by means of the manifold reconstruction error.

Finally, with the aim of improving the separability between

anomalies and backgrounds, all of the samples are projected

by a proper anomaly metric. The resulting distribution is shown

in Fig. 2(c), revealing that the background samples are more

compact, while the distances between the backgrounds and

anomalies are enlarged.

The key then becomes the construction of a robust anomaly

metric from the label information. A Mahalanobis-like distance

metric is employed. Its expression is

dis

M

(x

i

, x

j

)=x

i

−x

j

M

=

(x

i

−x

j

)

T

M(x

i

−x

j

). (4)

In order to figure out M, an optimal function should be

defined. Some distance constraints are also introduced. In de-

tail, the optimal function comprises two parts: The first part