Appears in the Proceedings of the 5th ACM Symposium on Cloud Computing (SoCC ’14)

2 Methodology

In this section, we describe our methodology, specifically our choice of target systems, the base issue repositories, our issue classifications, and the resulting database.

• Target Systems: To perform an interesting cloud bug study, we select six popular and important cloud systems that represent a diverse set of system architectures: Hadoop MapReduce [3] representing distributed computing frameworks, Hadoop Distributed File System (HDFS) [6] representing scalable storage systems, HBase [4] and Cassandra [1] representing distributed key-value stores (also known as NoSQL systems), ZooKeeper [5] representing synchronization services, and finally Flume [2] representing streaming systems. These systems are referred to by different names (e.g., data-center operating systems, IaaS/SaaS). For simplicity, we refer to them as cloud systems.

• Issue Repositories: The development projects of our target systems are all hosted under Apache Software Foundation Projects [8], wherein each of them maintains a highly organized issue repository.¹ Each repository contains development and deployment issues submitted mostly by the developers or sometimes by a larger user community. The term “issue” is used here to represent both bugs and new features. For every issue, the repository stores many “raw” labels, among which we find the following useful: issue date, time to resolve (in days), bug priority level, patch availability, and number of developer responses. We download raw labels automatically using the provided web API. Regarding the #responses label, each issue contains developer responses that provide a wealth of information for understanding the issue; a complex issue or hard-to-find bug typically has a long discussion. Regarding the bug priority label, there are five priorities: trivial, minor, major, critical, and blocker. For simplicity, we label the first two as “minor” and the last three as “major”. Although we analyze all issues in our work, we only focus on major issues in this paper.
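The two-level priority scheme above is a fixed mapping from the five raw priorities. The Python sketch below illustrates it; `Severity`, `collapse_priority`, `major_issues`, and the dict-shaped issue records with a `priority` key are hypothetical names of our own, not part of the issue repositories' web API or of CBSDB.

```python
from enum import Enum


class Severity(Enum):
    MINOR = "minor"
    MAJOR = "major"


# The five raw priority values named in the text, folded into two levels:
# the first two become MINOR, the last three become MAJOR.
RAW_TO_SEVERITY = {
    "trivial": Severity.MINOR,
    "minor": Severity.MINOR,
    "major": Severity.MAJOR,
    "critical": Severity.MAJOR,
    "blocker": Severity.MAJOR,
}


def collapse_priority(raw: str) -> Severity:
    """Map one raw priority label (case-insensitive) to MINOR or MAJOR."""
    key = raw.strip().lower()
    if key not in RAW_TO_SEVERITY:
        raise ValueError(f"unknown priority label: {raw!r}")
    return RAW_TO_SEVERITY[key]


def major_issues(issues: list[dict]) -> list[dict]:
    """Keep only issues whose raw 'priority' collapses to MAJOR.

    Each issue is assumed (hypothetically) to be a dict holding the raw
    priority string under the key 'priority'.
    """
    return [i for i in issues if collapse_priority(i["priority"]) is Severity.MAJOR]


# Usage with made-up issue keys:
sample = [
    {"key": "X-1", "priority": "Blocker"},
    {"key": "X-2", "priority": "Trivial"},
    {"key": "X-3", "priority": "Critical"},
]
print([i["key"] for i in major_issues(sample)])  # ['X-1', 'X-3']
```

Raising on an unknown label, rather than silently defaulting to one level, keeps an unexpected repository value from quietly shifting issues between the minor and major groups.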

• Issue Classifications: To perform a meaningful study of

cloud issues, we introduce several issue classifications as dis-

played in Table

1. The first classification tha t we perform is

based on issue type (“miscellaneous” vs. “vital”). Vital issues

pertain to system development and deployment problems that

are marked with a major priority. Miscellaneou s issues rep-

resent non-vital issues (e.g., code mainten a nce, refactoring,

unit tests, documen ta tion). Real bugs that are easy to fix (e.g.,

few line fix) tend to be labeled as a minor issue and hence are

also marked as miscellaneous by us. We had to manually add

our own issue-type classification because the major/minor

1

Hadoop MapReduce in particular has two repositories (Hadoop and

MapReduce). The first one contains mostly development infrastructure (e.g.,

UI, library) while the second one contains system issues. We use the latter.

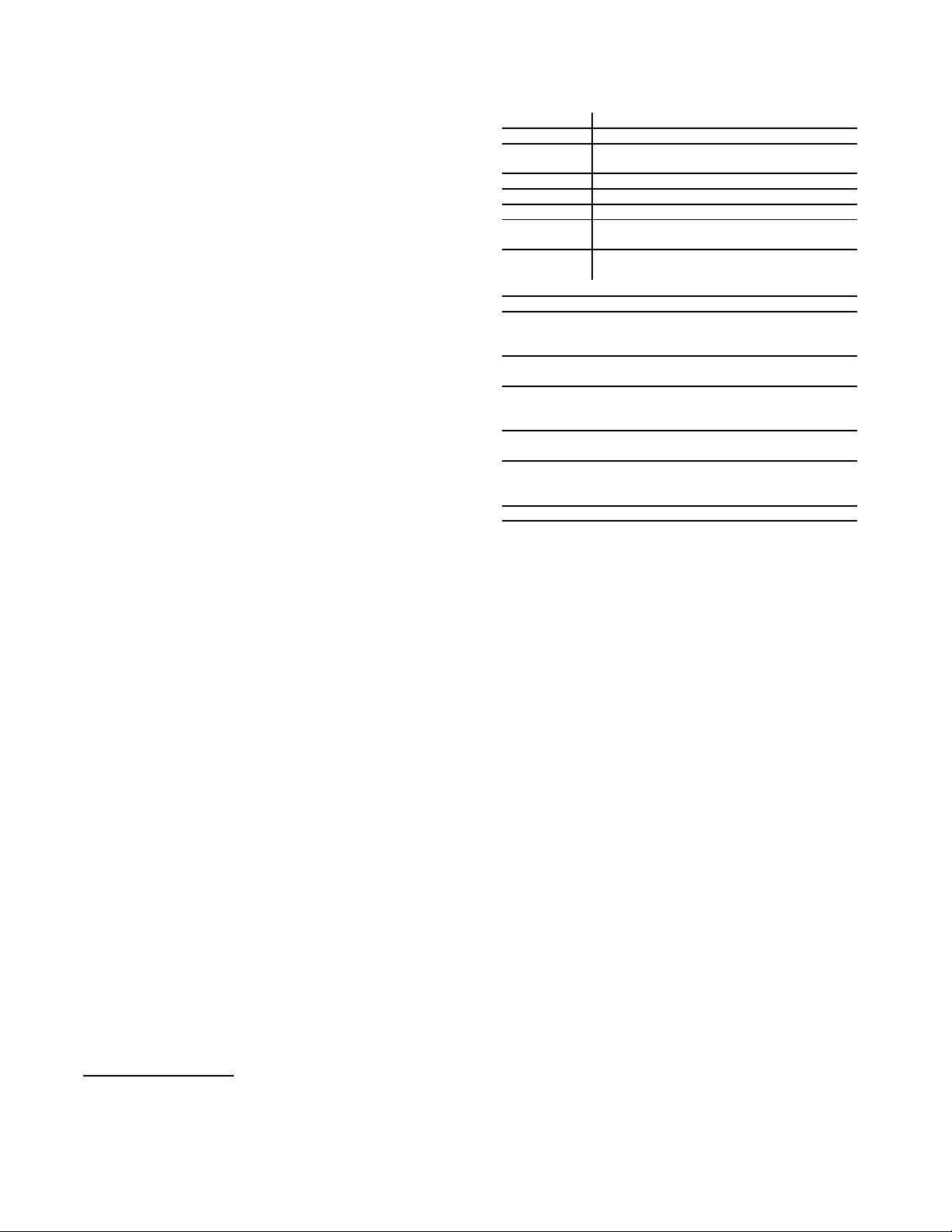

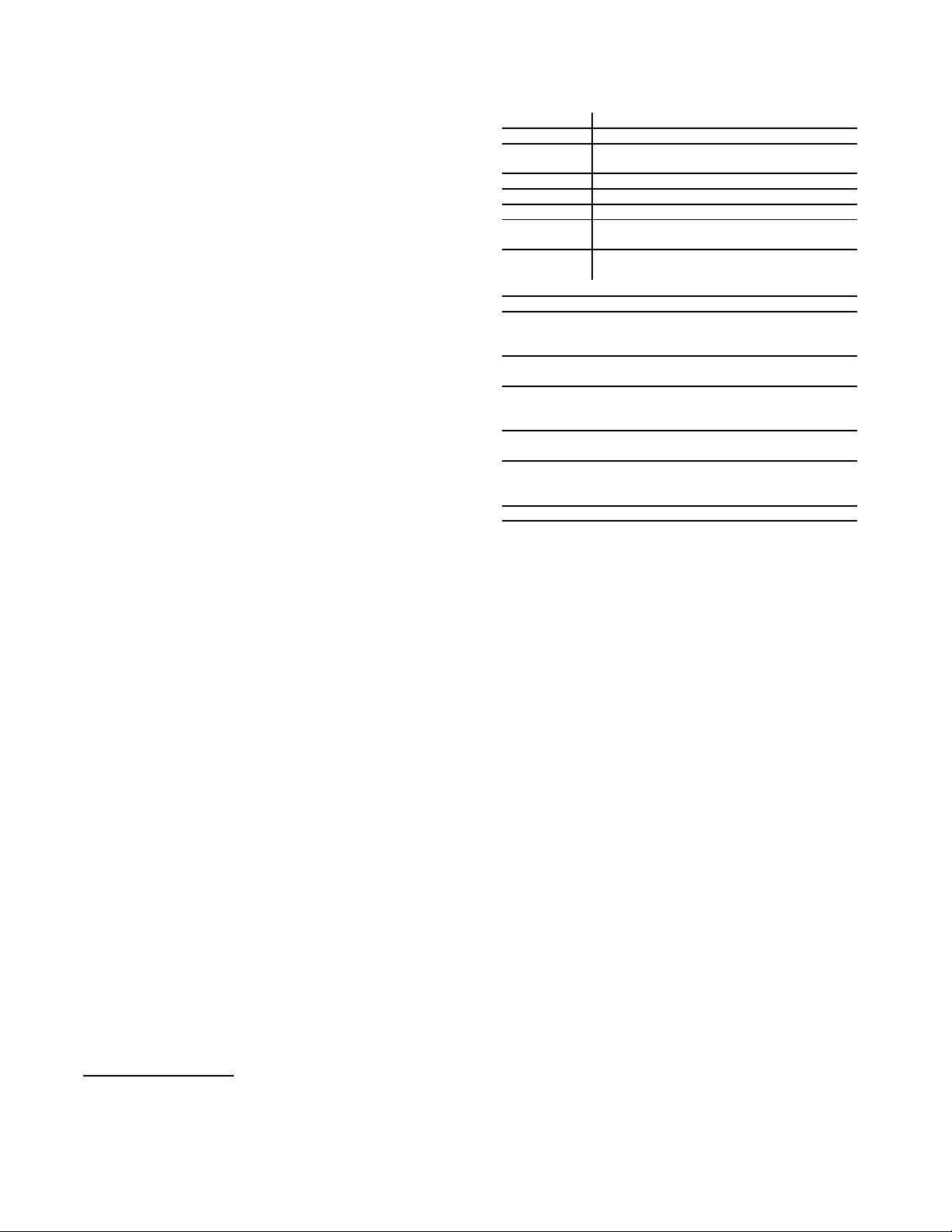

Classification

Labels

Issue Type Vital, miscellaneous.

Aspect Reliability, performance, availability, security,

consistency, scalability, topology, QoS.

Bug scope Single machine, multiple machines, entire cluster.

Hardware Core/processor, disk, memory, network, node.

HW Failure Corrupt, limp, stop.

Software Logic, error handling, optimization, config, race,

hang, space, load.

Implication Failed operation, performance, component down-

time, data loss, data staleness, data corruption.

Per-component Labels

Cassandra: Anti-entropy, boot, client, commit log, compaction,

cross system, get, gossiper, hinted handoff, IO, memtable, migra-

tion, mutate, partitioner, snitch, sstable, streaming, tombstone.

Flume: Channel, collector, config provider, cross system, mas-

ter/supervisor, sink, source.

HBase: Boot, client, commit log, compaction, coprocessor, cross

system, fsck, IPC, master, memstore flush, namespace, read, region

splitting, log splitting, region server, snapshot, write.

HDFS: Boot, client, datanode, fsck, HA, journaling, namenode,

gateway, read, replication, ipc, snapshot, write.

MapReduce: AM, client, commit, history server, ipc, job tracker,

log, map, NM, reduce, RM, security, shuffle, scheduler, speculative

execution, task tracker.

Zookeeper: Atomic broadcast, client, leader election, snapshot.

Table 1: Issue Classifications and Component L ab el s.

raw labels do not suffice; many miscellaneou s issues are also

marked as “major” by the developers.
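The generic rows of Table 1 form closed label vocabularies, so one plausible use is to reject mistyped labels before any analysis. A minimal sketch, assuming lower-cased labels and our own hypothetical classification keys (`aspect`, `hw_failure`, and so on); per-component labels are left out for brevity, and `invalid_labels` is an illustrative helper, not part of CBSDB.

```python
# Generic classifications from Table 1, stored as closed, lower-cased label sets.
# The snake_case keys are hypothetical naming of our own, not CBSDB's.
GENERIC_LABELS: dict[str, frozenset[str]] = {
    "issue_type": frozenset({"vital", "miscellaneous"}),
    "aspect": frozenset({"reliability", "performance", "availability", "security",
                         "consistency", "scalability", "topology", "qos"}),
    "bug_scope": frozenset({"single machine", "multiple machines", "entire cluster"}),
    "hardware": frozenset({"core/processor", "disk", "memory", "network", "node"}),
    "hw_failure": frozenset({"corrupt", "limp", "stop"}),
    "software": frozenset({"logic", "error handling", "optimization", "config",
                           "race", "hang", "space", "load"}),
    "implication": frozenset({"failed operation", "performance", "component downtime",
                              "data loss", "data staleness", "data corruption"}),
}


def invalid_labels(labels: dict[str, set[str]]) -> dict[str, set[str]]:
    """Return, per classification, any labels that Table 1 does not list.

    Matching is case-insensitive. A classification name that Table 1 does
    not define has all of its labels reported as invalid.
    """
    bad: dict[str, set[str]] = {}
    for classification, values in labels.items():
        allowed = GENERIC_LABELS.get(classification, frozenset())
        unknown = {v.lower() for v in values} - allowed
        if unknown:
            bad[classification] = unknown
    return bad


# Usage: "latency" is not an aspect label in Table 1, so it is flagged.
print(invalid_labels({"aspect": {"Performance", "latency"}, "hw_failure": {"stop"}}))
# {'aspect': {'latency'}}
```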

We carefully read each issue (the discussion, patches, etc.) to decide whether the issue is vital. If an issue is vital, we proceed with further classifications; otherwise, it is labeled as miscellaneous and skipped in our study. This paper only presents findings from vital issues.
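The vital/miscellaneous decision combines a raw label with a manual judgment. A minimal sketch of that rule, where the `is_system_problem` flag is a hypothetical stand-in for the outcome of reading the discussion and patches; `TriagedIssue`, `issue_type`, and `vital_only` are illustrative names only.

```python
from dataclasses import dataclass

# Raw priorities that the text folds into "major".
MAJOR_PRIORITIES = frozenset({"major", "critical", "blocker"})


@dataclass
class TriagedIssue:
    key: str
    raw_priority: str
    # Outcome of manually reading the discussion and patches: True if the
    # issue is a genuine development/deployment problem rather than
    # maintenance, refactoring, unit tests, or documentation.
    is_system_problem: bool


def issue_type(issue: TriagedIssue) -> str:
    """Return 'vital' only for system problems with a major priority."""
    is_major = issue.raw_priority.strip().lower() in MAJOR_PRIORITIES
    return "vital" if is_major and issue.is_system_problem else "miscellaneous"


def vital_only(issues: list[TriagedIssue]) -> list[TriagedIssue]:
    """Issues that proceed to further classification; the rest are skipped."""
    return [i for i in issues if issue_type(i) == "vital"]


issues = [
    TriagedIssue("X-1", "Critical", True),  # major system problem -> vital
    TriagedIssue("X-2", "Major", False),    # "major" refactoring -> miscellaneous
    TriagedIssue("X-3", "Minor", True),     # easy real bug labeled minor -> miscellaneous
]
print([i.key for i in vital_only(issues)])  # ['X-1']
```

The second and third samples mirror the two points made above: a developer-assigned “major” label alone does not make an issue vital, and an easy-to-fix real bug labeled minor still ends up as miscellaneous.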

For every vital issue, we introduce aspect labels (more in §3). If the issue involves hardware problems, then we add information about the hardware type and failure mode (§5). Next, we pinpoint the software bug types (§6). Finally, we add implication labels (§7). As a note, an issue can have multiple aspect, hardware, software, and implication labels. Interestingly, we find that a bug can simultaneously affect multiple machines or even the entire cluster. To capture this, we use bug scope labels (§4). In addition to generic classifications, we also add per-component labels to mark where the bugs live; this enables more interesting analysis (§8).
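Because one issue can carry several aspect, hardware, software, and implication labels, per-label tallies are not a partition of the issues. The hypothetical helper below, which assumes issues are stored as dicts mapping classification names to label lists (or a single label string), shows that the counts can add up to more than the number of issues.

```python
from collections import Counter


def label_counts(issues: list[dict], classification: str) -> Counter:
    """Count how many issues carry each label of one classification.

    An issue may carry several labels of the same classification, so the
    counts can sum to more than len(issues).
    """
    counts: Counter = Counter()
    for issue in issues:
        value = issue.get(classification, ())
        if isinstance(value, str):  # a single label stored as a plain string
            value = (value,)
        counts.update(set(value))   # each label counted at most once per issue
    return counts


# Hypothetical records, not taken from CBSDB.
issues = [
    {"aspect": ["reliability", "availability"], "implication": ["data loss"]},
    {"aspect": ["performance"], "implication": "component downtime"},
]
aspects = label_counts(issues, "aspect")
print(sum(aspects.values()), len(issues))  # 3 2
```

When reporting percentages from such tallies, dividing by the number of issues (rather than by the sum of counts) keeps each label's share interpretable even though the shares no longer add up to 100%.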

• Cloud Bug Study DB (CBSDB): The product of our classifications is stored in CBSDB, a set of raw text files, data mining scripts, and graph utilities [9], which enables us (and future CBSDB users) to perform both quantitative and qualitative analysis of cloud issues.
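This section does not specify CBSDB's file layout. Assuming, purely for illustration, one tab-separated line per issue (issue key, system, issue type), a data mining script of roughly this shape could support the quantitative analysis mentioned above, for example computing the share of miscellaneous issues per system. The format and `misc_fraction_by_system` are hypothetical, and taking an unweighted mean of per-system fractions is only one of several ways to average across systems.

```python
import csv
import io
import statistics
from collections import defaultdict


def misc_fraction_by_system(text: str) -> dict[str, float]:
    """Fraction of issues typed 'miscellaneous', per system.

    Assumes a hypothetical tab-separated layout, one issue per line:
    issue_key <TAB> system <TAB> issue_type
    Blank lines and lines starting with '#' are ignored.
    """
    totals: dict[str, int] = defaultdict(int)
    misc: dict[str, int] = defaultdict(int)
    for row in csv.reader(io.StringIO(text), delimiter="\t"):
        if not row or row[0].startswith("#"):
            continue
        _key, system, issue_type = row
        totals[system] += 1
        if issue_type == "miscellaneous":
            misc[system] += 1
    return {system: misc[system] / totals[system] for system in totals}


# Made-up sample lines, not CBSDB data.
sample = (
    "# key\tsystem\ttype\n"
    "X-1\tHDFS\tmiscellaneous\n"
    "X-2\tHDFS\tvital\n"
    "X-3\tHBase\tmiscellaneous\n"
    "X-4\tHBase\tmiscellaneous\n"
)
fractions = misc_fraction_by_system(sample)
print(fractions)                            # {'HDFS': 0.5, 'HBase': 1.0}
print(statistics.mean(fractions.values()))  # 0.75
```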

As shown in Figure 1a, CBSDB contains a total of 21,399 issues submitted over a period of three years (1/1/2011-1/1/2014), which we analyze one by one. The majority of the issues are miscellaneous issues (83% on average across
