AIGC for Various Data Modalities: A Survey • 7

quality, diversity and stability. Most of them are also applicable to the conditional setting, with some modifications. Tab. 2 summarizes and compares some representative methods.

VGAN [684] proposes a GAN for video generation with a spatio-temporal convolutional architecture. TGAN [571] uses two separate generators: a temporal generator that outputs a set of latent variables, and an image generator that takes in these latent variables to produce the frames of the video. It adopts a WGAN [24] model for more stable training. MoCoGAN [666] learns to decompose motion and content by utilizing both video and image discriminators, allowing it to generate videos with the same content and varying motion, and vice versa. DVD-GAN [129] decomposes the discriminator into spatial and temporal components to improve efficiency. MoCoGAN-HD [655] improves upon MoCoGAN by introducing a motion generator in the latent space together with a pre-trained and fixed image generator, which enables the generation of high-quality videos. VideoGPT [750] and Video VQ-VAE [688] both explore using VQ-VAE [677] to generate videos via discrete latent variables. DIGAN [788] designs a dynamics-aware approach that leverages an implicit neural representation-based GAN [613] with temporal dynamics. StyleGAN-V [614] also proposes a continuous-time video generator based on implicit neural representations, and is built upon StyleGAN2 [321]. Brooks et al. [62] aim to generate consistent and realistic long videos, and do so in two stages: a low-resolution generation stage followed by a super-resolution stage. Video Diffusion [263] explores video generation through a DM with a video-based 3D U-Net [128] architecture. Video LDM [53], PVDM [787] and VideoFusion [423] employ latent diffusion models (LDMs) for video generation, showing a strong ability to generate coherent and long videos at high resolutions. Several recent works [203, 245, 774] also aim to improve the generation of long videos.
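The VQ-VAE-based methods above (VideoGPT, Video VQ-VAE) obtain discrete latent variables by replacing each continuous encoder output with its nearest entry in a learned codebook; in VideoGPT, a transformer prior is then trained autoregressively over the resulting grid of indices. The following is a minimal pure-Python sketch of only that nearest-neighbour lookup, assuming latents and codebook entries are plain lists of floats; the function name `quantize` and the toy values are illustrative, and training details (straight-through gradients, codebook and commitment losses) are omitted.

```python
from math import inf


def quantize(latents, codebook):
    """Map each continuous latent vector to its nearest codebook entry.

    latents:  list of D-dimensional vectors (e.g. one per spatio-temporal position).
    codebook: list of K D-dimensional embedding vectors.
    Returns (indices, quantized): the discrete codes and the selected embeddings.
    """
    indices, quantized = [], []
    for z in latents:
        best_k, best_d = -1, inf
        for k, e in enumerate(codebook):
            # Squared Euclidean distance between latent z and codebook entry e.
            d = sum((zi - ei) ** 2 for zi, ei in zip(z, e))
            if d < best_d:
                best_k, best_d = k, d
        indices.append(best_k)
        quantized.append(list(codebook[best_k]))
    return indices, quantized


# Toy example: K = 3 codebook entries, 3 encoded positions of a hypothetical clip.
codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, 1.0]]
latents = [[0.9, 1.2], [-0.8, 0.7], [0.1, -0.2]]
indices, _ = quantize(latents, codebook)
print(indices)  # [1, 2, 0]
```

A generative prior then only has to model sequences of integers in [0, K), which is what makes these discrete-latent approaches amenable to autoregressive modeling.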

4.2 Conditional Video Generation

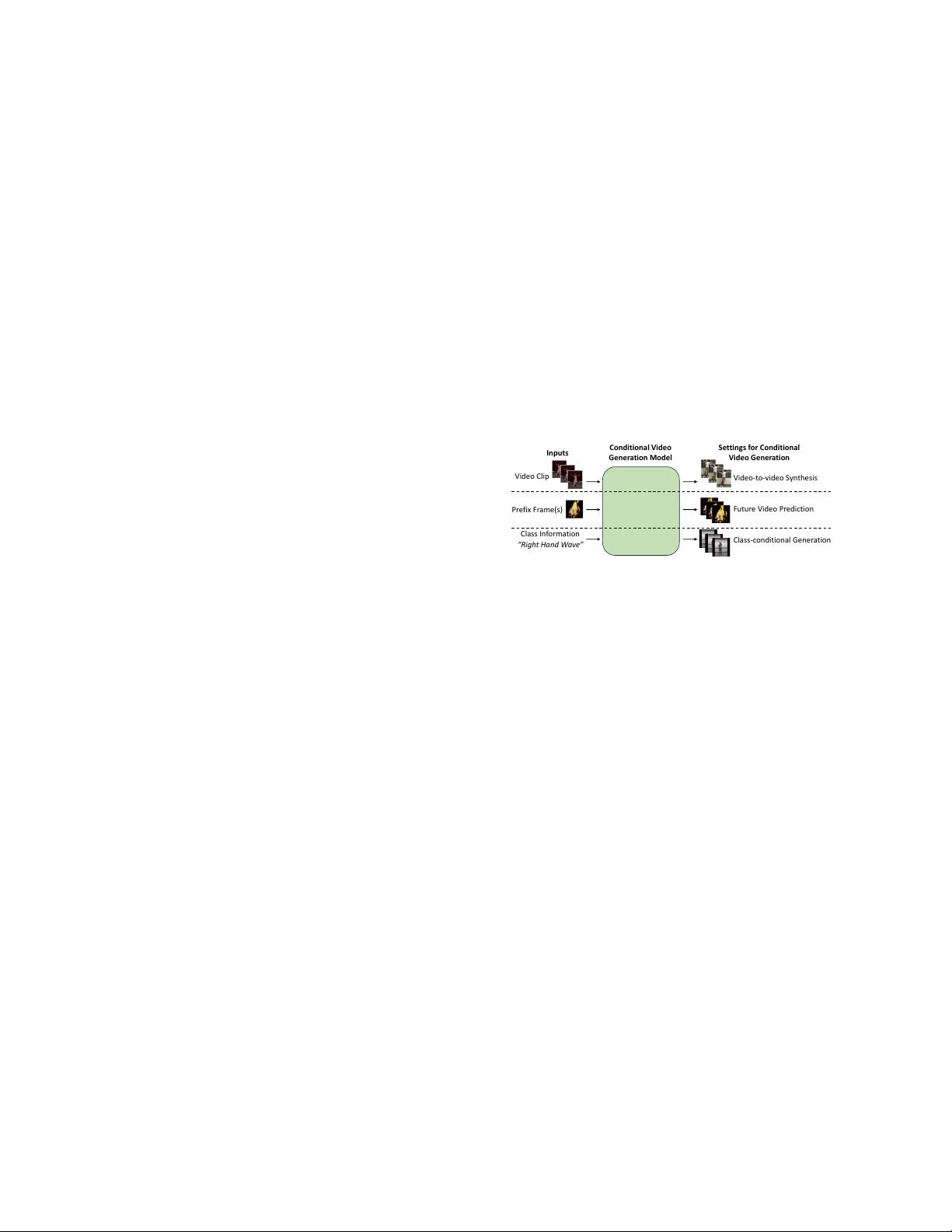

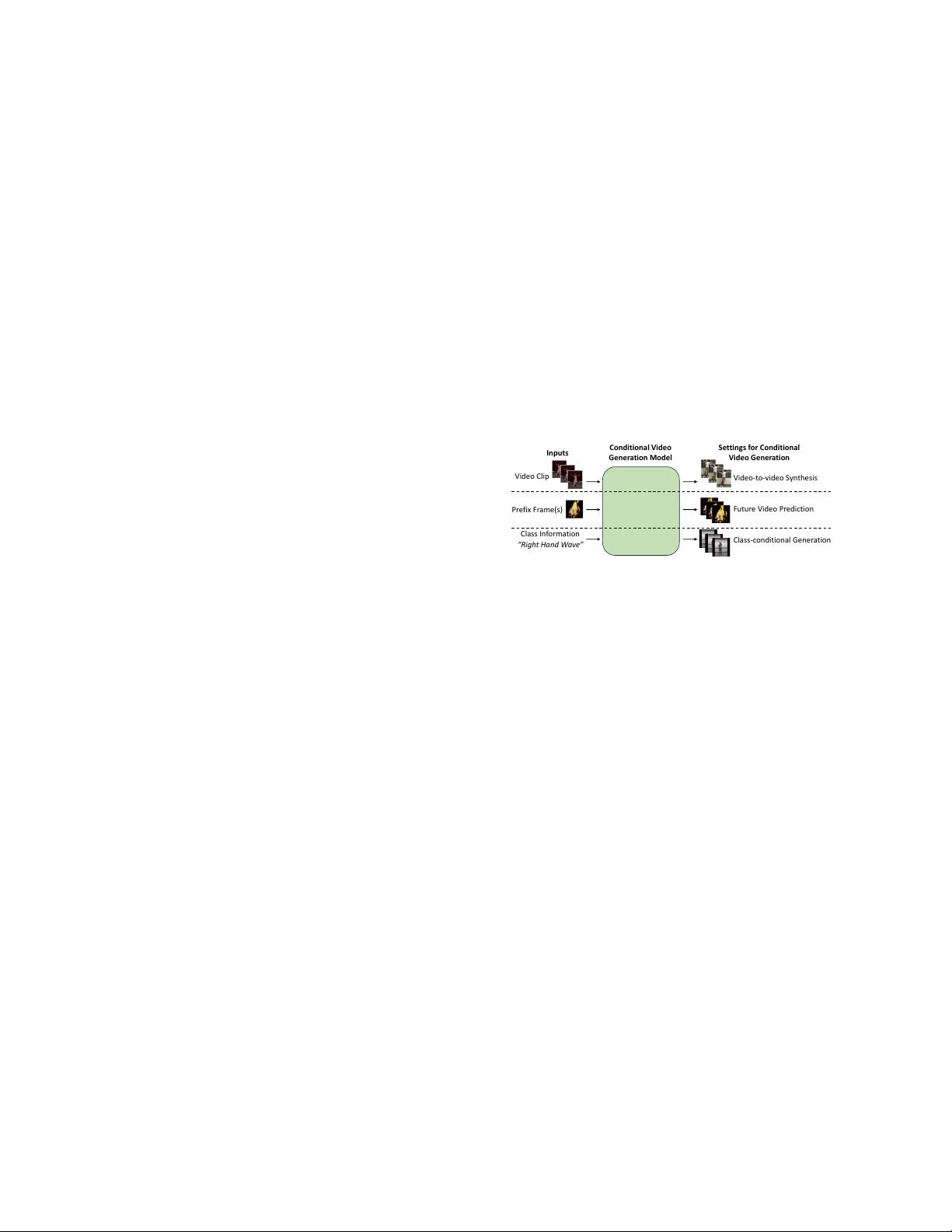

[Fig. 4 panels, Settings for Conditional Video Generation: Video-to-video Synthesis (input: video clip), Future Video Prediction (input: prefix frame(s)), Class-conditional Generation (input: class information, e.g., “Right Hand Wave”); each input is passed to a Conditional Video Generation Model.]

Fig. 4. Illustration of various conditional video generation inputs. Examples obtained from [84, 164, 475].

In conditional video generation, users can control the content of the generated video through various inputs. In this subsection, we investigate scenarios where the input is either a collection of video frames (including a single image) or a class label. A summary of these settings is shown in Fig. 4.

Video-to-Video Synthesis aims to generate a video conditioned on an input video clip. This task has many applications, including motion transfer and synthetic-to-real synthesis. Most approaches seek ways to transfer certain information (e.g., motion) to the generated video while keeping other aspects consistent (e.g., the identities of subjects remain the same).

Vid2vid [697] learns a cGAN from paired input and output videos with a spatio-temporal learning objective, in order to map videos from one visual domain to another. Chan et al. [84] extract the pose sequence of a source subject and transfer it to a target subject, which allows users to synthesize people dancing by transferring the motions of a dancer. Wang et al. [696] propose a few-shot approach to video-to-video synthesis that requires only a few example images of a new person at test time to transfer motions between subjects. Mallya et al. [431] aim to maintain 3D consistency in generated videos by condensing and storing information about the 3D world as a 3D point cloud, which improves temporal coherence. LIA [702] approaches the video-to-video task without explicit structure representations, performing motion transfer purely by manipulating the latent space of a GAN [218].

Future Video Prediction refers to the setting where a video generative model takes in some prefix frames (i.e., an image or a video clip) and aims to generate the future frames.

Srivastava et al. [628] propose to learn video representations with LSTMs in an unsupervised manner via future prediction, which allows the model to generate future frames of a given input video clip. Walker et al. [687] adopt a conditional VAE-based approach for self-supervised learning via future prediction, which can produce multiple predictions for an ambiguous future. Mathieu et al. [442] tackle the problem of blurry predictions by introducing an adversarial training approach, a multi-scale architecture, and an image gradient difference loss function. PredNet [416] designs an architecture in which only the deviations of the predictions of earlier network layers are fed to subsequent layers, which yields better representations for motion prediction. VPN [312] is trained to estimate the joint distribution of the pixel values in a video and factorizes the joint likelihood into a product of per-pixel conditional distributions, which makes computing a video's likelihood fully tractable. SV2P [31] aims to provide effective stochastic multi-frame predictions for real-world videos via an approach based on stochastic inference. MD-GAN [730] presents a GAN-based approach that generates realistic time-lapse videos from a single photo, producing them in multiple stages for more realistic modeling. Dorkenwald et al. [164] use a conditional invertible neural network to map between the image and video domains, allowing more control over the video synthesis task.
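To make the image gradient difference loss of Mathieu et al. [442] concrete: for a ground-truth frame Y and a predicted frame Ŷ, it sums, over all pixels (i, j), the terms ||Y(i,j) − Y(i−1,j)| − |Ŷ(i,j) − Ŷ(i−1,j)||^α + ||Y(i,j−1) − Y(i,j)| − |Ŷ(i,j−1) − Ŷ(i,j)||^α, so a prediction is penalized when its local intensity changes (edges) differ from those of the target. The sketch below assumes a single grayscale frame stored as nested lists; the function name and the toy frames are illustrative, and in the paper this term is combined with adversarial and ℓp losses rather than used alone.

```python
def gradient_difference_loss(pred, target, alpha=1.0):
    """Image gradient difference loss between two single-channel frames.

    pred, target: 2D lists (H x W) of pixel intensities with identical shape.
    alpha: exponent applied to each gradient-magnitude mismatch.
    """
    h, w = len(target), len(target[0])
    loss = 0.0
    for i in range(h):
        for j in range(w):
            if i > 0:  # vertical neighbours (rows i-1 and i)
                g_true = abs(target[i][j] - target[i - 1][j])
                g_pred = abs(pred[i][j] - pred[i - 1][j])
                loss += abs(g_true - g_pred) ** alpha
            if j > 0:  # horizontal neighbours (columns j-1 and j)
                g_true = abs(target[i][j - 1] - target[i][j])
                g_pred = abs(pred[i][j - 1] - pred[i][j])
                loss += abs(g_true - g_pred) ** alpha
    return loss


target = [[0.0, 1.0], [0.0, 1.0]]   # sharp vertical edge
blurred = [[0.5, 0.5], [0.5, 0.5]]  # edge averaged away, as a blurry predictor might do
print(gradient_difference_loss(blurred, target))  # 2.0
```

Because only differences between neighbouring pixels enter the loss, a prediction that is offset by a constant intensity but has the same edges incurs zero gradient difference loss, whereas a blurred prediction is penalized even when its mean intensity is correct.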

Class-conditional Generation aims to generate videos containing activities according to a given class label. A prominent example is the action generation task, where action classes are fed into the video generative model.
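One common and simple way for a conditional GAN to receive a class label is to concatenate a one-hot encoding of the label with the latent noise vector; the methods surveyed here may use other mechanisms (e.g., routing the action through an intermediate pose representation). The sketch below assumes this concatenation scheme and a hypothetical three-action vocabulary, and only builds the generator input, not the generator itself; all names are illustrative.

```python
import random

# Hypothetical action vocabulary, for illustration only.
ACTIONS = ["walk", "jump", "right hand wave"]


def one_hot(index, num_classes):
    """Return a list of length num_classes with 1.0 at position index and 0.0 elsewhere."""
    if not 0 <= index < num_classes:
        raise ValueError(f"class index {index} out of range for {num_classes} classes")
    vec = [0.0] * num_classes
    vec[index] = 1.0
    return vec


def conditional_latent(action, noise_dim, rng):
    """Concatenate Gaussian noise z with a one-hot encoding of the action label.

    The result would be the input to a (hypothetical) class-conditional video
    generator: resampling z gives different videos of the same action.
    """
    z = [rng.gauss(0.0, 1.0) for _ in range(noise_dim)]
    return z + one_hot(ACTIONS.index(action), len(ACTIONS))


rng = random.Random(0)
x = conditional_latent("right hand wave", noise_dim=4, rng=rng)
print(len(x), x[-3:])  # 7 [0.0, 0.0, 1.0]
```

Keeping the label separate from the noise in this way lets the same noise sample be paired with different actions, and vice versa.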

CDNA [183] proposes an unsupervised approach for learning to generate the motion of physical objects conditioned on an action, via pixel advection. PSGAN [755] leverages human pose as an intermediate representation to guide the generation of videos, conditioned on the pose extracted from the input image and on an action label. Kim et al. [334] adopt an unsupervised approach to train a model that detects the keypoints of arbitrary objects; these keypoints are then used as pseudo-labels for learning the objects' motions, enabling the generative model to be applied to datasets without costly keypoint annotations. GameGAN [331] trains a GAN to generate the next video frame of a graphics game based on the key pressed by the agent. ImaGINator [699] introduces a spatio-temporal cGAN architecture that decomposes

, Vol. 1, No. 1, Article . Publication date: September 2023.