"Shrinkage and Selection Methods for Linear Regression Using the Lasso"

Updated 2024-03-21

"Regression Shrinkage and Selection via the Lasso" is an influential paper by Robert Tibshirani, published in 1996 in the Journal of the Royal Statistical Society, Series B. The paper introduces the lasso, a method that both shrinks coefficients and selects variables in linear regression models. "Lasso" stands for Least Absolute Shrinkage and Selection Operator. It is a regularization technique that can improve the prediction accuracy and interpretability of linear regression models.

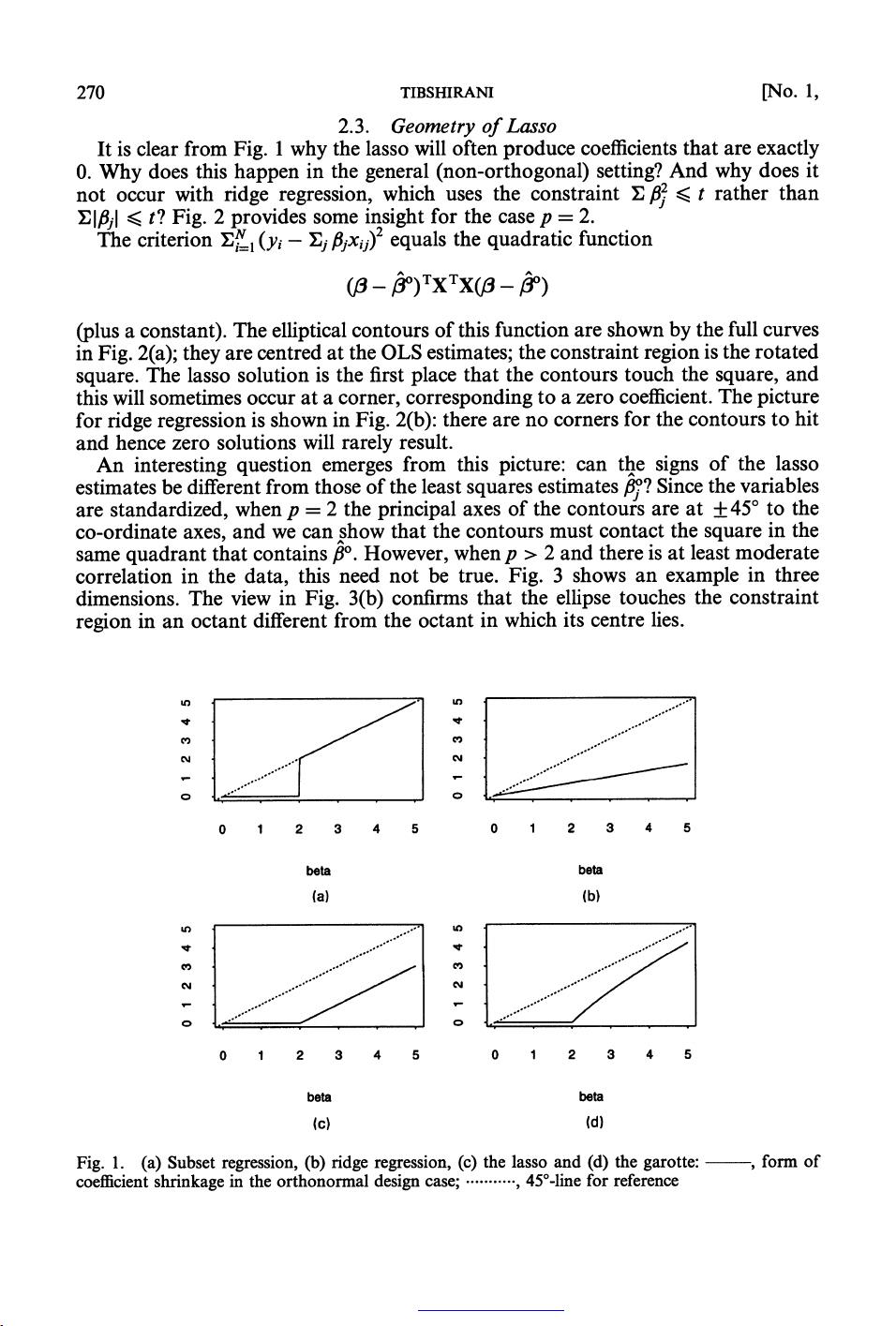

The key idea behind lasso regression is to minimize the usual residual sum of squares while limiting the sum of the absolute values of the coefficients (their L1 norm). The paper states this as a constraint: the L1 norm must not exceed a tuning parameter t. An equivalent and now more common form adds a penalty to the least squares loss, equal to the L1 norm multiplied by a tuning parameter lambda. Either way, the solution tends to be sparse: some coefficients are shrunk toward zero and others are set exactly to zero, and a larger lambda (or smaller t) zeroes out more of them. As a result, lasso regression performs variable selection by dropping the variables whose coefficients become zero.
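The penalized form described above can be sketched in a few lines of Python. This is a minimal illustration, not the algorithm from the paper: it assumes the objective 0.5 * ||y - X b||^2 + lam * sum(|b_j|) with no intercept, and it solves it by cyclic coordinate descent with soft-thresholding, a technique popularized after the paper appeared. The function names `soft_threshold` and `lasso_coordinate_descent`, the synthetic data, and the value `lam=20.0` are all assumptions made for the example.

```python
import numpy as np


def soft_threshold(z, gamma):
    """Shrink z toward zero by gamma; return zero if |z| <= gamma."""
    return np.sign(z) * max(abs(z) - gamma, 0.0)


def lasso_coordinate_descent(X, y, lam, n_iter=200):
    """Minimize 0.5 * ||y - X b||^2 + lam * sum(|b_j|) (assumed objective).

    Uses cyclic coordinate descent: each coefficient is updated in turn
    while the others are held fixed.
    """
    n, p = X.shape
    beta = np.zeros(p)
    col_sq = (X ** 2).sum(axis=0)      # squared norm of each column
    residual = y - X @ beta            # current residual y - X b
    for _ in range(n_iter):
        for j in range(p):
            # Inner product of column j with the residual that excludes
            # column j's own contribution.
            rho = X[:, j] @ residual + col_sq[j] * beta[j]
            new_bj = soft_threshold(rho, lam) / col_sq[j]
            # Keep the residual consistent with the updated coefficient.
            residual += X[:, j] * (beta[j] - new_bj)
            beta[j] = new_bj
    return beta


# Synthetic data (hypothetical): only variables 0 and 2 affect y.
rng = np.random.default_rng(0)
X = rng.standard_normal((100, 5))
true_beta = np.array([3.0, 0.0, -2.0, 0.0, 0.0])
y = X @ true_beta + 0.1 * rng.standard_normal(100)

beta_hat = lasso_coordinate_descent(X, y, lam=20.0)
print(beta_hat)
```

In this setup the coefficients of the three irrelevant variables should come out as exact zeros, while the two relevant ones are kept but shrunk slightly toward zero. With `lam=0` the soft-threshold step does nothing and the procedure reduces to ordinary least squares.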

Tibshirani evaluates the lasso through simulation studies and a real data example, comparing it with subset selection and ridge regression. The results show that the lasso can match or improve on these methods in prediction accuracy while producing a more interpretable model, although which method does best depends on the number and size of the true effects. The paper also addresses how to compute the lasso estimate, treating it as a quadratic programming problem with linear inequality constraints and describing algorithms for solving it.

"Regression Shrinkage and Selection via the Lasso" has had a lasting impact on statistics and data analysis. It established the lasso as a standard tool for variable selection and regularization, with applications in machine learning, econometrics, and other fields. The paper remains widely cited and is regarded as a seminal work in statistical methodology.
