Computer Vision on Mars

mission and supporting technology development. Demo III, RCTA, and PerceptOR addressed off-road navigation in more complex terrain and, to some degree, day/night, all-weather, and all-season operation. A Demo III follow-on activity, PerceptOR, and LAGR also involved systematic, quantitative field testing. For results of Demo III, RCTA, and PerceptOR, see (Shoemaker and Bornstein, 2000; Technology Development for Army Unmanned Ground Vehicles, 2002; Bornstein and Shoemaker, 2003; Bodt and Camden, 2004; Krotkov et al., 2007) and references therein. LAGR focused on applying learning methods to autonomous navigation. The DARPA Grand Challenge (DGC), though not a government-funded research program, stressed high speed and reliability over a constrained, 131-mile-long desert course. Both LAGR and DGC are too recent for citations to be available here. We review MER in the next section.

With rover navigation reaching a significant level of maturity, the problems of autonomous, safe, and precise landing in planetary missions are rising in priority. Feature tracking with a downward-looking camera during descent can contribute to terrain-relative velocity estimation and to landing hazard detection via structure from motion (SFM) and related algorithms. Robotic helicopters have a role to play in developing and demonstrating such capabilities. Kanade has made many contributions to structure from motion, notably the thread of factorization-based algorithms initiated by Tomasi and Kanade (1992). He also created one of the largest robotic helicopter research efforts in the world (Amidi et al., 1998), which has addressed issues including visual odometry (Amidi et al., 1999), mapping (Miller and Amidi, 1998; Kanade et al., 2004), and system identification modeling (Mettler et al., 2001). For safe and precise landing research per se, JPL began developing a robotic helicopter testbed in the late 1990s that ultimately integrated inertial navigation, SFM, and a laser altimeter to resolve scale in SFM. This testbed achieved the first fully autonomous landing hazard avoidance demonstration using SFM in September 2003 (Johnson et al., 2005a,b; Montgomery et al., to appear).
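
Because SFM from a single moving camera recovers structure and camera translation only up to an unknown global scale factor, a single metric range measurement is enough to fix that scale. The Python sketch below illustrates the idea under stated assumptions; it is not the JPL testbed's code. The function names `resolve_sfm_scale` and `apply_scale` are hypothetical, the altimeter beam is assumed to be parallel to the camera's optical axis (+z), the reconstructed points are assumed to be expressed in the camera frame, and the 2° footprint cone is an arbitrary illustrative value.

```python
import math
from statistics import median


def resolve_sfm_scale(points_sfm, altimeter_range, cone_half_angle_deg=2.0):
    """Return the metric scale factor for an up-to-scale SFM reconstruction.

    Hypothetical helper. Assumptions (not from the source):
      points_sfm: (x, y, z) ground points in the camera frame, arbitrary SFM units,
                  with +z along the optical axis, assumed parallel to the altimeter beam.
      altimeter_range: range measured by the laser altimeter, in metres.
    """
    max_angle = math.radians(cone_half_angle_deg)
    ranges_in_beam = []
    for x, y, z in points_sfm:
        if z <= 0:
            continue  # behind the camera: not a valid ground point
        off_axis = math.atan2(math.hypot(x, y), z)
        if off_axis <= max_angle:
            # Point lies inside the assumed altimeter footprint cone.
            ranges_in_beam.append(math.sqrt(x * x + y * y + z * z))
    if not ranges_in_beam:
        raise ValueError("no reconstructed points fall inside the altimeter footprint")
    # Median keeps a few mismatched features inside the footprint from skewing the scale.
    sfm_range = median(ranges_in_beam)
    return altimeter_range / sfm_range


def apply_scale(scale, points_sfm, translation_sfm):
    """Convert structure and camera translation to metres; rotation needs no change."""
    points_m = [(scale * x, scale * y, scale * z) for x, y, z in points_sfm]
    translation_m = tuple(scale * t for t in translation_sfm)
    return points_m, translation_m


if __name__ == "__main__":
    # Toy case: the ground is really about 50 m away but SFM placed it at 2.5 units.
    pts = [(0.0, 0.0, 2.5), (0.01, -0.02, 2.5), (1.0, 1.0, 2.5)]
    s = resolve_sfm_scale(pts, altimeter_range=50.0)
    print(round(s, 2))
```

Camera rotation estimated by SFM is unaffected by the scale ambiguity, so only structure and translation are rescaled; the median over in-footprint points is one simple robustness choice among several possible ones.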

Finally, Kanade guided early work in the area that became known as physics-based vision (Klinker et al., 1990; Nayar et al., 1991; Kanade and Ikeuchi, 1991), which exploits models of the physics of reflection to achieve deeper image understanding in a variety of ways. This outlook is reflected in our later work that exploits physical models from remote sensing to improve outdoor scene interpretation for autonomous navigation, including terrain classification with multispectral visible/near-infrared imagery (Matthies et al., 1996), negative obstacle detection with thermal imagery (Matthies and Rankin, 2003), detection of water bodies, snow, and ice by exploiting reflection, thermal emission, and ladar propagation characteristics (Matthies et al., 2003), and modeling the opposition effect to avoid false feature tracking in Mars descent imagery (Cheng et al., 2005).
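
The opposition effect is the brightness surge a rough, particulate surface shows near zero phase angle, i.e. around the antisolar point where the viewer's own shadow falls. Because that bright patch moves with the camera rather than with the terrain, a feature tracker can lock onto it and report spurious near-zero image motion. One simple mitigation, assumed here for illustration and not necessarily the model used by Cheng et al. (2005), is to reject candidate features whose phase angle is below a threshold. The sketch assumes a pinhole camera with hypothetical intrinsics `fx, fy, cx, cy`, a Sun distant enough that one unit vector `sun_dir` (pointing from the surface toward the Sun, in the camera frame) applies to every pixel, and an arbitrary 5° threshold.

```python
import math


def pixel_ray(u, v, fx, fy, cx, cy):
    """Unit viewing ray (camera frame, +z forward) through pixel (u, v) of a pinhole camera."""
    x, y, z = (u - cx) / fx, (v - cy) / fy, 1.0
    n = math.sqrt(x * x + y * y + z * z)
    return (x / n, y / n, z / n)


def phase_angle_deg(ray, sun_dir):
    """Angle at the surface point between the direction back to the camera (-ray)
    and the direction to the Sun. Both inputs are unit vectors in the camera frame."""
    dot = -(ray[0] * sun_dir[0] + ray[1] * sun_dir[1] + ray[2] * sun_dir[2])
    dot = max(-1.0, min(1.0, dot))  # guard acos against rounding error
    return math.degrees(math.acos(dot))


def reject_near_opposition(features, intrinsics, sun_dir, min_phase_deg=5.0):
    """Hypothetical filter: keep only (u, v) features viewed away from zero phase."""
    fx, fy, cx, cy = intrinsics
    kept = []
    for u, v in features:
        g = phase_angle_deg(pixel_ray(u, v, fx, fy, cx, cy), sun_dir)
        if g >= min_phase_deg:
            kept.append((u, v))
    return kept


if __name__ == "__main__":
    # Sun directly behind a nadir-looking camera: the antisolar point is the image centre.
    feats = [(256.0, 256.0), (260.0, 256.0), (512.0, 256.0)]
    print(reject_near_opposition(feats, (500.0, 500.0, 256.0, 256.0), (0.0, 0.0, -1.0)))
```

With the Sun directly behind the camera, the two features near the image centre fall inside the 5° zone and are dropped, while the feature at the image edge (about 27° phase) is kept.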

3. Computer Vision in the MER Mission

The MER mission landed two identical rovers, Spirit and Opportunity, on Mars in January 2004 to search for geological clues to whether parts of Mars formerly had environments wet enough to be hospitable to life. Spirit landed in the 160-km-diameter Gusev Crater, which intersects the end of one of the largest branching valleys on Mars (Ma’adim Vallis) and was thought possibly to have held an ancient lake. Opportunity landed in a smooth plain called Meridiani Planum, halfway around the planet from Gusev Crater. This site was targeted because orbital remote sensing showed that it is rich in a mineral called gray hematite, which on Earth is often, but not always, formed in association with liquid water. Scientific results from the mission have confirmed the presence of water and water-derived alteration of the rocks at both sites, but evidence for large lakes has not yet been discovered (Squyres and Knoll, 2005).

Details of the rover and lander design, mission operation procedures, and the individual computer vision algorithms used in the mission are covered in separate papers. In this section, we summarize the pertinent aspects of the rover and lander hardware, briefly review the vision algorithms, and show experimental results illustrating the qualitative behavior of the algorithms in operation on Mars. Section 4 addresses more quantitative performance evaluation issues and work in progress to improve performance.

3.1. Overview of the MER Spacecraft and Rover Operations

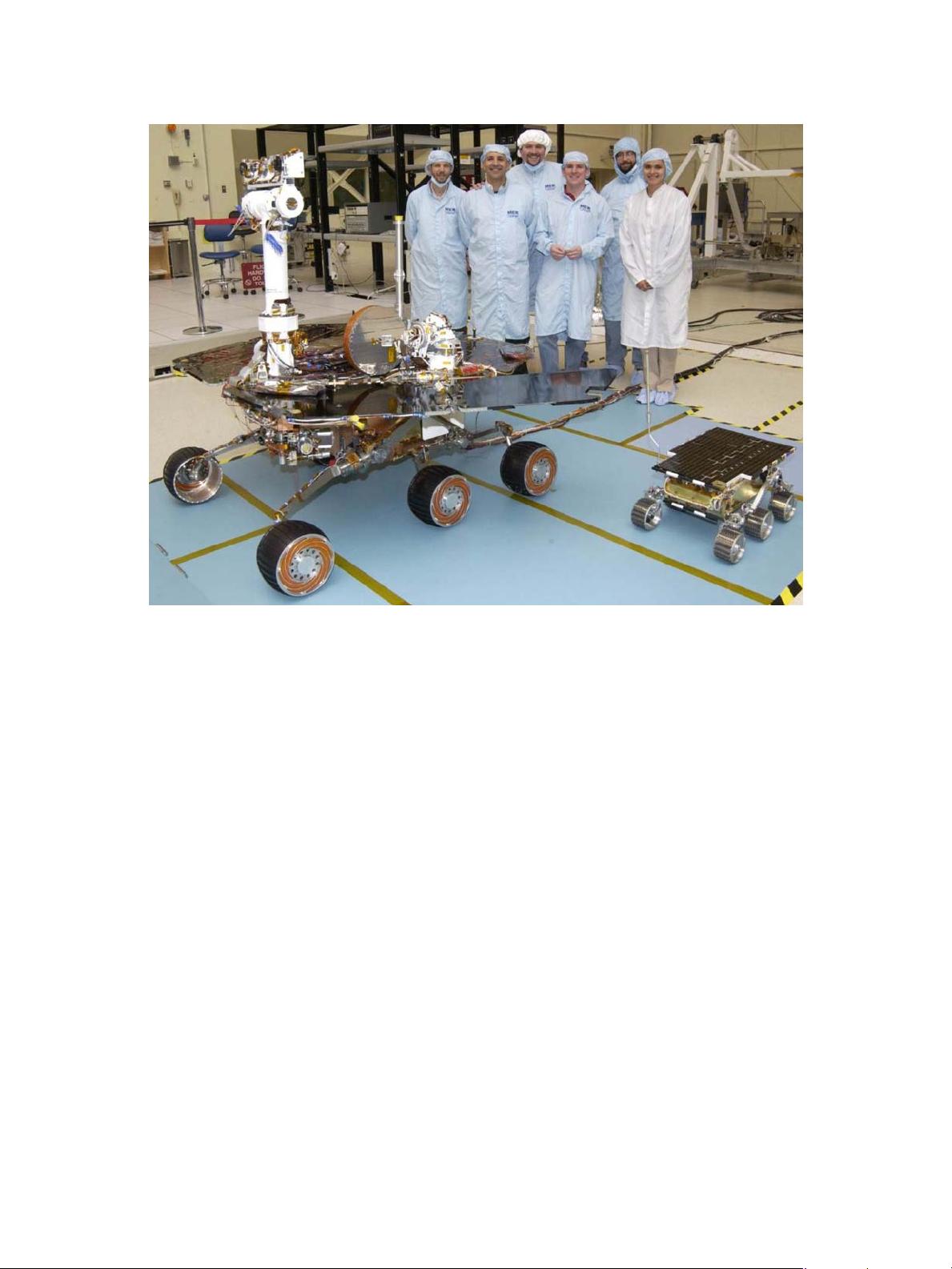

Figure 2 shows a photo of one of the MER rovers in a JPL clean room, together with the flight spare copy of the Sojourner rover from the 1997 Mars Pathfinder mission for comparison. The MER rovers weigh about 174 kg, are 1.6 m long, have a wheelbase of 1.1 m, and are 1.5 m tall to the top of the camera mast. Locomotion is achieved with a rocker-bogie system very similar to that of Sojourner, with six driven wheels that are all kept in contact with the ground by passive pivot joints in the rocker-bogie suspension. The outer four wheels are steerable.

The rovers are solar powered, with a rechargeable lithium-ion battery for nighttime science and communication operations. The onboard computer is a 20 MHz RAD6000, which has an early PowerPC instruction set, with no floating point, a very small L1 cache, no L2 cache, 128 MB of RAM, and 256 MB of flash memory. Navigation is done with three stereo camera pairs: one pair of “hazcams” (hazard cameras) looking forward