Cascaded Deep Video Deblurring Using Temporal Sharpness Prior

Jinshan Pan, Haoran Bai, and Jinhui Tang

Nanjing University of Science and Technology

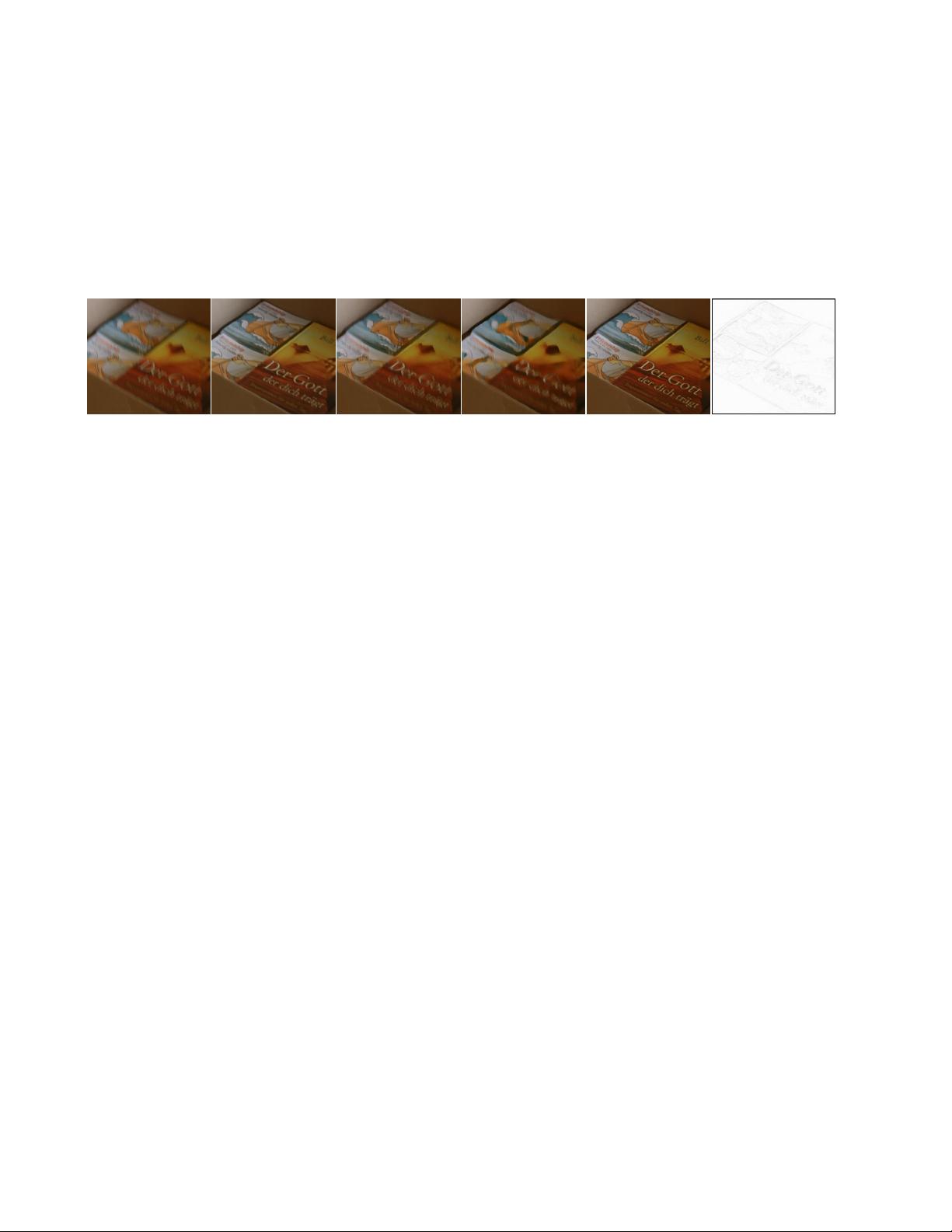

(a) Input frame (b) Kim and Lee [12] (c) STFAN [32] (d) EDVR [27] (e) Ours (f) Sharpness prior of (a)

Figure 1. Deblurred result on a challenging real video. Our algorithm is motivated by the success of variational model-based methods. It explores sharp pixels from adjacent frames using a temporal sharpness prior (see (f)) and restores sharp videos through a cascaded inference process. As our analysis shows, enforcing the temporal sharpness prior in a deep convolutional neural network (CNN) and learning the deep CNN in a cascaded manner can make the deep CNN more compact and thus generate better deblurred results than both the CNN-based methods [27, 32] and the variational model-based method [12].

Abstract

We present a simple and effective deep convolutional neural network (CNN) model for video deblurring. The proposed algorithm mainly consists of optical flow estimation from intermediate latent frames and latent frame restoration steps. It first develops a deep CNN model to estimate optical flow from intermediate latent frames and then restores the latent frames based on the estimated optical flow. To better explore the temporal information from videos, we develop a temporal sharpness prior to constrain the deep CNN model to help the latent frame restoration. We develop an effective cascaded training approach and jointly train the proposed CNN model in an end-to-end manner. We show that exploring the domain knowledge of video deblurring is able to make the deep CNN model more compact and efficient. Extensive experimental results show that the proposed algorithm performs favorably against state-of-the-art methods on the benchmark datasets as well as real-world videos. The training code and test model are available at https://github.com/csbhr/CDVD-TSP.
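The alternation described above (estimate optical flow from intermediate latent frames, derive a sharpness prior from the aligned neighbors, restore, and repeat) can be sketched as follows. This is a minimal NumPy illustration under stated assumptions, not the released implementation. The names `temporal_sharpness_prior` and `cascaded_deblur` are hypothetical, as are the callables `estimate_flow`, `warp`, and `restore`, which stand in for the learned networks and the warping operator. The exponential form of the prior is an assumption chosen so that pixels agreeing with their aligned neighbors score close to 1. For simplicity, the sketch keeps the number of frames fixed across stages.

```python
import numpy as np


def temporal_sharpness_prior(center, warped_neighbors):
    """Per-pixel weight in (0, 1]; near 1 where aligned neighbors agree with `center`.

    center:           (H, W, C) array, the current latent frame estimate.
    warped_neighbors: list of (H, W, C) arrays already aligned to `center`.
    The exponential form below is an assumed, illustrative choice.
    """
    center = np.asarray(center, dtype=np.float64)
    dist = np.zeros(center.shape[:2], dtype=np.float64)
    for neighbor in warped_neighbors:
        diff = np.asarray(neighbor, dtype=np.float64) - center
        dist += np.sum(diff ** 2, axis=-1)  # squared distance over channels
    return np.exp(-0.5 * dist)


def cascaded_deblur(frames, estimate_flow, warp, restore, num_stages=2):
    """Run `num_stages` rounds of flow estimation followed by restoration.

    estimate_flow(src, dst) -> flow, warp(frame, flow) -> aligned frame, and
    restore(center, warped, prior) -> new latent frame are hypothetical
    stand-ins for the learned components.
    """
    latent = [np.asarray(f, dtype=np.float64) for f in frames]
    for _ in range(num_stages):
        updated = []
        for i, center in enumerate(latent):
            # Use the immediate neighbors that exist at this position.
            neighbor_ids = [j for j in (i - 1, i + 1) if 0 <= j < len(latent)]
            warped = [warp(latent[j], estimate_flow(latent[j], center))
                      for j in neighbor_ids]
            prior = temporal_sharpness_prior(center, warped)
            updated.append(restore(center, warped, prior))
        latent = updated  # the next stage works on the refined estimates
    return latent
```

Each stage reuses the same three components on the output of the previous stage, so flow is estimated from progressively cleaner intermediate frames rather than from the blurred input.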

1. Introduction

Video deblurring, as a fundamental problem in the vision and graphics communities, aims to estimate latent frames from a blurred sequence. As more videos are taken using hand-held and onboard video capturing devices, this problem has received active research efforts within the last decade. The blur in videos is usually caused by camera shake, object motion, and depth variation. Recovering latent frames is highly ill-posed as only the blurred videos are given.

To recover the latent frames from a blurred sequence, conventional methods usually make assumptions on motion blur and latent frames [12, 2, 4, 11, 5, 29]. Among these methods, the motion blur is usually modeled as optical flow [12, 2, 5, 29]. The key to the success of these methods is jointly estimating the optical flow and latent frames under constraints imposed by hand-crafted priors. These algorithms are physically inspired and generate promising results. However, the assumptions on motion blur and latent frames usually lead to complex energy functions which are difficult to solve.

The deep convolutional neural network (CNN), as one of the most promising approaches, has been developed to solve video deblurring. Motivated by the success of deep CNNs in single image deblurring, Su et al. [24] concatenate consecutive frames and develop a deep CNN based on an encoder-decoder architecture to directly estimate the latent frames. Kim et al. [13] develop a deep recurrent network to recurrently restore latent frames by concatenating multi-frame features. To better capture the temporal information, Zhang et al. [31] develop spatial-temporal 3D convolutions to help latent frame restoration. These methods perform well when the motion blur is not significant and the displacement among input frames is small. However, they are less


arXiv:2004.02501v1 [cs.CV] 6 Apr 2020