44 J. Comput. Sci. & Technol., Jan. 2012, Vol.27, No.1

usually create a small number of threads, close to the

number of processors, while GPU programs usually cre-

ate thousands, or even millions of threads. As a re-

sult, the granularity of parallelism is different between

a CPU and GPU. In CPU programs, jobs are divided

into small number of sub-tasks, each assigned to a CPU

thread. GPU programs, on the other hand, divide jobs

into a large number of fine-grained sub-tasks, each as-

signed to a GPU thread.

The other obstacle is that CPU and GPU have

different ISA. In the early days of GPU computing,

the pioneer programmers used OpenGL to program

GPUs. With time the idea of the general purpose GPU

(GPGPU) has received much more attention, which has

led to changes in both the GPU hardware and pro-

gramming languages. Many features that were once

CPU-only are now used in GPU designs, such as dou-

ble precision arithmetic operations, atomic operations,

and cache. However programmers are still required to

write different code for the CPU and GPU. Code writ-

ten for the CPU cannot be run directly on the GPU,

and vice versa.

To solve the first problem, we provide a

MapReduce

[10]

based programming API. The MapRe-

duce based API enables programmers to specify the

parallelism without needing to know the underlying

threading model. We will discuss the programming

API in detail in Subsection 2.2. The second problem

is solved by defining the programming language. In

MapCG, programmers write code in a defined subset of

the C++ language, which is then translated into corre-

sponding CPU/GPU code with a source code transla-

tion tool. This is discussed in detail in Subsection 2.3.

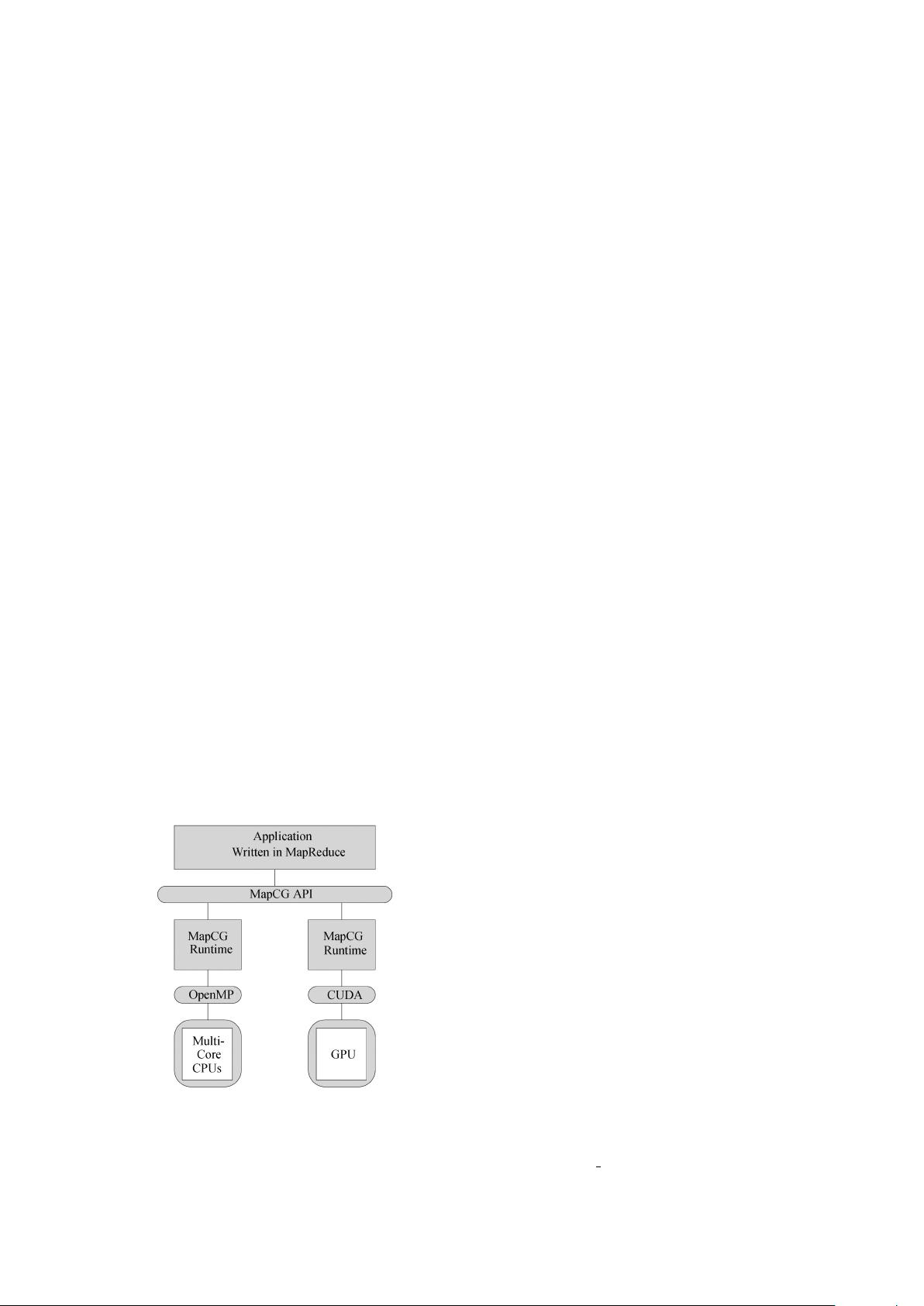

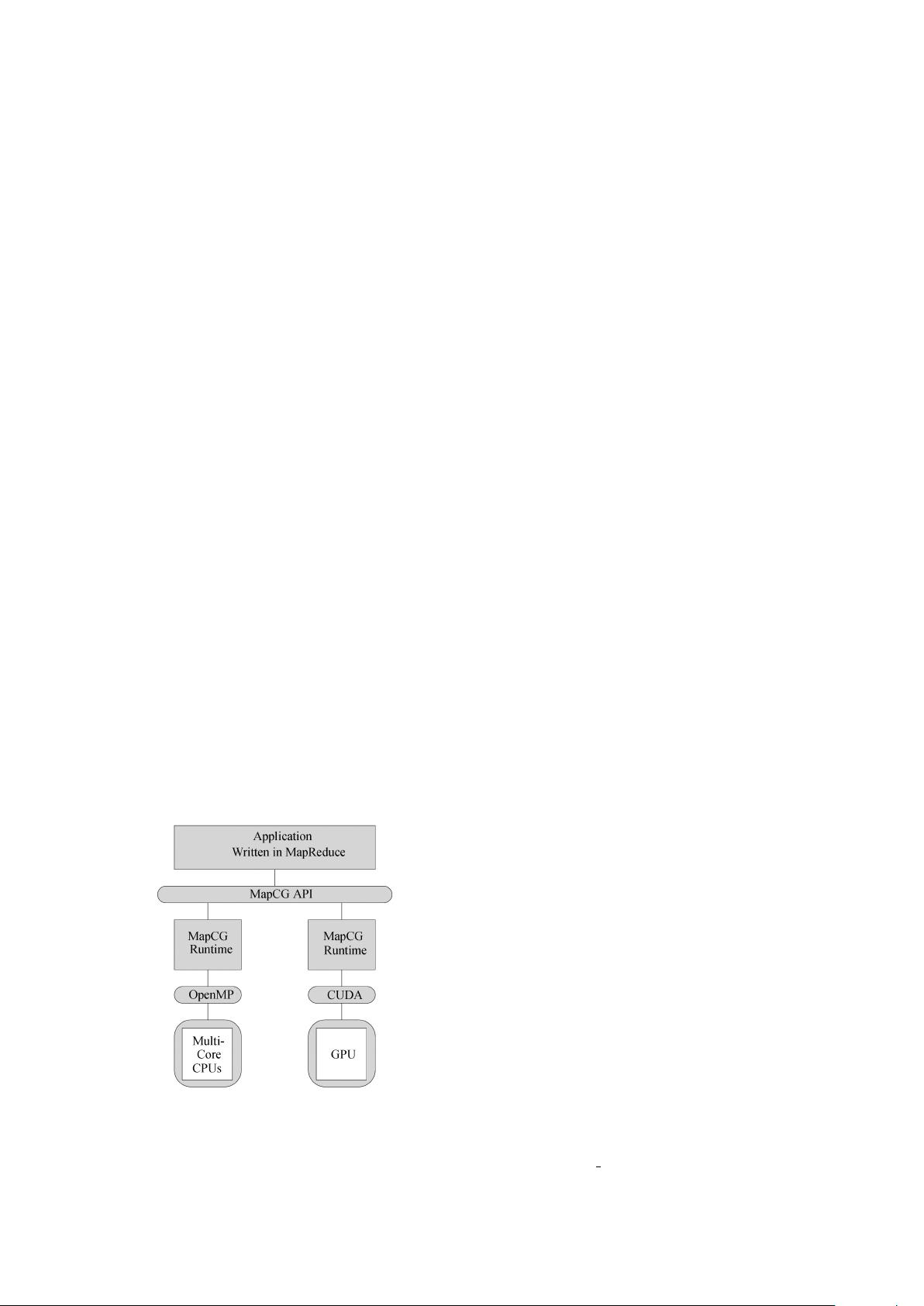

Fig.1 shows an overview of the MapCG framework.

Fig.1. Overview of the MapCG framework.

The MapCG API provides a MapReduce-style para-

llel programming model. Programmers express the

parallelism of the application in the MapReduce model,

and write the map() and reduce() functions in a de-

fined language, which provides a unified view of the

ISA of CPU and GPU. The framework generates CPU

and GPU versions of the Map and Reduce functions by

source code translation, and then uses the MapCG run-

time library to execute them on CPU and GPU respec-

tively. The MapCG runtime is responsible for executing

MapReduce code efficiently on different architectures.

It also fills the gaps between architecture capabilities.

For example, it provides the string library on the GPU,

which enables the programmer to call the string library

in the Map and Reduce functions. We also allow co-

processing of CPU and GPU, i.e., using CPU and GPU

together to finish the map() and reduce() tasks. This

is accomplished by dispatching the map() and reduce()

tasks to the CPU and GPU simultaneously.

At present, the MapCG runtime on CPU is imple-

mented using C++ and OpenMP. The runtime on GPU

is implemented using CUDA, the most commonly used

GPU programming mo del. OpenCL is another option

for implementing the MapCG runtime on GPU. We

leave this for the future work.

2.2 Programming Interface

The MapCG API is based on the MapReduce

[10]

programming model. The MapReduce programming

model originated from functional languages, and was

proposed as a parallel programming model by Google.

Applications written in MapReduce execute in a map-

reduction manner, which is very straightforward. Pro-

grammers simply specify how to split the input data

and how to do the mapping and reduction. The run-

time environment schedules the map() and reduce()

tasks to parallel threads. It also takes care of com-

munication and fault tolerance. Due to its simplicity,

the MapReduce programming model has attained great

popularity since its creation. It has been used widely

in data mining

[11]

, machine learning

[12]

and many other

fields

[13-14]

.

The execution of a MapReduce program can be sim-

ply described as follows.

1) The MapReduce framework divides the input

data into pieces using a programmer specified split()

function.

2) Each piece of the input data is then passed as

input to an instance of the map() function, forming

a map task. Map tasks execute in parallel in differ-

ent threads/processes, each processing its own data.

While processing, map tasks may produce (key, value)

pairs, which are called intermediate pairs. Map tasks

notify the MapReduce framework about the pairs by

calling the emit intermediate() function defined by the