4 IEEE TRANSACTIONS ON KNOWLEDGE AND DATA ENGINEERING, VOL. 25, NO. 1, JANUARY 2014

if the FSA reaches a state from where there is no

outgoing edge corresponding to the last symbol of the

current subsequence. FSAs have been used for outlier

detection in [23], [35], [36], [37]. The methods to gen-

erate the state transition rules depend on particular

application domains.

Some Markov methods store conditional informa-

tion for a fixed history size=k, while others use a

variable history size to capture richer temporal depen-

dencies. Ye [38] proposes a technique where a Markov

model with k=1 is used. In [39], [40], the conditional

probability distribution (CPD) are stored in proba-

bilistic suffix trees (PSTs) for efficient computations.

Sparse Markovian techniques estimate the conditional

probability of an element based on symbols within the

previous k symbols, which may not be contiguous or

immediately preceding to the element [41], [42].

HMMs can be viewed as temporal dependency-

oriented mixture models, where hidden states and

transitions are used to model temporal dependencies

among mixture components. HMMs do not scale well

to real life datasets. The training process may require

judicious selection of the model, the parameters, and

initialization values of the parameters. On the other

hand, HMMs are interpretable and theoretically well

motivated. Approaches that use HMMs for outlier

detection include [23], [43], [44], [45], [46].

Unsupervised OLAP based Approach

Besides traditional uni-variate time series data,

richer time series are quite popular. For example, a

time series database may contain a set of time series,

each of which are associated with multi-dimensional

attributes. Thus, the database can be represented

using an OLAP cube, where the time series could

be associated with each cell as a measure. Li et

al. [47] define anomalies in such a setting, where given

a probe cell c, a descendant cell is considered an

anomaly if the trend, magnitude or the phase of its

associated time series are significantly different from

the expected value, using the time series for the probe

cell c.

Supervised Approaches

In the presence of labeled training data, the fol-

lowing supervised approaches have been proposed in

the literature: positional system calls features with the

RIPPER classifier [48], subsequences of positive and

negative strings of behavior as features with string

matching classifier [34], [49], neural networks [50],

[51], [52], [53], [54], Elman network [53], motion fea-

tures with SVMs [55], bag of system calls features

with decision tree, Na

¨

ıve Bayes, SVMs [56]. Sliding

window subsequences have also been used as features

with SVMs [57], [58], rule based classifiers [59], and

HMMs [44].

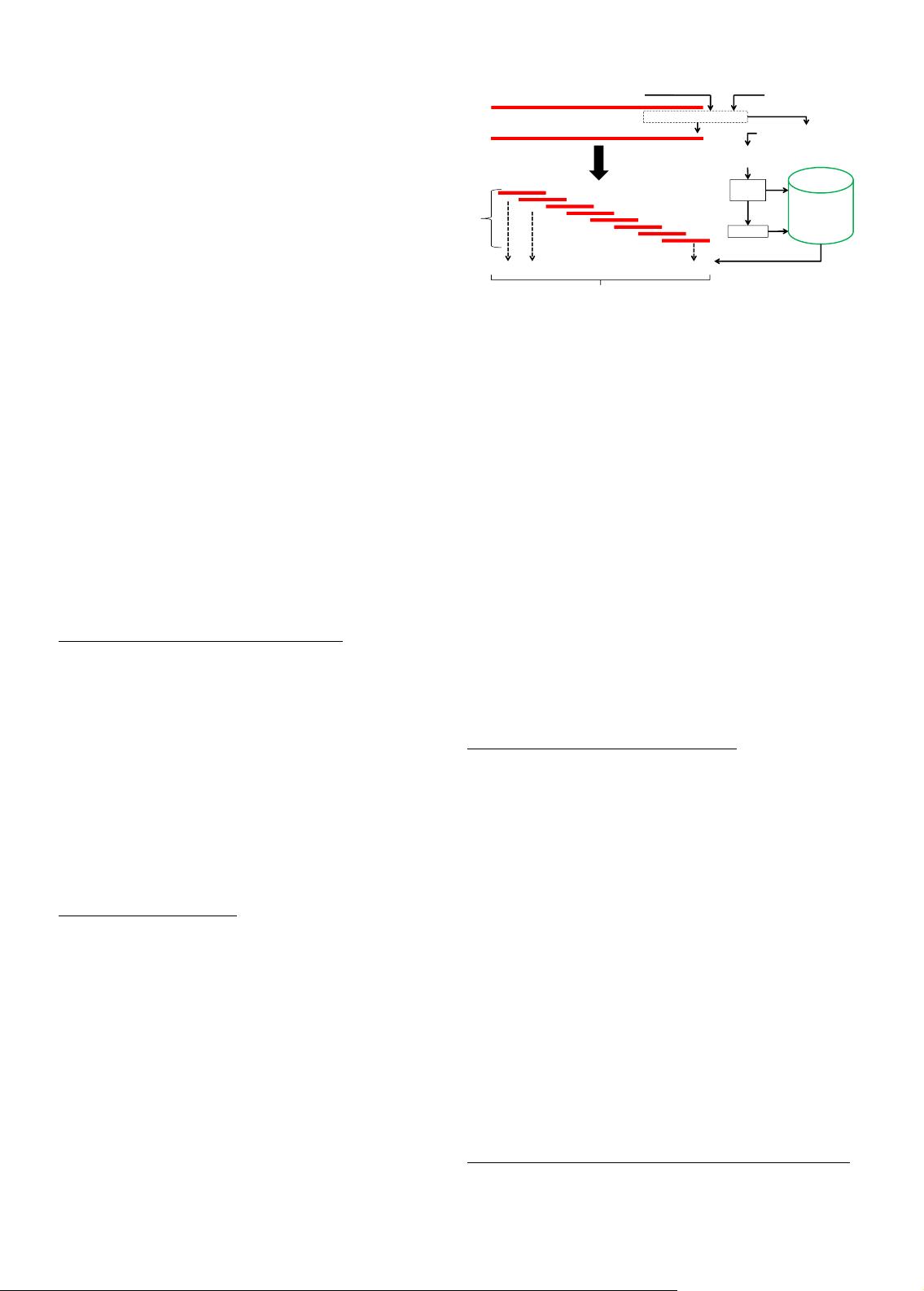

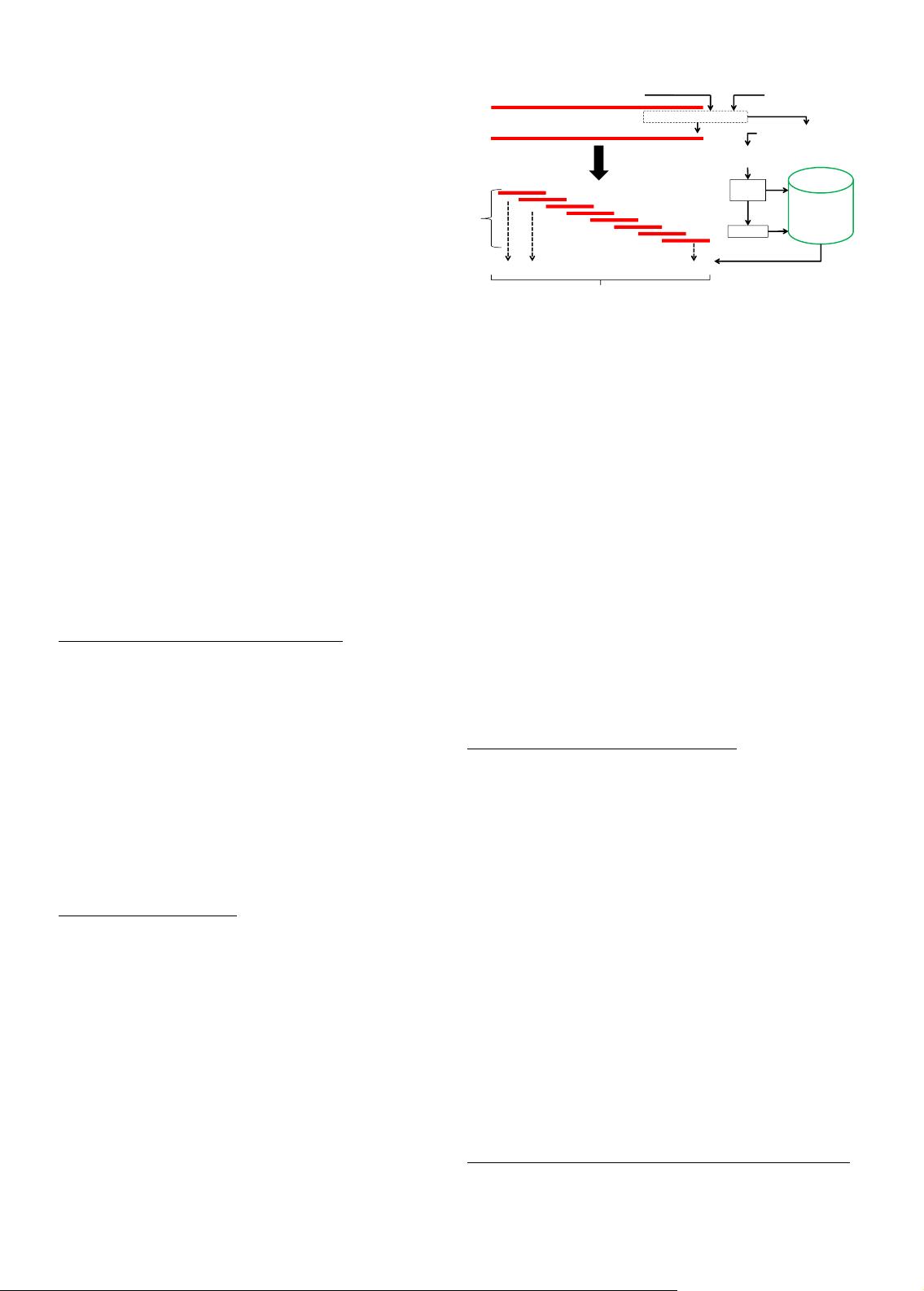

2.1.2 Window based Detection of Outlier Time Series

Given: A database of time series

Normal

or Abnormal

Windows

Database

Test Sequence s

Windows

!

"

Train Sequences

Optional Processing like DWT

Processed Sequence

Processed Sequences

Normal

Samples

Detectors

Length-w

windows

Fig. 2. Window Based Time Series Outlier Detection

Find: All anomalous time windows, and hence

anomalous time series

Compared to the techniques in the previous sub-

section, the test sequence is broken into multiple

overlapping subsequences (windows). The anomaly

score is computed for each window, and then the

anomaly score (AS) for the entire test sequence is

computed in terms of that of the individual windows.

Window-based techniques can perform better local-

ization of anomalies, compared to the techniques that

output the entire time series as outliers directly. These

techniques need the window length as a parameter.

Windows are called fingerprints, pattern fragments,

detectors, sliding windows, motifs, and n-grams in

various contexts. In this methodology, the techniques

usually maintain a normal pattern database, but some

approaches also maintain a negative pattern or a

mixed pattern database. Figure 2 shows a general

sketch of the method.

Normal Pattern Database Approach

In this approach, normal sequences are divided into

size w overlapping windows. Each such window sub-

sequence is stored in a database with its frequency. For

a test sequence, subsequences of size w are obtained,

and those subsequences that do not occur in normal

database are considered mismatches. If a test sequence

has a large number of mismatches, it is marked as

an anomaly [44], [49], [51], [53], [60]. Rather than

looking for exact matches, if a subsequence is not

in the database, soft mismatch scores can also be

computed [20], [61], [62], [63].

Besides contiguous window subsequences, a looka-

head based method can also be used for building

a normal database [64]. For every element in every

normal sequence, the elements occurring at distance

1,2,. . ., k in the sequence are noted. A normal database

of such occurrences is created. Given a new test

sequence, a lookahead of the same size k is used. Each

pair of element occurrence is checked with the normal

database, and the number of mismatches is computed.

Negative and Mixed Pattern Database Approaches

Besides the dictionaries for normal sequences,

anomaly dictionaries can also be created [34], [50],

[65], [66], [67]. All normal subsequences of length w