
detect various attacks in the in-vehicle network. The use of static threshold values for detection limits the

scheme to detecting only very simple attacks. In [33], the authors propose a deep convolutional neural network

(CNN) model to detect anomalies in the vehicle’s CAN network. However, the model does not consider the

temporal relationships between messages, which could help detect certain attacks more reliably. The authors in [34]

proposed an LSTM framework with a hierarchical attention mechanism to reconstruct the input messages. A

non-parametric kernel density estimator along with a k-nearest neighbors classifier is then applied to the

reconstruction error to detect anomalies. Although most of these techniques attempt

to increase the detection accuracy and attack coverage, none of them offers the ability to process very long

sequences with relatively low memory and runtime overhead and still achieve reasonably high performance.

In this paper, we propose a robust deep learning model that integrates a stacked LSTM based encoder-

decoder model with a self-attention mechanism, to learn normal system behavior by learning to predict the next

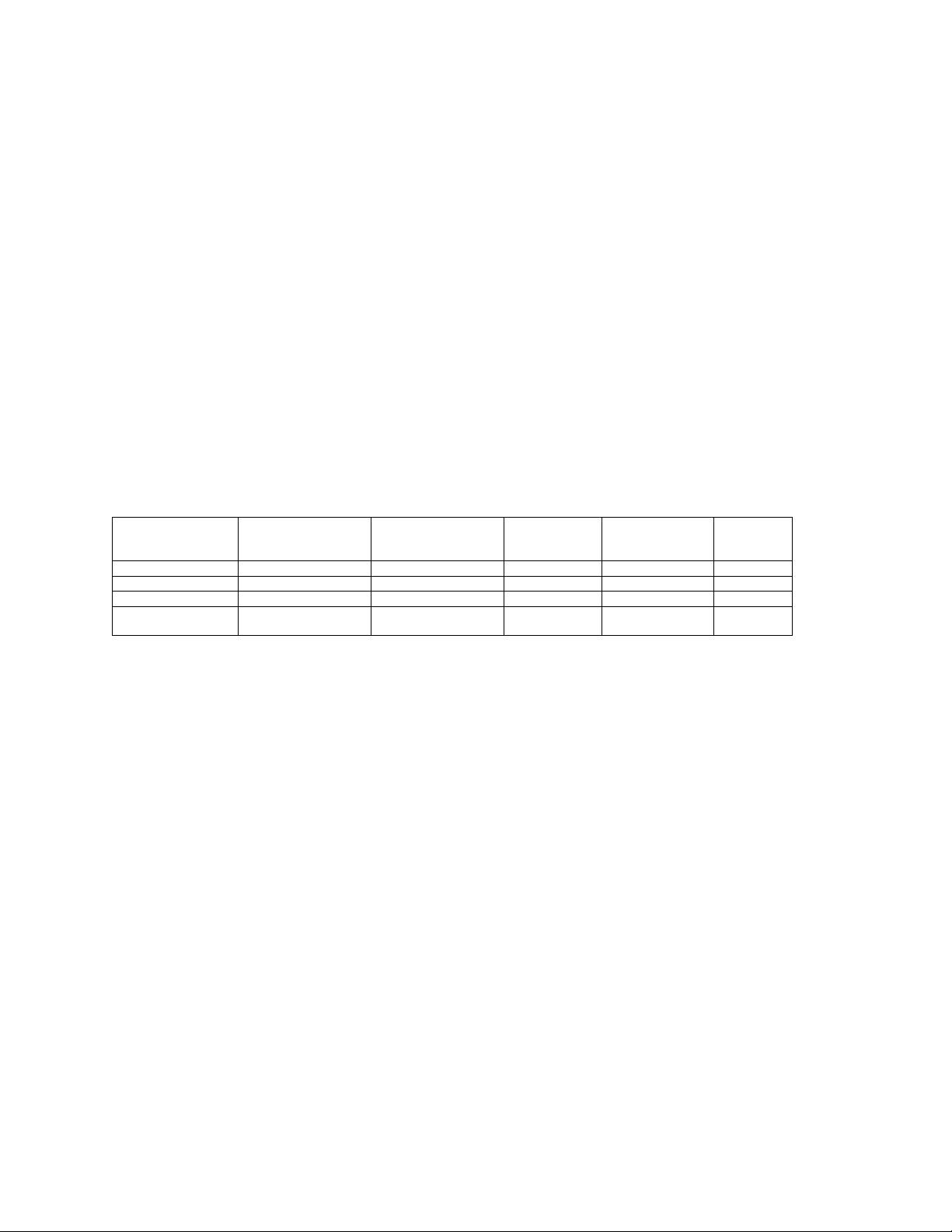

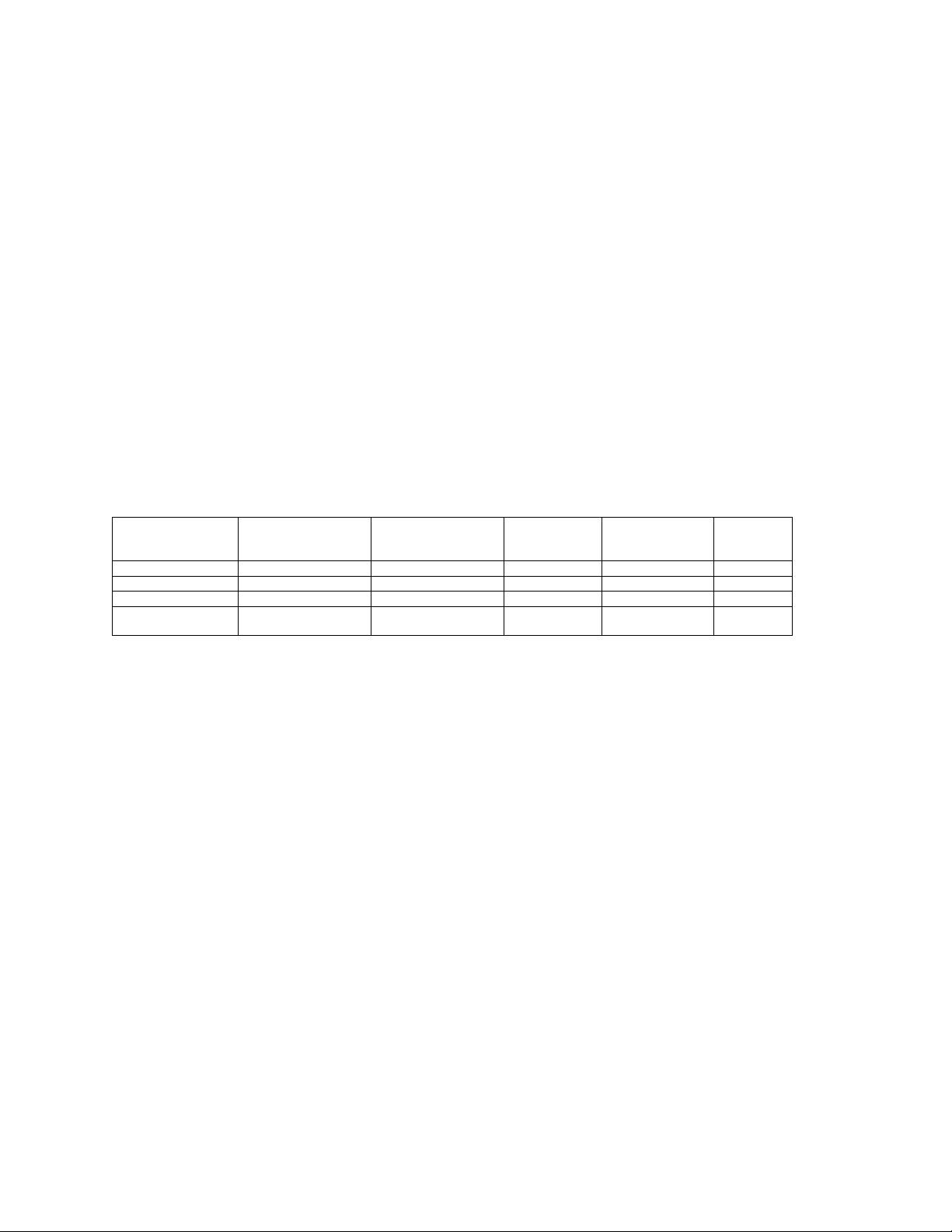

message instance. Table I summarizes some of the state-of-the-art anomaly detection works and their key

features, and highlights the unique characteristics of our proposed LATTE framework. At runtime, we

continuously monitor in-vehicle network messages and provide a reliable detection mechanism using a non-linear

classifier. Sections 4 and 5 provide a detailed explanation of the proposed model and overall framework.

In Section 6, we show how our model is capable of efficiently identifying a variety of attack scenarios.

Table 1: Comparison between our proposed LATTE framework and the state-of-the-art works

| Technique | Task | Network architecture | Attention type | Detection technique | Requires labeled data? |
| --- | --- | --- | --- | --- | --- |
| BWMP [30] | Bit level prediction | LSTM network | - | Static threshold | Yes |
| RepNet [28] | Input recreation | Replicator network | - | Static threshold | No |
| HAbAD [34] | Input recreation | Autoencoder | Hierarchical | KDE and KNN | Yes |
| LATTE | Next message value prediction | Encoder-decoder | Self-attention | OCSVM | No |

3 BACKGROUND

Solving complex problems using deep learning has been made possible by advances in computing hardware

and the availability of high-quality datasets. Anomaly detection is one such problem that can leverage the power

of deep learning. In an automotive system, ECUs exchange safety-critical messages periodically over the

in-vehicle network. This time-series exchange of data results in temporal relationships between messages, which

can be exploited to detect anomalies. However, this requires a special type of neural network, called a recurrent

neural network (RNN), to capture the temporal dependencies between messages. Unlike traditional feed-forward

neural networks, where the output is independent of any previous inputs, RNNs use previous sequence

state information in computing the output, which makes them an ideal choice to handle time-series data.
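
The contrast between a feed-forward unit and a recurrent unit can be illustrated with a minimal sketch. The code below is not the model proposed in this paper; it is a scalar, single-unit simplification with hypothetical, hand-picked weights (`w_x`, `w_h`, `b`) and made-up input sequences, intended only to show that a recurrent state depends on the history of inputs while a feed-forward output does not.

```python
import math


def feedforward_step(x, w, b):
    """Feed-forward unit: the output depends only on the current input x."""
    return math.tanh(w * x + b)


def rnn_step(x, h_prev, w_x, w_h, b):
    """Vanilla RNN unit: the new hidden state combines the current input
    with the hidden state carried over from the previous time step."""
    return math.tanh(w_x * x + w_h * h_prev + b)


def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Run the recurrent unit over a sequence and return every hidden state.
    The default weights are illustrative assumptions, not trained values."""
    h = 0.0  # initial hidden state
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states


# Two hypothetical signal sequences that end in the same value
# but have different histories.
seq_a = [1.0, 1.0, 0.2]
seq_b = [-1.0, -1.0, 0.2]

# The feed-forward unit sees only the last value, so both outputs match.
ff_a = feedforward_step(seq_a[-1], w=0.5, b=0.0)
ff_b = feedforward_step(seq_b[-1], w=0.5, b=0.0)

# The recurrent unit carries state, so the final hidden states differ.
rnn_a = run_rnn(seq_a)[-1]
rnn_b = run_rnn(seq_b)[-1]

print("feed-forward outputs equal:", ff_a == ff_b)
print("final RNN states:", rnn_a, rnn_b)
```

Because the two sequences share the same final value, the feed-forward outputs are identical, whereas the final recurrent states differ. This history dependence is what allows a sequence model to capture temporal relationships between messages.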

3.1 Recurrent Neural Network (RNN)

An RNN [35] is the most basic sequence model that takes sequential data such as time-series data as the input

and learns the underlying temporal relationships between data samples. An RNN block consists of an input, an

output, and a hidden state that allows it to remember the learned temporal information. The input, output, and

hidden state all correspond to a particular time step in the sequence. The hidden-state information can be

thought of as a data point in the latent space that contains important temporal information about the inputs from