
2018 USENIX Annual Technical Conference: Boston Conference Proceedings


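“2018 USENIX Annual Technical Conference.pdf” is the proceedings of an annual technical conference for the computing industry, organized by the USENIX Association. The conference comprises tutorials on computing topics, a single-track technical program presenting peer-reviewed research papers, Special Interest Group (SIG) meetings, and Birds-of-a-Feather (BoF) sessions.

The 2018 USENIX Annual Technical Conference brought experts and scholars from around the world together in Boston on July 11–13, 2018. The conference was sponsored by many well-known companies, including Facebook, Google, Microsoft, NetApp, Private Internet Access, Amazon, Bloomberg, Oracle, Squarespace, and VMware. These sponsors played a key role in the conference's success and gave attendees a venue for exchanging the latest research and technology.

The conference publication, the proceedings of the 2018 USENIX Annual Technical Conference, carries ISBN 978-1-931971-44-7, is published by the USENIX Association, and is subject to specific copyright rules. Authors or their employers retain the rights to their individual papers, but noncommercial reproduction for educational or research purposes is permitted. An individual may print one copy of the proceedings for their own exclusive personal use.

USENIX ATC '18 was also supported by gold, silver, and bronze sponsors, as well as industry partners and media sponsors such as ACM Queue. This sponsorship supported the running of the conference and helped promote academic exchange and collaboration in computer science.

The conference covered a broad range of IT topics, including operating systems, networking, security, storage, system architecture, programming languages, and performance analysis. Attendees could learn about the latest research results and discuss them in depth with their peers. Through venues such as USENIX, researchers and industry experts share new ideas and work on current technical challenges, while students and early-career practitioners gain opportunities to learn and build professional networks.

The 2018 USENIX Annual Technical Conference presented recent advances in computing and supported the spread and sharing of knowledge within the industry. Taking part in such a conference helps individuals and organizations stay current, improve their technical skills, and learn about possible future technology trends.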

Tributary: spot-dancing for elastic services with latency SLOs

Aaron Harlap§∗, Andrew Chung§∗, Alexey Tumanov†, Gregory R. Ganger§, Phillip B. Gibbons§

§Carnegie Mellon University, †UC Berkeley

Abstract

The Tributary elastic control system embraces the uncertain nature of transient cloud resources, such as AWS spot instances, to manage elastic services with latency SLOs more robustly and more cost-effectively. Such resources are available at lower cost, but with the proviso that they can be preempted en masse, making them risky to rely upon for business-critical services. Tributary creates models of preemption likelihood and exploits the partial independence among different resource offerings, selecting collections of resource allocations that satisfy SLO requirements and adjusting them over time, as client workloads change. Although Tributary’s collections are often larger than required in the absence of preemptions, they are cheaper because of both lower spot costs and partial refunds for preempted resources. At the same time, the often-larger sets allow unexpected workload bursts to be absorbed without SLO violation. Over a range of web service workloads, we find that Tributary reduces cost for achieving a given SLO by 81–86% compared to traditional scaling on non-preemptible resources, and by 47–62% compared to the high-risk approach of the same scaling with spot resources.

1 Introduction

Elastic web services have been a cloud computing staple from the beginning, adaptively scaling the number of machines used over time based on time-varying client workloads. Generally, an adaptive scaling policy seeks to use just the number of machines required to achieve its Service Level Objectives (SLOs), which are commonly focused on response latency and ensuring that a given percentage (e.g., 95%) of requests are responded to in under a given amount of time [17, 28, 19]. Too many machines results in unnecessary cost, and too few results in excess customer dissatisfaction. As such, much research and development has focused on doing this well [20, 14, 11, 12, 26].

Elastic service scaling schemes generally assume independent and infrequent failures, which is a relatively safe assumption for high-priority allocations in private clouds and non-preemptible allocations in public clouds (e.g., on-demand instances in AWS EC2 [3]). This assumption enables scaling schemes to focus on client workload and server responsiveness variations in determining changes to the number of machines needed to meet SLOs.

∗ Equal contribution

Modern clouds also offer transient, preemptible resources (e.g., EC2 Spot Instances [1]) at a discount of 70–80% [6], creating an opportunity for cheaper service deployments. But, simply using standard scaling schemes fails to address the risks associated with such resources. Namely, preemptions should be expected to be more frequent than failures and, more importantly, preemptions often occur in bulk. Akin to co-occurring failures, bulk preemptions can cause traditional scaling schemes to have sizable gaps in SLO attainment.

This paper describes Tributary, a new elastic control system that exploits transient, preemptible resources to reduce cost and increase robustness to unexpected workload bursts. Tributary explicitly recognizes the bulk preemption risk, and it exploits the fact that preemptions are often not highly correlated across different pools of resources in heterogeneous clouds. For example, in AWS EC2, there is a separate spot market for each instance type in each availability zone, and researchers have noted that they often move independently: while preemptions within each spot market are correlated, across spot markets they are not [16]. To safely use preemptible resources, Tributary acquires collections of resources drawn from multiple pools, modified as resource prices change and preemptions occur, while endeavoring to ensure that no single bulk preemption would cause SLO violation. We refer to this dynamic use of multiple preemptible resource pools as spot-dancing.

AcquireMgr is Tributary’s component that decides the resource collection’s makeup. It works with any traditional scaling policy that determines (reactively or predictively) how many cores or machines are needed for each successive period of time, based on client load variation. AcquireMgr decides which instances will provide sufficient likelihood of meeting each time period’s target at the lowest expected cost. Its probabilistic algorithm combines resource cost and preemption probability predictions for each pool to decide how many resources to include from each pool, and at what price to bid for any new resources (relative to the current market price).

USENIX Association 2018 USENIX Annual Technical Conference 1

Given that a preemption occurs when a market’s spot price exceeds the bid price given at resource acquisition time, AcquireMgr can affect the preemption probability via the delta between its bid price and the current price, informed by historical pricing trends. In our implementation, which is specialized to AWS EC2, the predictions use machine learning (ML) models trained on historical EC2 Spot Price data. The expected cost of the computation takes into account EC2’s policy of partial refunds for preempted instances, which often results in AcquireMgr choosing high-risk instances and achieving even bigger savings than just the discount for preemptibility.

In addition to the expected cost savings, Tributary’s spot-dancing provides a burst tolerance benefit. Any elastic control scheme has some reaction delay between an unexpected burst and any resulting addition of resources, which can cause SLO violations. Because Tributary’s resource collection is almost always bigger than the scaling policy’s most recent target in order to accommodate bulk preemptions, extra resources are often available to handle unexpected bursts. Of course, traditional elastic control schemes can also acquire extra resources as a buffer against bursts, but only at a cost, whereas the extra resources when using Tributary are a bonus side-effect of AcquireMgr’s robust cost savings scheme.

Results for four real-world web request arrival traces and real AWS EC2 spot market data demonstrate Tributary’s cost savings and SLO benefits. For each of three popular scaling policies (one reactive and two predictive), Tributary’s exploitation of AWS spot instances reduces cost by 81–86% compared to traditional scaling with on-demand instances for achieving a given SLO (e.g., 95% of requests below 1 second). Compared to unsafely using traditional scaling with spot instances (AWS AutoScale [2]) instead of on-demand instances, Tributary reduces cost by 47–62% for achieving a given SLO. Compared to other recent systems’ policies for exploiting spot instances to reduce cost [24, 16], Tributary provides higher SLO attainment at significantly lower cost.

This paper makes four primary contributions. First, it describes Tributary, the first resource acquisition system that takes advantage of preemptible cloud resources for elastic services with latency SLOs. Second, it introduces AcquireMgr algorithms for composing resource collections of preemptible resources cost-effectively, exploiting the partial refund model of EC2’s spot markets. Third, it introduces a new preemption prediction approach that our experiments with EC2 spot market price traces show is significantly more accurate than previous preemption predictors. Fourth, we show that Tributary’s approach yields significant cost savings and robustness benefits relative to other state-of-the-art approaches.

2 Background and Related Work

Elastic services dynamically acquire and release machine resources to adapt to time-varying client load. We distinguish two aspects of elastic control, the scaling policy and the resource acquisition scheme. The scaling policy determines, at any point in time, how many resources the service needs in order to satisfy a given SLO. The resource acquisition scheme determines which resources should be allocated and, in some cases, aspects of how (e.g., bid price or priority level). This section discusses AWS EC2 spot instances and resource acquisition strategies to put Tributary and its new approach to resource acquisition into context.

2.1 Preemptible resources in AWS EC2

In addition to non-preemptible, or reliable resources, most cloud infrastructures offer preemptible resources as a way to increase utilization in their datacenters. Preemptible resources are made available, on a best-effort basis, at decreased cost (in for-pay settings) and/or at lower priority (in private settings). This subsection describes preemptible resources in AWS EC2, both to provide a concrete example and because Tributary and most related work specialize to EC2 behavior.

EC2 offers “on-demand instances”, which are reliable VMs billed at a flat per-second rate. EC2 also offers the same VM types as “spot instances”, which are preemptible but are usually billed at prices significantly lower (70–80%) than the corresponding on-demand price. EC2 may preempt spot instances at any time, thus presenting users with a trade-off between reliability (on-demand) and cost savings (spot).

There are several properties of the AWS EC2 spot market behavior that affect customer cost savings and the likelihood of instance preemption. (1) Each instance type in each availability zone has a unique AWS-controlled spot market associated with it, and AWS’s spot markets are not truly free markets [9]. (2) Price movements among spot markets are not always correlated, even for the same instance type in a given region [23]. (3) Customers specify a bid in order to acquire a spot instance. The bid is the maximum price a customer is willing to pay for an instance in a specific spot market; once a bid is accepted by AWS, it cannot be modified. (4) A customer is billed the spot market price (not the bid price) for as long as the spot market price for the instance does not exceed the bid price or until the customer releases it voluntarily. (5) As of Oct 2nd, 2017, AWS charges for the usage of an EC2 instance up to the second, with one exception: if the spot market price of an instance exceeds the bid price during its first hour, the customer is refunded fully for its usage. No refund is given if the spot instance is revoked in any subsequent hour. We define the period where preemption makes the instance free as the preemption window.
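The billing rules in items (4) and (5) can be illustrated with a small sketch. It assumes, for simplicity, a constant market price over the instance's lifetime; the function name `spot_charge` and its parameters are illustrative and not part of any AWS API.

```python
SECONDS_PER_HOUR = 3600


def spot_charge(market_price_per_hour, seconds_used, preempted):
    """Return the charge in dollars for one spot instance.

    Assumes a constant market price (a simplification) and the refund rule
    described above: an instance preempted by AWS in its first hour is free.
    """
    if preempted and seconds_used < SECONDS_PER_HOUR:
        return 0.0  # preempted inside the preemption window: full refund
    # Otherwise the customer pays the market price (not the bid), per second.
    return market_price_per_hour * seconds_used / SECONDS_PER_HOUR


# Preempted after 30 minutes versus released voluntarily after 30 minutes.
print(spot_charge(0.10, 1800, preempted=True))
print(spot_charge(0.10, 1800, preempted=False))
```

Under this rule, an instance that is likely to be preempted within its first hour has a low expected cost, which is the effect the preemption window captures.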

When using EC2 spot instances, the bidding strategy plays an important role in both cost and preemption probability. Many bidding strategies for EC2 spot instances have been studied [9, 33, 30]. The most popular strategy by far is to bid the on-demand price to minimize the odds of preemption [23, 21], since AWS charges the market price rather than the bid price.

2.2 Cloud Resource Acquisition Schemes

Given a target resource count from a scaling policy, a resource acquisition scheme decides which resources to acquire based on attributes of resources (e.g., bid price or priority level). Many elastic control systems assume that all available resources are equivalent, such as would be true in a homogeneous cluster, which makes the acquisition scheme trivial. But, some others address resource selection and bidding strategy aspects of multiple available options. Tributary’s AcquireMgr employs novel resource acquisition algorithms, and we discuss related work here.

AWS AutoScale [2] is a service provided by AWS that maintains the resource footprint according to the target determined by a scaling policy. At initialization time, if using on-demand instances, the user specifies an instance type and availability zone. Whenever the scaling target changes, AutoScale acquires or releases instances to reach the new target. If using spot instances, the user can use a so-called “spot fleet” [4] consisting of multiple instance type and availability zone options. In this case, the user configures AutoScale to use one of two strategies. The lowestPrice strategy will always select the option with the cheapest current spot price among the specified options. The diversified strategy will use an equal number of instances from each option. Tributary bids aggressively and diversifies based on predicted preemption rates and observed inter-market correlation, resulting in both higher SLO attainment and lower cost than AutoScale.
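The two spot fleet strategies can be contrasted with a minimal sketch. This is not AWS code: the function names, the pool labels, and the handling of a remainder when the target does not divide evenly are assumptions made for illustration.

```python
def lowest_price(spot_prices, target):
    """Place the whole target in the pool with the cheapest current price."""
    cheapest = min(spot_prices, key=spot_prices.get)
    return {cheapest: target}


def diversified(spot_prices, target):
    """Spread the target as evenly as possible across all pools.

    Assumption: leftover instances go to the first pools in sorted order.
    """
    pools = sorted(spot_prices)
    base, extra = divmod(target, len(pools))
    return {pool: base + (1 if idx < extra else 0) for idx, pool in enumerate(pools)}


# Hypothetical prices in dollars per hour for three pools.
prices = {"pool-a": 0.031, "pool-b": 0.027, "pool-c": 0.040}
print(lowest_price(prices, 10))
print(diversified(prices, 10))
```

Neither strategy looks at preemption probability or inter-market correlation, which is the gap the paragraph above says Tributary addresses.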

Kingfisher [26] uses a cost-aware resource acquisition scheme based on using integer linear programming to determine a service’s resource footprint among a heterogeneous set of non-preemptible instances with fixed prices. Tributary also selects from among heterogeneous options, but addresses the additional challenges and opportunities introduced by embracing preemptible transient resources. Several works have explored ways of selecting and using spot instances. HotSpot [27] is a resource container that allows an application to suspend and automatically migrate to the most cost-efficient spot instance. While HotSpot works for single-instance applications, it is not suitable for elastic services since its migrations are not coordinated and it does not address bulk preemptions.

SpotCheck [25] proposes two methods of selecting spot markets to acquire instances in while always bidding at a configurable multiple of the spot instance’s corresponding on-demand price. The first method is greedy cheapest-first, which picks the cheapest spot market. The second method is stability-first, which chooses the most price-stable market based on past market price movement. SpotCheck relies on VM migration and hot spares (on-demand or otherwise) to address revocations, which incurs additional cost, while Tributary uses a diverse pool of spot instances to mitigate revocation risk.

BOSS [32] hosts key-value stores on spot instances by exploiting price differences across pools in different data-centers and creating an online algorithm to dynamically size pools within a constant bound of optimality. Tributary also constructs its resource footprint from different pools, within and possibly across data-centers. Whereas BOSS assumes non-changing storage capacity requirements, Tributary dynamically scales its resource footprint to maintain the specified latency SLO while adapting to changes in client workload.

Wang et al. [31] explore strategies to decide whether, in the face of changing application behavior, it is better to reserve discounted resources over longer periods or lease resources at normal rates on a shorter term basis. Their solution combines on-demand and “reserved” (long term rental at discount price) instances, neither of which are ever preempted by Amazon.

ExoSphere [24] is a virtual cluster framework for spot instances. Its instance acquisition scheme is based on market portfolio theory, relying on a specified risk averseness parameter (α). ExoSphere formulates the return of a spot instance acquisition as the difference between the on-demand cost and the expected cost based on past spot market prices. It then tries to maximize the return of a set of instance allocations with respect to risk, considering market correlations and α, determining the fraction of desired resources to allocate in each spot market being considered. For a given virtual cluster size, ExoSphere will acquire the corresponding number of instances from each market at the on-demand price. Unsurprisingly, since it was created for a different usage model, ExoSphere’s scheme is not a great fit for elastic services with latency SLOs. We implement ExoSphere’s scheme and show in Section 5.6 that Tributary achieves lower cost, because it bids aggressively (resulting in more preemptions), and higher SLO attainment, because it explicitly predicts preemptions and selects resource sets based on sufficient tolerance of bulk preemptions.

Proteus [16] is an elastic ML system that combines on-demand resources with aggressive bidding of spot resources to complete batch ML training jobs faster and cheaper. Rather than bidding the on-demand price, it bids close to market price and aggressively selects spot markets and bid prices that it predicts will result in preemption, in hopes of getting many partial hours of free resources. The few on-demand resources are used to maintain a copy of the dynamic state as spot instances come and go, and acquisitions are made and used to scale the parallel computation whenever they would reduce the average cost per unit work. Although Tributary uses some of the same mindset (aggressive use of preemptible resources), elastic services with latency SLOs are different than batch processing jobs; elastic services have a target resource quantity for each point in time, and having fewer usually leads to SLO violations, while having more often provides no benefit. Unsurprisingly, therefore, we find that Proteus’s scheme is not a great fit for such services. We implement Proteus’s acquisition scheme and show in Section 5.6 that Tributary achieves much higher SLO attainment, because it understands the resource target and explicitly uses diversity to mitigate bulk preemption effects. Tributary also uses a new and much more accurate preemption predictor.

3 Elastic Control in Tributary

AcquireMgr is Tributary’s resource acquisition component, and its approach differentiates Tributary from previous elastic control systems. It is coupled with a scaling policy, any of many popular options, which provides the time-varying resource quantity target based on client load. AcquireMgr uses ML models to predict the preemption probability of resources and exploits the relative independence of AWS spot markets to account for potential bulk preemptions by acquiring a diverse mix of preemptible resources collectively expected to satisfy the user-specified latency SLO. This section describes how AcquireMgr composes the resource mix while targeting minimal cost.

Resource Acquisition. AcquireMgr interacts with AWS to request and acquire resources. To do so, AcquireMgr builds sets of request vectors. Each request vector specifies the instance type, availability zone, bid price, and number of instances to acquire. We call this an allocation request. An allocation is defined as a set of instances of the same type acquired at the same time and price. AcquireMgr’s total footprint, denoted with the variable A, is a set of such allocations. Resource acquisition decisions are made under four conditions: (1) a periodic (one-minute) clock event fires, (2) an allocation reaches the end of its preemption window, (3) the scaling policy specifies a change in resource requirement, and/or (4) a preemption occurs. We term these conditions decision points.
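One way to picture a request vector and the four decision points is as plain data types. The sketch below is illustrative only: the class names, field names, and example values are assumptions, not identifiers from Tributary's implementation.

```python
from dataclasses import dataclass
from enum import Enum, auto


class DecisionPoint(Enum):
    """The four conditions under which acquisition decisions are made."""
    CLOCK_TICK = auto()      # periodic (one-minute) clock event
    WINDOW_EXPIRED = auto()  # an allocation reached the end of its preemption window
    TARGET_CHANGED = auto()  # the scaling policy changed the resource requirement
    PREEMPTION = auto()      # a preemption occurred


@dataclass(frozen=True)
class AllocationRequest:
    """One request vector: what to acquire, where, at what bid, and how many."""
    instance_type: str
    availability_zone: str
    bid_price: float  # dollars per instance-hour (hypothetical value below)
    count: int

    @property
    def pool(self):
        # Each (instance type, availability zone) pair is its own spot market.
        return (self.instance_type, self.availability_zone)


request = AllocationRequest("c4.large", "us-west-2a", bid_price=0.035, count=8)
print(request.pool, DecisionPoint.PREEMPTION.name)
```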

AcquireMgr abstracts away the resource type which is being optimized for. For the workloads described in this paper, virtual CPUs (VCPUs) are the bottleneck resource; however, it is possible to optimize for memory, network bandwidth, or other resource types instead. A service using Tributary provides its resource scaling characteristics to AcquireMgr in the form of a utility function υ(). This utility function maps the number of resources to the percentage of requests expected to meet the target latency, given the load on the web service. The shape of a utility function is service-specific and depends on how the service scales, for the expected load, with respect to the number of resources. In the simplest case where the web service is embarrassingly parallel, the utility function is linear with respect to the number of resources offered until 100% of the requests are expected to be satisfied, at which point the function turns into a horizontal line. As a concrete example, if an embarrassingly parallel service specifies that 100 instances are required to handle 10000 requests per second without any of the requests missing the target latency, a linear utility function will assume that 50 instances will allow the system to meet the target latency on 50% of the requests. Tributary allows applications to customize the utility function so as to accommodate the resource requirements of applications with various scaling characteristics.
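The linear utility function for the embarrassingly parallel case can be written down directly. This is a minimal sketch; the function and parameter names are assumptions, and utility is expressed as a fraction in [0, 1] rather than a percentage.

```python
def linear_utility(resources, resources_for_full_utility):
    """Fraction of requests expected to meet the target latency.

    Grows linearly with the number of resources and flattens at 1.0 once
    enough resources are available to satisfy every request.
    """
    if resources_for_full_utility <= 0:
        raise ValueError("resources_for_full_utility must be positive")
    return min(1.0, max(0.0, resources / resources_for_full_utility))


# The example from the text: 100 instances are needed for the current load,
# so 50 instances are expected to serve half of the requests on time.
print(linear_utility(50, 100))
print(linear_utility(120, 100))
```

A service that scales sublinearly would supply a different function with the same signature.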

In addition to providing υ(), the service also provides the application’s target SLO in terms of a percentage of requests required to meet the target latency. By exposing the target SLO as a customizable input, Tributary allows the application to control the Cost-SLO tradeoff. Upon receiving this information, AcquireMgr acquires enough resources to meet SLO in expectation while optimizing for expected cost. In deciding which resources to acquire, AcquireMgr uses the prediction models described in Sec. 3.1 to predict the probability that each allocation would be preempted. Using these predictions, AcquireMgr can compute the expected cost and the expected utility of a set of allocations (Sec. 3.2). AcquireMgr greedily acquires allocations until the expected utility is greater than or equal to the SLO percentage requirement (Sec. 3.3).

3.1 Prediction Models

When acquiring spot instances on AWS, there are three configurable parameters that affect preemption probability: instance type, availability zone and bid price. This section describes the models used by AcquireMgr to predict allocation preemption probabilities.

Previous work [16] proposed taking the historical median probability of preemption based on the instance type, availability zone and bid price. This approach does not consider time of day, day of week, price fluctuations and several other factors that affect preemption probabilities. AcquireMgr trains ML models considering such features to predict resource reliability.

Training Data and Feature Engineering. The prediction models are trained ahead of time with data derived from AWS spot market price histories. Each sample in the training dataset is a hypothetical bid, and the target variable, preempted, of our model is whether or not an instance acquired with the hypothetical bid is preempted before the end of its preemption window (1 hr). We use the following method to generate our data set: For each instance and bid delta (bid price above the market price with range [0.00001, 0.2]) we generate a set of hypothetical bids with the bid starting at a random point in the spot market history. For each bid, we look forward in the spot market price history. If the market price of the instance rises above the bid price at any point within the hour, we mark the sample as preempted. For each historical bid, we also record the ten prices immediately prior to the random starting point and their time-stamps.
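The labeling procedure can be sketched for a single hypothetical bid. The sketch assumes the price history is a time-sorted list of `(timestamp_seconds, price)` records; the function name, the record layout, and the choice to treat the record in effect at the start time as part of the recorded prior prices are all assumptions, not details taken from the paper.

```python
import bisect


def label_hypothetical_bid(history, start, bid_delta, window=3600, lookback=10):
    """Label one hypothetical bid placed at time `start`.

    history: list of (timestamp_seconds, price), sorted by timestamp.
    The bid is the market price in effect at `start` plus `bid_delta`.
    """
    times = [t for t, _ in history]
    idx = bisect.bisect_right(times, start) - 1  # record in effect at `start`
    if idx < 0:
        raise ValueError("start precedes the price history")
    bid = history[idx][1] + bid_delta
    # Look forward one preemption window for a price above the bid.
    end = bisect.bisect_right(times, start + window)
    preempted = any(price > bid for _, price in history[idx + 1:end])
    # Keep the most recent records (prices and time-stamps) as model input.
    recent = history[max(0, idx - lookback + 1):idx + 1]
    return {"bid": bid, "preempted": preempted, "recent": recent}


history = [(0, 0.10), (600, 0.11), (1800, 0.15), (5000, 0.30)]
print(label_hypothetical_bid(history, start=700, bid_delta=0.02)["preempted"])
print(label_hypothetical_bid(history, start=700, bid_delta=0.10)["preempted"])
```

A small bid delta yields a preempted sample here because the price rises past the bid within the hour, while the larger delta survives the window.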

To increase prediction accuracy, AcquireMgr engineers features from AWS spot market price histories. Our engineered features include: (1) Spot market price; (2) Average spot market price; (3) Bid delta; (4) Frequency of spot market price changing within past hour; (5) Magnitude of spot market price fluctuations within past two, ten, and thirty minutes; (6) Day of the week; (7) Time of day; (8) Whether the time of day falls within working hours (separate feature for all three time zones). These features allow AcquireMgr to construct a more complex prediction model, leading to a higher prediction accuracy (Sec. 5.7).
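A few of these features can be derived from a price history as follows. The sketch is an illustration under stated assumptions: "magnitude" is taken to be the maximum minus the minimum price among records inside the window, the average is taken over all records up to the current time, times are interpreted in UTC, and the working-hours flags are omitted because the text does not say which time zones are used.

```python
from datetime import datetime, timezone


def engineer_features(history, now, bid_delta):
    """Derive features from (unix_timestamp, price) records sorted by time.

    Requires at least one record at or before `now`.
    """
    past = [(t, p) for t, p in history if t <= now]
    current_price = past[-1][1]

    def swing(seconds):
        # Assumed definition of "magnitude": max minus min within the window.
        prices = [p for t, p in past if t >= now - seconds] or [current_price]
        return max(prices) - min(prices)

    # Count records in the past hour whose price differs from the one before.
    changes_last_hour = sum(
        1
        for (_, p0), (t1, p1) in zip(past, past[1:])
        if t1 >= now - 3600 and p1 != p0
    )
    when = datetime.fromtimestamp(now, tz=timezone.utc)
    return {
        "price": current_price,
        "avg_price": sum(p for _, p in past) / len(past),
        "bid_delta": bid_delta,
        "changes_last_hour": changes_last_hour,
        "swing_2m": swing(120),
        "swing_10m": swing(600),
        "swing_30m": swing(1800),
        "day_of_week": when.weekday(),  # Monday is 0
        "hour_of_day": when.hour,
    }


history = [(0, 0.10), (3000, 0.12), (5000, 0.12), (7000, 0.11), (7150, 0.13)]
features = engineer_features(history, now=7200, bid_delta=0.01)
print(features["changes_last_hour"], features["day_of_week"], features["hour_of_day"])
```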

Model Design. To capture the temporal nature of the EC2 spot market, AcquireMgr uses a Long Short-Term Memory Recurrent Neural Network (LSTM RNN) to predict instance preemptions. The LSTM RNN is a popular model for workloads where the ordering of training examples is important to prediction accuracy [29]. Examples of such workloads include language modeling, machine translation, and stock market prediction. Unlike feed forward neural networks, LSTM models take previous inputs into account when classifying input data. Modeling the EC2 spot market as a sequence of events significantly improves prediction accuracy (Sec. 5.7). The output of the model is the probability of the resource being preempted within the hour.

3.2 AcquireMgr

To make decisions about which resources to acquire or release, AcquireMgr computes the expected cost and expected utility of the set of instances it is considering at each decision point. Calculations of the expected values are based on probabilities of preemption computed by AcquireMgr’s trained LSTM model. This section describes how AcquireMgr computes these values.

Definitions. To aid in discussion, we first define the

notion of a resource pool. Each instance type in each

availability zone forms its own resource pool—in the

context of the EC2 spot instances, each such resource

pool has its own spot market. Given a set of allocations

A, where A is formulated as a jagged array, let A

i

be de-

fined as the i

th

entry of A corresponding to an array of

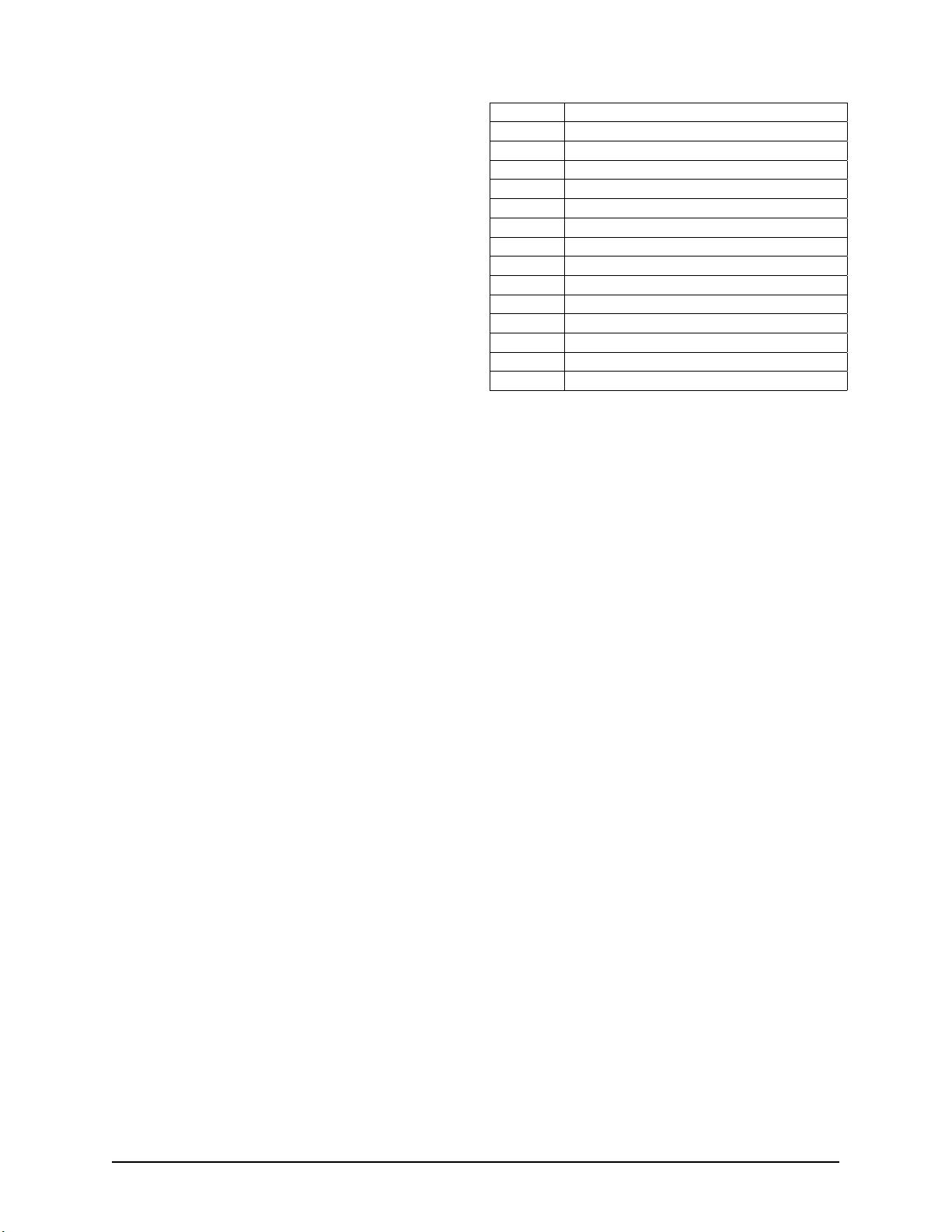

A Set of allocations as jagged array

A

i

Sorted array of allocations from resource pool i

a

i, j

Set of instances allocated from resource pool i

β

i, j

Probability that allocation a

i, j

is preempted

t

i, j

Time left in the preemption window for a

i, j

k

i, j

Number of instances in allocation a

i, j

P

i, j

Market price of allocation a

i, j

p

i, j

Bid price of allocation a

i, j

size(y) Size of the major dimension of array y

resc(y) Counts the total number of resources in y

λ

i

Regularization term for diversity

P(R = r) Probability that r resources remain in A

υ(r) The utility of having r resources remain in A

V

A

The expected utility of a set of allocations A

C

A

Expected cost of a set of allocations ($)

Table 1: Summary of parameters used by AcquireMgr

.

allocations from resource pool i sorted by bid price in as-

cending order. We define allocation a

i, j

as an allocation

from resource pool i (i.e., a

i, j

∈ A

i

) with the j

th

lowest

bid in that resource pool. We further denote p

i, j

as the

bid price of allocation a

i, j

, β

i, j

as the probability of pre-

emption of allocation a

i, j

, and t

i, j

as the time remaining

in the preemption window for allocation a

i, j

. Note that

p

i, j

≥ p

i, j−1

, which also implies β

i, j−1

≥ β

i, j

. Finally,

we define a size(A) function that returns the size of A’s

major dimension. See Table 1 for symbol reference.
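The jagged-array layout can be made concrete with a short sketch. It assumes each allocation is represented as a `(pool, bid_price, instance_count)` tuple and that resc counts instances; these representations and the helper names are illustrative rather than taken from the paper.

```python
from collections import defaultdict


def build_footprint(allocations):
    """Group allocations by resource pool and sort each pool by ascending bid.

    allocations: iterable of (pool, bid_price, instance_count) tuples.
    Returns a jagged list: one inner list of (bid_price, instance_count) per pool.
    """
    by_pool = defaultdict(list)
    for pool, bid, count in allocations:
        by_pool[pool].append((bid, count))
    return [sorted(by_pool[pool]) for pool in sorted(by_pool)]


def size(y):
    """Size of the major dimension of y."""
    return len(y)


def resc(footprint):
    """Total number of instances across all allocations (assumed resource unit)."""
    return sum(count for pool in footprint for _, count in pool)


A = build_footprint([
    (("c4.large", "us-west-2a"), 0.05, 2),
    (("m4.large", "us-west-2b"), 0.04, 3),
    (("c4.large", "us-west-2a"), 0.03, 1),
])
print(A, size(A), resc(A))
```

Within a pool, a higher bid is preempted only if every lower bid is preempted too, which is why the bids are kept sorted.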

Expected Cost. The total expected cost for a given footprint $A$ is calculated as the sum over the expected cost of individual allocations $C_A[a_{i,j}]$:

$$C_A = \sum_{i=1}^{\mathrm{size}(A)} \sum_{j=1}^{\mathrm{size}(A_i)} C_A[a_{i,j}] \tag{1}$$

AcquireMgr calculates the expected cost of an allocation by considering the probability of preemption within the preemption window $\beta_{i,j}$ for a given allocation $a_{i,j}$ at a given bid delta. There are exactly two possibilities: an allocation will either be preempted with probability $\beta_{i,j}$ or it will reach the end of its preemption window in the remaining $t_{i,j}$ minutes with probability $1 - \beta_{i,j}$, in which case we would voluntarily release the allocation. The expected cost can then be written down as:

$$C_A[a_{i,j}] = (1 - \beta_{i,j}) \cdot P_{i,j} \cdot k_{i,j} \cdot t_{i,j} + \beta_{i,j} \cdot 0 \cdot k_{i,j} \cdot t_{i,j} \tag{2}$$

where $k_{i,j}$ is the number of instances in the allocation, and $P_{i,j}$ is the market price for an instance of type $i$ at the time of acquisition.
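Equations (1) and (2) translate into a few lines of code. The sketch below assumes the market price and the remaining time use matching units (for example, dollars per instance-minute and minutes); the `Allocation` class and function names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class Allocation:
    beta: float          # probability of preemption within the window
    market_price: float  # P, price per instance per unit time at acquisition
    count: int           # k, number of instances in the allocation
    time_left: float     # t, time remaining in the preemption window


def expected_allocation_cost(a):
    """Equation (2): a preempted allocation is refunded, so it contributes zero."""
    survives = (1 - a.beta) * a.market_price * a.count * a.time_left
    preempted = a.beta * 0 * a.count * a.time_left
    return survives + preempted


def expected_footprint_cost(footprint):
    """Equation (1): sum over every allocation in every resource pool."""
    return sum(expected_allocation_cost(a) for pool in footprint for a in pool)


# Hypothetical footprint with two pools.
footprint = [
    [Allocation(beta=0.5, market_price=0.1, count=4, time_left=1.0)],
    [Allocation(beta=0.0, market_price=0.2, count=2, time_left=0.5),
     Allocation(beta=1.0, market_price=0.3, count=10, time_left=1.0)],
]
print(expected_footprint_cost(footprint))
```

The allocation with a preemption probability of 1 adds nothing to the expected cost, which shows why high-risk allocations can look attractive under the refund policy.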

Expected Utility. In addition to computing expected cost for a set of allocations, AcquireMgr computes the expected utility for a set of allocations. The expected utility is the expected percentage of requests that will meet the latency target given the set of allocations A. Expected utility takes into account the probability of allocation preemptions, providing AcquireMgr with a metric
