A) BATCH LAYER

The crux of the LA is the master dataset, which constantly receives new data in an append-only fashion. This approach is highly desirable because it maintains the immutability of the data.
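The append-only, immutable master dataset can be sketched in a few lines. This is a minimal in-memory illustration, assuming the data consists of timestamped facts about users' locations; `Fact`, `MasterDataset`, and `current_locations` are hypothetical names, not part of HDFS or any library. A change such as a user moving is recorded as a new fact rather than as an update, so the earlier value remains available.

```python
from dataclasses import dataclass
from typing import Dict, Iterator, List


@dataclass(frozen=True)  # frozen: a stored fact cannot be changed after creation
class Fact:
    timestamp: int  # hypothetical logical timestamp
    user: str
    location: str


class MasterDataset:
    """Hypothetical in-memory stand-in for an append-only master dataset."""

    def __init__(self) -> None:
        self._facts: List[Fact] = []

    def append(self, fact: Fact) -> None:
        # The only write operation: new facts are added, old ones are never overwritten.
        self._facts.append(fact)

    def __iter__(self) -> Iterator[Fact]:
        # Iterate over a snapshot so readers cannot mutate the stored list.
        return iter(tuple(self._facts))

    def __len__(self) -> int:
        return len(self._facts)


def current_locations(dataset: MasterDataset) -> Dict[str, str]:
    """Derive each user's latest location from the full history."""
    latest: Dict[str, Fact] = {}
    for fact in dataset:
        prev = latest.get(fact.user)
        if prev is None or fact.timestamp >= prev.timestamp:
            latest[fact.user] = fact
    return {user: fact.location for user, fact in latest.items()}


master = MasterDataset()
master.append(Fact(1, "alice", "Berlin"))
master.append(Fact(2, "bob", "Paris"))
master.append(Fact(3, "alice", "Rome"))  # a move is a new fact, not an update

print(current_locations(master))  # {'alice': 'Rome', 'bob': 'Paris'}
print(len(master))                # 3: Alice's earlier location is still stored
```

Deriving the current state is a query over the whole history, which is exactly the kind of computation the batch layer performs over the master dataset.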

In the book [3], Marz stresses the importance of immutable datasets. The overall purpose is to tolerate human or system errors and to allow reprocessing. Because values are overwritten in a mutable data model, the old values are lost; the immutability principle prevents this loss of data. Second, the immutable data model is simpler, because appending new data to the master dataset does not require the data to be indexed. The master dataset in the batch layer is ever-growing and is the most detailed source of data in the architecture. The master dataset permits random reads on the historical data.

The batch layer prefers recomputation algorithms over incremental algorithms. The problem with incremental algorithms is that they do not cope well with human mistakes: an error written into an incrementally updated view persists, whereas a view recomputed from the immutable master dataset is corrected by fixing the faulty code and running the computation again. Because of its recomputational nature, the batch layer produces simple batch views, as the complexity is handled during precomputation. The batch layer is also responsible for processing the historical data with high accuracy. Machine learning algorithms, for example, take time to train a model and give better results over time; such inherently exhaustive and time-consuming tasks are processed inside the batch layer.

In the Hadoop framework, the master dataset is persisted in the Hadoop Distributed File System (HDFS) [6]. HDFS is distributed and fault-tolerant, and it follows an append-only approach that fulfills the needs of the batch layer of the LA. Batch processing is performed by MapReduce jobs that run at regular intervals and compute batch views over the entire data stored in HDFS.
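The recomputation approach can be illustrated with a single-process simulation of a MapReduce job. This is a minimal sketch, assuming a toy master dataset of page-view records; the function names are hypothetical and this is not the Hadoop MapReduce API. Because the batch view is rebuilt from the entire immutable dataset, fixing a bug in the job and rerunning it yields a corrected view, with no need to repair earlier results.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, Iterator, List, Tuple

Record = Tuple[int, str]  # hypothetical (timestamp, url) page-view record
Pair = Tuple[str, int]    # intermediate (key, value) pair
Mapper = Callable[[Record], Iterable[Pair]]
Reducer = Callable[[str, List[int]], int]

# Hypothetical toy master dataset; in the LA it would live in HDFS.
MASTER: List[Record] = [
    (1, "/home"), (2, "/about"), (3, "/home"), (4, "/HOME"), (5, "/home"),
]


def map_phase(records: Iterable[Record], mapper: Mapper) -> Iterator[Pair]:
    # Map: emit (key, value) pairs for every record in the dataset.
    for record in records:
        yield from mapper(record)


def shuffle(pairs: Iterable[Pair]) -> Dict[str, List[int]]:
    # Shuffle: group all values by key, as the framework does between phases.
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups: Dict[str, List[int]], reducer: Reducer) -> Dict[str, int]:
    # Reduce: collapse each key's values into a single result.
    return {key: reducer(key, values) for key, values in groups.items()}


def compute_batch_view(records: Iterable[Record], mapper: Mapper,
                       reducer: Reducer) -> Dict[str, int]:
    # Recomputation: the view is rebuilt from scratch over *all* records.
    return reduce_phase(shuffle(map_phase(records, mapper)), reducer)


def count_reducer(key: str, values: List[int]) -> int:
    return sum(values)


def buggy_mapper(record: Record) -> Iterator[Pair]:
    # First version of the job: "/HOME" and "/home" are counted separately.
    _, url = record
    yield url, 1


def fixed_mapper(record: Record) -> Iterator[Pair]:
    # Corrected version: normalise the URL before counting.
    _, url = record
    yield url.lower(), 1


print(compute_batch_view(MASTER, buggy_mapper, count_reducer))
# {'/home': 3, '/about': 1, '/HOME': 1}
print(compute_batch_view(MASTER, fixed_mapper, count_reducer))
# {'/home': 4, '/about': 1}
```

The price of this simplicity is that every run touches the whole dataset, which is the source of the batch layer's latency.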

The main drawback of the batch layer is its high latency. The batch jobs must run over the entire master dataset and are therefore time-consuming. For example, some MapReduce jobs might run only once every two hours. Such jobs may process data that is already relatively old, because they cannot keep up with the inflow of streaming data. This is a serious limitation for real-time data processing, and the speed layer is introduced to overcome it.
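The latency argument can be made concrete with a small worked calculation. This is a sketch under stated assumptions: jobs start at a fixed interval (two hours, as in the example above), each job reads a snapshot of the master dataset when it starts, and each job finishes before the next one starts; the 1.5-hour runtime and the function name `worst_case_staleness` are hypothetical.

```python
def worst_case_staleness(interval_h: float, runtime_h: float) -> float:
    """Upper bound (hours) on how long a new record waits before appearing in a batch view.

    Assumes jobs start every interval_h hours, each job snapshots the master
    dataset when it starts, and a job finishes before the next one starts.
    """
    if not 0 < runtime_h <= interval_h:
        raise ValueError("expects 0 < runtime_h <= interval_h")
    # A record arriving just after a job's snapshot waits for the next job to
    # start (interval_h) and then for that job to finish (runtime_h).
    return interval_h + runtime_h


print(worst_case_staleness(2.0, 1.5))  # 3.5
```

Under these assumptions, a job scheduled every two hours can leave recent data out of the batch views for several hours, a gap that only a separate low-latency path can close.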

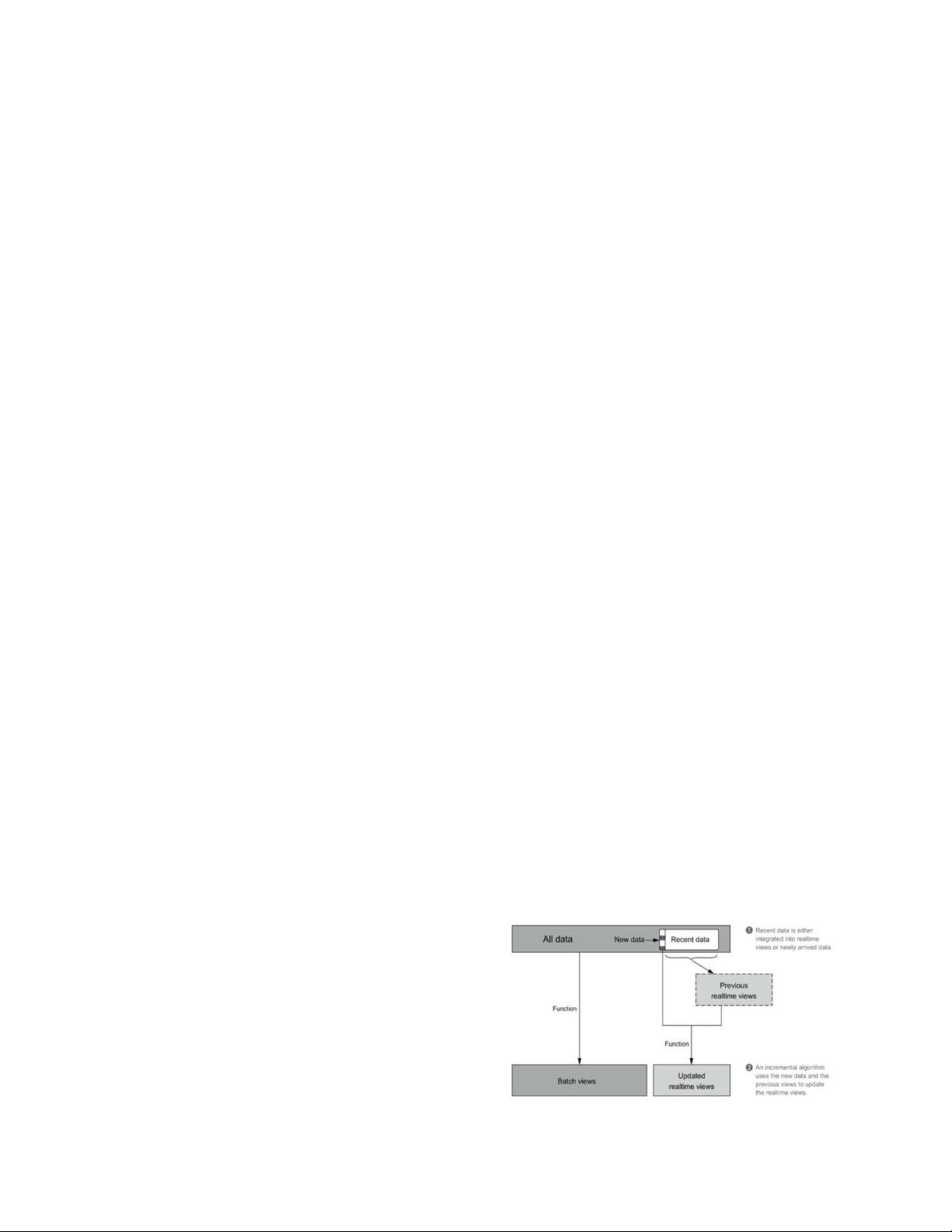

B) SPEED LAYER

According to [3] and [5], real-time data processing is made possible by the speed layer. The data streams are processed in real time without regard to completeness or fix-ups. The speed layer provides up-to-date query results and compensates for the high latency of the batch layer; its purpose is to fill the gap left by the time-consuming batch layer. To create real-time views of the most recent data, this layer sacrifices throughput in exchange for substantially lower latency. The real-time views are generated immediately after the data is received, but they are not as complete or precise as the batch views. The idea behind this design is that the accurate results of the batch layer override the real-time views once they arrive.
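The interplay between the two views can be sketched as a query that merges them. This is a minimal illustration, assuming page-view counting as the workload and a timestamp `horizon` that marks how far the latest batch view reaches; `LambdaQuery` and its methods are hypothetical names, not part of any framework. When a batch result arrives, it replaces the real-time results for the period it covers.

```python
from typing import Dict, List, Tuple

Event = Tuple[int, str]  # hypothetical (timestamp, url) page-view record


def count_by_url(events: List[Event]) -> Dict[str, int]:
    counts: Dict[str, int] = {}
    for _, url in events:
        counts[url] = counts.get(url, 0) + 1
    return counts


class LambdaQuery:
    """Hypothetical sketch: answers queries by merging a batch view and a real-time view."""

    def __init__(self) -> None:
        self.batch_view: Dict[str, int] = {}
        self.batch_horizon = 0          # batch view covers events with timestamp < horizon
        self.recent: List[Event] = []   # events not yet covered by the batch view
        self.realtime_view: Dict[str, int] = {}

    def on_event(self, event: Event) -> None:
        # Speed layer: update the real-time view immediately.
        self.recent.append(event)
        url = event[1]
        self.realtime_view[url] = self.realtime_view.get(url, 0) + 1

    def on_batch_complete(self, all_events: List[Event], horizon: int) -> None:
        # Batch result arrives: it overrides the real-time view for the period
        # it covers, so only newer events remain in the speed layer.
        self.batch_view = count_by_url([e for e in all_events if e[0] < horizon])
        self.batch_horizon = horizon
        self.recent = [e for e in self.recent if e[0] >= horizon]
        self.realtime_view = count_by_url(self.recent)

    def query(self, url: str) -> int:
        # Combine the accurate-but-stale batch view with the fresh real-time view.
        return self.batch_view.get(url, 0) + self.realtime_view.get(url, 0)


master: List[Event] = []
q = LambdaQuery()
for ev in [(1, "/home"), (2, "/home"), (3, "/about")]:
    master.append(ev)  # batch layer: append to the master dataset
    q.on_event(ev)     # speed layer: update the real-time view
print(q.query("/home"))  # 2, served entirely by the real-time view

q.on_batch_complete(master, horizon=3)  # batch job covered timestamps < 3
master.append((4, "/home"))
q.on_event((4, "/home"))
print(q.query("/home"))  # 3: 2 from the batch view + 1 from the real-time view
```

Discarding the covered part of the real-time view is what lets the speed layer keep only a small, transient amount of state.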

The separation of roles among the different layers accounts for the beauty of the LA. As mentioned earlier, the batch layer performs a resource-intensive operation by running over the entire master dataset. Therefore, the speed layer must adopt a different approach to meet the low-latency requirements. In contrast to the recomputation approach of the batch layer, the speed layer uses incremental computation. Incremental computation is more complex, but the data handled in the speed layer is vastly smaller and the views are transient. A random-read/random-write methodology is used to reuse and update the previous views. Fig. 2 illustrates the incremental computation strategy.
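The incremental strategy can be sketched as a read-modify-write loop against a key-value store. This is a minimal sketch, assuming page-view counting as the workload; `RealtimeView` is a hypothetical in-memory stand-in for the random-read/random-write database a real speed layer would use (the text does not name one). Each event touches only the key it affects, and the result matches what recomputation over the same events produces.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

Event = Tuple[int, str]  # hypothetical (timestamp, url) page-view record


class RealtimeView:
    """Hypothetical key-value store standing in for a random-read/random-write database."""

    def __init__(self) -> None:
        self._store: Dict[str, int] = {}

    def get(self, key: str) -> int:
        # Random read of the previous view value (0 if the key is new).
        return self._store.get(key, 0)

    def put(self, key: str, value: int) -> None:
        # Random write of the updated view value.
        self._store[key] = value

    def snapshot(self) -> Dict[str, int]:
        return dict(self._store)


def incremental_update(view: RealtimeView, event: Event) -> None:
    # Incremental computation: read the previous value, update it, write it back.
    # The cost per event does not depend on how much data has been seen so far.
    _, url = event
    view.put(url, view.get(url) + 1)


def recompute(events: Iterable[Event]) -> Dict[str, int]:
    # Batch-style recomputation over all events, for comparison.
    return dict(Counter(url for _, url in events))


stream = [(1, "/home"), (2, "/about"), (3, "/home")]
view = RealtimeView()
for event in stream:
    incremental_update(view, event)

print(view.snapshot())                       # {'/home': 2, '/about': 1}
print(view.snapshot() == recompute(stream))  # True
```

A counter is the easy case; for views whose updates are not simple increments, the incremental logic becomes harder to get right, which is the complexity trade-off the speed layer accepts in exchange for low latency.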