‘This article is protected by copyright. All rights reserved.’

Informative Priors

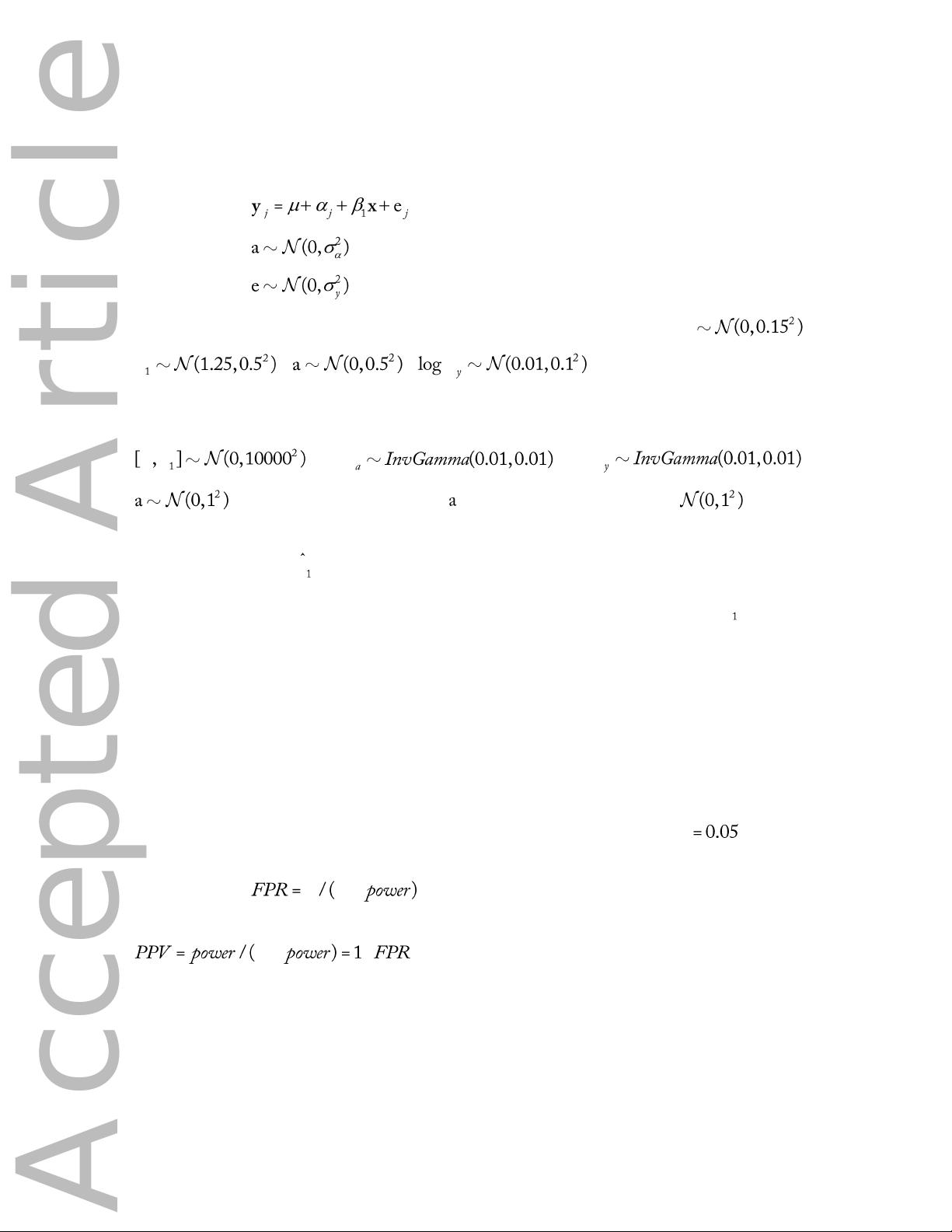

Many authors advocate the use of informative priors to alleviate problems, such as the winner’s curse, that arise from low statistical power. [Gelman and Hill(2007)] and [Gelman et al.(2013)] state that noninformative priors facilitate model development but should be replaced with informative priors during the final analyses. More philosophically, both [Kruschke(2010)] and [Hobbs and Hooten(2015)] argue that current scientific philosophy improperly idealizes science in a vacuum, wherein only the data on hand are used to draw inferences; science should instead utilize all available data. Despite these arguments, most textbooks on Bayesian data analysis provide analytical and computational examples using noninformative priors, avoid making explicit recommendations for informative priors, and do not illustrate how informative priors might affect results [Gelman and Hill(2007), Kruschke(2010), Korner-Nievergelt et al.(2015), but see Lemoine(2016a)]. Because of the ambiguity surrounding prior choice and a pedagogy of Bayesian statistics that emphasizes noninformative priors, informative priors remain underutilized in ecological data analysis (Table 1).

This is unfortunate because many statistical issues, including the winner’s curse, can be mitigated by informative priors. Weakly informative priors act as a form of statistical regularization, preventing the winner’s curse by shrinking parameter estimates towards zero unless there is sufficiently strong evidence of a large effect [McElreath(2015)]. Low-powered data result in greater shrinkage. In plainer words, large effect sizes arising from limited sample sizes and noisy data are treated skeptically. Consider a few practical examples of regularization. In the first example, imagine a t-test comparing the means of two groups. Standardizing the response variable and using a zero-centered prior with unit standard deviation (e.g. N(0, 1)) for the difference between groups states that effect sizes are unlikely to be greater than one standard deviation of the response. This is an explicit acknowledgment that most ecological effects are small [Møller and Jennions(2002), Jennions and Møller(2003)] and that unrealistically large effects in the data should be constrained unless supported by high statistical power (i.e. large sample sizes, low noise).
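
The shrinkage described in this first example can be illustrated with a minimal sketch. It assumes a standardized response, a zero-centered normal prior with standard deviation 1 (an assumption suggested by the "one standard deviation" statement above), and a standard error that is treated as known, so that the conjugate normal formulas apply. The function name `shrunk_estimate` and the example numbers are hypothetical and are not taken from the analyses reported here.

```python
import math


def shrunk_estimate(observed_diff, std_error, prior_sd=1.0):
    """Posterior mean and SD of a difference in means under a zero-centered
    normal prior, treating the standard error as known (conjugate normal model)."""
    data_precision = 1.0 / std_error**2
    prior_precision = 1.0 / prior_sd**2
    post_var = 1.0 / (data_precision + prior_precision)
    # The prior mean is zero, so only the data term contributes to the mean.
    post_mean = post_var * data_precision * observed_diff
    return post_mean, math.sqrt(post_var)


# Same observed difference (1.2 SD) at three hypothetical sample sizes per group.
for n in (5, 20, 200):
    se = math.sqrt(2.0 / n)  # SE of a two-group difference for a unit-variance response
    mean, sd = shrunk_estimate(1.2, se)
    print(f"n = {n:3d}: observed = 1.20, posterior mean = {mean:.2f}, posterior sd = {sd:.2f}")
```

With few observations the posterior mean is pulled noticeably towards zero, whereas with many observations it stays close to the observed difference, which is the sense in which low-powered data are shrunk more.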

Regularization of this kind makes Bayesian analyses with weakly informative priors more conservative, but potentially more accurate, than analyses based on noninformative priors. Weakly informative priors reduced statistical power at sample sizes below 50, but also reduced Type I error rates by nearly half (Fig. 5, see Appendix 3). As another example, I conducted 10,000 simulation experiments for an independent t-test with a statistical power of 0.2. For each simulation, I calculated the confidence interval for the effect size using both noninformative and weakly informative priors. Under noninformative priors, the confidence interval failed to include the true effect size in 3.59% of simulations, whereas the failure rate for weakly informative priors was only 2.87%. Shrinkage can also mitigate the need for post-hoc corrections for multiple comparisons. [Gelman et al.(2012)] simulated data from multiple treatment groups with small effect sizes and conducted all pairwise comparisons among groups. Out of 1,000 simulations, classical tests recorded at least one significant difference 47% of the time, whereas Bayesian analyses with shrinkage recorded at least one significant difference only 5% of the time. However, Bayesian differences were 35% more accurate (i.e. had the correct sign) than differences from classical analyses. [Gelman et al.(2012)] described the objective of regularization succinctly: “the price to pay for more reliable comparisons is to claim confidence in fewer of them”.
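
The trade-off in that quotation can be explored with a rough simulation sketch of a low-powered two-group comparison. This is not the simulation reported above, nor the multilevel model of [Gelman et al.(2012)]: it assumes a normal approximation with a 1.96 cutoff, a hypothetical true effect of 0.3 standard deviations, 10 observations per group, and a zero-centered normal prior with standard deviation 1. The function name `simulate` and all default values are illustrative assumptions.

```python
import math
import random
import statistics


def simulate(true_effect=0.3, n_per_group=10, prior_sd=1.0, n_sims=5000, seed=1):
    """For a flat prior and a zero-centered normal prior, return the number of
    intervals excluding zero and the mean absolute estimate among those cases."""
    rng = random.Random(seed)
    flat, shrunk = [], []
    for _ in range(n_sims):
        control = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        treated = [rng.gauss(true_effect, 1.0) for _ in range(n_per_group)]
        diff = statistics.fmean(treated) - statistics.fmean(control)
        se = math.sqrt(
            (statistics.variance(control) + statistics.variance(treated)) / n_per_group
        )
        # Flat prior: the estimate is the raw difference.
        if abs(diff) > 1.96 * se:
            flat.append(abs(diff))
        # Normal prior: precision-weighted shrinkage of the difference towards zero.
        weight = prior_sd**2 / (prior_sd**2 + se**2)
        post_mean, post_sd = weight * diff, se * math.sqrt(weight)
        if abs(post_mean) > 1.96 * post_sd:
            shrunk.append(abs(post_mean))
    return {
        "flat": (len(flat), statistics.fmean(flat)),
        "shrunk": (len(shrunk), statistics.fmean(shrunk)),
    }


results = simulate()
for label, (claims, mean_abs) in results.items():
    print(f"{label:>6}: {claims} claims, mean |estimate| = {mean_abs:.2f} (true effect = 0.30)")
```

Under these assumptions the shrunken analysis can never claim more effects than the flat-prior analysis, because its threshold is stricter by construction, and the estimates it does report should on average sit closer to the true effect.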