0

5000

10000

15000

20000

25000

30000

1 4 16 64 256 1024

0

50

100

150

200

250

300

350

400

Throughput, tasks/sec

Number of threads

Throughput

Latency

Linear (ideal) latency

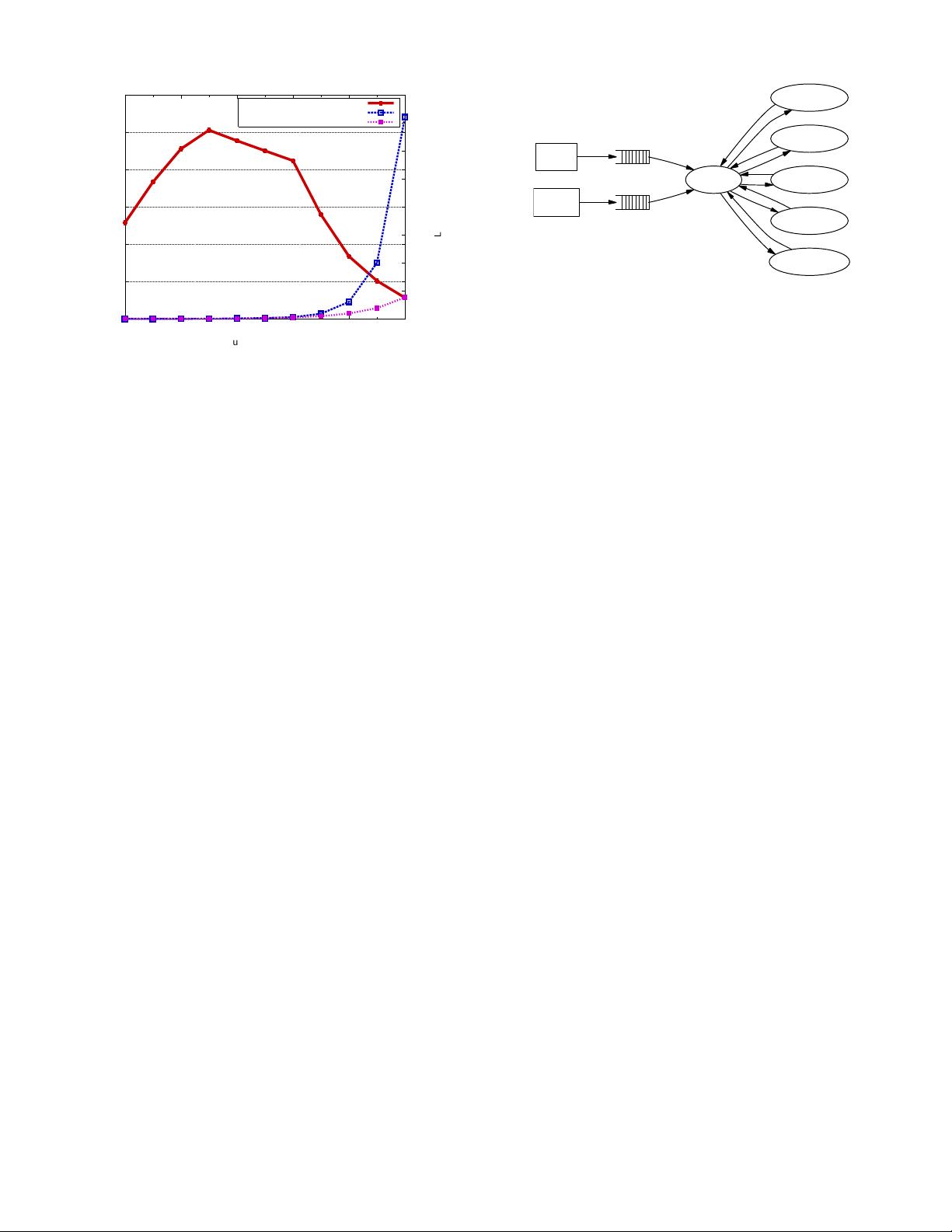

Figure 2: Threaded server throughput degradation: This benchmark mea-

sures a simple threaded server which creates a single thread for each task in the

pipeline. After receiving a task, each thread performs an 8 KB read from a disk

file; all threads read from the same file, so the data is always in the buffer cache.

Threads are pre-allocated in the server to eliminate thread startup overhead

from the measurements, and tasks are generated internally to negate network

effects. The server is implemented in C and is running on a 4-way 500 MHz

Pentium III with 2 GB of memory under Linux 2.2.14. As the number of con-

current tasks increases, throughput increases until the number of threads grows

large, after which throughput degrades substantially. Response time becomes

unbounded as task queue lengths increase; for comparison, we have shown the

ideal linear response time curve (note the log scale on the x axis).

identify internal performance bottlenecks in order to perform tuning

and load conditioning. Consider a simple threaded Web server in which

some requests are inexpensive to process (e.g., cached static pages) and

others are expensive (e.g., large pages not in the cache). With many

concurrent requests, it is likely that the expensive requests could be the

source of a performance bottleneck, for which it is desirable to perform

load shedding. However, the server is unable to inspect the internal

request stream to implement such a policy; all it knows is that the thread

pool is saturated, and must arbitrarily reject work without knowledge of

the source of the bottleneck.

Resource containers [7] and the concept of paths from the Scout op-

erating system [41, 49] are two techniques that can be used to bound

the resource usage of tasks in a server. These mechanisms apply ver-

tical resource management to a set of software modules, allowing the

resources for an entire data flow through the system to be managed as a

unit. In the case of the bottleneck described above, limiting the resource

usage of a given request would avoid degradation due to cache misses,

but allow cache hits to proceed unabated.

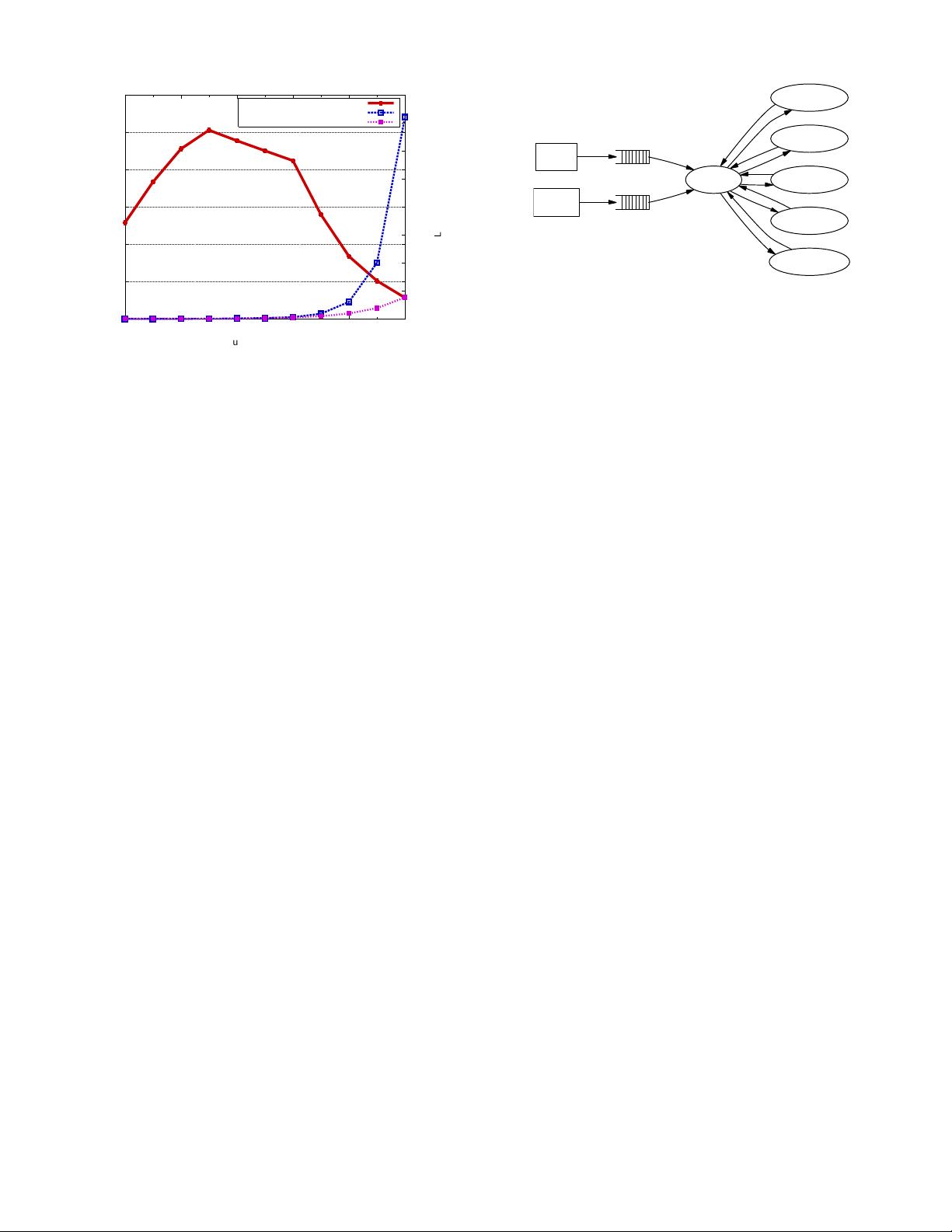

2.3 Event-driven concurrency

The scalability limits of threads have led many developers to eschew

them almost entirely and employ an event-driven approach to manag-

ing concurrency. In this approach, shown in Figure 3, a server consists

of a small number of threads (typically one per CPU) that loop continu-

ously, processing events of different types from a queue. Events may be

generated by the operating system or internally by the application, and

generally correspond to network and disk I/O readiness and completion

notifications, timers, or other application-specific events. The event-

driven approach implements the processing of each task as a finite state

machine, where transitions between states in the FSM are triggered by

events. In this way the server maintains its own continuation state for

each task rather than relying upon a thread context.

The event-driven design is used by a number of systems, including

scheduler

network

disk

request FSM 1

request FSM 2

request FSM 3

request FSM 4

request FSM N

Figure 3: Event-driven server design: This figure shows the flow of events

through an event-driven server. The main thread processes incoming events from

the network, disk, and other sources, and uses these to drive the execution of

many finite state machines. Each FSM represents a single request or flow of

execution through the system. The key source of complexity in this design is the

event scheduler, which must control the execution of each FSM.

the Flash [44], thttpd [4], Zeus [63], and JAWS [24] Web servers, and

the Harvest [12] Web cache. In Flash, each component of the server

responds to particular types of events, such as socket connections or

filesystem accesses. The main server process is responsible for contin-

ually dispatching events to each of these components, which are imple-

mented as library calls. Because certain I/O operations (in this case,

filesystem access) do not have asynchronous interfaces, the main server

process handles these events by dispatching them to helper processes

via IPC. Helper processes issue (blocking) I/O requests and return an

event to the main process upon completion. Harvest’s structure is very

similar: it is single-threaded and event-driven, with the exception of the

FTP protocol, which is implemented by a separate process.

The tradeoffs between threaded and event-driven concurrency mod-

els have been studied extensively in the JAWS Web server [23, 24].

JAWS provides a framework for Web server construction allowing the

concurrency model, protocol processing code, cached filesystem, and

other components to be customized. Like SEDA, JAWS emphasizes

the importance of adaptivity in service design, by facilitating both static

and dynamic adaptations in the service framework. To our knowledge,

JAWS has only been evaluated under light loads (less than 50 concur-

rent clients) and has not addressed the use of adaptivity for conditioning

under heavy load.

Event-driven systems tend to be robust to load, with little degrada-

tion in throughput as offered load increases beyond saturation. Figure 4

shows the throughput achieved with an event-driven implementation of

the service from Figure 2. As the number of tasks increases, the server

throughput increases until the pipeline fills and the bottleneck (the CPU

in this case) becomes saturated. If the number of tasks in the pipeline is

increased further, excess tasks are absorbed in the server’s event queue.

The throughput remains constant across a huge range in load, with the

latency of each task increasing linearly.

An important limitation of this model is that it assumes that event-

handling threads do not block, and for this reason nonblocking I/O

mechanisms must be employed. Although much prior work has in-

vestigated scalable I/O primitives [8, 9, 33, 46, 48], event-processing

threads can block regardless of the I/O mechanisms used, due to inter-

rupts, page faults, or garbage collection.

Event-driven design raises a number of additional challenges for the

application developer. Scheduling and ordering of events is probably

the most important concern: the application is responsible for deciding

when to process each incoming event and in what order to process the

FSMs for multiple flows. In order to balance fairness with low response

time, the application must carefully multiplex the execution of multiple