the storage allocation for buckets and overflow buckets, and so

on. A bucket page and one or more overflow pages (if any)

form the storage space for a bucket. The first page of a bucket

is the bucket page and other pages of the bucket are the

overflow pages. The bucket page and overflow pages in a

bucket are connected by a bi-directional link. Besides, the

bitmap page monitors the usage of overflow pages and the

bitmap page itself.

In the disk-oriented linear hashing, the bucket pages are

allocated in batch, and the number of each allocation is power

of 2. It means that we always first pre-allocate

bucket

pages each time, which are contiguously stored on the disk,

and then allocate some overflow pages behind these bucket

pages according to the requirements. For example, initially we

allocate bucket pages for bucket 0 and 1, and then when need

to allocate bucket 2, we allocate bucket pages for bucket 2 and

3. Analogously, when need to allocate bucket 4, we allocate

bucket pages for bucket 4-7, and so on. In addition, every time

before we pre-allocate bucket pages, we record a total count of

overflow pages and bitmap pages allocated by far in an array

called hashm_spare, which is stored in the metadata page. By

this way, using Formula (1), we can easily locate a bucket in an

index file through the bucket number (denoted as

). Figure 2

gives an example of the linear hashing’s storage allocation and

management.

2

1 _ log ( 1

1(

)1(0)

0)

n nn

n

b hashm spare b b

block numb

b

er

++ + − ≠

=

=

(1

B. Flash-Oriented Hash Indexes

In recent years, there are some studies focusing on

optimizing hash indexes on flash memory. MicroHash [11] is

an efficient external memory index structure for Wireless

Sensor Devices (WSDs), which stores data on flash by time or

value. However, the MicroHash can only be applied in an

architecture of a wireless sensor which performs the data

manipulations directly to raw NAND flash. MLDH [3] is a

multi-layered hash index, and the capacity of each level is

twice as its upper level. Updates to the MLDH is first buffered

in an in-memory index structure named

. When

is full,

it is merged with hash indexes on flash to produce a new hash

index. Though the MLDH has

construction time, it

can reduce flash writes if top levels of hash indexes are

buffered in main memory. Wang et al. [5] proposed a flash-

based self-adaptive extendible hash index in which each bucket

occupies a block (erase unit) of flash. A bucket, or a block,

consists of both data region and log region, which is similar to

the IPL storage model. The log region serves update and delete

operations, so in-place updates to the data region can be

delayed. In addition, a Split-or-Merge (SM) factor, which is

dynamically adjusted according to the log/data ratio, is added

to make the index self-adaptive. Hybrid Hash Index [6, 35]

delays split operations which cause additional writes and erase

operations by using overflow buckets. If the number of

overflow buckets attached to a bucket has reached the ceiling,

the Hybrid Hash Index determines whether a split operation is

triggered according to the ratio of update and delete records in

that bucket. Li et al. [1] proposed a Lazy-Split schema to

reduce writes at the expense of more reads. They declared that

the Lazy-split hash can adapt itself dynamically to different

search ratios by tuning the Split-cursor, but they did not offer

an effective method of tuning it.

In summary, most of the previous work focuses on

improving the update efficiency of hash indexes but neglecting

the degradation of the search performance. On the contrary, we

propose to optimize both update and search performance by

dynamically adapting the hash index structure according to the

change of access patterns. In Section V, we will compare our

proposal with the linear hashing and the Lazy-Split hash index

to demonstrate the efficiency of our proposal.

III. S

ELF-ADAPTIVE LINEAR HASHING

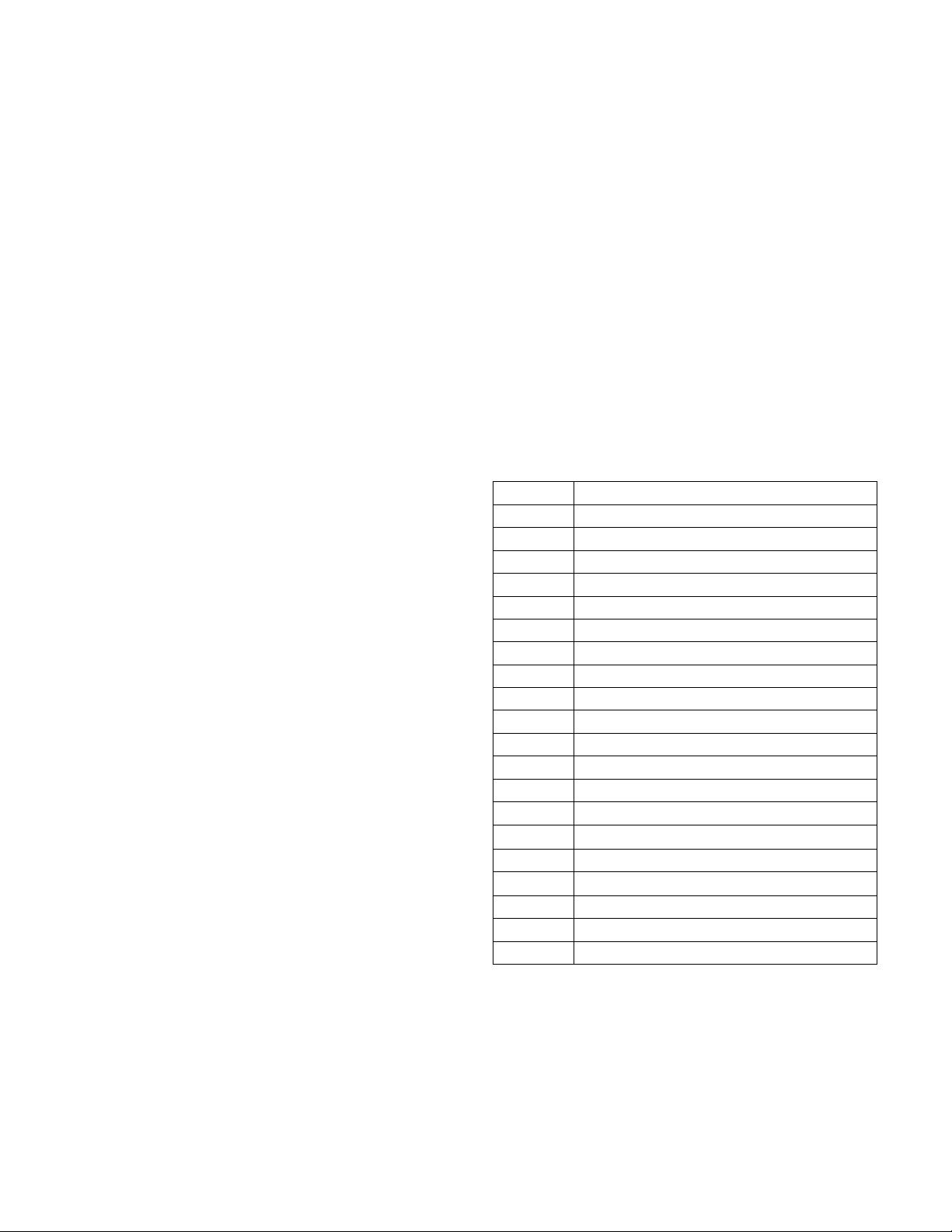

TABLE I.

N

OTATIONS USED IN THIS PAPER

Notation

w

Average cost of writing a flash page

w/n

a coarse-grained flash-page write

lf

l

pper bound) of the load factor

B

ity of a bucket page

b

buckets

g

of fixed buckets contained in a group

z

r

m

f

alse positive probability of Bloom filters

s

of log pages in the log region

d

count of log pages in the log region

jth Bucket

ith group

kth set, c is the count of bits used to generate the set

Bit(x)

ompute the bit number of the binary value of x

h(x, j)

et the last j bits of x

h(K)

unction to get the hash value of key K, and h(K) < b

In this section, we present the design of SAL-hashing. SAL-

hashing aims to reduce small random writes while maintaining

high search performance. The notations used throughout the

paper are summarized in Table I.

435