Distributed Hierarchical GPU Parameter Server for Massive Scale Deep Learning Ads Systems

justification of adopting DNN models for CTR prediction.

•

Hashing reduces the accuracy. Even with

k = 2

34

, the

test AUC is dropped by 0.7%.

•

Hash+DNN is a good combination for replacing LR.

Compared to the original baseline LR model, we can re-

duce the number of nonzero weights from 31B to merely

14.6M without affecting the accuracy.

Table 2 summarizes the experiments on web search ads data.

The trend is essentially similar to Table 1. The main differ-

ence is that we cannot really propose to use Hash+DNN for

web search ads CTR models, because that would reduce the

accuracy of current DNN-based models and consequently

would affect the revenue for the company.

Table 2. OP+OSRP for Web Search Sponsored Ads Data

# Nonzero Weights Test AUC

Baseline LR 199,359,034,971 0.7458

Baseline DNN 0.7670

Hash+DNN (k = 2

32

) 3,005,012,154 0.7556

Hash+DNN (k = 2

31

) 1,599,247,184 0.7547

Hash+DNN (k = 2

30

) 838,120,432 0.7538

Hash+DNN (k = 2

29

) 433,267,303 0.7528

Hash+DNN (k = 2

28

) 222,780,993 0.7515

Hash+DNN (k = 2

27

) 114,222,607 0.7501

Hash+DNN (k = 2

26

) 58,517,936 0.7487

Hash+DNN (k = 2

24

) 15,410,799 0.7453

Hash+DNN (k = 2

22

) 4,125,016 0.7408

Summary.

This section summarizes our effort on develop-

ing effective hashing methods for ads CTR models. The

work was done in 2015 and we had never attempted to pub-

lish the paper. The proposed algorithm, OP+OSRP, actually

still has some novelty to date, although it obviously com-

bines several previously known ideas. The experiments are

exciting in a way because it shows that one can use a single

machine to store the DNN model and can still achieve a

noticeable increase in AUC compared to the original (large)

LR model. However, for the main ads CTR model used in

web search which brings in the majority of the revenue, we

observe that the test accuracy is always dropped as soon

as we try to hash the input data. This is not acceptable in

the current business model because even a

0.1%

decrease in

AUC would result in a noticeable decrease in revenue.

Therefore, this report helps explain why we introduce the

distributed hierarchical GPU parameter server in this paper

to train the massive scale CTR models, in a lossless fashion.

3 DISTRIBUTED HIERARCHICAL

PARAMETER SERVER OVERVIEW

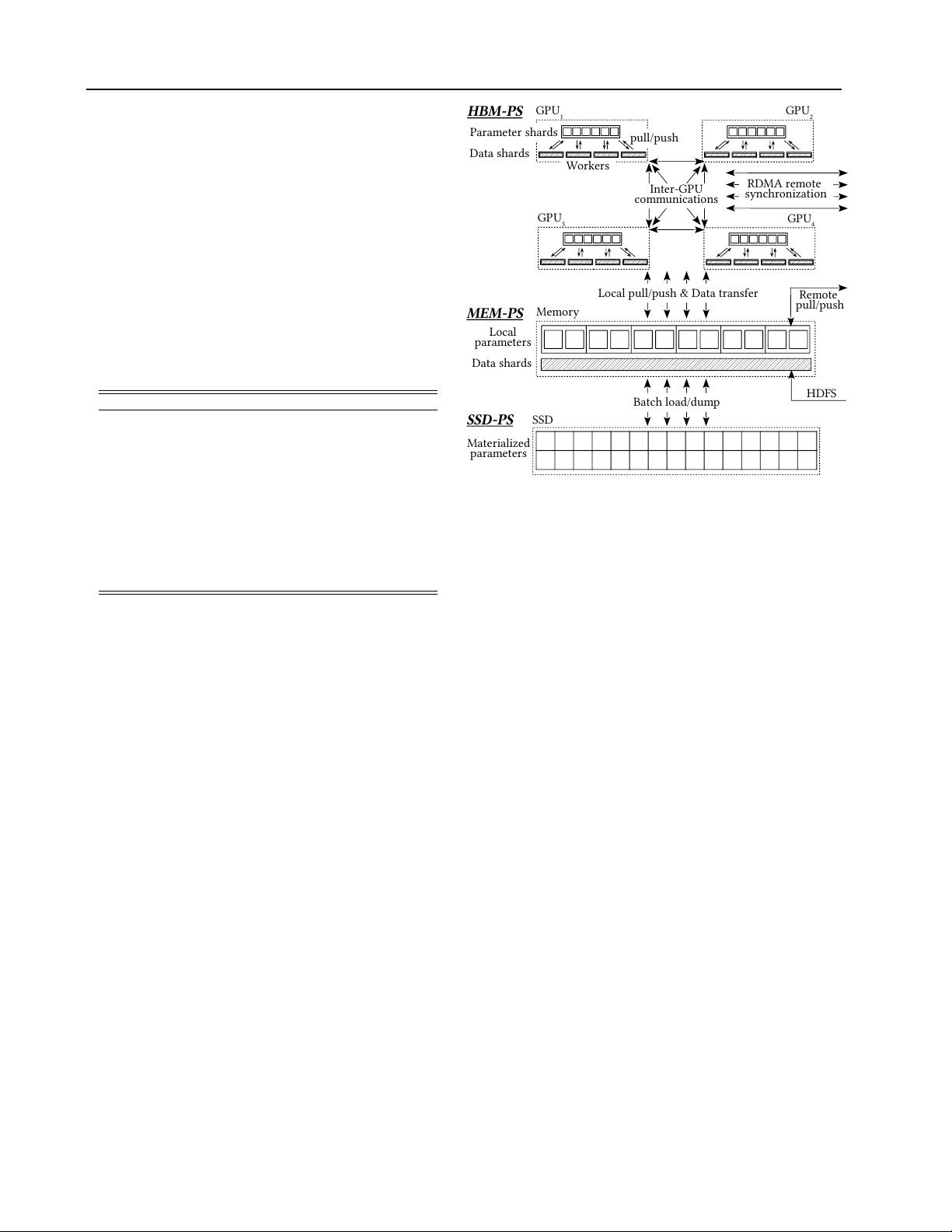

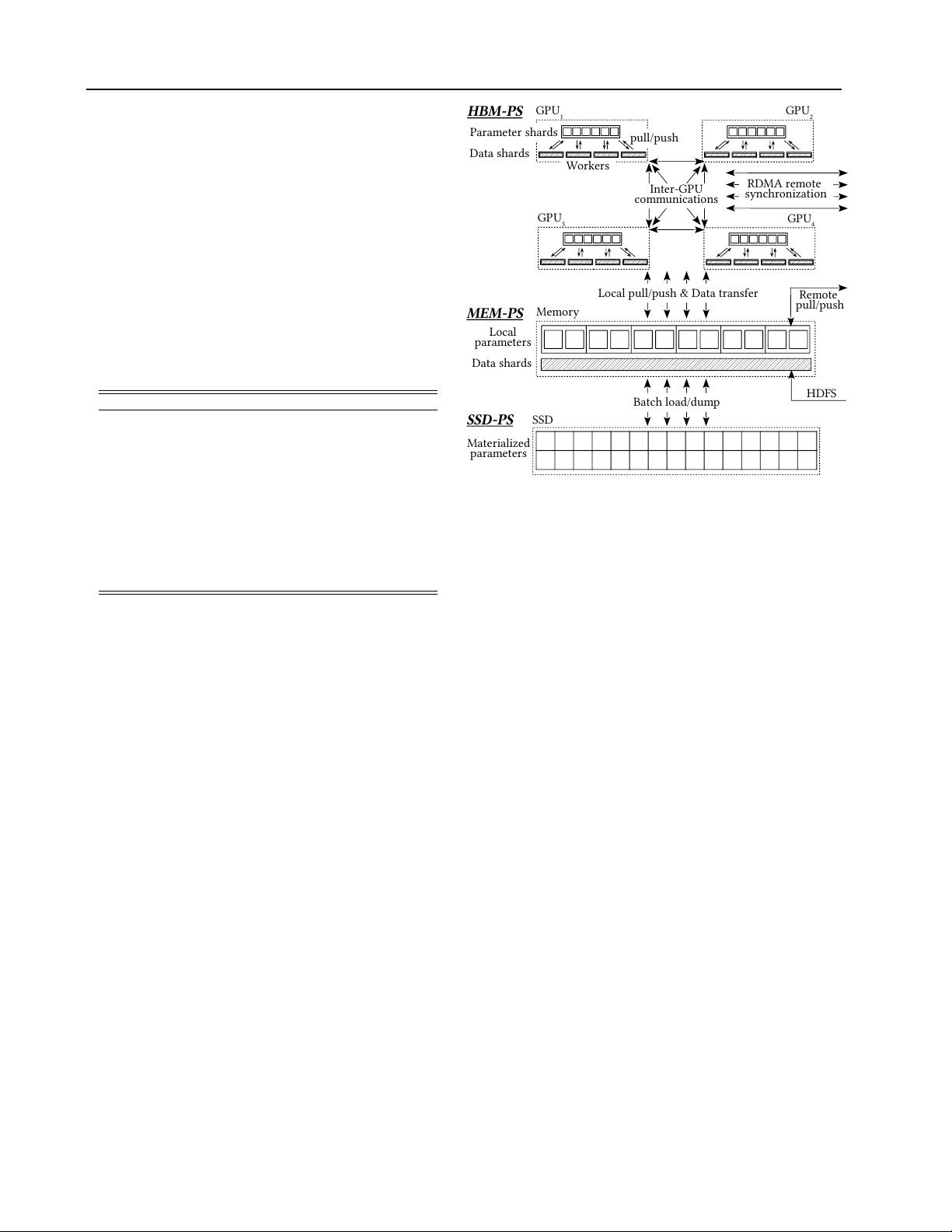

In this section, we present the distributed hierarchical param-

eter server overview and describe its main modules from

a high-level view. Figure 2 illustrates the proposed hier-

archical parameter server architecture. It contains three

major components: HBM-PS, MEM-PS and SSD-PS.

Workers

pull/push

Parameter shards

GPU

3

Inter-GPU

communications

Data shards

GPU

1

GPU

4

GPU

2

HDFS

Memory

Local

parameters

Data shards

SSD

Batch load/dump

Materialized

parameters

Local pull/push & Data transfer

SSD-PS

MEM-PS

HBM-PS

Remote

pull/push

RDMA remote

synchronization

Figure 2. Hierarchical parameter server architecture.

Workflow.

Algorithm 1 depicts the distributed hierarchi-

cal parameter server training workflow. The training data

batches are streamed into the main memory through a net-

work file system, e.g., HDFS (line 2). Our distributed train-

ing framework falls in the data-parallel paradigm (Li et al.,

2014; Cui et al., 2014; 2016; Luo et al., 2018). Each node

is responsible to process its own training batches—different

nodes receive different training data from HDFS. Then, each

node identifies the union of the referenced parameters in

the current received batch and pulls these parameters from

the local MEM-PS/SSD-PS (line 3) and the remote MEM-

PS (line 4). The local MEM-PS loads the local parameters

stored on local SSD-PS into the memory and requests other

nodes for the remote parameters through the network. Af-

ter all the referenced parameters are loaded in the memory,

these parameters are partitioned and transferred to the HBM-

PS in GPUs. In order to effectively utilize the limited GPU

memory, the parameters are partitioned in a non-overlapped

fashion—one parameter is stored only in one GPU. When a

worker thread in a GPU requires the parameter on another

GPU, it directly fetches the parameter from the remote GPU

and pushes the updates back to the remote GPU through

high-speed inter-GPU hardware connection NVLink (Foley

& Danskin, 2017). In addition, the data batch is sharded

into multiple mini-batches and sent to each GPU worker

thread (line 5-10). Many recent machine learning system

studies (Ho et al., 2013; Chilimbi et al., 2014; Cui et al.,

2016; Alistarh et al., 2018) suggest that the parameter stale-

ness shared among workers in data-parallel systems leads to

slower convergence. In our proposed system, a mini-batch

contains thousands of examples. One GPU worker thread

is responsible to process a few thousand mini-batches. An