
INTRODUCTION

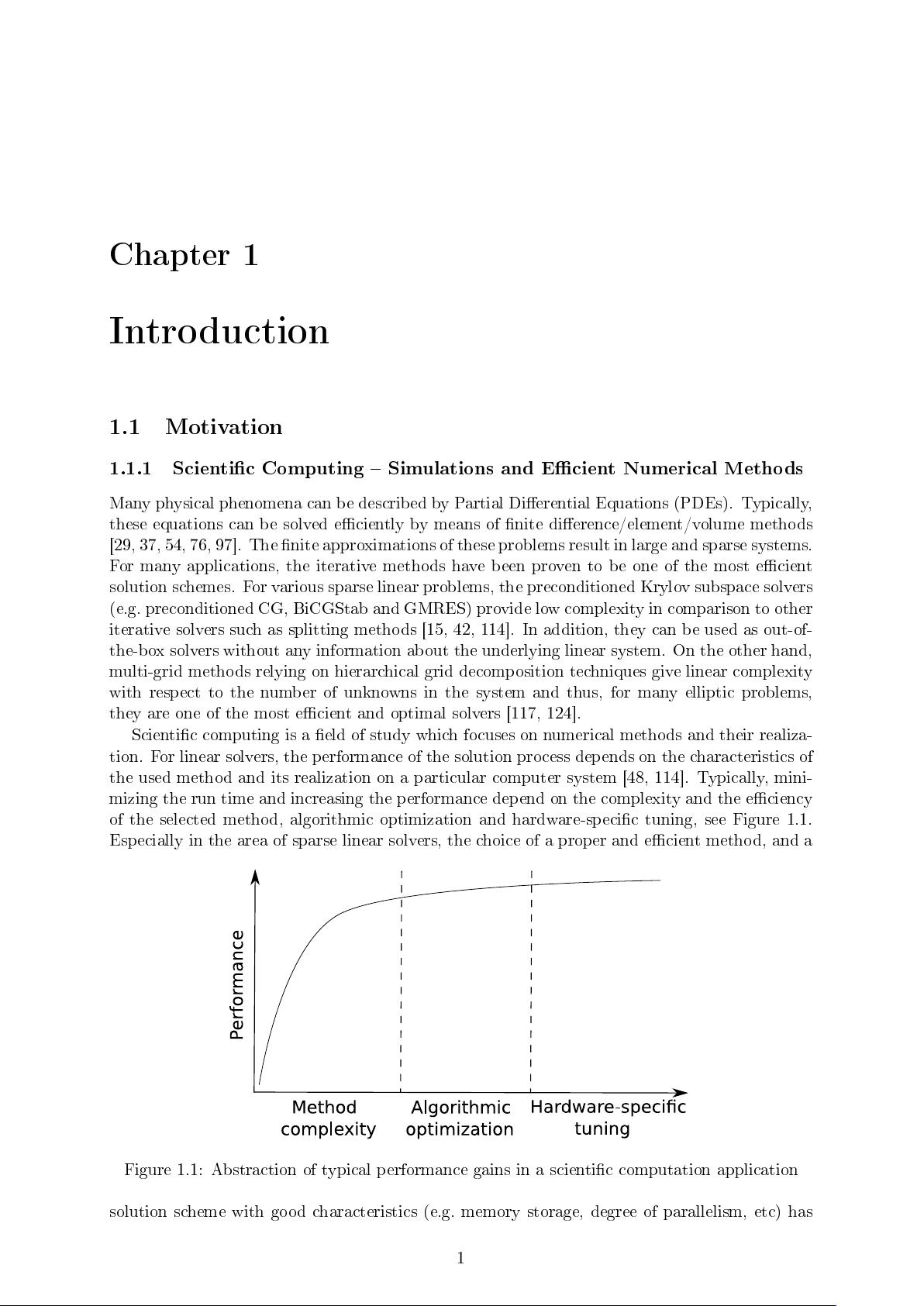

the largest impact on the performance profile. For many applications, algorithm optimization and better software design can deliver additional performance gains. Typically, hardware-specific tuning such as loop unrolling and peeling, instruction-level parallelism, vector units, etc. can speed up the solution phase only by a limited factor.

Furthermore, the time of the solution process depends on the specific hardware features of the system. Therefore, we need to ensure that the proper solution process takes into account the characteristics of the selected method, specific implementation details and hardware features. Numerical methods that are aware of the hardware features and utilize the platform efficiently provide the best performance.

1.1.2 Hardware Shifts

For most software products, single-core processors have proven to provide good hardware performance, portability and forward compatibility. In this context, portability and compatibility are defined as the ability to move a program from one computer system to another without any code modification. To increase the performance of single-core processors, the major microprocessor producers rely strongly on hardware improvements such as instruction pipelining, out-of-order execution, prefetching schemes and, most importantly, increases in the clock frequency [56]. These techniques ensure better performance for the majority of sequential programs. However, due to physical limitations of semiconductor technology, these trends are not sustainable [9]. One of the major obstacles to further increasing the clock frequency is the power constraint combined with heat dissipation restrictions. In the last few years, the combined restrictions of memory bandwidth and latency, as well as the limited acceleration factors of instruction-level parallelism, have caused a hardware shift from single-core to multi-core and many-core processors and devices.

1.1.3 Emerging Multi-core and Many-core Devices

Emerging multi-core and many-core technologies differ from the previous single-core concept mostly by providing more cores on the chip. Furthermore, the internal memory structure of the microprocessors is evolving: the local internal processor memory is moving from caches that are large, automatic and transparent to small and mostly manually managed local or shared memory. This is a necessary step in order to provide a scalable internal memory system for handling the accesses and transfers from the global memory to the processor. In addition, the compute power is rearranged from a few fat computational cores to many lighter compute units in different homogeneous or heterogeneous setups. Typical examples are Graphics Processing Unit (GPU) devices [104, 105], the Sony Toshiba IBM Cell Broadband Engine (STI Cell BE) processor [67] and state-of-the-art technologies such as the Intel Many Integrated Core (MIC) or Single-Chip Cloud (SCC) architecture [72, 74].

1.1.4 Software Impact

The hardware shifts and emerging multi-core and many-core devices have a significant software impact. The largest problem arises from the fact that old legacy codes are not able to automatically take advantage of the new hardware technologies. Due to the growing peak performance gap between single-core and multi-core/many-core devices, single-threaded programs tend to perform even worse on the emerging platforms. Theoretically, on a dual-core system (typical Intel/AMD CPUs in 2006 [71]) a sequential program would utilize 50% of the peak performance of the machine, while on a 500-core chip (typical NVIDIA GPUs in 2011 [104]) it would utilize only 0.2%. Furthermore, programs designed for clusters do not utilize the full power potential of modern multi-core CPUs due to the different synchronization mechanisms. These programs are not able to run on any of the GPU devices, since none of the many-core platforms supports explicit communication control.
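The 50% and 0.2% figures quoted above follow from a simple ratio. The sketch below reproduces them under an idealized assumption that is not stated in the text: all cores are identical and peak performance scales linearly with the core count, so a program that keeps one core busy reaches 1/p of the peak on a p-core device. The function name `sequential_utilization` is hypothetical and serves only as an illustration.

```python
def sequential_utilization(cores: int) -> float:
    """Fraction of peak performance reached when only one core is busy.

    Assumption (idealized): all cores are identical and the peak
    performance scales linearly with the core count, so a single
    busy core yields 1 / cores of the peak.
    """
    if cores < 1:
        raise ValueError("cores must be a positive integer")
    return 1.0 / cores


# The two configurations mentioned in the text: dual-core CPU, 500-core chip.
for cores in (2, 500):
    print(f"{cores:>3} cores: {sequential_utilization(cores):.1%} of peak")
```

Real devices deviate from this model (for example, lightweight GPU cores are individually slower than CPU cores), so the ratio should be read as an upper-bound estimate rather than a measurement.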