[Figure 2 labels: a Mesos master with two standby masters and a ZooKeeper quorum; Hadoop and MPI schedulers; three Mesos slaves hosting Hadoop and MPI executors and their tasks.]

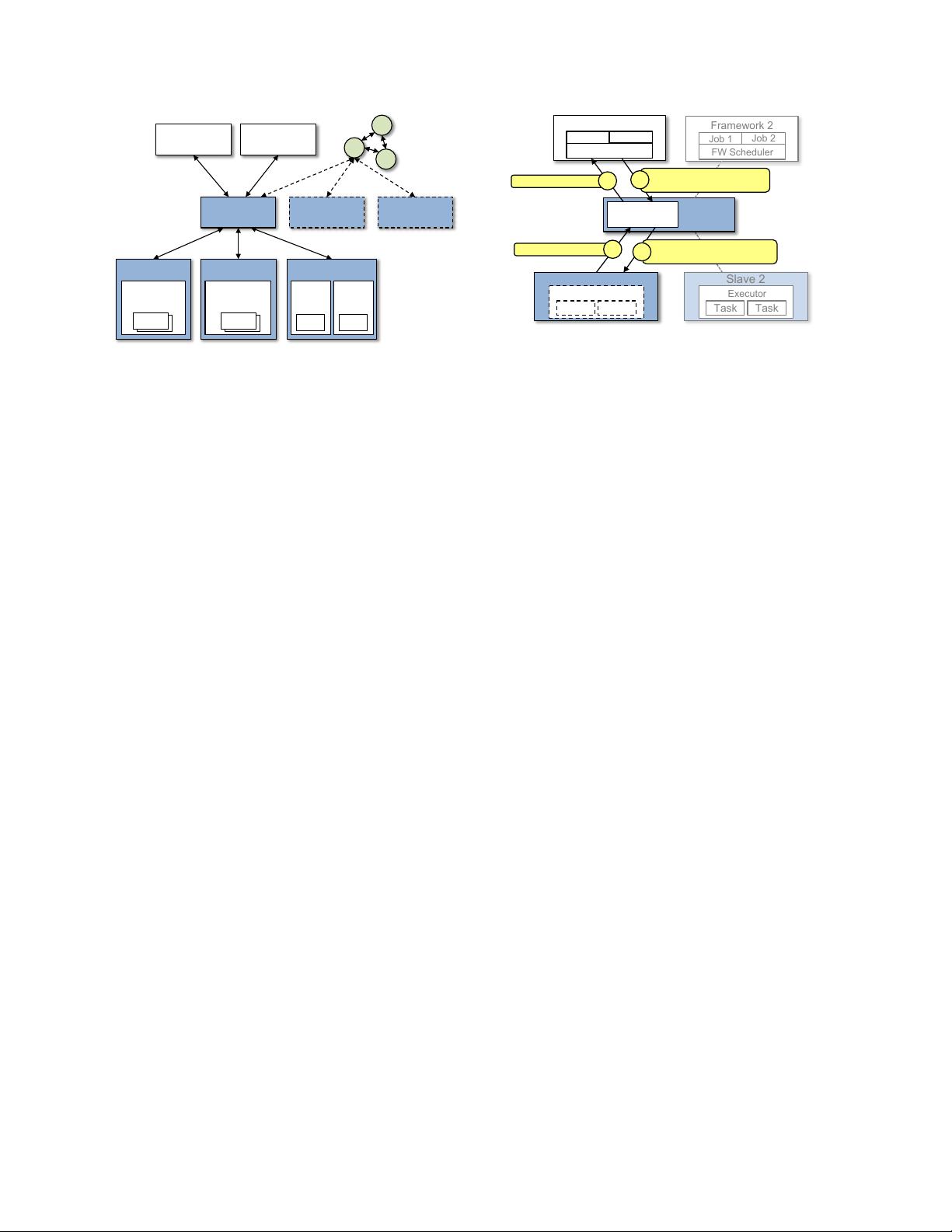

Figure 2: Mesos architecture diagram, showing two running frameworks (Hadoop and MPI).

3 Architecture

We begin our description of Mesos by discussing our design philosophy. We then describe the components of Mesos, our resource allocation mechanisms, and how Mesos achieves isolation, scalability, and fault tolerance.

3.1 Design Philosophy

Mesos aims to provide a scalable and resilient core for enabling various frameworks to efficiently share clusters. Because cluster frameworks are both highly diverse and rapidly evolving, our overriding design philosophy has been to define a minimal interface that enables efficient resource sharing across frameworks, and otherwise push control of task scheduling and execution to the frameworks. Pushing control to the frameworks has two benefits. First, it allows frameworks to implement diverse approaches to various problems in the cluster (e.g., achieving data locality, dealing with faults), and to evolve these solutions independently. Second, it keeps Mesos simple and minimizes the rate of change required of the system, which makes it easier to keep Mesos scalable and robust.

Although Mesos provides a low-level interface, we expect higher-level libraries implementing common functionality (such as fault tolerance) to be built on top of it. These libraries would be analogous to library OSes in the exokernel [25]. Putting this functionality in libraries rather than in Mesos allows Mesos to remain small and flexible, and lets the libraries evolve independently.

3.2 Overview

Figure 2 shows the main components of Mesos. Mesos consists of a master process that manages slave daemons running on each cluster node, and frameworks that run tasks on these slaves.

The master implements fine-grained sharing across

frameworks using resource offers. Each resource of-

fer contains a list of free resources on multiple slaves.

The master decides how many resources to offer to each

framework according to a given organizational policy,

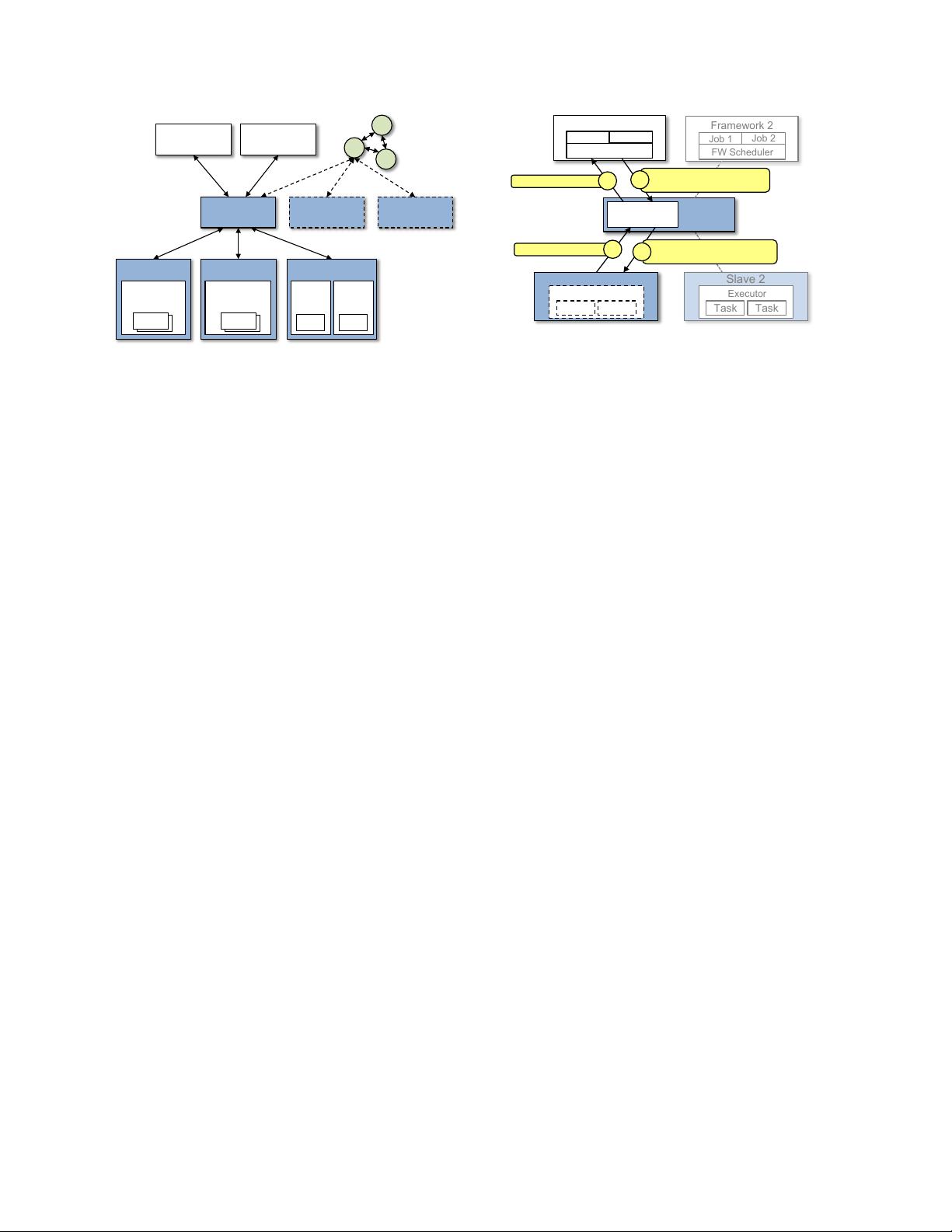

FW Scheduler

Job 1

Job 2

Framework 1

Allocation

module

Mesos

master

<s1, 4cpu, 4gb, … >

1

<fw1, task1, 2cpu, 1gb, … >

<fw1, task2, 1cpu, 2gb, … >

4

Slave 1

Task

Executor

Task

FW Scheduler

Job 1

Job 2

Framework 2

Task

Executor

Task

Slave 2

<s1, 4cpu, 4gb, … >

<task1, s1, 2cpu, 1gb, … >

<task2, s1, 1cpu, 2gb, … >

3

2

Figure 3: Resource offer example.

such as fair sharing, or strict priority. To support a di-

verse set of policies, the master employs a modular ar-

chitecture that makes it easy to add new allocation mod-

ules via a pluggin mechanism. To make the master fault-

tolerant we use ZooKeeper [4] to implement the failover

mechanism (see Section 3.6).
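To illustrate what a pluggable allocation policy could look like, the following is a minimal Python sketch and not the actual Mesos allocation module interface. The `Master` class, the `Policy` type, and the two policy functions are hypothetical names introduced for this example. "Fair sharing" is simplified here to "offer to the framework holding the fewest CPUs", which is only a stand-in for a real fairness policy.

```python
from typing import Callable, Dict

# Hypothetical policy type: given per-framework state, return the name of
# the framework that should receive the next resource offer.
Policy = Callable[[Dict[str, dict]], str]


def fair_sharing(frameworks: Dict[str, dict]) -> str:
    """Simplified fairness: pick the framework holding the fewest CPUs."""
    return min(frameworks, key=lambda name: frameworks[name]["cpus_held"])


def strict_priority(frameworks: Dict[str, dict]) -> str:
    """Pick the framework with the highest priority value."""
    return max(frameworks, key=lambda name: frameworks[name]["priority"])


class Master:
    """Toy master whose allocation policy is supplied as a plug-in."""

    def __init__(self, policy: Policy):
        self.policy = policy
        self.frameworks: Dict[str, dict] = {}

    def register(self, name: str, priority: int = 0) -> None:
        self.frameworks[name] = {"cpus_held": 0, "priority": priority}

    def next_offer_recipient(self) -> str:
        # The master delegates the decision entirely to the policy.
        return self.policy(self.frameworks)
```

Swapping the policy changes who is offered resources without touching the rest of the master, which is the property the modular architecture is meant to provide.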

A framework running on top of Mesos consists of two components: a scheduler that registers with the master to be offered resources, and an executor process that is launched on slave nodes to run the framework’s tasks. While the master determines how many resources are offered to each framework, the frameworks’ schedulers select which of the offered resources to use. When a framework accepts offered resources, it passes to Mesos a description of the tasks it wants to run on them. In turn, Mesos launches the tasks on the corresponding slaves.
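The division of labor described above can be sketched from the framework's side. The Python code below is an illustrative sketch under stated assumptions, not the Mesos scheduler API: `Offer`, `Task`, `SimpleScheduler`, and the `resource_offers` callback are hypothetical names. The scheduler receives offers, uses only the resources its pending tasks fit into, and returns task descriptions for the master to launch.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Offer:
    slave: str
    cpus: int
    mem_gb: int


@dataclass
class Task:
    name: str
    slave: str
    cpus: int
    mem_gb: int


class SimpleScheduler:
    """Hypothetical framework scheduler holding a queue of pending tasks."""

    def __init__(self, pending: List[Tuple[str, int, int]]):
        # Each pending entry is (task name, CPUs needed, GB of memory needed).
        self.pending = list(pending)

    def resource_offers(self, offers: List[Offer]) -> List[Task]:
        """Choose which offered resources to use; return tasks to launch."""
        launched = []
        for offer in offers:
            cpus, mem = offer.cpus, offer.mem_gb
            still_pending = []
            for name, need_cpus, need_mem in self.pending:
                if need_cpus <= cpus and need_mem <= mem:
                    launched.append(Task(name, offer.slave, need_cpus, need_mem))
                    cpus -= need_cpus
                    mem -= need_mem
                else:
                    # Task does not fit in what is left of this offer.
                    still_pending.append((name, need_cpus, need_mem))
            self.pending = still_pending
        return launched
```

Resources in an offer that no task fits into are simply left unused, so the master remains free to offer them elsewhere.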

Figure 3 shows an example of how a framework gets scheduled to run a task. In step (1), slave 1 reports to the master that it has 4 CPUs and 4 GB of memory free. The master then invokes the allocation policy module, which tells it that framework 1 should be offered all available resources. In step (2) the master sends a resource offer describing what is available on slave 1 to framework 1. In step (3), the framework’s scheduler replies to the master with information about two tasks to run on the slave, using ⟨2 CPUs, 1 GB RAM⟩ for the first task, and ⟨1 CPUs, 2 GB RAM⟩ for the second task. Finally, in step (4), the master sends the tasks to the slave, which allocates appropriate resources to the framework’s executor, which in turn launches the two tasks (depicted with dotted-line borders in the figure). Because 1 CPU and 1 GB of RAM are still unallocated, the allocation module may now offer them to framework 2. In addition, this resource offer process repeats when tasks finish and new resources become free.
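The arithmetic behind the leftover 1 CPU and 1 GB can be checked with a few lines of Python. This is only a worked example using the numbers from Figure 3; the `launch` helper and the dictionary layout are assumptions made for illustration and do not reflect how Mesos tracks resources internally.

```python
# Free resources reported by slave 1 in step (1).
free = {"cpus": 4, "mem_gb": 4}

# Tasks returned by framework 1's scheduler in step (3).
tasks = [
    {"name": "task1", "cpus": 2, "mem_gb": 1},
    {"name": "task2", "cpus": 1, "mem_gb": 2},
]


def launch(free, tasks):
    """Subtract each task's demand from the free pool (hypothetical helper).

    Raises ValueError if the tasks ask for more than was offered.
    """
    remaining = dict(free)  # work on a copy so the offer is not mutated
    for task in tasks:
        for resource in ("cpus", "mem_gb"):
            if task[resource] > remaining[resource]:
                raise ValueError(f"{task['name']} exceeds offered {resource}")
            remaining[resource] -= task[resource]
    return remaining


leftover = launch(free, tasks)
print(leftover)  # {'cpus': 1, 'mem_gb': 1}
```

The remaining 1 CPU and 1 GB are what the allocation module may offer to framework 2 next.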

While the thin interface provided by Mesos allows it to scale and allows the frameworks to evolve independently, one question remains: how can the constraints of a framework be satisfied without Mesos knowing about these constraints? In particular, how can a
