Image-Based Rendering -- Part IV


Image-Based Rendering. Shum, Heung-Yeung, Chan, Shing-Chow, Kang, Sing Bing. 2007, XX, 408 p., 95 illus., hardcover. Source: http://www.springer.com/computer/computer+imaging/book/978-0-387-21113-8 (if this infringes on your rights, please notify me and I will remove it immediately). The book can be downloaded from that link, but only chapter by chapter and without bookmarks. I merged the chapters and added fairly complete bookmarks. The full book consists of the following six files: front-matter.pdf; PartI Representations and Rendering Techniques.pdf; Part II Sampling.pdf; Part III Compression.pdf; Part IV Systems and Applications.pdf (this file); back-matter.pdf.


Part IV

Systems and Applications

In the last part of the book, we detail four systems that acquire and render scenes using different types of representations. The representations featured in this part are geometryless (Chapters 14 and 17), based on geometric proxies (Chapter 15), and layered (Chapter 16).

Chapter 14 (Rendering by Manifold Hopping) shows how manifold mosaics or multiperspective panoramas can be made more compact. This is accomplished by using the simple observation that humans perceive motion as continuous if the change in scene is small enough. By capitalizing on this observation, the minimal number of sampled manifolds can be derived; the scene is then rendered by "hopping" across manifolds. No explicit geometry is used.

Constructing an image-based representation of large environments would, in principle, require a massive amount of image data to be captured. However, we can usually make the reasonable assumption that some parts of the environment are more interesting than others. Areas of higher interest would then be captured using more images and afforded higher degrees of freedom in (local) navigation. Chapter 15 (Large Environment Rendering using Plenoptic Primitives) describes an authoring and rendering system that generates a combination of panoramic videos and Concentric Mosaics (CMs). CMs are placed in areas of more significant interest, and they are connected by panoramic videos.

Chapter 16 (Pop-Up Light Field: An Interactive Image-Based Modeling and Rendering System) shows how a layer-based representation can be extracted from a series of images. The key is to allow the user to indicate areas where artifacts occur. Alpha mattes at the layer boundaries and in the highlighted areas are then estimated to ensure coherence across the images and artifact-free rendering. Hardware-accelerated rendering ensures that the editing process is truly interactive.

One characteristic of a light field is that it is difficult to edit and manipulate. This limits the appeal of light fields. Chapter 17 (Feature-Based Light Field Morphing) describes an interactive system that allows users to morph one light field into another using a series of simple feature associations. This technique preserves the capability of light fields to render complicated scenes (e.g., non-Lambertian or furry objects) during the morphing process.

Additional Notes on Chapters

Chapter 14 (Rendering by Manifold Hopping) has appeared in International Journal of Computer Vision (IJCV), volume 50, number 2, pages 185-201, November 2002. The article was co-authored by Heung-Yeung Shum, Lifeng Wang, Jin-Xiang Chai, and Xin Tong.

Most of Chapter 15 (Large Environment Rendering using Plenoptic Primitives) has appeared in IEEE Transactions on Circuits and Systems for Video Technology, volume 13, number 11, November 2003, pages 1064-1073. The co-authors of this article are Sing Bing Kang, Mingsheng Wu, Yin Li, and Heung-Yeung Shum.

Heung-Yeung Shum, Jian Sun, Shuntaro Yamazaki, Yin Li, and Chi-Keung Tang originally co-wrote the article "Pop-Up Light Field: An Interactive Image-Based Modeling and Rendering System," which appeared in ACM Transactions on Graphics, volume 23, issue 2, April 2004, pages 143-162. Chapter 16 is an adaptation of this article.

Finally, Chapter 17 (Feature-Based Light Field Morphing) first appeared as an article in ACM SIGGRAPH, July 2002, pages 457-464. The co-authors are Zhunping Zhang, Lifeng Wang, Baining Guo, and Heung-Yeung Shum.

14

Rendering by Manifold Hopping

In Chapter 2, we surveyed image-based representations that do not require explicit scene reconstruction or geometry. Recall that representations such as the light field [160], Lumigraph [91], and Concentric Mosaics (CMs) [267] densely sample rays in the space based on the plenoptic function [2] with reduced dimensionalities. They allow photorealistic visualization, but at the cost of a large database requirement.

An effective way to reduce the amount of data needed for IBR is to constrain the motion or the viewpoints of the rendering camera. For example, the movie-map system [170] and the QuickTime VR system [41] allow a user to explore a large environment only at pre-specified locations. Even though a continuous change in viewing directions at each node is allowed, these systems can only jump between two nodes that are far apart, thus causing visual discontinuity and discomfort to the user. However, perceived continuous camera movement is very important for a user to smoothly navigate in a virtual environment. Recently, several panoramic video systems have been built to provide a dynamic and immersive "video" experience by employing a large number of panoramic images.

This chapter shows that it is possible to achieve significant data reduction, not through more sophisticated compression, but rather by strategically subsampling. This is shown in the context of CMs, which are made up of densely sampled cylindrical manifold mosaics. By strategically sampling a small number of cylindrical manifold mosaics, it is still possible to produce perceptually continuous navigation; the resulting rendering technique is called manifold hopping. The term manifold hopping is used to indicate that while motion is continuous within a manifold, motion between manifolds is discrete, as shown in Figure 14.1.
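
The two kinds of motion can be illustrated with a minimal plan-view sketch. It assumes the sampled manifolds are concentric circles identified only by their radii; the class name `HoppingNavigator`, its fields, and the example radii are hypothetical and do not come from the chapter. The angle along a circle changes continuously, while the choice of circle changes only in whole steps.

```python
import math
from dataclasses import dataclass


@dataclass
class HoppingNavigator:
    """Plan-view viewer restricted to a few sampled concentric circles.

    All names and values here are illustrative assumptions.
    """
    radii: list          # radii of the sampled manifolds, sorted ascending
    index: int = 0       # which sampled manifold the viewer is currently on
    angle: float = 0.0   # position along the current circle, in radians

    def move_along(self, delta_angle):
        """Continuous mode: slide along the current manifold by any amount."""
        self.angle = (self.angle + delta_angle) % (2.0 * math.pi)

    def hop(self, step):
        """Discrete mode: jump by whole manifolds; the viewer never stops in between."""
        self.index = max(0, min(len(self.radii) - 1, self.index + step))

    def position(self):
        """Current viewpoint in the plane."""
        r = self.radii[self.index]
        return (r * math.cos(self.angle), r * math.sin(self.angle))


if __name__ == "__main__":
    nav = HoppingNavigator(radii=[0.0, 0.1, 0.2])
    nav.hop(1)                    # discrete jump to the second circle
    nav.move_along(math.pi / 2)   # continuous motion along that circle
    print(nav.index, nav.position())
```

The sketch only models where the viewer may stand; choosing the spacing between radii so that hops are not perceived as jumps is the subject of the analysis in this chapter and is not attempted here.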

Manifold hopping has two modes of navigation: moving continuously along any manifold, and discretely between manifolds. An important feature of manifold hopping is that significant data reduction can be achieved without sacrificing output visual fidelity, by carefully adjusting the hopping intervals. A novel view along the manifold is rendered by locally warping a single manifold mosaic using a constant depth assumption, without the need for accurate depth or feature correspondence. The rendering errors caused by manifold hopping can be analyzed in the signed Hough ray space. Experiments with real data demonstrate that the user can navigate smoothly in a virtual environment with as little as 88k data compressed from 11 cylindrical manifold mosaics.

Manifold hopping significantly reduces the amount of input data without sacrificing output visual quality, by employing only a small number of strategically sampled manifold mosaics. This technique is based on the observation that, for human visual systems to perceive continuous motion, it is not essential to render novel views at infinitesimal steps. Moreover, manifold hopping does not require accurate depth information or correspondence between images. At any point on a given manifold, a novel view is generated by locally warping the manifold mosaic with a constant depth assumption, rather than interpolating from two or more mosaics. Although warping errors are inevitable because the true geometry is unknown, local warping does not introduce structural features such as double images which can be visually disturbing.

14.1 Preliminaries

In this section, we describe view interpolation using manifold mosaics, warping manifold mosaics, and manifold hopping. Throughout this section, CMs are used as examples of manifold mosaics to illustrate these concepts. (The notion of manifold mosaic has been covered in Chapter 2; the reader is encouraged to review the chapter before proceeding.)

14.1.1 Warping manifold mosaics

High quality view interpolation is possible when the sampling rate is higher than the Nyquist frequency for plenoptic function reconstruction [33] (see also Chapter 5). However, if the sampling interval between successive camera locations is too large, view interpolation results in aliasing artifacts. More specifically, double images are observed in the rendered image. Such artifacts can be reduced by the use of geometric information (e.g., [91, 33]) or by pre-filtering the light fields [160, 33], which reduces the output resolution.

A different approach is to locally warp manifold mosaics, which is similar to 3D warping of a perspective image. An example of locally warping CMs using an assumed constant depth is illustrated in Figure 2.8(b). Any rendering ray that is not directly available from a CM (i.e., not tangent to a concentric circle) can be retrieved by first projecting it to the constant depth surface, and then re-projecting it to the CM. Therefore, a novel view image can be warped using the local rays captured on a single Concentric Mosaic, rather than interpolated by collecting rays from two or more CMs.
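
The project-then-re-project step can be sketched in plan view as follows. This is a simplified 2D illustration under stated assumptions, not the book's implementation: the constant depth surface is taken to be a circle of radius `depth` centered on the rotation axis, the CM stores rays tangent to a circle of radius `r` in the counter-clockwise sense, and the function name `warp_ray_to_mosaic` and its parameters are hypothetical. The function intersects the rendering ray with the depth circle, then finds the tangent point on the CM circle whose stored ray passes through that intersection.

```python
import math


def warp_ray_to_mosaic(p, d, r, depth):
    """Map a rendering ray to the angle of the CM ray that replaces it.

    p     : (x, y) viewpoint, assumed to lie inside the depth circle
    d     : (dx, dy) viewing direction (any non-zero length)
    r     : radius of the concentric circle whose tangent rays the CM stores
    depth : radius of the assumed constant depth circle, with depth > r

    Returns (alpha, q): alpha is the angle of the tangent point on the CM
    circle (counter-clockwise tangent convention, an assumption of this
    sketch), and q is the point where the ray meets the depth circle.
    """
    px, py = p
    norm = math.hypot(d[0], d[1])
    if norm == 0.0:
        raise ValueError("direction must be non-zero")
    dx, dy = d[0] / norm, d[1] / norm
    if not (0.0 <= r < depth and math.hypot(px, py) < depth):
        raise ValueError("need 0 <= r < depth and the viewpoint inside the depth circle")

    # Step 1: project the rendering ray onto the constant depth circle.
    # Solve |p + t*d| = depth for the positive root t.
    b = px * dx + py * dy
    c = px * px + py * py - depth * depth   # negative, since p is inside the circle
    t = -b + math.sqrt(b * b - c)
    qx, qy = px + t * dx, py + t * dy

    # Step 2: re-project q onto the CM. The tangent point T at angle alpha
    # satisfies q = T + s * (-sin(alpha), cos(alpha)) with s = sqrt(depth^2 - r^2),
    # so q lies acos(r / depth) radians ahead of T.
    phi = math.atan2(qy, qx)
    alpha = (phi - math.acos(r / depth)) % (2.0 * math.pi)
    return alpha, (qx, qy)


if __name__ == "__main__":
    alpha, q = warp_ray_to_mosaic(p=(0.2, -0.1), d=(1.0, 0.5), r=1.0, depth=5.0)
    print("tangent-point angle (rad):", alpha, "depth-surface point:", q)
```

A ray that is already tangent to the CM circle maps back to its own tangent point regardless of the assumed depth, which is a convenient sanity check. Converting the returned angle into a mosaic column, and handling the vertical dimension, are left out of this sketch.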

According to Zorin and Barr [353], for humans to perceive a picture correctly, it is essential that the retinal projection of a two-dimensional image of an object should not contain any structural features that are not present in the object itself. In contrast, human beings have a much larger degree of tolerance for other important

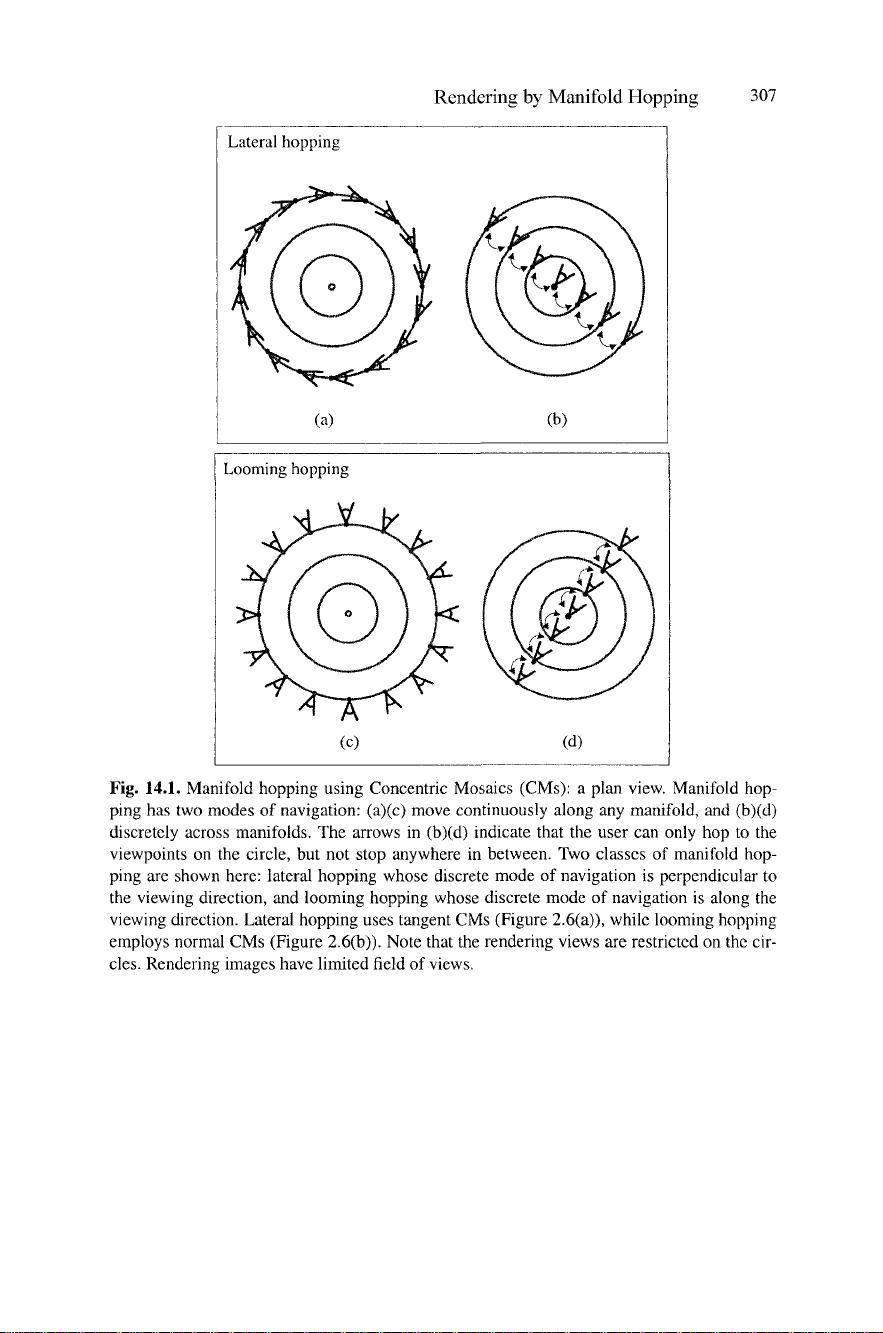

Fig. 14.1. Manifold hopping using Concentric Mosaics (CMs): a plan view. Manifold hopping has two modes of navigation: (a)(c) move continuously along any manifold, and (b)(d) discretely across manifolds. The arrows in (b)(d) indicate that the user can only hop to the viewpoints on the circle, but not stop anywhere in between. Two classes of manifold hopping are shown here: lateral hopping, whose discrete mode of navigation is perpendicular to the viewing direction, and looming hopping, whose discrete mode of navigation is along the viewing direction. Lateral hopping uses tangent CMs (Figure 2.6(a)), while looming hopping employs normal CMs (Figure 2.6(b)). Note that the rendering views are restricted to the circles. Rendered images have limited fields of view.
