
Latest Progress Report on Imitation Learning


Updated 2023-03-03


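With the continued growth of spatiotemporal tracking and sensor data, fine-grained behavior can now be analyzed and modeled at large scale. For example, tracking data is collected for every NBA basketball game, covering the players, the referees, and the ball, all tracked at 25 Hz, together with annotated game events such as passes, shots, and fouls.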


Towards Real-World Imitation Learning

Animation, Sports Analytics, Robotics, and More

Yisong Yue

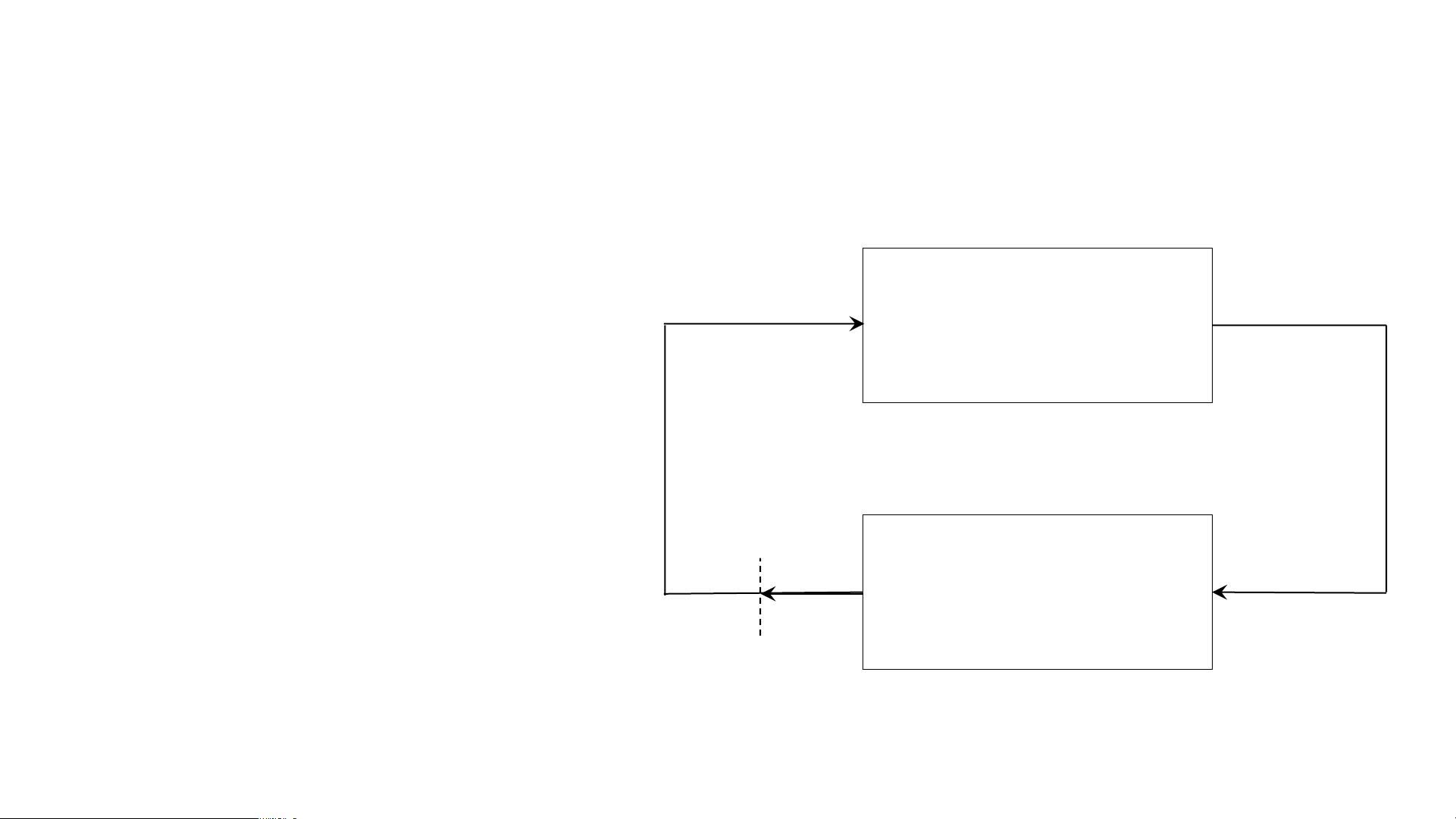

[Diagram: the Agent observes the state/context $s_t$ and sends action $a_t$ to the Environment / World (“Dynamics”), which returns the next state $s_{t+1}$.]

Goal: Find “Optimal” Policy

• Imitation Learning: optimize imitation loss

• Reinforcement Learning: optimize environmental reward
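The two objectives can be written side by side. This is a sketch in standard textbook notation, not taken from the slides: the loss $\ell$, the reward $r$, the demonstrated action $a^*$, and the trajectory $\tau$ are assumed symbols, and the imitation objective is shown in its simplest (behavioral cloning) form, where the expectation is taken over demonstrated states.

$$
\pi_{\text{IL}} = \arg\min_{\pi} \; \mathbb{E}_{(s, a^*) \sim D}\big[\ell(\pi(s), a^*)\big]
\qquad
\pi_{\text{RL}} = \arg\max_{\pi} \; \mathbb{E}_{\tau \sim \pi}\Big[\sum_{t} r(s_t, a_t)\Big]
$$

The difference lies in where the learning signal comes from: imitation learning compares the policy's action with a demonstrated one, while reinforcement learning only sees the reward returned by the environment.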

Policy/Controller Learning (Reinforcement & Imitation)

Learning-based Approach for Sequential Decision Making

Non-learning approaches include: optimal control, robust control, adaptive control, etc.

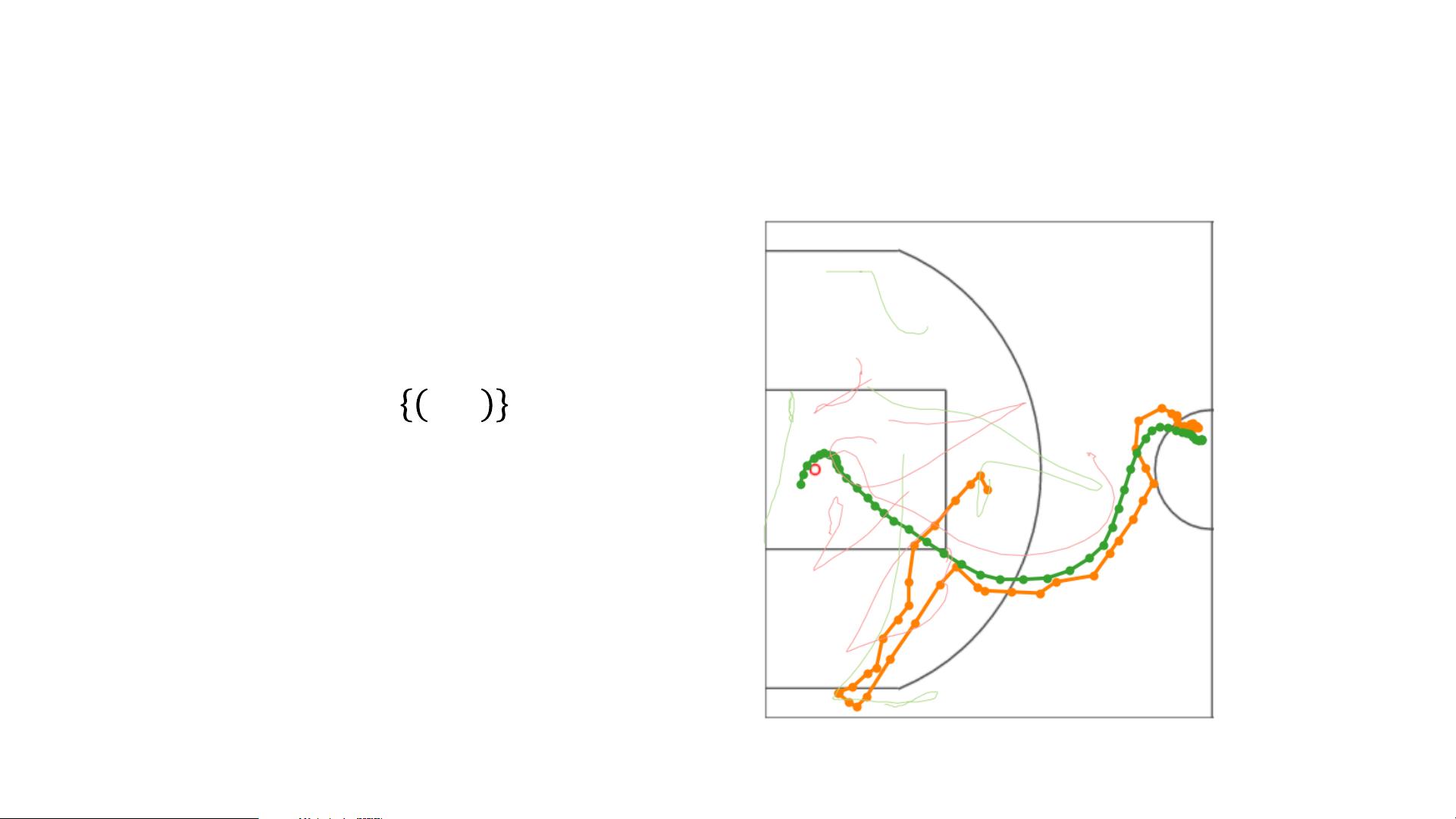

Example #1: Basketball Player Trajectories

• $s$ = location of players & ball

• $a$ = next location of player

• Training set: $D = \{(\vec{s}, \vec{a})\}$

• $\vec{s}$ = sequence of $s$

• $\vec{a}$ = sequence of $a$

• Goal: learn $h(s) \rightarrow a$
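The setup above can be illustrated with a minimal sketch. It assumes a nearest-neighbor rule as the policy class, which is a stand-in chosen for brevity and not the model used in the slides; the function names and the toy data (a single player, with the state reduced to that player's location) are hypothetical.

```python
import math

def flatten_demonstrations(demos):
    """Turn a list of (state_sequence, action_sequence) pairs into (s, a) examples."""
    pairs = []
    for states, actions in demos:
        if len(states) != len(actions):
            raise ValueError("each state sequence needs one action per state")
        pairs.extend(zip(states, actions))
    return pairs

def fit_nearest_neighbor_policy(pairs):
    """Return h(s): the action that was taken at the closest demonstrated state."""
    def h(s):
        _, action = min(pairs, key=lambda p: math.dist(p[0], s))
        return action
    return h

# Toy demonstration (assumed data): a player walks right along y = 0.
# Each action is the player's next location, as in the slide.
states = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
actions = [(1.0, 0.0), (2.0, 0.0), (3.0, 0.0)]
D = [(states, actions)]

h = fit_nearest_neighbor_policy(flatten_demonstrations(D))
predicted = h((0.9, 0.1))  # the closest demonstrated state is (1.0, 0.0)
```

A parametric model such as a neural network would replace the nearest-neighbor lookup in practice; the data layout (sequences flattened into state-action pairs) stays the same.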

Generating Long-term Trajectories Using Deep Hierarchical Networks

Stephan Zheng, Caltech, stzheng@caltech.edu

Yisong Yue, Caltech, yyue@caltech.edu

Patrick Lucey, STATS, plucey@stats.com

Abstract

We study the problem of modeling spatiotemporal trajectories over long time horizons using expert demonstrations. For instance, in sports, agents often choose action sequences with long-term goals in mind, such as achieving a certain strategic position. Conventional policy learning approaches, such as those based on Markov decision processes, generally fail at learning cohesive long-term behavior in such high-dimensional state spaces, and are only effective when fairly myopic decision-making yields the desired behavior. The key difficulty is that conventional models are “single-scale” and only learn a single state-action policy. We instead propose a hierarchical policy class that automatically reasons about both long-term and short-term goals, which we instantiate as a hierarchical neural network. We showcase our approach in a case study on learning to imitate demonstrated basketball trajectories, and show that it generates significantly more realistic trajectories compared to non-hierarchical baselines as judged by professional sports analysts.

1 Introduction

Figure 1: The player (green) has two macro-goals: 1) pass the ball (orange) and 2) move to the basket.

Modeling long-term behavior is a key challenge in many learning problems that require complex decision-making. Consider a sports player determining a movement trajectory to achieve a certain strategic position. The space of such trajectories is prohibitively large, and precludes conventional approaches, such as those based on simple Markovian dynamics.

Many decision problems can be naturally modeled as requiring high-level, long-term macro-goals, which span time horizons much longer than the timescale of low-level micro-actions (cf. He et al. [8], Hausknecht and Stone [7]). A natural example for such macro-micro behavior occurs in spatiotemporal games, such as basketball where players execute complex trajectories. The micro-actions of each agent are to move around the court and, if they have the ball, dribble, pass or shoot the ball. These micro-actions operate at the centisecond scale, whereas their macro-goals, such as "maneuver behind these 2 defenders towards the basket", span multiple seconds. Figure 1 depicts an example from a professional basketball game, where the player must make a sequence of movements (micro-actions) in order to reach a specific location on the basketball court (macro-goal).

Intuitively, agents need to trade off between short-term and long-term behavior: often sequences of individually reasonable micro-actions do not form a cohesive trajectory towards a macro-goal. For instance, in Figure 1 the player (green) takes a highly non-linear trajectory towards his macro-goal of positioning near the basket. As such, conventional approaches are not well suited for these settings, as they generally use a single (low-level) state-action policy, which is only successful when myopic or short-term decision-making leads to the desired behavior.
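The macro-goal / micro-action split described above can be sketched in a few lines. This is an illustrative toy, not the paper's hierarchical neural network: both policies are hand-written rules, and the names `macro_policy`, `micro_policy`, `rollout`, and the `replan_every` parameter are assumptions introduced here.

```python
import math

def macro_policy(state, goals):
    """Pick a macro-goal: here simply the candidate closest to the player (an assumption)."""
    return min(goals, key=lambda g: math.dist(g, state))

def micro_policy(state, goal, step=1.0):
    """Take one micro-action: move at most `step` units straight toward the macro-goal."""
    d = math.dist(state, goal)
    if d <= step:
        return goal
    return (state[0] + step * (goal[0] - state[0]) / d,
            state[1] + step * (goal[1] - state[1]) / d)

def rollout(start, goals, horizon, replan_every=5):
    """Generate a trajectory; the macro-goal is re-chosen only every `replan_every` steps."""
    state, goal, trajectory = start, None, [start]
    for t in range(horizon):
        if t % replan_every == 0:
            goal = macro_policy(state, goals)  # slow timescale: long-term goal
        state = micro_policy(state, goal)      # fast timescale: the action is the next location
        trajectory.append(state)
    return trajectory

trajectory = rollout(start=(0.0, 0.0), goals=[(3.0, 4.0), (10.0, 10.0)], horizon=6)
```

The point of the structure is that the micro-policy is conditioned on a goal that changes slowly, so consecutive micro-actions stay consistent with one another instead of being chosen independently at every step.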

30th Conference on Neural Information Processing Systems (NIPS 2016), Barcelona, Spain.

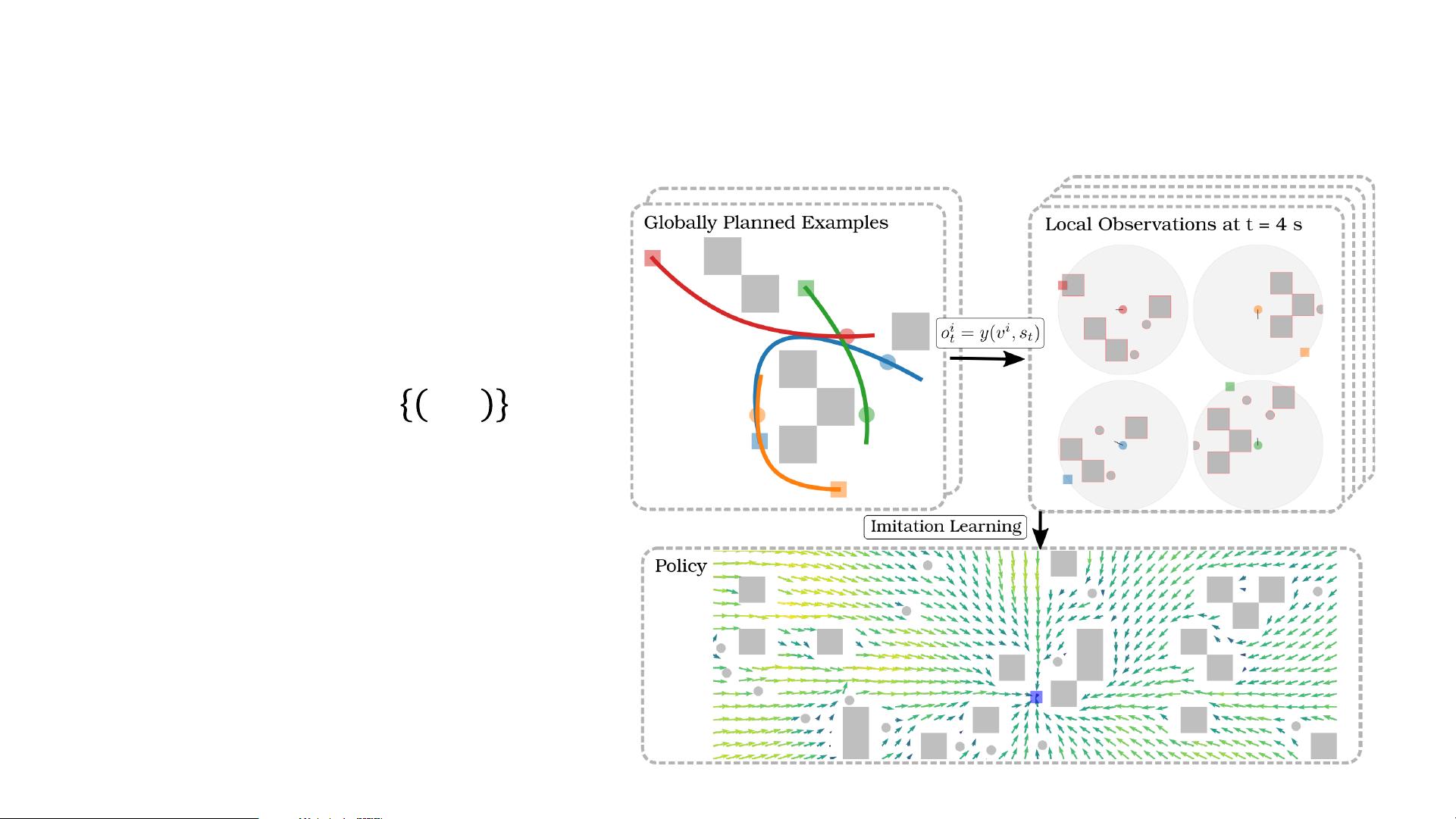

Example #2: Learning to Plan

• $s$ = location of robots

• $a$ = next location of the robot itself

• Training set: $D = \{(\vec{s}, \vec{a})\}$

• $\vec{s}$ = sequence of $s$

• $\vec{a}$ = sequence of $a$

• Goal: learn $h(s) \rightarrow a$
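Because the action is the controlled robot's next location, a learned $h$ can produce a plan by being applied repeatedly to its own output. The sketch below shows that loop under simplifying assumptions: the other robots stay fixed, and `toy_policy` is a hypothetical stand-in for a learned $h$.

```python
def generate_path(h, initial_state, self_index, horizon):
    """Apply h repeatedly; each action overwrites the controlled robot's location."""
    state = list(initial_state)
    path = [state[self_index]]
    for _ in range(horizon):
        next_location = h(tuple(state))
        state[self_index] = next_location  # other robots stay fixed (toy assumption)
        path.append(next_location)
    return path

def toy_policy(state):
    """Hypothetical stand-in for a learned h: move the first robot one unit to the right."""
    x, y = state[0]
    return (x + 1.0, y)

path = generate_path(toy_policy, initial_state=[(0.0, 0.0), (5.0, 5.0)],
                     self_index=0, horizon=3)
```

Since each prediction becomes part of the next input, small errors in $h$ can accumulate over the horizon, which is one reason long rollouts are harder than single-step prediction.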
