This choice respects (2) since, obviously, for any

non-negative integers k, L, either :

k mod 2L

= k mod L

or :

k mod 2L

= k mod L + L.

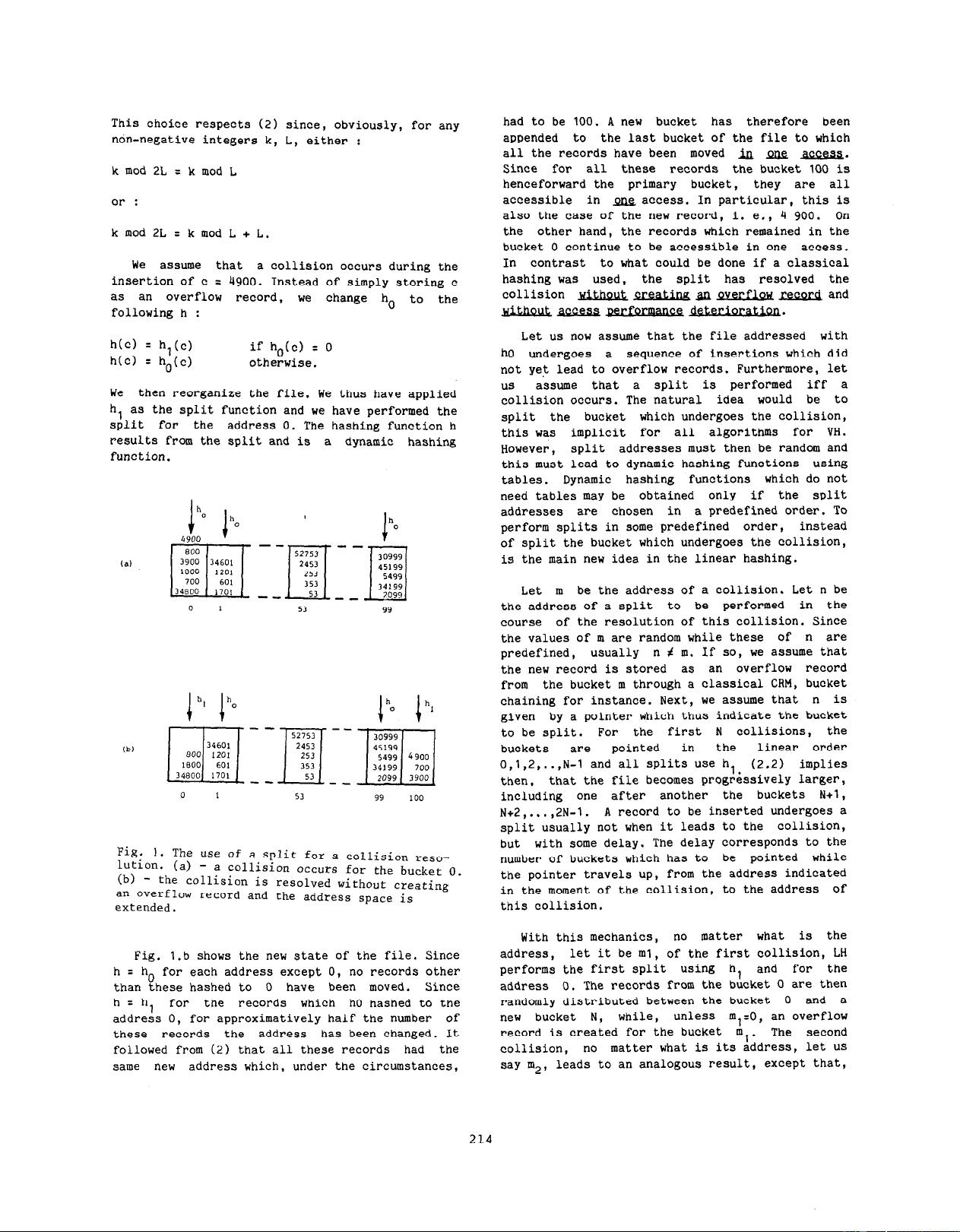

We assume that a collision occurs during the

insertion of c

= 4900. Instead of simply storing c

as an overflow record, we

change ho

to the

following h :

h(c) = h,(c)

h(c)

= ho(c)

if ho(c) = 0

otherwise.

We then reorganize the file. We thus have applied

h, as the split function and we have performed the

split for the address 0. The hashing function h

results from the spLit and is

a dynamic hashing

function.

h

0

ho 8 h

4 900

‘a)

$J;-%J--#iJ

0 1 53

99

h

1

0

1

53

99

100

Fig.

1. The use of a split for a collision reso-

t;;ion. (a) -

a collision occurs for the bucket 0.

- the collision is resolved without creating

an overflow record and the address space is

extended.

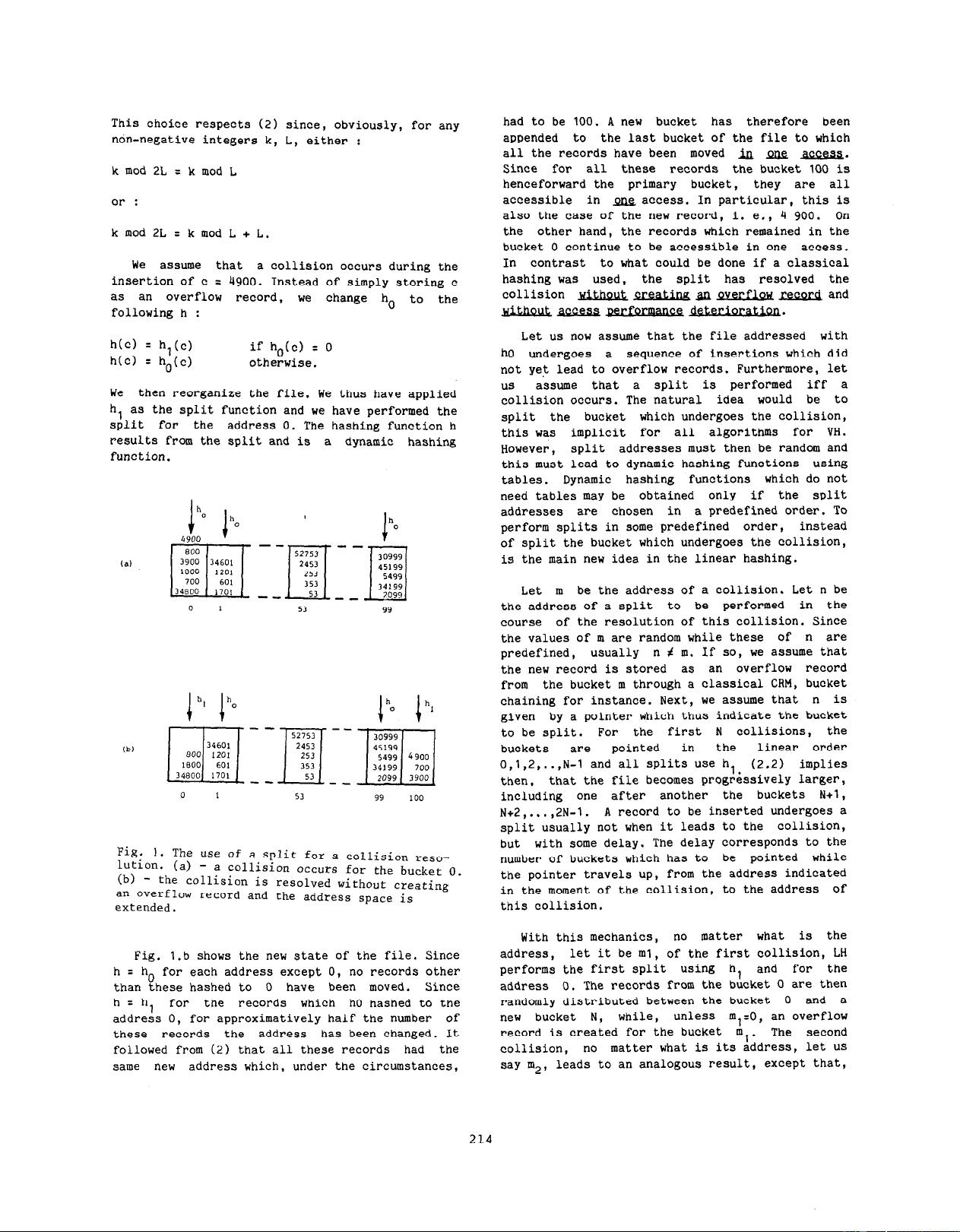

Fig. 1.b shows the new state of the file. Since

h = ho for each address except 0, no records other

than these hashed to 0 have been moved.

Since

hzh

for the records which h0 hashed to the

addre& 0, for approximatively half the number of

these

records the address has been changed. It

followed from (2) that all these records had the

same new address which, under the circumstances,

had to be 100. A new bucket has therefore been

appended to the last bucket of the file to which

all the records have been moved &l pne m.

Since for all these records the bucket 100 is

henceforward the primary bucket, they are all

accessible in m access. In particular, this is

also the case of the new record, i. e,, 4 900. On

the other hand, the records which remained in the

bucket 0 continue to be accessible in one access.

In contrast to what could be done if a classical

hashing was used,

the split has resolved the

collision ulthout creatina a

JELW.&X record and

without--deterioration.

Let us now assume that the file addressed with

h0 undergoes a

sequence of insertions which did

not yet lead to overflow records. Furthermore, let

us

assume that a split is performed iff a

collision occurs. The natural idea would be to

split the bucket which undergoes the collision,

this was

implicit for all algorithms for VH.

However, split

addresses must then be random and

this must lead to dynamic hashing functions using

tables.

Dynamic hashing functions which do not

need tables may be

obtained only if the split

addresses

are chosen in

a predefined order. To

perform splits in some predefined order, instead

of split the bucket which undergoes the collision,

is the main new idea in the linear hashing.

Let m be the address of a collision. Let n be

the address of a split to be performed in the

course

of the resolution of this collision. Since

the values of m are random while these of n are

predefined, usually

n f m. If so, we assume that

the new record is stored as an overflow record

from the bucket m through a classical CRM, bucket

chaining for instance. Next, we assume that n is

given by a pointer which thus indicate the bucket

to be split.

For the first N collisions, the

buckets are

pointed in the

linear order

0,1,2,..,

N-l

and all splits use h,

(2.2) implies

then,

that the file becomes progr&sively larger,

including one

after another the buckets N+l,

N+2 ,...,2N-1.

A record to be inserted undergoes a

split usually not when it leads to the collision,

but with some delay. The delay corresponds to the

number of buckets which has to

be pointed while

the pointer travels up, from the address indicated

in the moment of the collision, to the address of

this collision.

With this mechanics,

no matter what is the

address,

let it be ml, of the first collision, LH

performs the first split using h, and for the

address 0. The records from the bucket 0 are then

randomly distributed between the bucket

0 and a

new bucket N, while, unless m,=O, an overflow

record is created for the bucket m,.

The second

collision,

no matter what is its address, let

US

say m2,

leads to an analogous result, except that,

214