Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks

Jun-Yan Zhu*, Taesung Park*, Phillip Isola, Alexei A. Efros

Berkeley AI Research (BAIR) laboratory, UC Berkeley

[Figure 1 panels: Monet ↔ Photos (Monet → photo, photo → Monet); Zebras ↔ Horses (horse → zebra, zebra → horse); Summer ↔ Winter (summer → winter, winter → summer); bottom row: a Photograph rendered as Monet, Van Gogh, Cezanne, and Ukiyo-e]

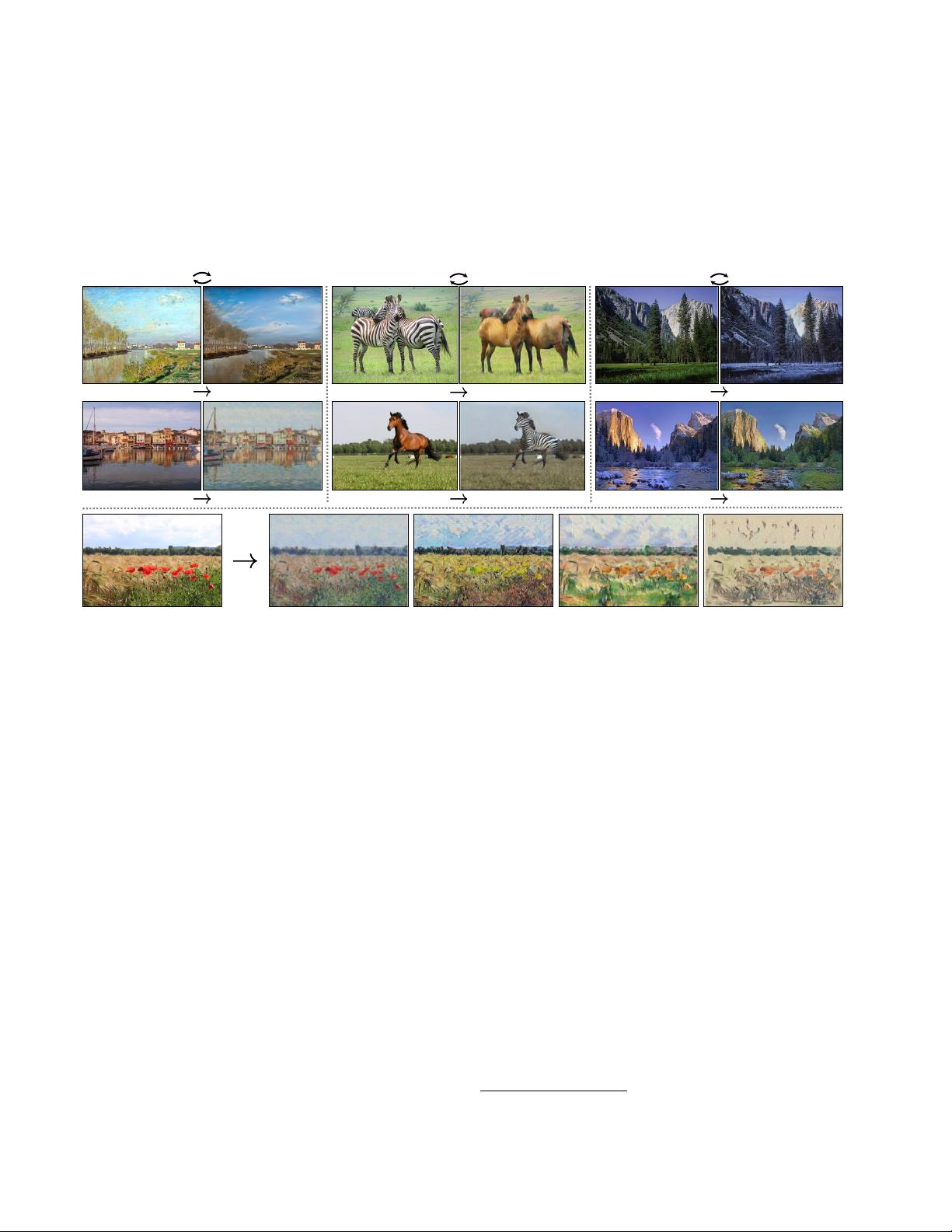

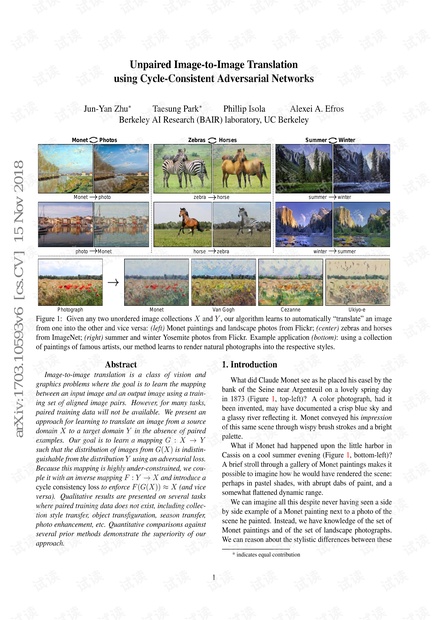

Figure 1: Given any two unordered image collections X and Y, our algorithm learns to automatically “translate” an image from one into the other and vice versa: (left) Monet paintings and landscape photos from Flickr; (center) zebras and horses from ImageNet; (right) summer and winter Yosemite photos from Flickr. Example application (bottom): using a collection of paintings of famous artists, our method learns to render natural photographs into the respective styles.

Abstract

Image-to-image translation is a class of vision and graphics problems where the goal is to learn the mapping between an input image and an output image using a training set of aligned image pairs. However, for many tasks, paired training data will not be available. We present an approach for learning to translate an image from a source domain X to a target domain Y in the absence of paired examples. Our goal is to learn a mapping G : X → Y such that the distribution of images from G(X) is indistinguishable from the distribution Y using an adversarial loss. Because this mapping is highly under-constrained, we couple it with an inverse mapping F : Y → X and introduce a cycle consistency loss to enforce F(G(X)) ≈ X (and vice versa). Qualitative results are presented on several tasks where paired training data does not exist, including collection style transfer, object transfiguration, season transfer, photo enhancement, etc. Quantitative comparisons against several prior methods demonstrate the superiority of our approach.

1. Introduction

What did Claude Monet see as he placed his easel by the bank of the Seine near Argenteuil on a lovely spring day in 1873 (Figure 1, top-left)? A color photograph, had it been invented, might have documented a crisp blue sky and a glassy river reflecting it. Monet conveyed his impression of this same scene through wispy brush strokes and a bright palette.

What if Monet had happened upon the little harbor in Cassis on a cool summer evening (Figure 1, bottom-left)? A brief stroll through a gallery of Monet paintings makes it possible to imagine how he would have rendered the scene: perhaps in pastel shades, with abrupt dabs of paint, and a somewhat flattened dynamic range.

We can imagine all this despite never having seen a side

by side example of a Monet painting next to a photo of the

scene he painted. Instead, we have knowledge of the set of

Monet paintings and of the set of landscape photographs.

We can reason about the stylistic differences between these

* indicates equal contribution

1

arXiv:1703.10593v6 [cs.CV] 15 Nov 2018

…

…

…

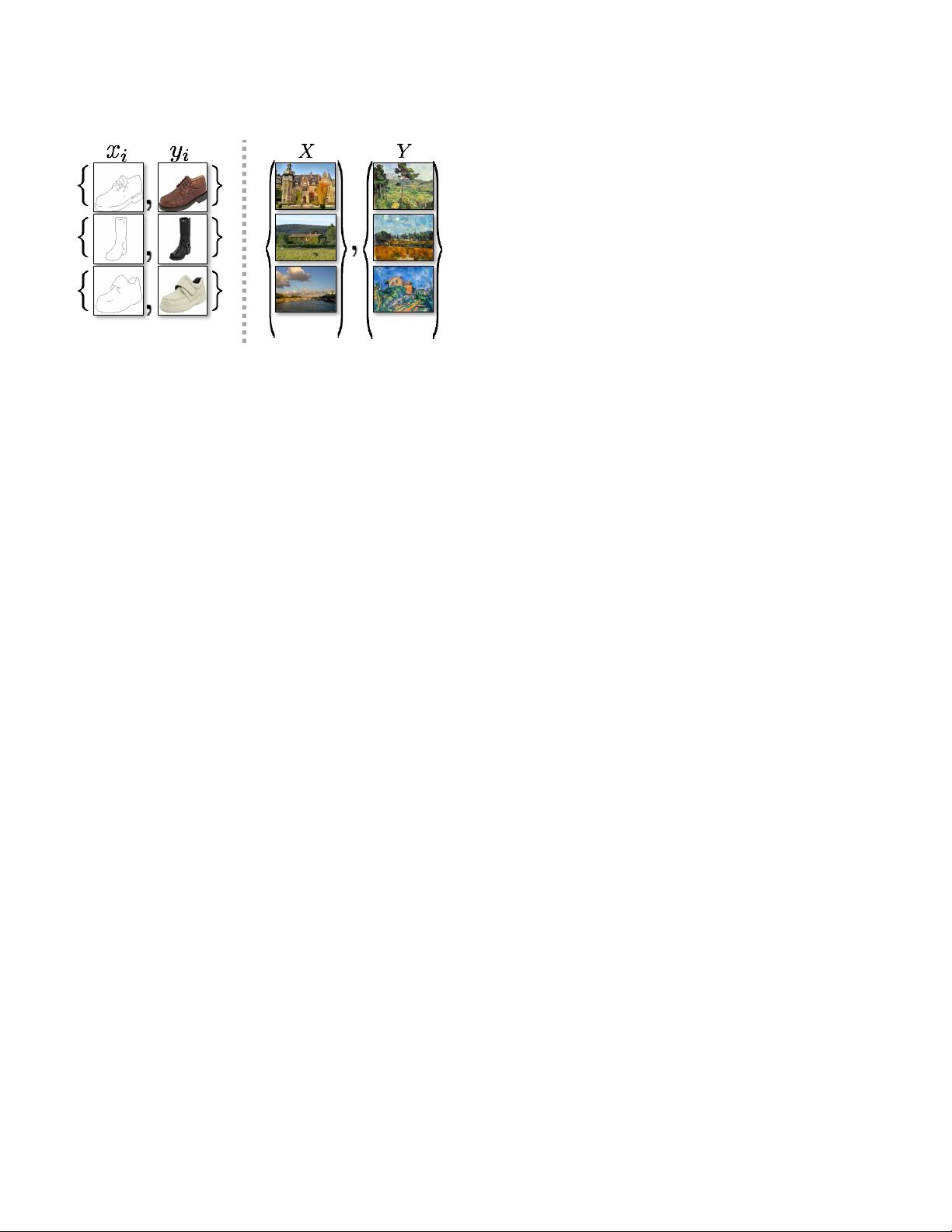

Paired Unpaired

Figure 2: Paired training data (left) consists of training ex-

amples {x

i

, y

i

}

N

i=1

, where the correspondence between x

i

and y

i

exists [22]. We instead consider unpaired training

data (right), consisting of a source set {x

i

}

N

i=1

(x

i

∈ X)

and a target set {y

j

}

j=1

(y

j

∈ Y ), with no information pro-

vided as to which x

i

matches which y

j

.

two sets, and thereby imagine what a scene might look like

if we were to “translate” it from one set into the other.

In this paper, we present a method that can learn to do the

same: capturing special characteristics of one image col-

lection and figuring out how these characteristics could be

translated into the other image collection, all in the absence

of any paired training examples.

This problem can be more broadly described as image-to-image translation [22], converting an image from one representation of a given scene, x, to another, y, e.g., grayscale to color, image to semantic labels, edge-map to photograph. Years of research in computer vision, image processing, computational photography, and graphics have produced powerful translation systems in the supervised setting, where example image pairs $\{x_i, y_i\}_{i=1}^N$ are available (Figure 2, left), e.g., [11, 19, 22, 23, 28, 33, 45, 56, 58, 62]. However, obtaining paired training data can be difficult and expensive. For example, only a couple of datasets exist for tasks like semantic segmentation (e.g., [4]), and they are relatively small. Obtaining input-output pairs for graphics tasks like artistic stylization can be even more difficult since the desired output is highly complex, typically requiring artistic authoring. For many tasks, like object transfiguration (e.g., zebra↔horse, Figure 1 top-middle), the desired output is not even well-defined.

We therefore seek an algorithm that can learn to translate between domains without paired input-output examples (Figure 2, right). We assume there is some underlying relationship between the domains – for example, that they are two different renderings of the same underlying scene – and seek to learn that relationship. Although we lack supervision in the form of paired examples, we can exploit supervision at the level of sets: we are given one set of images in domain X and a different set in domain Y. We may train a mapping G : X → Y such that the output $\hat{y} = G(x)$, $x \in X$, is indistinguishable from images $y \in Y$ by an adversary trained to classify $\hat{y}$ apart from $y$. In theory, this objective can induce an output distribution over $\hat{y}$ that matches the empirical distribution $p_{\text{data}}(y)$ (in general, this requires G to be stochastic) [16]. The optimal G thereby translates the domain X to a domain $\hat{Y}$ distributed identically to Y. However, such a translation does not guarantee that an individual input x and output y are paired up in a meaningful way – there are infinitely many mappings G that will induce the same distribution over $\hat{y}$. Moreover, in practice, we have found it difficult to optimize the adversarial objective in isolation: standard procedures often lead to the well-known problem of mode collapse, where all input images map to the same output image and the optimization fails to make progress [15].

These issues call for adding more structure to our objective. Therefore, we exploit the property that translation should be “cycle consistent”, in the sense that if we translate, e.g., a sentence from English to French, and then translate it back from French to English, we should arrive back at the original sentence [3]. Mathematically, if we have a translator G : X → Y and another translator F : Y → X, then G and F should be inverses of each other, and both mappings should be bijections. We apply this structural assumption by training both mappings G and F simultaneously, and adding a cycle consistency loss [64] that encourages F(G(x)) ≈ x and G(F(y)) ≈ y. Combining this loss with adversarial losses on domains X and Y yields our full objective for unpaired image-to-image translation.

We apply our method to a wide range of applications, including collection style transfer, object transfiguration, season transfer and photo enhancement. We also compare against previous approaches that rely either on hand-defined factorizations of style and content, or on shared embedding functions, and show that our method outperforms these baselines. We provide both PyTorch and Torch implementations. Check out more results at our website.

2. Related work

Generative Adversarial Networks (GANs) [16, 63]

have achieved impressive results in image generation [6,

39], image editing [66], and representation learning [39, 43,

37]. Recent methods adopt the same idea for conditional

image generation applications, such as text2image [41], im-

age inpainting [38], and future prediction [36], as well as to

other domains like videos [54] and 3D data [57]. The key to

GANs’ success is the idea of an adversarial loss that forces

the generated images to be, in principle, indistinguishable

from real photos. This loss is particularly powerful for im-

age generation tasks, as this is exactly the objective that

much of computer graphics aims to optimize. We adopt an

adversarial loss to learn the mapping such that the translated

X

Y

G

F

D

Y

D

X

G

F

ˆ

Y

X

Y

(

X

Y

(

G

F

ˆ

X

(a)

(b)

(c)

cycle-consistency

loss

cycle-consistency

loss

D

Y

D

X

ˆy

ˆx

x

y

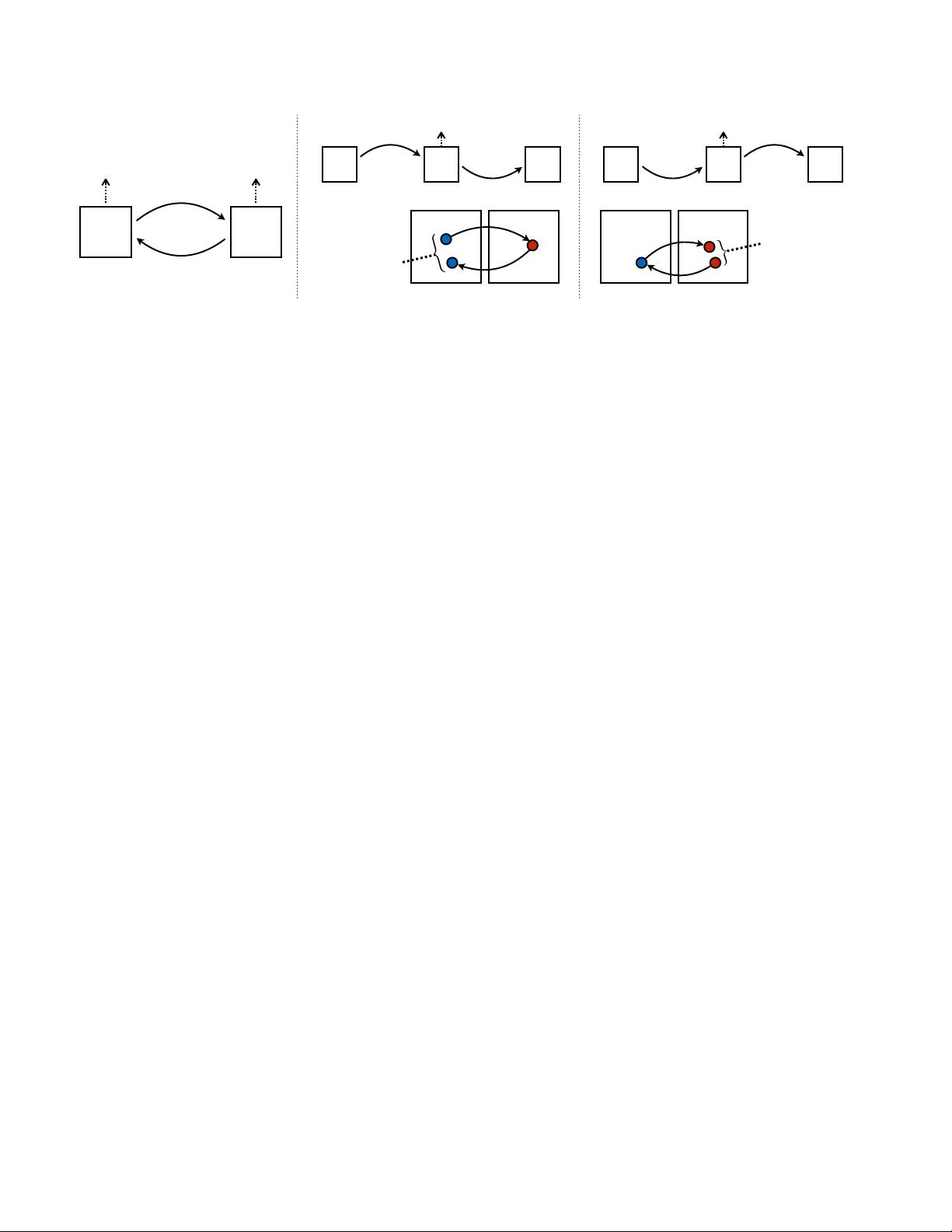

Figure 3: (a) Our model contains two mapping functions G : X → Y and F : Y → X, and associated adversarial

discriminators D

Y

and D

X

. D

Y

encourages G to translate X into outputs indistinguishable from domain Y , and vice versa

for D

X

and F. To further regularize the mappings, we introduce two cycle consistency losses that capture the intuition that if

we translate from one domain to the other and back again we should arrive at where we started: (b) forward cycle-consistency

loss: x → G(x) → F (G(x)) ≈ x, and (c) backward cycle-consistency loss: y → F (y) → G(F (y)) ≈ y

images cannot be distinguished from images in the target

domain.

Image-to-Image Translation. The idea of image-to-image translation goes back at least to Hertzmann et al.’s Image Analogies [19], who employ a non-parametric texture model [10] on a single input-output training image pair. More recent approaches use a dataset of input-output examples to learn a parametric translation function using CNNs (e.g., [33]). Our approach builds on the “pix2pix” framework of Isola et al. [22], which uses a conditional generative adversarial network [16] to learn a mapping from input to output images. Similar ideas have been applied to various tasks such as generating photographs from sketches [44] or from attribute and semantic layouts [25]. However, unlike the above prior work, we learn the mapping without paired training examples.

Unpaired Image-to-Image Translation. Several other methods also tackle the unpaired setting, where the goal is to relate two data domains: X and Y. Rosales et al. [42] propose a Bayesian framework that includes a prior based on a patch-based Markov random field computed from a source image and a likelihood term obtained from multiple style images. More recently, CoGAN [32] and cross-modal scene networks [1] use a weight-sharing strategy to learn a common representation across domains. Concurrent to our method, Liu et al. [31] extend the above framework with a combination of variational autoencoders [27] and generative adversarial networks [16]. Another line of concurrent work [46, 49, 2] encourages the input and output to share specific “content” features even though they may differ in “style”. These methods also use adversarial networks, with additional terms to enforce the output to be close to the input in a predefined metric space, such as class label space [2], image pixel space [46], and image feature space [49].

Unlike the above approaches, our formulation does not rely on any task-specific, predefined similarity function between the input and output, nor do we assume that the input and output have to lie in the same low-dimensional embedding space. This makes our method a general-purpose solution for many vision and graphics tasks. We directly compare against several prior and contemporary approaches in Section 5.1.

Cycle Consistency. The idea of using transitivity as a way to regularize structured data has a long history. In visual tracking, enforcing simple forward-backward consistency has been a standard trick for decades [24, 48]. In the language domain, verifying and improving translations via “back translation and reconciliation” is a technique used by human translators [3] (including, humorously, by Mark Twain [51]), as well as by machines [17]. More recently, higher-order cycle consistency has been used in structure from motion [61], 3D shape matching [21], co-segmentation [55], dense semantic alignment [65, 64], and depth estimation [14]. Of these, Zhou et al. [64] and Godard et al. [14] are most similar to our work, as they use a cycle consistency loss as a way of using transitivity to supervise CNN training. In this work, we are introducing a similar loss to push G and F to be consistent with each other. Concurrent with our work, in these same proceedings, Yi et al. [59] independently use a similar objective for unpaired image-to-image translation, inspired by dual learning in machine translation [17].

Neural Style Transfer [13, 23, 52, 12] is another way to perform image-to-image translation, which synthesizes a novel image by combining the content of one image with the style of another image (typically a painting) based on matching the Gram matrix statistics of pre-trained deep features. Our primary focus, on the other hand, is learning the mapping between two image collections, rather than between two specific images, by trying to capture correspondences between higher-level appearance structures. Therefore, our method can be applied to other tasks, such as painting → photo, object transfiguration, etc., where single sample transfer methods do not perform well. We compare these two methods in Section 5.2.

3. Formulation

Our goal is to learn mapping functions between two domains X and Y given training samples $\{x_i\}_{i=1}^N$ where $x_i \in X$ and $\{y_j\}_{j=1}^M$ where $y_j \in Y$.¹ We denote the data distribution as $x \sim p_{\text{data}}(x)$ and $y \sim p_{\text{data}}(y)$. As illustrated in Figure 3 (a), our model includes two mappings G : X → Y and F : Y → X. In addition, we introduce two adversarial discriminators $D_X$ and $D_Y$, where $D_X$ aims to distinguish between images $\{x\}$ and translated images $\{F(y)\}$; in the same way, $D_Y$ aims to discriminate between $\{y\}$ and $\{G(x)\}$. Our objective contains two types of terms: adversarial losses [16] for matching the distribution of generated images to the data distribution in the target domain; and cycle consistency losses to prevent the learned mappings G and F from contradicting each other.
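To make the unpaired setting concrete, here is a minimal sketch, assuming the two training sets are simply Python lists; the helper name `sample_unpaired_batch` and the string stand-ins for images are hypothetical and not taken from the authors' code. The batch from X and the batch from Y are drawn independently, so N and M may differ and no index ever links an $x_i$ to a $y_j$.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")
U = TypeVar("U")


def sample_unpaired_batch(
    xs: Sequence[T], ys: Sequence[U], batch_size: int, rng: random.Random
) -> Tuple[List[T], List[U]]:
    """Draw a batch from domain X and a batch from domain Y independently.

    Hypothetical helper: the two draws share no index, mirroring the
    unpaired setting in which {x_i}_{i=1}^N and {y_j}_{j=1}^M carry no
    correspondence (and N need not equal M).
    """
    batch_x = [rng.choice(xs) for _ in range(batch_size)]
    batch_y = [rng.choice(ys) for _ in range(batch_size)]
    return batch_x, batch_y


# N = 5 stand-in "images" in X, M = 3 in Y.
xs = ["x1", "x2", "x3", "x4", "x5"]
ys = ["y1", "y2", "y3"]
bx, by = sample_unpaired_batch(xs, ys, batch_size=4, rng=random.Random(0))
print(bx, by)
```

A seeded `random.Random` is passed in only to make the sketch reproducible; a paired dataset, by contrast, would sample one index i and return $(x_i, y_i)$ together.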

3.1. Adversarial Loss

We apply adversarial losses [16] to both mapping functions. For the mapping function G : X → Y and its discriminator $D_Y$, we express the objective as:

$$\mathcal{L}_{\text{GAN}}(G, D_Y, X, Y) = \mathbb{E}_{y \sim p_{\text{data}}(y)}\left[\log D_Y(y)\right] + \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\log\left(1 - D_Y(G(x))\right)\right], \tag{1}$$

where G tries to generate images G(x) that look similar to images from domain Y, while $D_Y$ aims to distinguish between translated samples G(x) and real samples y. G aims to minimize this objective against an adversary D that tries to maximize it, i.e., $\min_G \max_{D_Y} \mathcal{L}_{\text{GAN}}(G, D_Y, X, Y)$.

We introduce a similar adversarial loss for the mapping function F : Y → X and its discriminator $D_X$ as well, i.e., $\min_F \max_{D_X} \mathcal{L}_{\text{GAN}}(F, D_X, Y, X)$.
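Because Eq. (1) is an expectation, in practice it is estimated by averaging over a batch. The sketch below assumes the discriminator already outputs probabilities in (0, 1); the function name `gan_loss` and the `eps` clamp are illustrative choices, not the paper's implementation. The discriminator ascends this value while the generator descends it.

```python
import math
from typing import Sequence


def gan_loss(d_real: Sequence[float], d_fake: Sequence[float], eps: float = 1e-12) -> float:
    """Batch (Monte Carlo) estimate of Eq. (1).

    d_real: discriminator outputs D_Y(y) on real samples y from domain Y.
    d_fake: discriminator outputs D_Y(G(x)) on translated samples.
    Both are assumed to be probabilities; eps clamps them away from 0 and 1
    so that log() stays finite (a safeguard added for this sketch only).
    """
    def clamp(p: float) -> float:
        return min(max(p, eps), 1.0 - eps)

    real_term = sum(math.log(clamp(p)) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - clamp(p)) for p in d_fake) / len(d_fake)
    return real_term + fake_term


# A discriminator that cannot tell real from translated outputs 0.5 everywhere.
print(gan_loss([0.5, 0.5], [0.5, 0.5]))  # 2 * log(0.5) ≈ -1.386
# A confident, correct discriminator pushes the value up toward its maximum of 0.
print(gan_loss([0.99], [0.01]))
```

Passing $D_X(x)$ on real samples and $D_X(F(y))$ on translated samples to the same function gives the mirrored term $\mathcal{L}_{\text{GAN}}(F, D_X, Y, X)$.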

3.2. Cycle Consistency Loss

Adversarial training can, in theory, learn mappings G

and F that produce outputs identically distributed as target

domains Y and X respectively (strictly speaking, this re-

quires G and F to be stochastic functions) [15]. However,

with large enough capacity, a network can map the same

set of input images to any random permutation of images in

the target domain, where any of the learned mappings can

induce an output distribution that matches the target dis-

tribution. Thus, adversarial losses alone cannot guarantee

that the learned function can map an individual input x

i

to

a desired output y

i

. To further reduce the space of possi-

ble mapping functions, we argue that the learned mapping

1

We often omit the subscript i and j for simplicity.

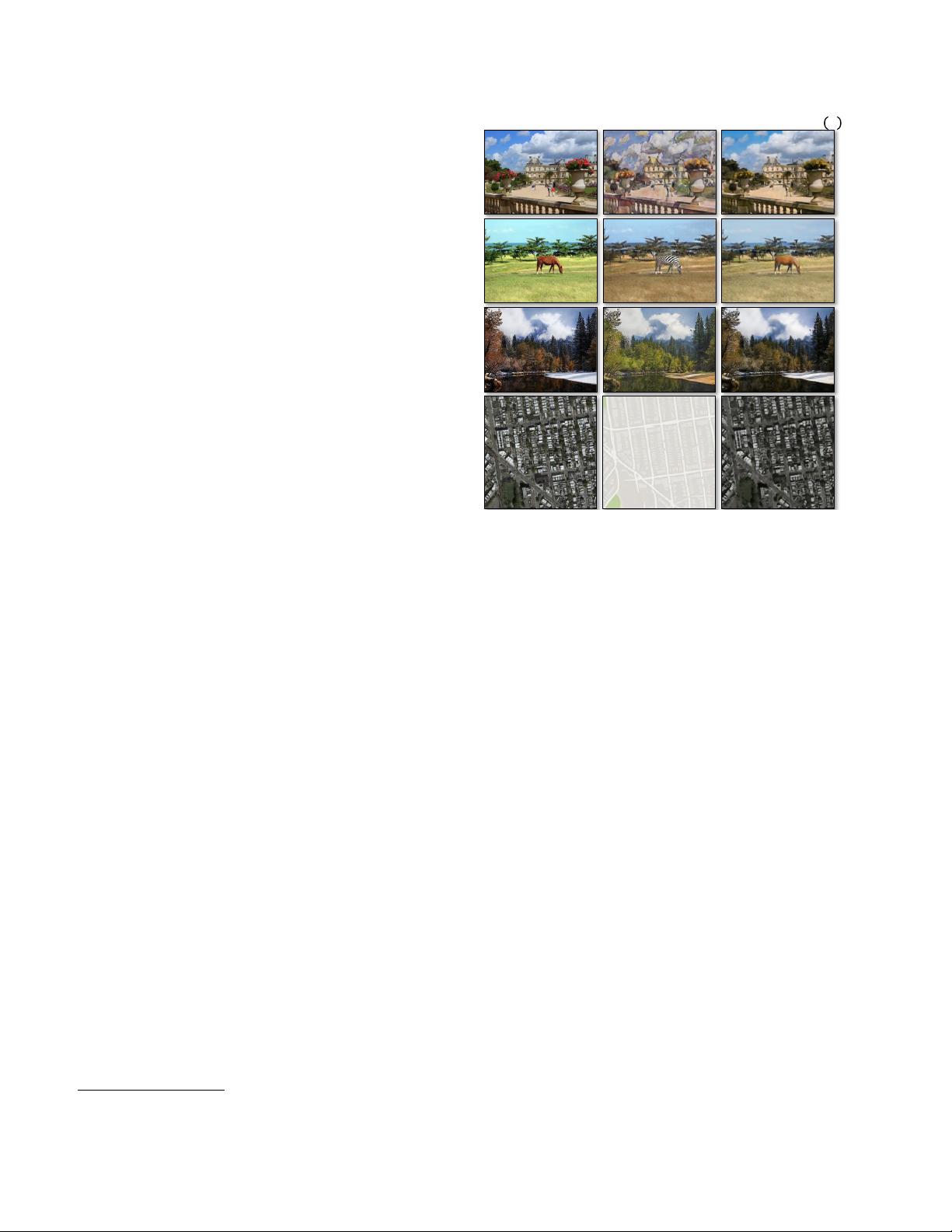

Input 𝑥 Output 𝐺(𝑥) Reconstruction F(𝐺 𝑥 )

Figure 4: The input images x, output images G(x) and the

reconstructed images F (G(x)) from various experiments.

From top to bottom: photo↔Cezanne, horses↔zebras,

winter→summer Yosemite, aerial photos↔Google maps.

functions should be cycle-consistent: as shown in Figure 3

(b), for each image x from domain X, the image translation

cycle should be able to bring x back to the original image,

i.e., x → G(x) → F (G(x)) ≈ x. We call this forward cy-

cle consistency. Similarly, as illustrated in Figure 3 (c), for

each image y from domain Y , G and F should also satisfy

backward cycle consistency: y → F (y) → G(F (y)) ≈ y.

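We incentivize this behavior using a cycle consistency loss:

$$\mathcal{L}_{\text{cyc}}(G, F) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\left[\lVert F(G(x)) - x \rVert_1\right] + \mathbb{E}_{y \sim p_{\text{data}}(y)}\left[\lVert G(F(y)) - y \rVert_1\right]. \tag{2}$$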

In preliminary experiments, we also tried replacing the L1 norm in this loss with an adversarial loss between F(G(x)) and x, and between G(F(y)) and y, but did not observe improved performance.
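Eq. (2) can be estimated on a batch in the same way. The toy sketch below treats an image as a flat list of pixel values and uses hand-written stand-ins for G and F; all names are hypothetical. It shows the loss vanishing when F inverts G and growing when it does not. Note that the loss constrains the pair (G, F) jointly: it rules out pairs that fail to invert each other rather than singling out one "correct" G on its own.

```python
from typing import Callable, List, Sequence

Image = List[float]  # toy stand-in for an image: a flat list of pixel values


def l1(a: Sequence[float], b: Sequence[float]) -> float:
    """||a - b||_1 for two equally sized pixel lists."""
    return sum(abs(p - q) for p, q in zip(a, b))


def cycle_loss(
    G: Callable[[Image], Image],
    F: Callable[[Image], Image],
    xs: Sequence[Image],
    ys: Sequence[Image],
) -> float:
    """Batch estimate of Eq. (2): forward cycle term plus backward cycle term."""
    forward = sum(l1(F(G(x)), x) for x in xs) / len(xs)   # x -> G(x) -> F(G(x))
    backward = sum(l1(G(F(y)), y) for y in ys) / len(ys)  # y -> F(y) -> G(F(y))
    return forward + backward


# Hypothetical toy mappings: G brightens every pixel by 0.2.
def G(img: Image) -> Image:
    return [p + 0.2 for p in img]


def F_inverse(img: Image) -> Image:  # exactly undoes G
    return [p - 0.2 for p in img]


def F_identity(img: Image) -> Image:  # ignores G entirely
    return list(img)


xs = [[0.1, 0.5], [0.3, 0.3]]
ys = [[0.6, 0.9]]
print(cycle_loss(G, F_inverse, xs, ys))   # ≈ 0 (up to float rounding)
print(cycle_loss(G, F_identity, xs, ys))  # ≈ 0.8: every cycle drifts by 0.2 per pixel
```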

The behavior induced by the cycle consistency loss can be observed in Figure 4: the reconstructed images F(G(x)) end up closely matching the input images x.

3.3. Full Objective

Our full objective is:

$$\mathcal{L}(G, F, D_X, D_Y) = \mathcal{L}_{\text{GAN}}(G, D_Y, X, Y) + \mathcal{L}_{\text{GAN}}(F, D_X, Y, X) + \lambda \mathcal{L}_{\text{cyc}}(G, F), \tag{3}$$
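Eq. (3) adds the two adversarial terms and the weighted cycle term. As a hedged sketch with scalar stand-ins for images, constant discriminators, and hypothetical function names, the following evaluates it on a toy batch. In training, G and F would be updated to decrease this value while $D_X$ and $D_Y$ are updated to increase it, following the per-term min-max of Section 3.1. The value `lam=10.0` is only an illustrative assumption for λ.

```python
import math
from typing import Callable, Iterable, Sequence

ScalarFn = Callable[[float], float]  # toy stand-in: scalar "image" in, scalar out


def mean(values: Iterable[float]) -> float:
    vals = list(values)
    return sum(vals) / len(vals)


def full_objective(G: ScalarFn, F: ScalarFn, D_X: ScalarFn, D_Y: ScalarFn,
                   xs: Sequence[float], ys: Sequence[float], lam: float) -> float:
    """Batch estimate of Eq. (3); D_X and D_Y return probabilities of 'real'."""
    # L_GAN(G, D_Y, X, Y): D_Y judges real y against translated G(x).
    gan_g = mean(math.log(D_Y(y)) for y in ys) + mean(math.log(1.0 - D_Y(G(x))) for x in xs)
    # L_GAN(F, D_X, Y, X): D_X judges real x against translated F(y).
    gan_f = mean(math.log(D_X(x)) for x in xs) + mean(math.log(1.0 - D_X(F(y))) for y in ys)
    # L_cyc(G, F): L1 reconstruction error in both directions (|.| for scalars).
    cyc = mean(abs(F(G(x)) - x) for x in xs) + mean(abs(G(F(y)) - y) for y in ys)
    return gan_g + gan_f + lam * cyc


# Hypothetical toy setup: discriminators that always output 0.5.
def G(x: float) -> float:
    return x + 1.0


def F_inverse(y: float) -> float:
    return y - 1.0


def F_identity(y: float) -> float:
    return y


def D_const(v: float) -> float:
    return 0.5


xs, ys = [0.0, 1.0], [2.0]
print(full_objective(G, F_inverse, D_const, D_const, xs, ys, lam=10.0))   # 4*log(0.5) ≈ -2.773
print(full_objective(G, F_identity, D_const, D_const, xs, ys, lam=10.0))  # ≈ -2.773 + 10 * 2
```

With an undecided discriminator both adversarial terms are fixed at $2\log 0.5$, so the difference between the two calls comes entirely from λ times the cycle term.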
