


Journal of Data Analysis and Information Processing, 2017, 5, 115-130

http://www.scirp.org/journal/jdaip

ISSN Online: 2327-7203

ISSN Print: 2327-7211

DOI: 10.4236/jdaip.2017.53009

August 29, 2017

Time Series Forecasting with Multiple Deep Learners: Selection from a Bayesian Network

Shusuke Kobayashi, Susumu Shirayama

Graduate School of Engineering, the University of Tokyo, Tokyo, Japan

Abstract

Considering the recent developments in deep learning, it has become increasingly important to verify which methods are valid for the prediction of multivariate time-series data. In this study, we propose a novel method of time-series prediction that employs multiple deep learners combined with a Bayesian network, where the training data are divided into clusters using K-means clustering. We determined the best number of clusters for K-means with the Bayesian information criterion. The multiple deep learners are trained on a per-cluster basis. We used three types of deep learners: deep neural network (DNN), recurrent neural network (RNN), and long short-term memory (LSTM). A naive Bayes classifier is used to determine which deep learner is in charge of predicting a particular time-series. Our proposed method is applied to a set of financial time-series data, the Nikkei Average Stock price, to assess the accuracy of the predictions made. Compared with the conventional method of employing a single deep learner to learn from all the data, our proposed method is shown to improve the F-value and accuracy.

Keywords

Time-Series Data, Deep Learning, Bayesian Network, Recurrent Neural Network, Long Short-Term Memory, Ensemble Learning, K-Means

How to cite this paper: Kobayashi, S. and Shirayama, S. (2017) Time Series Forecasting with Multiple Deep Learners: Selection from a Bayesian Network. Journal of Data Analysis and Information Processing, 5, 115-130. https://doi.org/10.4236/jdaip.2017.53009

Received: July 10, 2017

Accepted: August 26, 2017

Published: August 29, 2017

Copyright © 2017 by authors and Scientific Research Publishing Inc. This work is licensed under the Creative Commons Attribution International License (CC BY 4.0). http://creativecommons.org/licenses/by/4.0/

Open Access

1. Introduction

Deep learning has been developed to compensate for the shortcomings of previous neural networks [1] and is well known for its high performance in the fields of character and image recognition [2]. In addition, deep learning is influencing various other fields [3] [4] [5], and its efficiency and accuracy have been much bolstered by recent research. However, deep learning is subject to three main drawbacks. First, obtaining and generating appropriate training data is problematic. Second, it suffers from excessively long calculation times. Third, parameter selection is difficult. While researchers are progressing toward overcoming such issues, according to some reports, dealing with these problems is difficult except for subjects in which the formation of feature spaces, such as images and sounds, is the key to success [6].

Deep learning is nonetheless considered to underpin artificial intelligence, and because the brain’s information processing mechanism is not fully understood, it is possible to develop new learners by imitating what is known about the information processing mechanisms of the brain. One way to develop new learners is to use a Bayesian network [7]. It is also conceivable to combine multiple deep learners to create a single new learner. For example, ensemble learning and complementary learning are representative learning methods that use multiple learners [8] [9] [10]. In addition, multiple learners are sometimes used to realize a hierarchical control mechanism.

In this research, we develop a new learner using multiple deep learners in combination with Bayesian networks as the selection method to choose the most suitable type of learner for each set of test data.

In time-series data prediction with deep learning, overly long calculation times are required for training. Moreover, a deep learner may fail to converge due to the randomness of the time-series data. There is also an issue with employing a Bayesian network. In this paper, we try to reduce the computation time and improve convergence by dividing the training data into specific clusters using the K-means method and creating multiple deep learners, each trained on one part of the divided training data. We also simplify the problem of ambiguity by using a Bayesian network to select a suitable deep learner for the task of prediction.

To demonstrate our model, we use a real-life application: predicting the Nikkei Average Stock price by taking into consideration the influence of multiple stock markets. Specifically, we estimate the Nikkei Stock Average of the current term based on the Nikkei Stock Average of the previous term as well as major overseas stock price indicators such as the NY Dow and FTSE 100. We evaluate the validity of our proposed method based on the accuracy of the estimation results.
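The input construction described above can be sketched as follows. This is only an illustration: the function name `build_lagged_samples`, the dictionary layout, and the toy numbers are assumptions, and the up/down label is a guess suggested by the F-value and accuracy metrics rather than something this excerpt states.

```python
from typing import Dict, List, Tuple

def build_lagged_samples(
    series: Dict[str, List[float]], target: str
) -> Tuple[List[List[float]], List[int]]:
    """Pair every index's value at term t with the target's move to term t + 1.

    features[t] holds each index at term t (columns in sorted name order);
    labels[t] is 1 if the target rose from term t to term t + 1, else 0.
    """
    names = sorted(series)  # fixed column order
    length = len(series[target])
    if any(len(series[n]) != length for n in names):
        raise ValueError("all series must have the same length")
    features, labels = [], []
    for t in range(length - 1):
        features.append([series[n][t] for n in names])
        labels.append(1 if series[target][t + 1] > series[target][t] else 0)
    return features, labels

# Made-up values for illustration only (not real market data).
toy = {
    "nikkei": [100.0, 102.0, 101.0, 105.0],
    "dow": [200.0, 201.0, 199.0, 204.0],
    "ftse": [50.0, 50.5, 50.2, 51.0],
}
X, y = build_lagged_samples(toy, target="nikkei")
```

Each row of `X` contains only values from the previous term, so no information from the term being predicted leaks into the input.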

2. Related Works

In this section, we introduce related work on multiple learners.

In ensemble learning, outputs from each learner are integrated by weighted averaging or a voting method [8]. In complementary learning, learners are combined so that they compensate for each other’s disadvantages. Complementary learning is a concept arising from the role sharing in the memory mechanism of the hippocampus and cortex [9]. These learning methods tend to mainly use weak learners. Conversely, there are cases where multiple learners are used to realize a hierarchical control mechanism. When the behavior of a robot or multi-agent entity is controlled, a hierarchical control mechanism is often adopted because such a task can be divided into subtasks. Takahashi and Asada have proposed a robot behavior-acquisition method that hierarchically constructs multiple learners of the same structure [10]. Lower-level learners are responsible for different subtasks and learn low-level actions. A higher-level learner learns higher-level actions by exploiting the lower-level learners’ knowledge.
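The two integration schemes mentioned for ensemble learning, weighted averaging and voting, can be sketched in a few lines. The function names below are hypothetical and the code illustrates the general techniques, not the specific methods of reference [8].

```python
from collections import Counter
from typing import Hashable, Sequence

def weighted_average(outputs: Sequence[float], weights: Sequence[float]) -> float:
    """Combine numeric learner outputs using non-negative weights."""
    if len(outputs) != len(weights):
        raise ValueError("one weight per learner output is required")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    return sum(o * w for o, w in zip(outputs, weights)) / total

def majority_vote(labels: Sequence[Hashable]) -> Hashable:
    """Return the most frequent label; ties go to the label seen first."""
    if not labels:
        raise ValueError("at least one vote is required")
    return Counter(labels).most_common(1)[0][0]

# Three hypothetical learners voting on a direction, two giving numeric outputs.
combined = weighted_average([1.0, 3.0], [1.0, 3.0])
winner = majority_vote(["up", "down", "up"])
```

Both schemes consult every learner for every input, which is the point of contrast with a selection scheme that hands each input to a single learner.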

Our proposed method, which will be described later, is based on the same notion as the bagging method used in ensemble learning, where training data are divided and independently learned. The differences between our proposed method and the bagging method lie in the division method and in the integration of multiple learners (the method of selecting suitable learners for each set of test data), which aim to improve the learners’ accuracy. Unlike Takahashi and Asada [10], we do not hold to the premise that tasks can be divided into subtasks, and our learner selection method is also different. In addition, we use deep learners, whereas Takahashi and Asada used learners based on a Q-learning algorithm extended to a continuous state behavior space. Furthermore, prior research on learning methods has not fully established a method of dividing training data, a method of integrating multiple learners, or a method of hierarchizing learners. In addition, it has also failed to improve each learner’s performance after learning.

3. Proposed Method

As we mentioned, because the information processing mechanism of the brain is not fully understood, it is possible to develop new learners by imitating the information processing mechanism of the brain. In this research, we hypothesize that the brain forms multiple learners in the initial stage of learning and improves the performance of each learner in subsequent learning while selecting a suitable learner.

To design learners based on this hypothesis, it is necessary to find ways of constructing multiple learners, selecting a suitable learner, and improving the accuracy of each learner by using feedback from a particular selected learner. Hence, we assume that multiple learners have the same structure. The learners are constructed by the clustering of input data. Selection of a suitable learner is conducted with a naive Bayes classifier, which forms the simplest Bayesian network. Furthermore, after fixing the learners, we construct a Bayesian network and predict outcomes without changing the Bayesian network’s construction. However, it is preferable to improve each learner’s performance and the Bayesian network by using feedback gained from the selected learners. This will form one of our future research topics.
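A minimal sketch of learner selection with a naive Bayes classifier follows. It assumes continuous features modelled with one Gaussian per feature and class, which this excerpt does not specify; the class name `GaussianNaiveBayes` and the toy data are hypothetical. The class labels stand for cluster indices, so the predicted label is the index of the learner to use.

```python
import math
from typing import Dict, Sequence

class GaussianNaiveBayes:
    """Naive Bayes with an independent Gaussian per feature and class."""

    def fit(self, X: Sequence[Sequence[float]], y: Sequence[int]) -> "GaussianNaiveBayes":
        self.stats: Dict[int, dict] = {}
        n = len(X)
        for label in sorted(set(y)):
            rows = [x for x, c in zip(X, y) if c == label]
            means = [sum(col) / len(rows) for col in zip(*rows)]
            # A small variance floor avoids division by zero on constant features.
            variances = [
                sum((v - m) ** 2 for v in col) / len(rows) + 1e-9
                for col, m in zip(zip(*rows), means)
            ]
            self.stats[label] = {
                "log_prior": math.log(len(rows) / n),
                "means": means,
                "variances": variances,
            }
        return self

    def log_posterior(self, x: Sequence[float], label: int) -> float:
        """Unnormalized log posterior: log prior plus summed log likelihoods."""
        s = self.stats[label]
        log_likelihood = sum(
            -0.5 * math.log(2 * math.pi * var) - (v - m) ** 2 / (2 * var)
            for v, m, var in zip(x, s["means"], s["variances"])
        )
        return s["log_prior"] + log_likelihood

    def predict(self, x: Sequence[float]) -> int:
        """Return the class (here: learner index) with the highest posterior."""
        return max(self.stats, key=lambda label: self.log_posterior(x, label))

# Toy training inputs with the cluster index each one was assigned to.
X_train = [[0.0, 0.1], [0.2, 0.0], [5.0, 5.1], [5.2, 4.9]]
clusters = [0, 0, 1, 1]
selector = GaussianNaiveBayes().fit(X_train, clusters)
chosen = selector.predict([0.1, 0.1])
```

Working in log space keeps the product of many small likelihoods from underflowing, which matters once the number of features grows.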

In Section 3.1, we propose a method of constructing a single, unified learner by using multiple deep learners. Moreover, in Section 3.2, we propose a method of selecting a suitable learner with a naive Bayes classifier.

3.1. Learning with Multiple Deep Learners

In the analysis of time-series data with a deep learner, the prediction accuracy is uneven because the loss function does not converge for certain time-series data. It is commonly assumed that the learning of weight parameters does not work due to the non-stationary nature of the data. This problem often occurs when multiple time-series data are used as training data. In addition, the long computational time required is also an issue.

To solve these problems, we think it is effective to apply a clustering method, such as K-means, SOM, or SVM, to the training data, create clusters, and construct a learner for each cluster. This is because dividing the training data into clusters and constructing a learner for each cluster enables us to extract better patterns and improve convergence of the loss function compared to constructing a single classifier from all the training data. This method also reduces the computational time required. Moreover, a classifier for selecting a suitable learner is constructed from the clustering results of the training data. This classifier achieves the task of associating test data with a suitable learner.
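The partition-then-train idea can be sketched as below. This is a simplified illustration under stated assumptions: K-means is implemented as plain Lloyd iterations with a deterministic farthest-point initialization, and `train_mean_predictor` is a trivial stand-in for a deep learner. All function names are hypothetical.

```python
from typing import Callable, List, Sequence, Tuple

Point = Sequence[float]

def sq_dist(a: Point, b: Point) -> float:
    """Squared Euclidean distance between two points."""
    return sum((u - v) ** 2 for u, v in zip(a, b))

def kmeans(points: List[Point], k: int, iters: int = 100) -> Tuple[List[List[float]], List[int]]:
    """Lloyd's algorithm with deterministic farthest-point initialization."""
    centroids = [list(points[0])]
    while len(centroids) < k:
        # Next seed: the point farthest from its nearest existing centroid.
        far = max(points, key=lambda p: min(sq_dist(p, c) for c in centroids))
        centroids.append(list(far))
    assign: List[int] = []
    for _ in range(iters):
        new_assign = [min(range(k), key=lambda j: sq_dist(p, centroids[j])) for p in points]
        if new_assign == assign:
            break  # assignments are stable, so the centroids are too
        assign = new_assign
        for j in range(k):
            members = [p for p, a in zip(points, assign) if a == j]
            if members:  # keep the old centroid if a cluster empties
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return centroids, assign

def train_per_cluster(X, y, assign, k, train_fn: Callable) -> list:
    """Train one learner per cluster, using only that cluster's samples."""
    learners = []
    for j in range(k):
        Xj = [x for x, a in zip(X, assign) if a == j]
        yj = [t for t, a in zip(y, assign) if a == j]
        learners.append(train_fn(Xj, yj))
    return learners

def train_mean_predictor(X, y):
    """Placeholder for a deep learner: always predicts the cluster's mean target."""
    mean = sum(y) / len(y) if y else 0.0
    return lambda x: mean

X = [[0.0], [1.0], [2.0], [10.0], [11.0], [12.0]]
y = [1.0, 1.0, 1.0, 5.0, 5.0, 5.0]
centroids, assign = kmeans(X, k=2)
learners = train_per_cluster(X, y, assign, 2, train_mean_predictor)
```

Because each learner sees only its own cluster, the per-learner training set is smaller, which is where the expected saving in computation time comes from.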

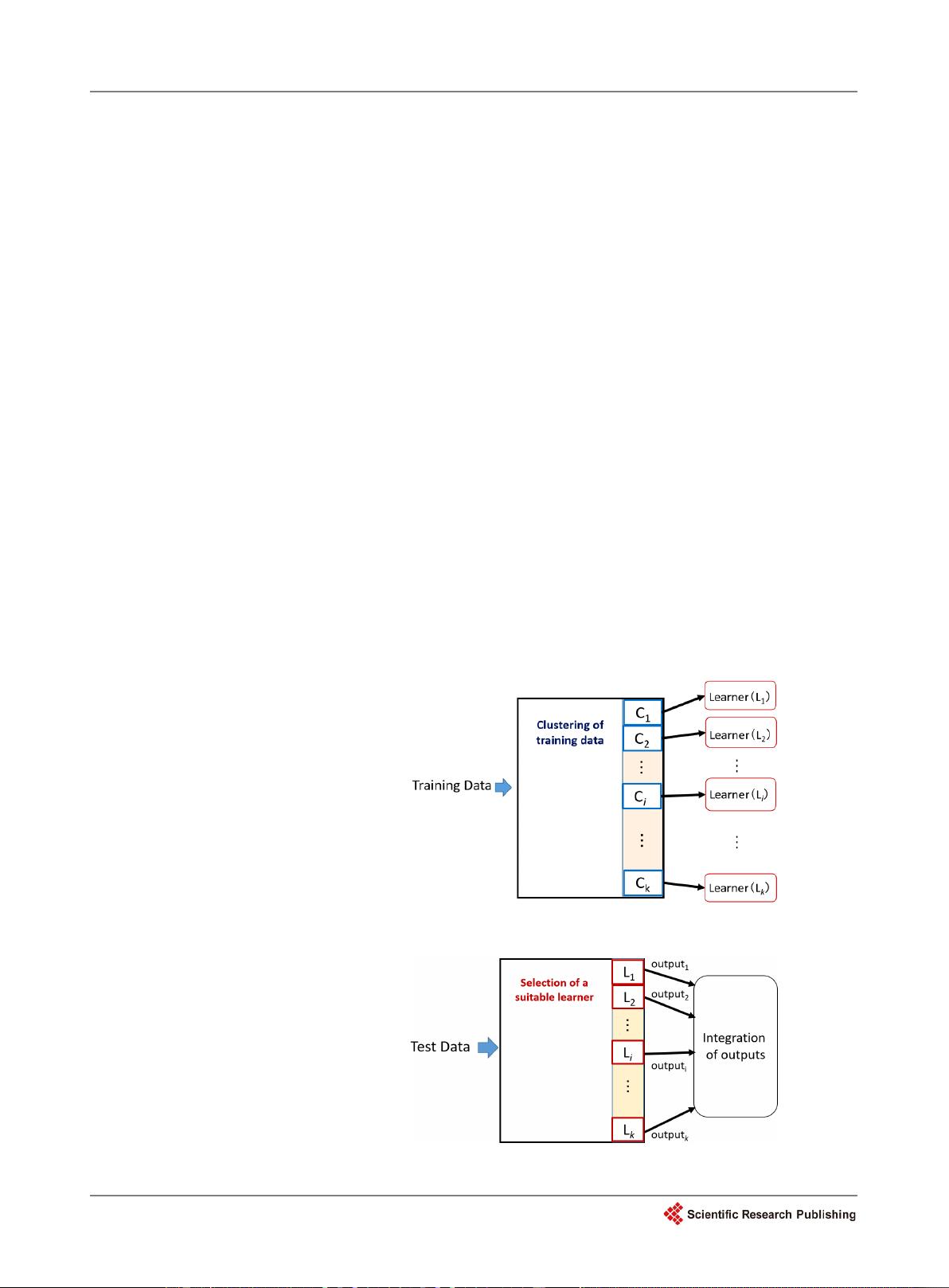

Figure 1 shows the framework of learning with multiple deep learners. We divided the training data into $k$ classes ($C_1, \cdots, C_k$) and constructed $k$ deep learners for each class. Figure 2 shows the framework of this prediction along with the test data. To determine which deep learner is in charge of prediction, we constructed a classifier for test data based on the clustering results of the training data. In this paper, we use K-means as a clustering method. Training data was divided into clusters with K-means and for each cluster, $k$ learners were constructed. However, it is necessary to determine the number of clusters in ad-

Figure 1. Multiple deep learner’s structure.

Figure 2. Prediction with multiple learners.
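The abstract states that the number of clusters for K-means is chosen with the Bayesian information criterion. The exact formula is not given in this excerpt, so the sketch below assumes a common simplification: a shared spherical Gaussian around each centroid, with constants that do not depend on $k$ dropped. The function name `kmeans_bic` is hypothetical, and lower scores are better.

```python
import math
from typing import List, Sequence

def kmeans_bic(
    points: Sequence[Sequence[float]],
    centroids: Sequence[Sequence[float]],
    assign: Sequence[int],
) -> float:
    """BIC-style score for a hard clustering (lower is better).

    score = n * d * ln(RSS / (n * d)) + k * d * ln(n)
    where RSS is the total squared distance of points to their centroids,
    n the number of points, d the dimension, and k the number of clusters.
    """
    n, d, k = len(points), len(points[0]), len(centroids)
    rss = sum(
        (v - c) ** 2
        for p, a in zip(points, assign)
        for v, c in zip(p, centroids[a])
    )
    rss = max(rss, 1e-12)  # guard against log(0) for a perfect fit
    return n * d * math.log(rss / (n * d)) + k * d * math.log(n)

# Toy data with two obvious groups; clusterings are written out by hand.
points = [[0.0], [1.0], [2.0], [10.0], [11.0], [12.0]]
score_k1 = kmeans_bic(points, [[6.0]], [0, 0, 0, 0, 0, 0])
score_k2 = kmeans_bic(points, [[1.0], [11.0]], [0, 0, 0, 1, 1, 1])
best_k = 1 if score_k1 <= score_k2 else 2
```

The first term rewards a tighter fit and the second penalizes every added centroid, so the score stops improving once extra clusters no longer reduce the residual enough to pay for their parameters.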
