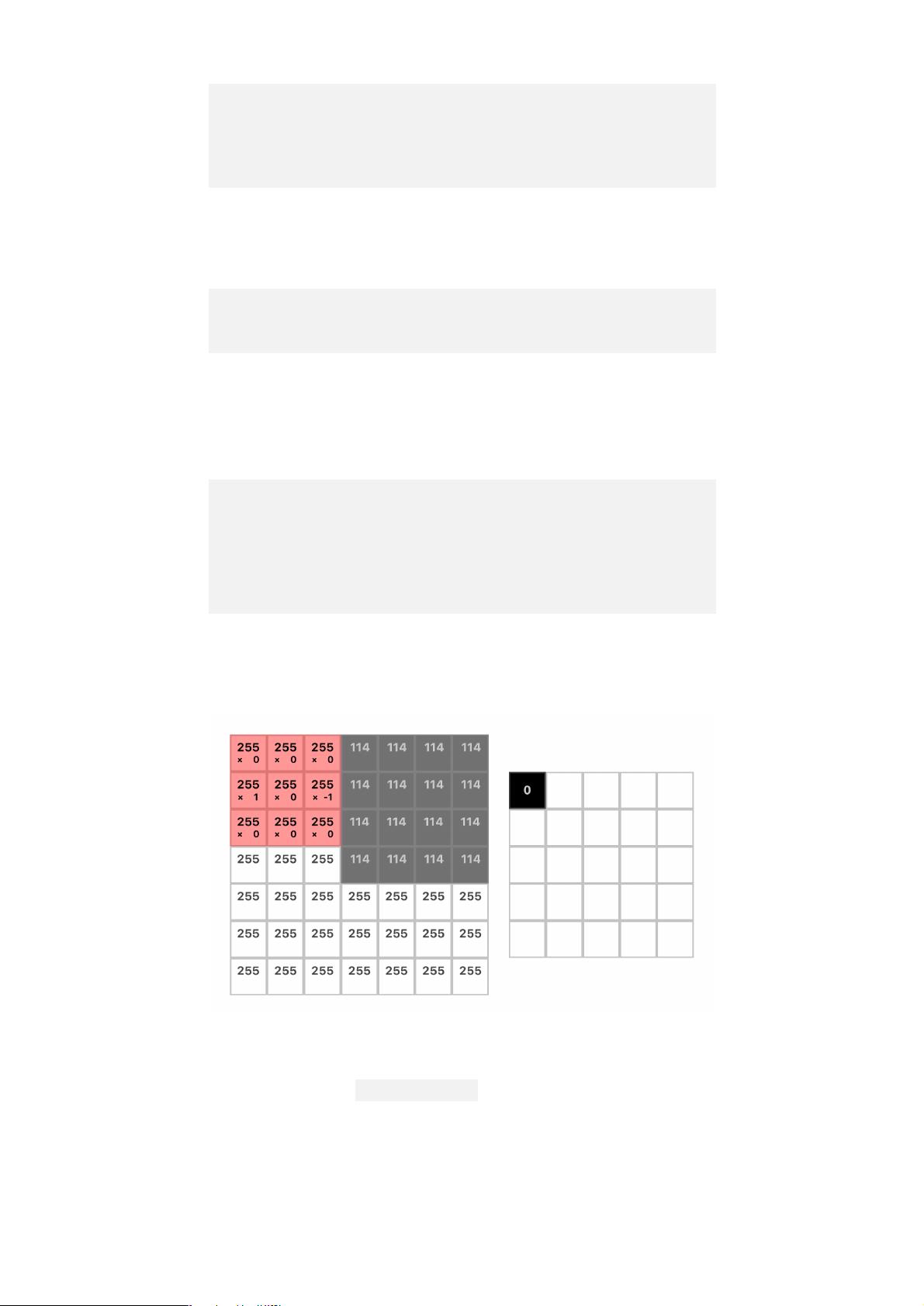

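In "Understanding Capsule Networks: AI's Alluring New Architecture", Nick Bourdakos argues that although convolutional neural networks (CNNs) have achieved remarkable results, there is still a need for new solutions or improvements. The article focuses on capsule networks (Capsule Networks), a newer deep learning architecture intended to address limitations of conventional CNNs.

The core idea of a capsule network is to go beyond detecting isolated features and to capture the relationships between an object's parts and the whole, which improves the model's understanding of what it sees. The capsule layer (Capsule Layer) is the central building block. A capsule does not output a single scalar activation. It outputs a vector that describes a specific feature, including how likely the feature is to be present and properties such as its orientation. This design is intended to mirror the structure of the human visual system more closely.

Bourdakos first introduces the input stage. The input to a capsule network is the actual image the network receives, a 28x28 pixel image in the article's example. The image is fed to the network's first layer, usually called the data layer or raw input layer, which turns pixel values into neuron activations.

The article then explains the design of the network, starting with the Primary Capsule Layer, which extracts basic local features such as edges and corners. Each capsule in this layer learns a vector representing the features that may be present in its region of the image.

Next, the author introduces the dynamic routing algorithm, a key innovation of capsule networks. It lets capsules at different levels communicate and cooperate: lower-level capsules route their output toward the higher-level capsules that agree with their predictions, so feature representations are refined according to context. The process imitates how low-level features in the human visual system combine into higher-level concepts.

The article also mentions a visualization tool developed by the author. With it, readers can watch the internal workflow of a capsule network and follow, layer by layer, how the network processes information. The scientific visualization (designed by Alex Reynolds) is paired with a simple network implementation to help readers understand how capsule networks operate.

As the layers get deeper, the network builds increasingly abstract and complex structures until it reaches the top layer, such as the DigitCapsule Layer, whose capsules identify the digit class of the whole image. Each top-level capsule outputs a vector, and its length and direction help determine which class the image belongs to.

"Understanding Capsule Networks: AI's Alluring New Architecture" is a detailed guide to the theoretical background and design principles of capsule networks and to how they differ from traditional CNNs. It also provides practical tools and techniques for applying the architecture to computer vision tasks. Understanding how capsule networks work suggests where they may bring further progress, for example in image classification and object detection.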
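The routing step described above can be made concrete with a short NumPy sketch. This is an illustration under stated assumptions, not the implementation from Bourdakos's article: the function names (`squash`, `dynamic_routing`), the three routing iterations, and the shapes (1152 primary capsules, 10 digit capsules, 16 dimensions) are assumptions borrowed from the commonly cited CapsNet setup for 28x28 digit images, and random numbers stand in for the prediction vectors a trained network would produce.

```python
import numpy as np


def squash(s, axis=-1, eps=1e-8):
    """Shrink a vector so its length lies in [0, 1) while keeping its direction."""
    sq_norm = np.sum(s ** 2, axis=axis, keepdims=True)
    return (sq_norm / (1.0 + sq_norm)) * s / np.sqrt(sq_norm + eps)


def softmax(x, axis):
    """Numerically stable softmax along the given axis."""
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)


def dynamic_routing(u_hat, num_iters=3):
    """Route lower-level capsule predictions to higher-level capsules.

    u_hat has shape (num_in, num_out, dim_out): the prediction each
    lower-level capsule makes for each higher-level capsule.
    Returns the higher-level outputs v (num_out, dim_out) and the
    coupling coefficients c (num_in, num_out) from the last iteration.
    """
    num_in, num_out, _ = u_hat.shape
    b = np.zeros((num_in, num_out))  # routing logits, start with no preference
    for _ in range(num_iters):
        # Each lower capsule distributes its output over the higher capsules.
        c = softmax(b, axis=1)
        # Weighted sum of predictions for each higher capsule.
        s = np.sum(c[:, :, None] * u_hat, axis=0)
        v = squash(s)
        # Raise the logit where a prediction agrees with the output (dot product).
        b = b + np.sum(u_hat * v[None, :, :], axis=-1)
    return v, c


# Assumed shapes: 1152 primary capsules, 10 digit capsules, 16-D outputs.
rng = np.random.default_rng(0)
u_hat = rng.normal(size=(1152, 10, 16))  # placeholder for learned predictions
v, c = dynamic_routing(u_hat)

# The length of each top-level vector acts as a class score.
lengths = np.linalg.norm(v, axis=-1)
predicted_class = int(np.argmax(lengths))
```

In this sketch the vector length plays the role of a class probability, which is why `squash` keeps every length below 1, and the agreement update is what lets lower-level capsules favor the higher-level capsules that match their predictions.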
