What to Do Next: Modeling User Behaviors by Time-LSTM

Yu Zhu†, Hao Li†, Yikang Liao†, Beidou Wang♯‡, Ziyu Guan⋆, Haifeng Liu♯, Deng Cai†∗

† State Key Lab of CAD&CG, College of Computer Science, Zhejiang University, China

⋆ College of Information and Technology, Northwest University of China

♯ College of Computer Science, Zhejiang University, China

‡ School of Computing Science, Simon Fraser University, Canada

{zhuyu cad, haolics, ykliao, haifengliu, dcai}@zju.edu.cn, beidouw@sfu.ca, ziyuguan@nwu.edu.cn

Abstract

Recently, Recurrent Neural Network (RNN) solutions for recommender systems (RS) have become increasingly popular. The insight is that there exist some intrinsic patterns in the sequence of users’ actions, and RNNs have been shown to perform excellently when modeling sequential data. In traditional tasks such as language modeling, RNN solutions usually consider only the sequential order of objects, without the notion of an interval. However, in RS, time intervals between users’ actions are of significant importance in capturing the relations between those actions, and traditional RNN architectures are not good at modeling them. In this paper, we propose a new LSTM variant, i.e. Time-LSTM, to model users’ sequential actions. Time-LSTM equips LSTM with time gates to model time intervals. These time gates are specifically designed so that, compared to traditional RNN solutions, Time-LSTM better captures both users’ short-term and long-term interests, so as to improve recommendation performance. Experimental results on two real-world datasets show the superiority of the recommendation method using Time-LSTM over traditional methods.

1 Introduction

Recurrent Neural Network (RNN) solutions have become

state-of-the-art methods on modeling sequential data. They

are applied to a variety of domains, ranging from language

modeling to machine translation to image captioning. With

remarkable success achieved when RNN is applied to afore-

mentioned domains, there is an increasing number of works

trying to find RNN solutions in the area of recommender sys-

tems (RS).

[

Hidasi et al., 2016a; Tan et al., 2016; Hidasi et al.,

2016b

]

focus on RNN solutions in one certain type of recom-

mendation task, i.e. session-based recommendations, where

no user id exists and recommendations are based on previous

consumed items within the same session.

[

Yu et al., 2016

]

points out that RNN is able to capture users’ general inter-

est and sequential actions in RS and designs a RNN method

∗

corresponding author

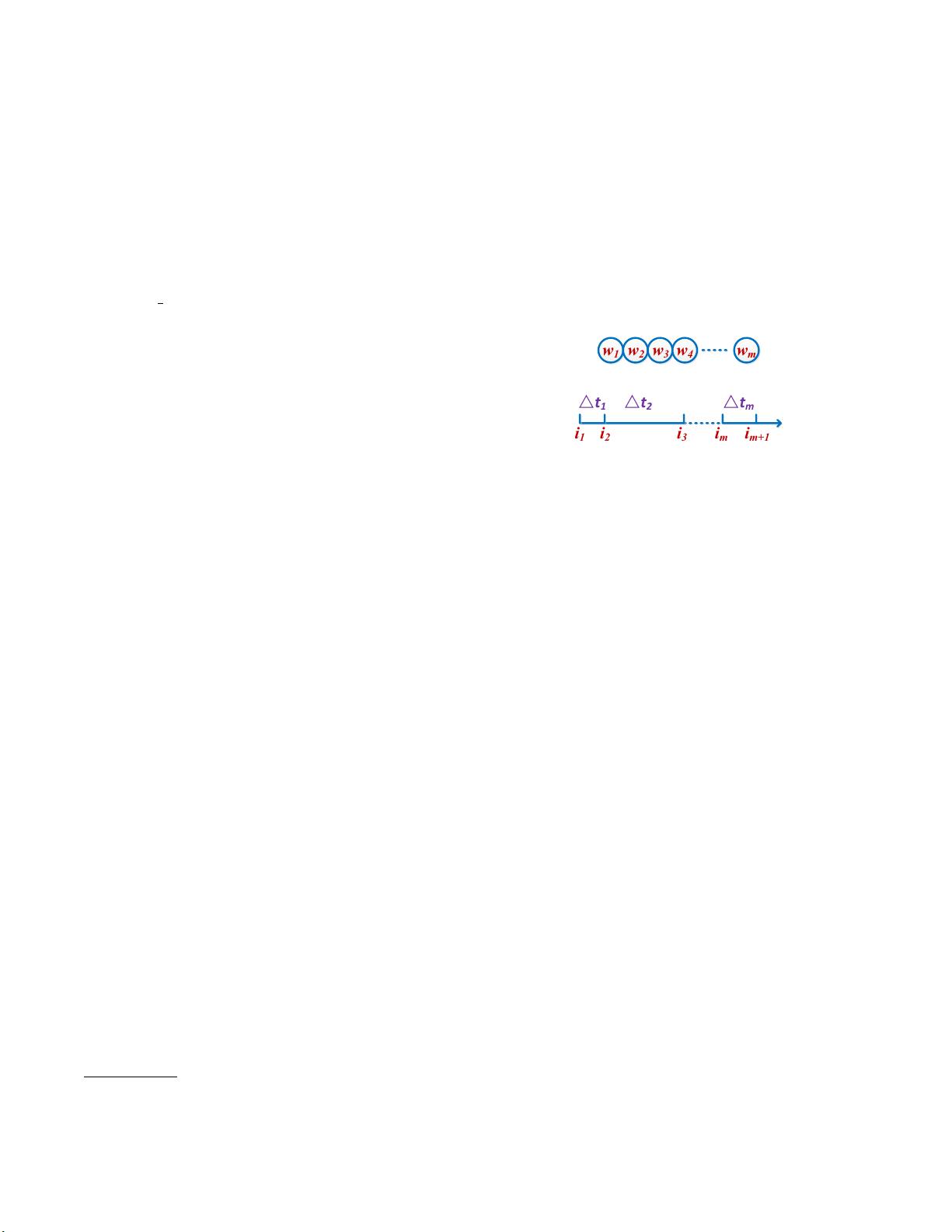

(a) Language Modeling

(b) Recommender Systems

Figure 1: w

m

in (a) represents the m-th word. In (b), i

m

represents

the m-th consumed item and 4t

m

is the time interval between the

time when i

m

and i

m+1

are consumed.

for the next-basket recommendations. The insight that RNN

works well in the above recommendation tasks is that, there

exist some intrinsic patterns in the sequence of users’ actions,

e.g. once a man buys a badminton racket, he tends to buy

some badmintons later, and RNN has been proved to perform

excellently when modeling this type of patterns.

However, none of the above RNN solutions in RS considers the time interval between users’ neighboring actions, although these time intervals are important for capturing the relations between users’ actions, e.g. two actions within a short time tend to be related, while actions separated by a large time interval may aim at different goals. Therefore, it is important to exploit the time information when modeling users’ behaviors, so as to improve the recommendation performance. We use Figure 1 to show what the time interval is and how it makes RS different from traditional domains such as language modeling. Specifically, there is no notion of an interval between neighboring words (e.g. no interval between $w_1$ and $w_2$) in language modeling, while there are time intervals between neighboring actions (e.g. $\triangle t_1$ between $i_1$ and $i_2$) in RS. Traditional RNN architectures are good at modeling the order information of sequential data as in Figure 1 (a), but they cannot model the time intervals in Figure 1 (b) well. Therefore, new models need to be proposed to address this problem.
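To make the notion of a time interval in Figure 1 (b) concrete, the following minimal Python sketch pairs each consumed item $i_m$ with the interval $\triangle t_m$ to the next action. The function name `to_interval_sequence`, the `(item, timestamp)` tuple layout, and the toy history are illustrative assumptions and are not part of the proposed model.

```python
from typing import List, Tuple


def to_interval_sequence(
    actions: List[Tuple[str, float]]
) -> List[Tuple[str, float]]:
    """Pair each item i_m with the interval to the next action.

    `actions` holds (item, timestamp) tuples; the interval for the
    m-th item is t_{m+1} - t_m. The last item has no successor and
    therefore no interval, so it is not included in the result.
    """
    # Sort by timestamp so that neighboring entries are neighboring actions.
    ordered = sorted(actions, key=lambda a: a[1])
    return [
        (item, next_time - time)
        for (item, time), (_, next_time) in zip(ordered, ordered[1:])
    ]


# Hypothetical toy history: (item id, timestamp in seconds).
history = [("racket", 0.0), ("shuttlecock", 120.0), ("novel", 86520.0)]
print(to_interval_sequence(history))
```

In this toy history, the short interval after the racket suggests a related follow-up purchase, whereas the one-day gap before the novel suggests a different goal. A word sequence as in Figure 1 (a) would carry only the order of its elements and no such second component.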

A recently proposed model, i.e. Phased LSTM [Neil et al., 2016], tries to model the time information by adding one time gate to LSTM [Hochreiter and Schmidhuber, 1997], where LSTM is an important ingredient of RNN architectures. In this model, the timestamp is the input of the time gate, which controls the update of the cell state, the hidden state and thus the final output. Meanwhile, only samples lying in the model’s active state are utilized, resulting in sparse updates during training. Thus, Phased LSTM can obtain a rather fast

Proceedings of the Twenty-Sixth International Joint Conference on Artificial Intelligence (IJCAI-17)
