
Medical Image Quality Assessment: The Perspective of ICRU Report 54

"ICRU Report 54:The Assessment of Image Quality"

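ICRU Report 54, "The Assessment of Image Quality", discusses in detail the major advances made in medical imaging over the preceding two decades, seen mainly in the increased capability and growing complexity of imaging systems. The main drivers of this change were the development of computing technology and the widespread use of digital techniques in data acquisition, processing and display. Although every branch of medical imaging has been significantly affected, the most prominent examples are X-ray computed tomography (CT) and magnetic resonance imaging (MRI).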

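The report notes that a consensus is gradually emerging on methods for the quantitative measurement of diagnostic imaging techniques. This rests on the recognition of features common to the different imaging modalities, which allows the limitations of these techniques to be understood within the framework of statistical decision analysis. Such assessment is essential to ensuring that medical imaging is effective and reliable, because it helps identify and reduce the likelihood of misdiagnosis caused by limits on system performance.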

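Image quality assessment covers several aspects, including key measures such as spatial resolution, contrast, noise, signal-to-noise ratio (SNR) and contrast-to-noise ratio (CNR). Spatial resolution measures the ability of an imaging system to resolve the smallest details, while contrast concerns how well brightness differences between regions of the image can be distinguished. Noise is the randomly varying part of the image, which may mask or interfere with the actual anatomical structures. SNR and CNR are important parameters for assessing image quality and diagnostic efficacy: high SNR and CNR mean a clearer image that better supports an accurate diagnosis.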

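The report also stresses the importance of quality control, including regular system calibration, maintenance and performance testing, to ensure that equipment delivers consistent image quality throughout its working life. It further discusses how image processing techniques, such as filtering, noise-reduction algorithms and post-processing, can improve image quality by enhancing the visual presentation of the image.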

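The report also covers legal and notice matters, emphasizing the responsibility of the ICRU, the International Commission on Radiation Units and Measurements, to provide accurate, complete and useful information. The authors of the report take responsibility for the accuracy of its content, while reminding users that, despite efforts to ensure the information is free of error, they must still exercise judgment and apply it in light of their specific circumstances.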

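ICRU Report 54 gives professionals in medical imaging a comprehensive guide to assessing and optimizing image quality, and thereby to improving diagnostic accuracy and the quality of patient care. The report ranges from basic image quality measures to sophisticated statistical decision analysis and is of far-reaching significance for understanding and improving the performance of medical imaging systems.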

... requires measurements of the intrinsic contrast of the feature, such as a lesion, being detected (i.e., the signal), the contrast transfer function of the imaging system, and the noise power spectrum (NPS) at a given imaging condition. These fundamental measurements and their normalization for portability between laboratories will be described in Section 3.2. These measurements are the ingredients required to calculate the SNR that determines the intrinsic detectability of a lesion in a simple phantom, the so-called ideal observer signal-to-noise ratio.

1.4.2 Quasi-Ideal Observers

While the strength of the ideal observer model is that it indicates the best possible performance, its application is usually limited to simple phantoms and decision tasks because of the difficulty of specifying it for more complicated tasks. While sacrificing the ideal nature of the analysis, it is possible to modify this observer to give model decision makers which are applicable to a wider range of tasks and which may, in principle, more closely approximate the performance of human observers. Several such models are reviewed in Section 3.5. A method is also presented for directly measuring the SNRs of these observers without requiring the full set of physical measurements needed to calculate the ideal-observer performance. These observers are based on matched filters and various discriminant functions (e.g., Hotelling/Fisher). They can be used to assess the contributions to the separation of image classes from the combination of random or stochastic noise and the deterministic artifacts that arise in the detection and formation of images. Work is also presented to show how well these models correlate with the performance of a human observer.

1.4.3 Human Observers

While model observers are useful for providing a rapid assessment of performance, they are still limited to relatively simple tasks. To incorporate the complete realm of clinical complexity it is necessary to present images to a human observer and analyze performance by psychophysical techniques. The most rigorous of these, the only one allowing performance to be separated from the observer's bias, is the application of the ROC methodology. This is described in detail in Section 4. In such studies, the observer is asked to perform some visual task, and, through training, develops a decision strategy for doing so. An important aspect of psychophysical studies of image quality is that they can use real clinical images of patients, incorporating the naturally occurring biological variability to arrive at a realistic task.

1.5 Image Quality and the Diagnostic Process

Image assessment is defined here in terms of the performance of well-defined signal detection tasks. Physical performance assessment is often specified in terms of the detection or discrimination of simulated lesions while clinical performance assessment is specified in terms of the detection or discrimination of clinical lesions or other disease states. These tasks are only part of the larger clinical task, or process, that involves the management of the patient and the determination of the patient outcome and its effect on society. These issues come under the broader heading referred to as efficacy. Fryback and Thornbury (1991) have proposed a six-level model of efficacy and this is summarized in Table 1.1. The two lowest levels are the concern of this Report, namely technical efficacy (i.e., physical image performance assessment) and diagnostic accuracy (i.e., clinical image performance assessment).

A major purpose of this Report is to present consensus methodology so that these levels of efficacy may be characterized quantitatively. It is to be expected, furthermore, that the quantification at the first level will serve as a specification of the state of technology that can be used to label or normalize studies at the second level, and ultimately to understand their ranking of imaging systems. Similarly, quantification at both of these lower levels can serve to label or normalize studies at higher levels and contribute toward the understanding of their results. Work at all of these levels, then, represents major efforts towards a quantitative science of medical decision making.

1.6 Outline of the Report

The intention of this Report is to present a framework within which the diagnostic quality of images produced by a variety of clinical imaging devices can be evaluated. It is not proposed to deal with the detail of how this framework can be applied to each modality; although examples are given in Appendix D, this task will be tackled in subsequent reports.

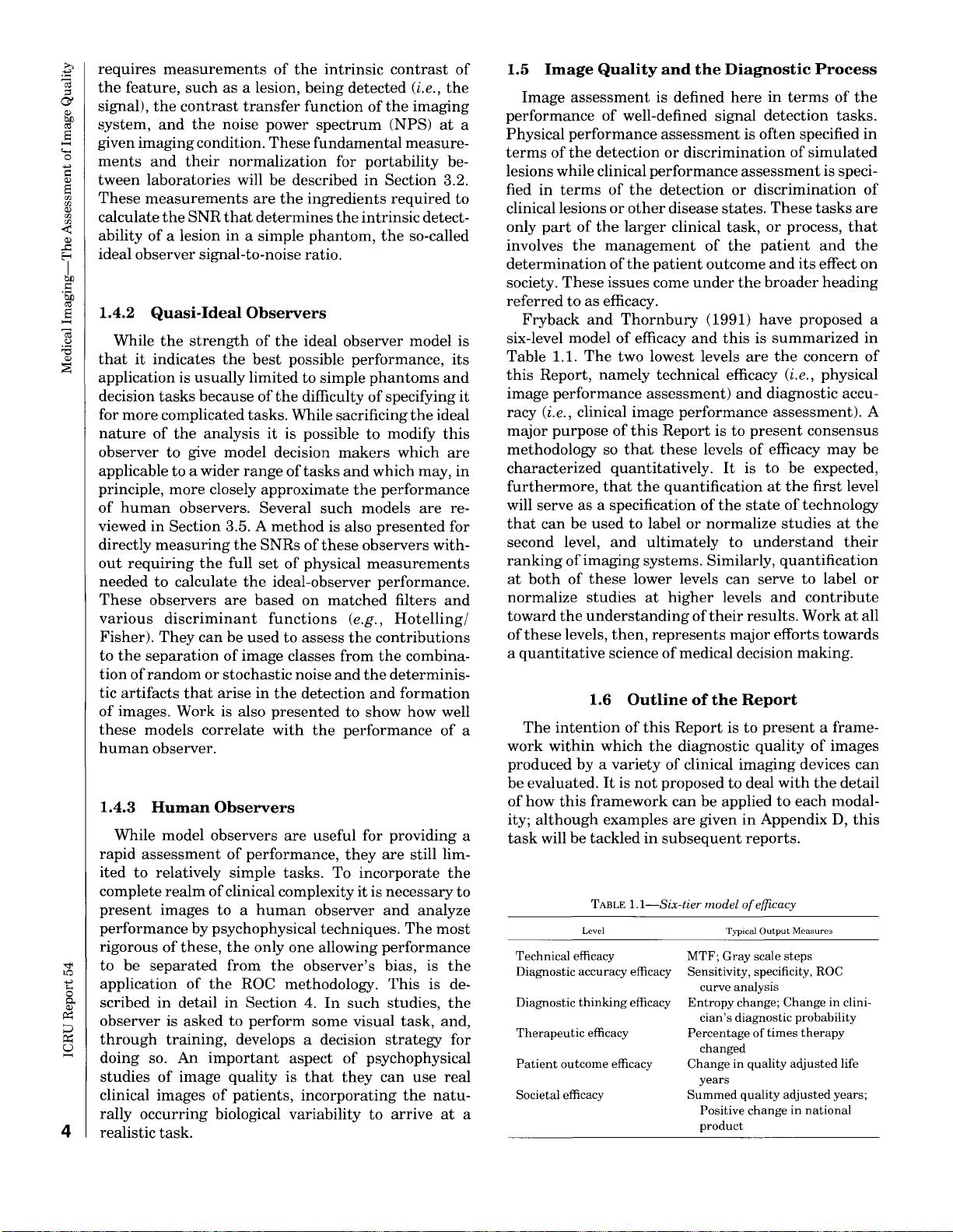

TABLE 1.1. Six-tier model of efficacy

| Level | Typical Output Measures |
|---|---|
| Technical efficacy | MTF; Gray scale steps |
| Diagnostic accuracy efficacy | Sensitivity, specificity, ROC curve analysis |
| Diagnostic thinking efficacy | Entropy change; Change in clinician's diagnostic probability |
| Therapeutic efficacy | Percentage of times therapy changed |
| Patient outcome efficacy | Change in quality adjusted life years |
| Societal efficacy | Summed quality adjusted years; Positive change in national product |

Downloaded from https://academic.oup.com/jicru/article-abstract/os28/1/NP/2924011 by Oxford University Press USA user on 30 October 2018

It is suggested that, using the paradigm of statistical decision theory, the quality can be measured for both the displayed image, as viewed by the human observer, and for the acquired data, that is, the image data prior to display. In both cases, a SNR may be calculated which, for the acquired data, may, in certain circumstances, be the optimum or maximum value achievable. It will be suggested that this general approach provides comprehensive measures of image quality.

For the benefit of the reader who is not conversant with medical imaging, an introduction to the basic principles of commonly used medical imaging techniques is given in Appendix A together with comments on the applicability of the proposed process for measuring quality. Also, in Appendix B, there is a general discussion of the various types of image degradation.

The currently used measures of image quality will be reviewed in Section 2 and the approaches to be recommended in this Report will be given in Sections 3 and 4.

Section 3 describes how the performance of the imaging device may be assessed by measuring the SNR achieved when an ideal or quasi-ideal observer analyzes the acquired data. The mathematical concepts underlying this ideal or Bayesian observer are given in Sections 3.2 and 3.3, and presented more rigorously in Appendix C. Section 3.4 outlines the potential value of the quantity noise equivalent quanta (NEQ) for describing imaging system performance. In Section 3.5, two non-ideal observer models are outlined which may be useful in circumstances where the ideal observer model is not readily calculable, or where a model is required which approximates more closely to human performance. These concepts are developed in mathematical detail in Appendices E, F and G. Finally, in Section 3.8 and Appendix H, a brief consideration is given to the problem of estimation.

Section 4 deals with the assessment of the displayed image by the human observer. Section 4.2.2 describes how ROC curves are generated and Section 4.2.3 defines various indices of performance which can be derived from them. A discussion of different types of test patterns for visual assessment is given in Section 4.4.

General guidance on the practical implementation of these techniques, in a form which can be adopted by the potential user, is given in Section 5. The concluding section emphasizes how the techniques may be developed so as to apply to more sophisticated problems of clinical diagnosis. An alphabetic glossary is provided in Appendix I.

The point has been made that a measure of image quality is meaningful only when related to a particular task. It is the intention of this Report to suggest a framework within which the diagnostic quality of images produced by a variety of clinical imaging devices can be evaluated; any proposals for judging quality must fit into the wider process of clinical efficacy assessment (Fryback and Thornbury, 1991; Thornbury, 1994).


2. Present Approaches to Measuring Image Quality Parameters

2.1 Introduction

A variety of techniques for measuring the performance of imaging systems has evolved. In general, these involve presenting a known input to the imaging system and using the resulting image(s) to assess performance. This assessment may be done subjectively or using any of a wide range of degrees of objectivity. It may involve the participation of human observers or use mathematical models. It may apply to the entire imaging system or only to a part of it.

Clearly, objective measures of image quality are desirable in order to characterize the performance of an imaging system and not simply that of a particular set of observers. On the other hand, even relatively simple interpretation tasks are difficult to model realistically, so it may prove problematical to extrapolate from objective measures of quality to performance in the clinical situation. Subjective methods have the advantage that clinical utility can be assessed more directly, but it is not easy to achieve controlled test conditions and, as a consequence, results may be difficult to interpret. Since, as was noted in the previous section, image quality must relate to a clearly defined image interpretation task, the ability to relate the performance measurement to a task must be a fundamental criterion against which quality assessment methodologies should be judged.

The purpose of this Section is to describe some of the techniques commonly used for the assessment of the performance of imaging systems. These range from simple, subjective ones to the highly sophisticated methods outlined in detail in the following two sections. Deficiencies of the simple methods are noted and an indication of the rationale underlying those to be recommended is given.

2.2 Physical Image Assessment Methods

Physical image assessment is accomplished by measuring certain physical parameters and combining them according to the requirements of a particular imaging task. This may involve using one or more models of observers to calculate performance, such as the ideal Bayesian observer and other (quasi-ideal) observers.

2.2.1 Physical Parameters

There are three kinds of physical parameters which are fundamental to imaging system specification. These are:

1. Large-scale (macro) system transfer function (characteristic curve) which measures the relationship between system input, e.g., exposure quanta, and the output image, e.g., optical density
2. Spatial resolution properties
3. Noise properties

These parameters are required for any serious determination of system performance, but they are largely task independent and, thus, by themselves, do not provide any definitive way of rating or ranking systems. They do, however, serve as the basic means of system specification and as the building blocks for more complete performance appraisal. Note, however, that these tools do not cover image dependent artifacts, such as aliasing due to undersampling. Some of these problems are considered in Section 3.

The large-scale transfer function is a prerequisite for quantitative performance analysis since it provides the relationship between values in the object and those measured in the output image. For many systems this is not a simple linear relationship, but non-linear, such as logarithmic.

Systematic image degradation due to spatial resolution properties can be characterized effectively by observing the response of a system to known, simple objects. In principle, such information can be used to predict the response to more complex objects. The point spread function (PSF), line spread function (LSF) and edge spread function (ESF) are the responses of a system to point, line and step-edge objects, respectively. In each case, a highly localized feature will generally produce a blurred image which defines the degree of spatial correlation. The modulation transfer function (MTF) of a system is defined as its response to a sinusoidal input; it specifies the relative amplitude of the output signal as a function of the spatial frequency of the sinusoid. In general, the response will decrease as the frequency increases. The MTF can be derived simply by computing the two-dimensional Fourier transform of the PSF or, more commonly, by the one-dimensional transform of the line spread function (Metz and Doi, 1979). Issues that must be addressed in calculating and interpreting the transfer functions of digital imaging systems are discussed in Giger and Doi (1984) and Metz (1985).
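The one-dimensional route from LSF to MTF described above can be sketched in a few lines. This is a minimal illustration, not the Report's procedure: the function name `mtf_from_lsf`, the Gaussian LSF, its width and the sampling pitch are all assumptions chosen for the example, and a plain discrete Fourier transform stands in for whatever estimator a real measurement would use.

```python
import cmath
import math

def mtf_from_lsf(lsf, pixel_pitch_mm):
    """Return (frequencies in cycles/mm, MTF values) from a sampled LSF."""
    n = len(lsf)
    area = sum(lsf)  # normalizes the MTF to 1 at zero frequency
    freqs, mtf = [], []
    for k in range(n // 2 + 1):  # non-negative frequencies up to Nyquist
        # Discrete Fourier transform of the LSF at frequency index k
        ft = sum(v * cmath.exp(-2j * math.pi * k * i / n) for i, v in enumerate(lsf))
        freqs.append(k / (n * pixel_pitch_mm))
        mtf.append(abs(ft) / area)
    return freqs, mtf

# Hypothetical Gaussian LSF: sigma = 0.2 mm, sampled every 0.05 mm
pitch = 0.05
sigma = 0.2
xs = [(i - 64) * pitch for i in range(128)]
lsf = [math.exp(-x * x / (2 * sigma * sigma)) for x in xs]
freqs, mtf = mtf_from_lsf(lsf, pitch)
```

For this assumed Gaussian LSF the result should follow the analytic form exp(-2 pi^2 sigma^2 f^2), falling from 1 at zero frequency as the text says the response generally does. Sampling and aliasing effects in real digital systems, which the cited references address, are not modelled here.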

The introduction of noise into an imaging process means that the system is no longer deterministic and that its performance must be analyzed using statistical methods. The simplest measure of output noise is given by the variance of the intensity over the image of a uniform object. This description is, however, incomplete because it does not specify the spatial correlation of the noise. This is an important omission since interactions between the spatial structure of the noise, the structure of the signal and the imaging blur are major causes of irreversible image degradation. The spatial correlation of noise can be fully characterized for most important cases by its Wiener spectrum which measures the noise power as a function of spatial frequency (Giger et al., 1984). The Wiener spectrum is also the Fourier transform of the noise autocorrelation function (see Section 3.2.3).
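The difference between the variance and the Wiener spectrum can be illustrated with a one-dimensional sketch. Everything here is an assumption made for the example: the profiles are simulated rather than measured, the spectrum is estimated as an averaged periodogram, and the names `noise_power_spectrum` and `variance` are hypothetical helpers. A real measurement would work on two-dimensional images of a uniform object.

```python
import cmath
import math
import random

def noise_power_spectrum(profiles, pixel_pitch_mm):
    """Average periodogram of mean-subtracted noise profiles (1-D sketch)."""
    n = len(profiles[0])
    nps = [0.0] * n
    for profile in profiles:
        mean = sum(profile) / n
        dev = [v - mean for v in profile]
        for k in range(n):
            ft = sum(d * cmath.exp(-2j * math.pi * k * i / n) for i, d in enumerate(dev))
            nps[k] += abs(ft) ** 2 * pixel_pitch_mm / n
    return [p / len(profiles) for p in nps]

def variance(profile):
    """Population variance of one profile."""
    mean = sum(profile) / len(profile)
    return sum((v - mean) ** 2 for v in profile) / len(profile)

random.seed(0)
pitch = 0.1  # assumed sampling pitch in mm
n = 64
white = [[random.gauss(0.0, 1.0) for _ in range(n)] for _ in range(20)]
# Correlated noise: 3-point circular moving average of the white noise
smooth = [[(p[i - 1] + p[i] + p[(i + 1) % n]) / 3 for i in range(n)] for p in white]

nps_white = noise_power_spectrum(white, pitch)
nps_smooth = noise_power_spectrum(smooth, pitch)
df = 1.0 / (n * pitch)  # frequency bin width in cycles/mm
```

Summing either spectrum over all frequency bins and multiplying by `df` recovers the average variance of the profiles, so the variance is only the integral of the spectrum. The smoothed noise has its power concentrated at low frequencies, which the single variance figure cannot show.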

2.2.2 Difference Metrics

The most direct method of evaluating image quality, in the sense of fidelity to the original object, is to determine the mean squared error (MSE). This involves computing the average, over the image format, of the square of the difference between the output image of the system and the image that a perfect system would have provided of the same object. This represents the degree of difference between the images from the actual and ideal systems. Mean squared error (which is closely related to the cross-correlation of the two images) and other point difference metrics are superficially attractive, but have limited practical value because they fail to differentiate between quite different forms of degradation. For example, many small differences can give the same value of MSE as one large difference, and a simple uniform contrast or position offset would yield a large value of MSE, but be completely irrelevant to performance on most imaging tasks. This figure of merit is, however, useful for tasks involving estimation.
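The limitation described above is easy to reproduce numerically. The following sketch uses made-up one-dimensional "images" of 100 pixels; the pixel values and the helper name `mean_squared_error` are illustrative assumptions only.

```python
def mean_squared_error(output, reference):
    """Average squared pixel difference between two equally sized images."""
    return sum((o - r) ** 2 for o, r in zip(output, reference)) / len(reference)

reference = [10.0] * 100

# One large local error: a single pixel is off by 10
one_large = reference.copy()
one_large[50] += 10.0

# Many small errors: every pixel is off by +1 or -1, alternating
many_small = [v + (1.0 if i % 2 == 0 else -1.0) for i, v in enumerate(reference)]

# Uniform offset: every pixel is raised by 1, so all contrasts are unchanged
uniform_offset = [v + 1.0 for v in reference]

for name, image in [("one_large", one_large),
                    ("many_small", many_small),
                    ("uniform_offset", uniform_offset)]:
    print(name, mean_squared_error(image, reference))  # each prints 1.0
```

All three degradations give the same MSE of 1.0, although a localized error, fine-grained noise and a harmless overall shift would affect a detection task very differently.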

2.2.3 Statistical Decision Theory

Modern image evaluation methodology has developed from concepts based on statistical decision theory. These concepts arose naturally during the evolution of modern communications engineering, where message transmission and reception with low probability of error is the desired goal. Military and commercial applications abound, and major developments were made in applications to diagnostic medicine and psychology.

Statistical decision theory, and the related field of information theory, find a natural application in the assessment of the performance of imaging systems. These fields address the problem of making the best possible choice among several alternatives when given a certain amount of information. Thus, specifying a task and specifying the capabilities of the observer using the imaging system leads naturally to the question of how the system performs in providing the observer with the information it can use to accomplish the task.

Methods of system assessment based on information theory and those based on statistical decision theory converge to the measures of SNR that are discussed in Section 3. A critical component of this approach is the type of decision maker, or observer model, used and a few general comments concerning this are given below.

2.2.3.1 Ideal Observer Formalism. The ideal Bayesian observer is one who is able to use all the information available in carrying out the imaging task. The ideal observer can correctly account for correlations in the noise and is unaffected by any reversible (information conserving) image processing. It does as well as any observer possibly can do (in a minimum cost or error sense), and its performance can be measured at any stage in the imaging system as a measure of the task-related information transmitted by the system up through that stage.
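For a two-alternative task, the minimum-cost behaviour described above is usually written as a likelihood-ratio test. The notation below is assumed here for illustration and is not taken from the Report: g is the image data, H_0 and H_1 are the two hypotheses (for example, lesion absent and lesion present), P(H_i) are their prior probabilities, and C_ij is the cost of deciding H_i when H_j is true. The Report's own development is in Section 3 and Appendix C.

```latex
\Lambda(\mathbf{g}) \;=\; \frac{p(\mathbf{g}\mid H_1)}{p(\mathbf{g}\mid H_0)}
\;\underset{H_0}{\overset{H_1}{\gtrless}}\;
\frac{P(H_0)\,\bigl(C_{10}-C_{00}\bigr)}{P(H_1)\,\bigl(C_{01}-C_{11}\bigr)}
```

Under an invertible transformation of g the same Jacobian factor appears in the numerator and the denominator and cancels, which is one way to see why such an observer is unaffected by reversible image processing.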

2.2.3.2 Other Observers. For complicated tasks, calculation of the ideal observer performance measure may not be tractable, and other, more easily calculable, models may be required. In addition, the human observer is not ideal, in particular, lacking the ability to account effectively for (or prewhiten) correlations in image noise. For these reasons, various model observers, with characteristics more closely tuned to those of the human observer, are frequently used to infer human performance. Two such observers, the Hotelling observer and the NPWMF, are discussed in Section 3 and Appendices E and F.
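The cost of not prewhitening can be illustrated with the textbook frequency-domain expressions for a known signal in stationary Gaussian noise. These formulas, the function names and the example spectra are assumptions made for this sketch rather than the Report's definitions: `signal` is the amplitude spectrum of the expected difference signal after system blur, `nps` is the noise power spectrum on the same frequency grid, and `df` is the bin width.

```python
import math

def snr_prewhitening(signal, nps, df):
    """SNR of a prewhitening matched filter: sqrt(sum(S^2 / W) * df)."""
    return math.sqrt(sum(s * s / w for s, w in zip(signal, nps)) * df)

def snr_non_prewhitening(signal, nps, df):
    """SNR of a non-prewhitening matched filter:
    sum(S^2) * df / sqrt(sum(S^2 * W) * df)."""
    num = sum(s * s for s in signal) * df
    den = sum(s * s * w for s, w in zip(signal, nps)) * df
    return num / math.sqrt(den)

df = 0.05
freqs = [df * k for k in range(1, 41)]            # hypothetical frequency grid
signal = [math.exp(-f * f) for f in freqs]        # hypothetical Gaussian signal spectrum
white_nps = [1.0] * len(freqs)                    # uncorrelated noise
coloured_nps = [1.0 / (1.0 + f) for f in freqs]   # correlated, low-frequency-weighted noise

for label, nps in [("white", white_nps), ("coloured", coloured_nps)]:
    print(label, snr_prewhitening(signal, nps, df), snr_non_prewhitening(signal, nps, df))
```

With white noise the two values coincide; with correlated noise the non-prewhitening value is the smaller of the two, as the Cauchy-Schwarz inequality guarantees for these expressions.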

2.3 Psychophysical Approaches

Psychophysical approaches to the evaluation of imaging system performance measure the performance of real observers, often on real clinical tasks.

2.3.1 Subjective Assessment of Image Quality

"Subjective" refers to individual human judgement, so in a strict sense all methods employing human observers are subjective. Techniques have been developed, however, to distill quantitative, objective results from human observer studies; the present Section discusses those techniques in which observer preference is the primary element.

Subjective judgement is subject to sources of bias ranging from preference for the aesthetically pleasing to prejudice against the unfamiliar, and thus potentially provides the least reliable assessment of image quality. On the other hand, it may be fast, easy to do and, at least for experienced observers, provide an early indication of the strengths and weaknesses of an imaging system. It is clearly of potential value in two circumstances. Firstly, when no objective method is available, usually because the technology of a new imaging modality or technique is evolving too rapidly to allow a statistically useful sample of images to be collected under controlled conditions. Secondly, it may provide an insight into factors influencing image quality which may be missed by approaches using too rigidly defined study protocols.

One potentially useful approach in such situations involves subjective comparisons of image quality in which the observer's attention is focused systematically upon specific normal or pathological anatomical features in similar views of a particular patient imaged with two modalities (Vucich, 1979). After attending to each feature, the observer is required to report the relative fidelity with which it is demonstrated by the two modalities, using a five- or seven-point rating scale, for example. Although results obtained in this way are inevitably subject to bias and variations in different observers' use of the scale on which impressions are reported, the use of a common patient sample and the act of focusing attention on specific image features may help to guard against gross violations of objectivity.

A second technique involves the observer ranking versions of the same image, differing according to some imaging parameter, using a specific criterion such as image sharpness. Comparison of the rank order produced for many different images allows one to test for particular preferences for images displayed in one certain way. This has, e.g., been applied to the study of the effect of image pixel size on image quality, the ranking criteria being observer preference (Sharp et al., 1982). The development of techniques for "multidimensional scaling" (MDS) may also be of benefit to these rank-order type studies. Given rank ordering of image preference or similarity judgements, MDS techniques determine the number of relevant dimensions that yield the subjective determination of image preference or similarity (Kruskal and Wish, 1978).

2.3.2 Method of Constant Stimulus

Historically, many experiments in the field of psychophysics have used the "method of constant stimulus," in which a sensory signal with constant characteristics is presented to an observer on multiple occasions. After each trial, the observer is required to report whether the signal, which was, in fact, always present, had been "detected." The level of performance achieved by the observer is represented by the fraction of trials in which the observer reports the signal to be detectable.

This experimental paradigm was adopted in psychophysics at a time when most sensory detection processes were believed to be well-represented by "threshold theory," according to which a stimulus is detected if and only if it exceeds a fixed sensory threshold and false positive reports are ascribed to observer error. Beginning in the early 1950s, threshold theory was challenged and eventually supplanted by statistical decision theory in visual detection tasks (Tanner and Swets, 1954). According to statistical decision theory, visual detection involves a trade-off between the frequencies of true positive and false positive reports, with the balance achieved in an experiment depending upon the particular setting of a critical confidence level or "decision criterion" that the observer chooses to adopt. Thus, the observer can produce virtually any detection rate between zero and 100 percent by setting the decision criterion appropriately. From this perspective, experimental results obtained with the method of constant stimulus are compromised severely by the fact that potential effects of the observer's variable decision criterion are not taken into account; in effect, a potentially important source of variation is not controlled.

An apparent advantage of the method of constant stimulus is that it can be used to determine the dependence of detectability upon any physical parameter of the stimulus (e.g., object or imaging system in image-evaluation studies) in a direct and easily understood way. However, the validity of the method's results depends crucially upon the ability of each observer to hold constant the FPF that would be produced if actually negative trials were presented, and to do so across different imaging conditions, a notoriously difficult task. Clearly, the results depend also upon the observer's ability to resist the temptations of "wishful thinking," in which it is imagined that a virtually invisible stimulus is "seen" because it is known that it is present (Levison and Restle, 1968).

In view of these considerations, the method of constant stimulus cannot be recommended generally for the evaluation of image quality.
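The dependence of the detection rate on the decision criterion can be made concrete with a simple model. The sketch below assumes the common equal-variance Gaussian model of the observer's internal response, which the text does not specify: signal-absent responses are taken as N(0, 1), signal-present responses as N(d', 1), and the observer reports "detected" when the response exceeds the criterion. TPF and FPF denote the true positive and false positive fractions; the function names and the value d' = 1 are illustrative.

```python
import math

def normal_cdf(x):
    """Cumulative distribution function of the standard normal."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def operating_point(d_prime, criterion):
    """Return (FPF, TPF) for a given decision criterion."""
    fpf = 1.0 - normal_cdf(criterion)            # signal absent, response > criterion
    tpf = 1.0 - normal_cdf(criterion - d_prime)  # signal present, response > criterion
    return fpf, tpf

d_prime = 1.0  # fixed detectability: the stimulus itself never changes
points = [operating_point(d_prime, c) for c in (-3.0, -1.0, 0.0, 1.0, 2.0, 4.0)]
for fpf, tpf in points:
    print(f"FPF = {fpf:.3f}   TPF = {tpf:.3f}")
```

With the stimulus held fixed, the reported detection rate (TPF) runs from nearly 100 percent to nearly zero as the criterion moves from lax to strict. A constant-stimulus experiment records only the TPF, so it cannot tell a change in detectability from a change in criterion; the FPF needed to separate them is never observed.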

2.3.3 Diagnostic Accuracy

Many investigators have reported the results of medical tests in terms of the overall percentage of correct diagnoses produced by the test in a mixture of actually positive and actually negative cases. The validity of this index (often called "diagnostic accuracy" in the medical literature) is extremely limited, in part because its numerical value depends strongly on the prevalence of actually positive cases; in part because its value depends upon the observer's setting of his critical confidence level; and in part because it does not reveal the balance of false positive and false negative errors, which can have very different clinical consequences (Metz, 1978). It should also be noted that some authors, e.g., Swets and Pickett (1982) and Getty et al. (1988), have used the term "diagnostic accuracy" more generally to indicate disease detection performance as measured by ROC analysis and summarized, e.g., by the area under the ROC curve (A_z) index (see Section 4.2.3).
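The prevalence dependence noted above follows directly from how the percentage of correct diagnoses is composed. In this sketch the sensitivity, specificity and prevalence values are invented for illustration, and `fraction_correct` is a hypothetical helper name.

```python
def fraction_correct(sensitivity, specificity, prevalence):
    """Overall fraction of correct diagnoses in a mix of positive and negative cases."""
    return prevalence * sensitivity + (1.0 - prevalence) * specificity

# A hypothetical useful test versus a "test" that calls every case negative
for prevalence in (0.05, 0.5):
    useful = fraction_correct(0.9, 0.6, prevalence)
    always_negative = fraction_correct(0.0, 1.0, prevalence)
    print(prevalence, round(useful, 3), round(always_negative, 3))
```

At 5 percent prevalence the always-negative rule scores 0.95 against 0.615 for the test that actually finds 90 percent of the disease, while at 50 percent prevalence the order reverses (0.5 against 0.75). The index therefore ranks the same two tests differently depending only on the case mix, and it hides the fact that all of the first rule's errors are false negatives.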
