Before actually rendering a pixel into the frame-buffer for the screen display, inspect the Z-buffer to determine whether a pixel that is closer to the viewer than the current pixel has already been rendered at that location. If the value in the Z-buffer is less than the current pixel’s distance from the viewer, the current pixel is obscured by the closer pixel and you can skip drawing it into the frame-buffer. Otherwise, draw the pixel and store its distance in the Z-buffer so that later pixels are tested against it.
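
The depth test just described can be sketched in a few lines of Java. This is a minimal illustration, not MyJava3D's implementation: the `ZBuffer` class and its `plot` and `colorAt` methods are hypothetical names, depths are assumed to be stored as `float` distances where smaller means closer, and every entry starts at positive infinity so that the first pixel drawn at any location always passes the test.

```java
import java.util.Arrays;

/**
 * Minimal software Z-buffer (hypothetical sketch, not MyJava3D's code).
 * Depths are distances from the viewer: smaller values are closer.
 */
final class ZBuffer {
    private final int width;
    private final int[] frameBuffer; // packed RGB color for each pixel
    private final float[] depth;     // distance of the pixel currently stored

    ZBuffer(int width, int height) {
        this.width = width;
        this.frameBuffer = new int[width * height];
        this.depth = new float[width * height];
        clear(0x000000);
    }

    /** Resets colors to the background and depths to "infinitely far away". */
    void clear(int background) {
        Arrays.fill(frameBuffer, background);
        Arrays.fill(depth, Float.POSITIVE_INFINITY);
    }

    /**
     * Writes the pixel only if it is closer than the one already stored at (x, y).
     * Assumes (x, y) lies inside the buffer. Returns true if the pixel was drawn.
     */
    boolean plot(int x, int y, float z, int color) {
        int i = y * width + x;
        if (depth[i] <= z) {
            return false; // obscured by a closer (or equally close) pixel: skip it
        }
        depth[i] = z;     // remember the new closest distance
        frameBuffer[i] = color;
        return true;
    }

    int colorAt(int x, int y) {
        return frameBuffer[y * width + x];
    }

    public static void main(String[] args) {
        // Draw a far red pixel and a near blue pixel at the same spot, in both orders.
        ZBuffer farFirst = new ZBuffer(4, 4);
        farFirst.plot(1, 1, 10f, 0xFF0000);
        farFirst.plot(1, 1, 2f, 0x0000FF);

        ZBuffer nearFirst = new ZBuffer(4, 4);
        nearFirst.plot(1, 1, 2f, 0x0000FF);
        nearFirst.plot(1, 1, 10f, 0xFF0000); // rejected by the depth test

        System.out.println(Integer.toHexString(farFirst.colorAt(1, 1)));  // ff (blue)
        System.out.println(Integer.toHexString(nearFirst.colorAt(1, 1))); // ff (blue)
    }
}
```

Whichever order the two pixels arrive in, the nearer blue pixel ends up in the frame-buffer. That order independence is what lets a Z-buffer resolve overlapping and intersecting surfaces one pixel at a time.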

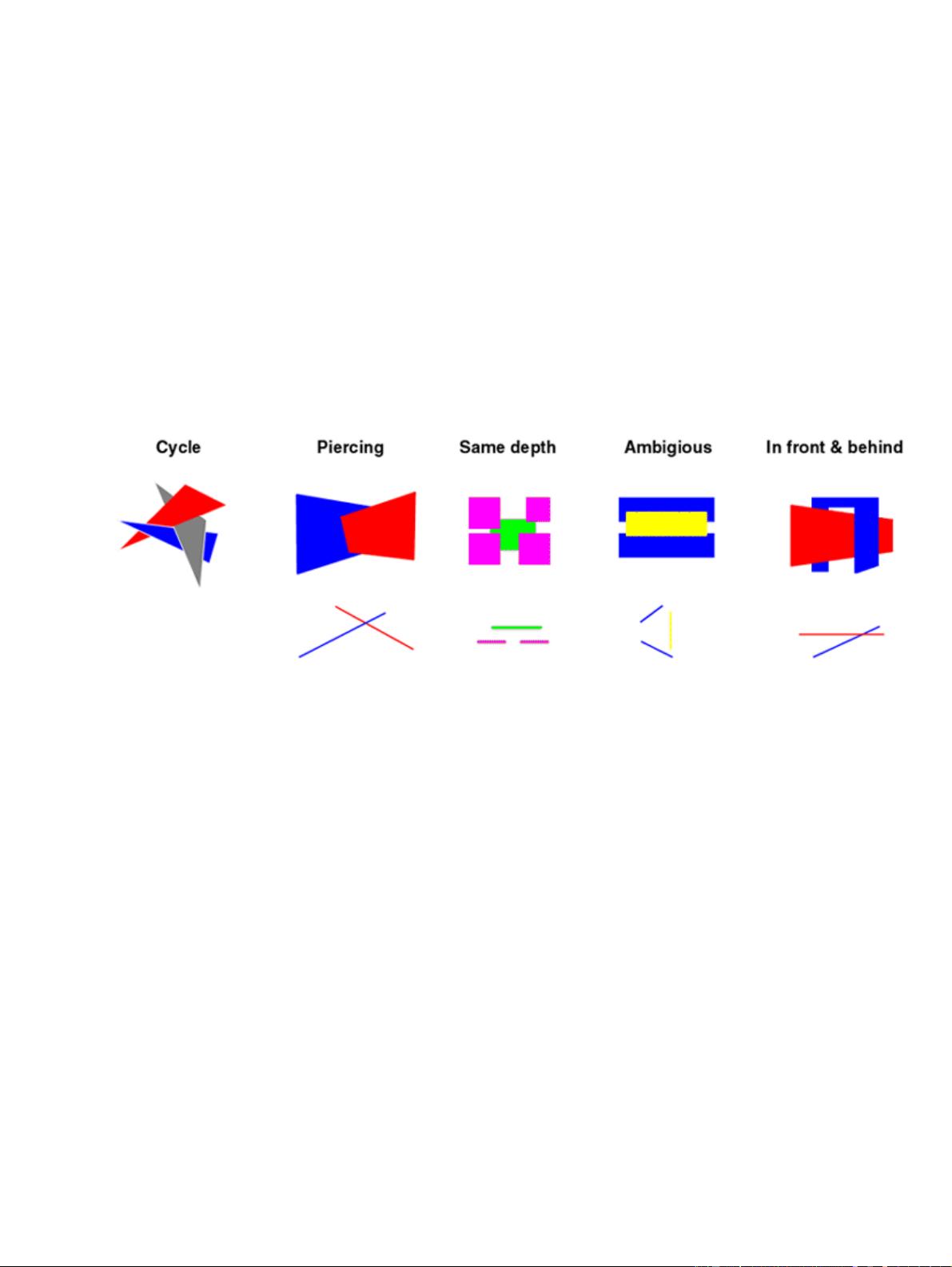

It should be clear that this algorithm is fairly easy to implement as long as you are rendering at the pixel level and can calculate the distance of each pixel from the viewer. The algorithm also has other desirable qualities: it can cope with complex intersecting shapes, and it doesn’t need to split triangles. Because depth testing is performed at the pixel level, it essentially acts as a filter that prevents rendering operations for pixels that have already been obscured.

The computational complexity of the algorithm is also far more manageable, and it scales much better with large numbers of objects in the scene. To its detriment, the algorithm is very memory hungry: when rendering at 1024 × 800 with a 32-bit value for each Z-buffer entry, the Z-buffer alone requires 1024 × 800 × 4 = 3,276,800 bytes, or 3.125 MB. The memory requirement is becoming less problematic, however, as newer video cards (such as the nVidia GeForce II/III) ship with 64 MB of memory.
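
The memory cost is simply width × height × bytes per entry. The hypothetical `ZBufferMemory` helper below takes 1 MB to mean 1,048,576 bytes and counts only the Z-buffer itself; a 32-bit color frame-buffer of the same size needs the same amount again, so the two buffers together take twice the figure printed here.

```java
/** Back-of-the-envelope Z-buffer memory estimate (hypothetical helper). */
final class ZBufferMemory {
    /** Bytes needed to store one depth entry per pixel (bits assumed a multiple of 8). */
    static long bytes(int width, int height, int bitsPerEntry) {
        return (long) width * height * (bitsPerEntry / 8);
    }

    public static void main(String[] args) {
        long b32 = bytes(1024, 800, 32);
        long b16 = bytes(1024, 800, 16);
        // 1 MB is taken here as 1,048,576 bytes.
        System.out.printf("32-bit entries: %d bytes (%.3f MB)%n", b32, b32 / 1048576.0);
        System.out.printf("16-bit entries: %d bytes (%.3f MB)%n", b16, b16 / 1048576.0);
    }
}
```

At 1024 × 800 this gives 3,276,800 bytes (3.125 MB) for 32-bit entries and half that for 16-bit entries, which is one reason cards of the period often fell back to 16-bit depth.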

The Z-buffer is susceptible to problems associated with loss of precision. This is a fairly complex topic, but essentially the Z-buffer has finite precision. Many video cards also use 16-bit Z-buffer entries to conserve video memory, further exacerbating the problem. A 16-bit value can represent 65,536 values, so essentially there are 65,536 depth buckets into which each pixel may be placed. Now imagine a scene where the closest object is 2 meters away and the furthest object is 100,000 meters away. Suddenly having only 65,536 depth values does not seem so attractive: spread evenly, each bucket would span about 1.5 meters, so some pixels that are really at different distances are going to be placed into the same bucket. The precision of the Z-buffer then becomes a problem, and pixels that should have been obscured may be drawn unpredictably. Thirty-two-bit Z-buffer entries will obviously help matters (4,294,967,296 values), but greater precision merely pushes the problem a little further out. In addition, precision within the Z-buffer is not uniform, as this simple description assumes; there is greater precision toward the front of the scene and less precision toward the rear.
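
The "depth bucket" picture can be made concrete with the simplified, evenly spaced model used above. The hypothetical `UniformDepth` helper assumes depths are quantized linearly between the nearest and furthest distances; real perspective depth buffers are not uniform, so treat this only as an illustration of the bucket idea.

```java
/**
 * Idealized, evenly spaced depth buckets (hypothetical helper).
 * Real perspective depth buffers distribute their values non-uniformly.
 */
final class UniformDepth {
    /** Maps a distance in [near, far] to an integer bucket in [0, 2^bits - 1]. */
    static long bucket(double z, double near, double far, int bits) {
        long maxValue = (1L << bits) - 1;
        double t = (z - near) / (far - near); // 0 at the near distance, 1 at the far distance
        return Math.round(t * maxValue);
    }

    /** Depth in meters covered by one bucket under this uniform model. */
    static double bucketWidth(double near, double far, int bits) {
        return (far - near) / ((1L << bits) - 1);
    }

    public static void main(String[] args) {
        double near = 2, far = 100_000; // distances from the example in the text
        System.out.printf("16-bit bucket width: %.3f m%n", bucketWidth(near, far, 16));
        System.out.printf("32-bit bucket width: %.6f m%n", bucketWidth(near, far, 32));
        // Two surfaces half a meter apart: same 16-bit bucket, different 32-bit buckets.
        System.out.println(bucket(1000.0, near, far, 16) == bucket(1000.5, near, far, 16));
        System.out.println(bucket(1000.0, near, far, 32) == bucket(1000.5, near, far, 32));
    }
}
```

With 16 bits each bucket is about 1.526 meters deep, so the two surfaces at 1,000 and 1,000.5 meters become indistinguishable; 32 bits separates them, which is the sense in which more bits only push the problem further out.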

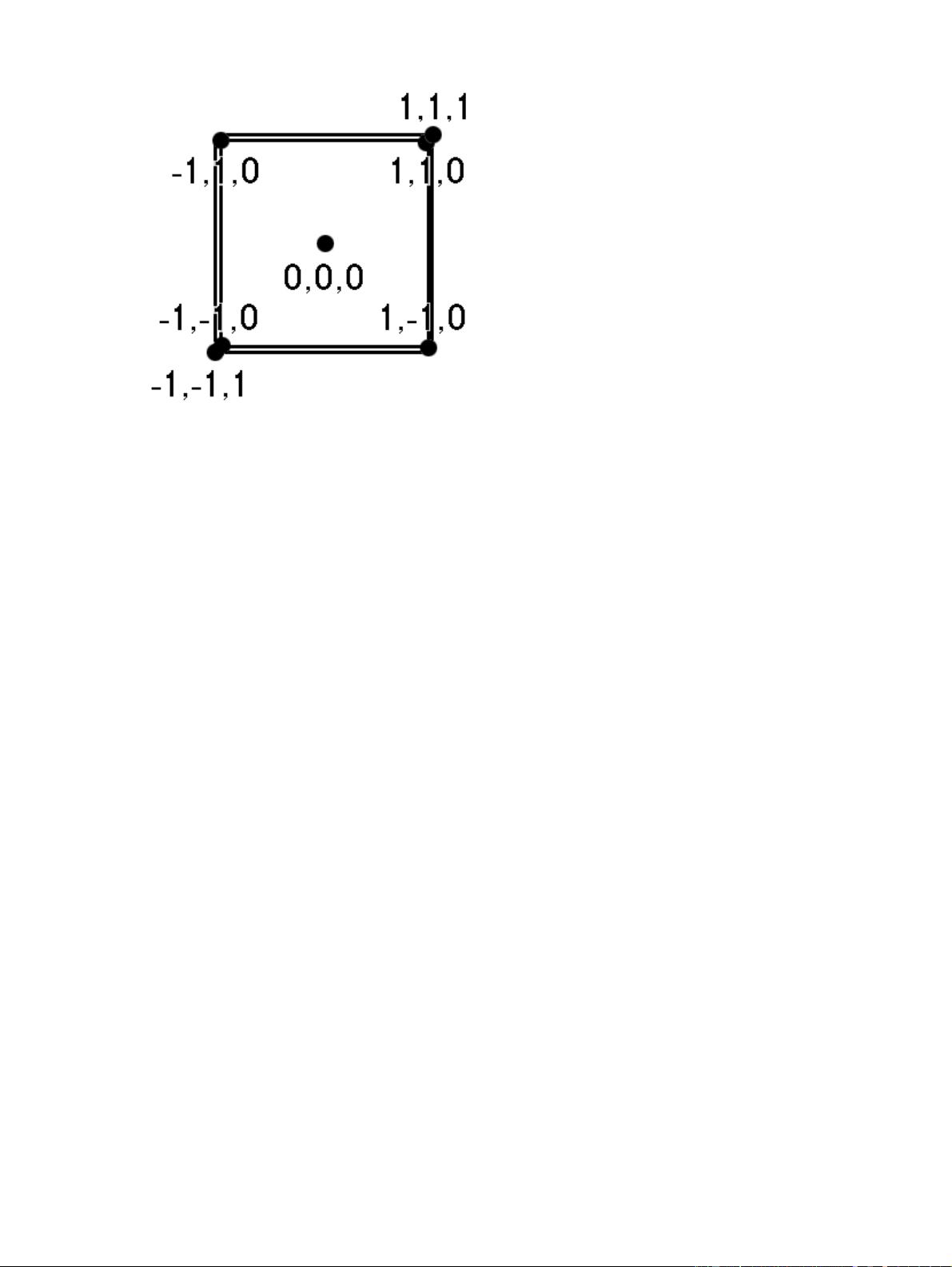

When rendering using a Z-buffer, the rendering system typically requires that you specify a near and a far clipping plane. If the near clipping plane is located at z = 2 and the far plane at z = 10, then only objects between 2 and 10 meters from the viewer will be rendered. A 16-bit Z-buffer would then be quantized into 65,536 values placed between 2 and 10 meters. This gives very high precision and would be fine for most applications. If the far plane were moved out to z = 50,000 meters, however, you would start to run into precision problems, particularly at the back of the visible region.
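
Why the back of the region suffers most can be quantified under one common assumption: an OpenGL-style perspective projection that stores the normalized depth `d(z) = f/(f-n) * (1 - n/z)`, where `n` and `f` are the near and far plane distances. The hypothetical `PerspectiveDepth` helper below uses the slope of that curve to estimate how large a change in distance is needed to move the stored value by one step; other depth encodings would give different numbers.

```java
/**
 * Depth resolution of a perspective depth buffer (hypothetical helper), assuming
 * the OpenGL-style mapping d(z) = f/(f-n) * (1 - n/z) into [0, 1].
 */
final class PerspectiveDepth {
    /** Normalized stored depth for eye distance z: 0 at the near plane, 1 at the far plane. */
    static double depth(double z, double near, double far) {
        return far / (far - near) * (1.0 - near / z);
    }

    /**
     * Approximate distance change (meters) at distance z that moves the stored value
     * by one step of a buffer with the given bit depth, using dd/dz = f*n / ((f-n) * z^2).
     */
    static double resolutionAt(double z, double near, double far, int bits) {
        double step = 1.0 / ((1L << bits) - 1);
        double slope = far * near / ((far - near) * z * z);
        return step / slope;
    }

    public static void main(String[] args) {
        int bits = 16;
        // Near plane at 2 m; far plane at 10 m versus 50,000 m (values from the text).
        for (double far : new double[] {10, 50_000}) {
            System.out.printf("far = %.0f m: resolution %.6f m at the near plane, %.3f m at the far plane%n",
                    far, resolutionAt(2, 2, far, bits), resolutionAt(far, 2, far, bits));
        }
    }
}
```

With the far plane at 10 meters, one 16-bit step at the far plane corresponds to about 0.6 millimeters. With the far plane at 50,000 meters the same step spans roughly 19 kilometers, while the resolution next to the 2 meter near plane stays tiny in both cases.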

In general, the ratio of the far to the near clipping plane distance (far/near) should be kept below 1,000 to avoid loss of precision. You can read a detailed description of the precision issues with the OpenGL depth buffer in the OpenGL FAQ and Troubleshooting Guide (http://www.frii.com/~martz/oglfaq/depthbuffer.htm).
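
The far/near guideline can be turned into a quick check. Assuming an OpenGL-style perspective mapping `d(z) = f/(f-n) * (1 - n/z)`, solving `d(z) = 0.5` shows that half of all depth values are used up by `z = 2fn/(f + n)`, which is close to twice the near distance whenever the far plane is much further away. The `ClipPlanes` helper is hypothetical, and the 1,000 threshold is the rule of thumb quoted in the text, not a hard limit.

```java
/** Rule-of-thumb checks for near/far clipping planes (hypothetical helper). */
final class ClipPlanes {
    /** Guideline from the text: keep far/near below about 1,000. */
    static boolean ratioOk(double near, double far) {
        return far / near < 1000.0;
    }

    /**
     * Distance by which half of all depth values are used, under the assumed
     * mapping d(z) = f/(f-n) * (1 - n/z); solving d(z) = 0.5 gives z = 2fn / (f + n).
     */
    static double halfRangeDistance(double near, double far) {
        return 2.0 * far * near / (far + near);
    }

    public static void main(String[] args) {
        double[][] setups = {{2, 10}, {2, 50_000}, {100, 50_000}};
        for (double[] s : setups) {
            System.out.printf("near=%.0f far=%.0f ratio=%.0f ok=%b half of depth values used by %.2f m%n",
                    s[0], s[1], s[1] / s[0], ratioOk(s[0], s[1]), halfRangeDistance(s[0], s[1]));
        }
    }
}
```

For a near plane at 2 meters and a far plane at 50,000 meters the ratio is 25,000, and half of the depth values are spent on the first 4 meters or so of the scene, which is the front-heavy distribution of precision described earlier.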

2.3 Lighting effects

MyJava3D includes some simple lighting calculations. The lighting equation sets the color of each triangle according to the angle between its surface and the light in the scene. The closer a surface is to being perpendicular to the vector representing a light ray, the more light it receives and the brighter it should appear. MyJava3D includes a single white light and uses the Phong lighting equation to calculate the intensity for each triangle in the model (figure 2.6).
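
The angle-dependent part of this calculation can be sketched as follows. This is not MyJava3D's code and shows only the diffuse (Lambertian) term of the Phong model; the ambient and specular terms are omitted. The `FlatShading` class is hypothetical, the triangle's vertices are assumed to be listed counter-clockwise when seen from the front, and `toLight` is assumed to point from the surface toward the light. The intensity is the cosine of the angle between the surface normal and the light direction: 1 when the surface faces the light head-on, falling to 0 as the light grazes the surface.

```java
/**
 * Diffuse (Lambertian) intensity for one triangle (hypothetical helper).
 * Only the diffuse term of the Phong model; ambient and specular are omitted.
 */
final class FlatShading {
    static double[] sub(double[] a, double[] b) {
        return new double[] {a[0] - b[0], a[1] - b[1], a[2] - b[2]};
    }

    static double[] cross(double[] a, double[] b) {
        return new double[] {
            a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]
        };
    }

    static double dot(double[] a, double[] b) {
        return a[0] * b[0] + a[1] * b[1] + a[2] * b[2];
    }

    static double[] normalize(double[] v) {
        double len = Math.sqrt(dot(v, v));
        return new double[] {v[0] / len, v[1] / len, v[2] / len};
    }

    /**
     * Intensity in [0, 1] for triangle (p0, p1, p2). Assumes counter-clockwise
     * vertex order for the front face and toLight pointing toward the light.
     */
    static double diffuse(double[] p0, double[] p1, double[] p2, double[] toLight) {
        double[] normal = normalize(cross(sub(p1, p0), sub(p2, p0)));
        double cosTheta = dot(normal, normalize(toLight));
        return Math.max(0.0, cosTheta); // surfaces facing away from the light get none
    }

    public static void main(String[] args) {
        double[] a = {0, 0, 0}, b = {1, 0, 0}, c = {0, 1, 0}; // triangle in the z = 0 plane
        System.out.println(diffuse(a, b, c, new double[] {0, 0, 1})); // light along the normal
        System.out.println(diffuse(a, b, c, new double[] {0, 1, 1})); // light at 45 degrees
        System.out.println(diffuse(a, b, c, new double[] {1, 0, 0})); // light grazing the surface
    }
}
```

For a single white light, a flat-shaded renderer could multiply each color channel by this intensity to obtain the shade used to fill the whole triangle.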
