
GPU Cache Model: A Detailed Analysis Based on Reuse Distance Theory

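Updated 2024-07-22 · 40.78 MB PDF

"HPCA 2014 Proceedings: papers from a major conference in computer science and computer architecture, including more than 30 papers on topics such as GPU cache modeling and performance optimization."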

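In computer science, and in computer architecture in particular, HPCA (High-Performance Computer Architecture) is a top-tier conference on par with ISCA and MICRO. It focuses on the latest technical advances and research results. The 2014 HPCA conference published a series of papers. The upload contains only part of them, but it still includes more than 30 papers, among them in-depth work on GPU cache modeling.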

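GPUs (Graphics Processing Units) play a key role in modern computing, especially in high-performance computing and deep learning. GPUs increasingly depend on on-chip memory, so using their caches effectively to improve performance and reduce energy consumption has become critical. The paper "A Detailed GPU Cache Model Based on Reuse Distance Theory" addresses exactly this problem.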

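In CPUs, stack distance (reuse distance) theory is widely used to predict and understand cache behavior. However, GPUs have several distinctive characteristics that make applying the theory directly difficult:

- their parallel execution model and fine-grained multithreading;
- conditional branches and non-uniform latencies;
- cache associativity;
- Miss Status Holding Registers (MSHRs);
- warp divergence.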
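To make the CPU-side theory concrete, consider how the reuse (stack) distance of an access is defined. It is the number of distinct cache lines touched since the previous access to the same line. For a fully-associative LRU cache holding N lines, an access hits exactly when its reuse distance is smaller than N. The minimal Python sketch below computes reuse distances for a trace and applies that rule. It is our own illustration, not code from the paper; the function names and the line-address trace are hypothetical.

```python
from typing import List, Optional


def reuse_distances(trace: List[int]) -> List[Optional[int]]:
    """Reuse (stack) distance of each access in `trace`.

    The distance counts the distinct other lines referenced since the previous
    access to the same line; None marks a first (cold) access.
    """
    stack: List[int] = []  # LRU stack, most recently used line first
    distances: List[Optional[int]] = []
    for line in trace:
        if line in stack:
            depth = stack.index(line)  # number of distinct lines above it
            distances.append(depth)
            stack.pop(depth)
        else:
            distances.append(None)  # compulsory (cold) miss
        stack.insert(0, line)  # the accessed line becomes most recently used
    return distances


def lru_hits(trace: List[int], num_lines: int) -> List[bool]:
    """Hit/miss prediction for a fully-associative LRU cache of `num_lines` lines."""
    return [d is not None and d < num_lines for d in reuse_distances(trace)]


if __name__ == "__main__":
    trace = [0, 1, 2, 0, 3, 1, 0]  # hypothetical cache-line addresses
    print(reuse_distances(trace))        # [None, None, None, 2, None, 3, 2]
    print(lru_hits(trace, num_lines=3))  # [False, False, False, True, False, False, True]
```

A list-based stack keeps the sketch short but costs O(n) per access. Trace-driven tools usually switch to a tree-based structure for long traces.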

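The paper extends reuse distance theory to account for these GPU characteristics:

1. **Thread hierarchy**: the model considers threads, warps, thread blocks, and the set of active threads. These are the basic units of parallel execution on a GPU.

2. **Conditional and non-uniform latencies**: instruction latencies on a GPU can differ, for example because of conditional branches. The model must capture these differences.

3. **Cache associativity**: the associativity of the GPU cache determines which accesses compete for the same cache set. The model must reproduce the resulting behavior.

4. **Miss Status Holding Registers (MSHRs)**: MSHRs come into play when handling cache misses, because they track outstanding concurrent requests. The model must account for their effect.

5. **Warp divergence**: when threads in a warp follow different execution paths, cache behavior changes. The model must be able to represent this.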
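A common way to carry reuse distance over to a set-associative cache works as follows. You split the trace by set index and measure distances only within each set. Under LRU replacement with modulo set indexing, an access hits if and only if its per-set reuse distance is smaller than the associativity. The sketch below illustrates this idea. It is not the paper's exact formulation, and `num_sets` and `ways` are hypothetical parameters.

```python
from collections import defaultdict
from typing import Dict, List


def set_assoc_lru_hits(trace: List[int], num_sets: int, ways: int) -> List[bool]:
    """Predict hits for an LRU cache with `num_sets` sets of `ways` lines each.

    Each line address maps to set (line % num_sets); reuse distance is then
    measured only among accesses that fall into the same set.
    """
    stacks: Dict[int, List[int]] = defaultdict(list)  # one LRU stack per set
    hits: List[bool] = []
    for line in trace:
        stack = stacks[line % num_sets]
        if line in stack:
            depth = stack.index(line)  # per-set reuse distance
            hits.append(depth < ways)
            stack.pop(depth)
        else:
            hits.append(False)  # cold miss
        stack.insert(0, line)
    return hits
```

Consider the trace `[0, 2, 4, 0]` on a 4-line cache. In a fully-associative configuration (`num_sets=1, ways=4`), the last access hits. In a 2-set, 2-way configuration, all four lines map to set 0, so the same access becomes a conflict miss.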

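The authors implemented the model in C++ and extended the Ocelot GPU simulator to validate and demonstrate the applicability of the extended reuse distance theory to GPUs. This work matters for understanding and optimizing GPU performance, especially in the face of the memory wall. With a deeper understanding of GPU cache behavior, developers and researchers can design faster and more energy-efficient GPU applications and architectures.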

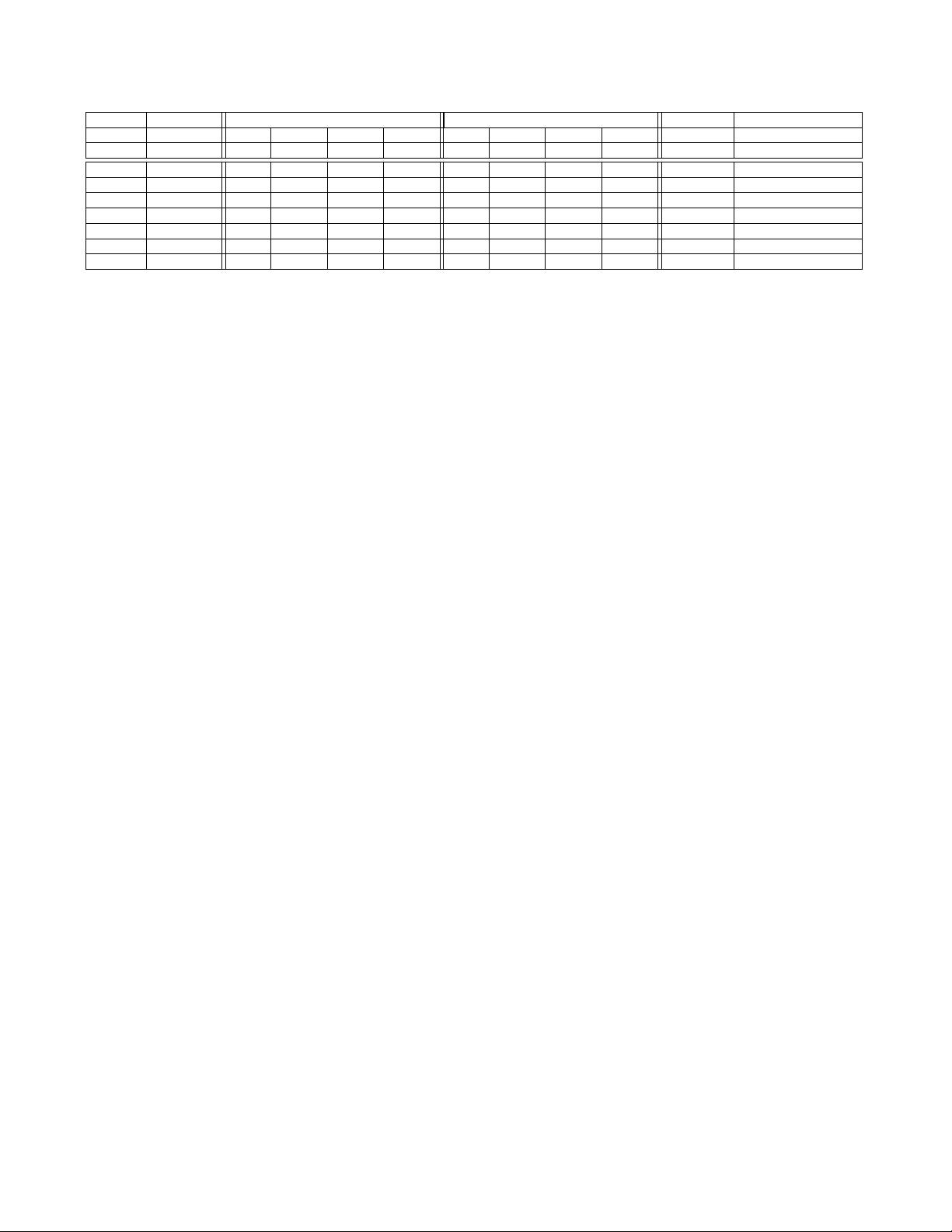

Each permission field is written as S PT / Hyp. R W X / OS R W X / User R W X, where `-` means the bit is clear.

| Rule ID | Requester | Initial permissions | New permissions | Action | Note |
|---|---|---|---|---|---|
| 1 | Hypervisor | `-- / --- / --- / ---` | `** / *** / *** / ***` | None | `*` = don't care |
| 2 | Hypervisor | `** / *** / *** / ***` | `-- / --- / --- / ---` | Wipe Page | `*` = don't care |
| 3 | OS | `-- / --- / --- / ---` | `** / --- / *** / ***` | None | `*` = don't care |
| 4 | OS | `** / --- / *** / ***` | `-- / --- / --- / ---` | Wipe Page | `*` = don't care |
| 5 | Hypervisor | `-- / -W- / --- / ---` | `-- / --X / --- / ---` | None | Hypervisor code page |
| 6 | OS | `-- / --- / -W- / ---` | `-- / --- / --X / ---` | None | OS code page |
| 7 | OS | `-- / --- / --- / -W-` | `-- / --- / --- / --X` | None | User-level code page |

Table 2: Permission Assignment Rules to Mitigate Cross-Layer Attacks Considered in our Threat Model

3.3. PRM and Verification of Permissions

The Permission Reference Monitor (PRM) has two responsibilities. First, it ensures that a given memory access is allowed by the permissions specified for a given physical page. This is only a minor extension of the type of permission check performed by existing hardware.

The second responsibility of the PRM is to ensure that the permissions specified for a given physical page are the ones expected by the requesting memory access. To better understand this, consider a potential attack against the NIMP permission system that may allow a compromised OS to violate the confidentiality of a user-level page.

Assume a page has permissions `- - - | - - - | R W -`, and an application plans to use it to store confidential information. During the application's run time, suppose the OS does two things. First, it returns the page to the null state using rule 4, wiping the page in the process. Then it uses rule 3 to set the page's permissions to `- - - | R - - | R W -`, granting itself read access. The confidential data already on the page was wiped, but any data the application writes afterwards can now be read by the OS.

One solution to this problem would be to simply not permit the OS to alter the permissions of the page, and instead make that the sole responsibility of the application. The problem, however, is that then the OS cannot reclaim memory from a killed or misbehaving application, which is an unacceptable outcome. A better solution is for the application to be able to verify the permissions of the page prior to accessing it. Then, if the permissions have changed, the write should not occur. This check needs to be atomic with the write in order to prevent time-of-check race conditions.

To accomplish this, the instruction set is modified so that memory access instructions can specify which permissions they explicitly do not want other privilege levels to have. This information can be encoded within memory instructions. When a memory instruction is executed, the PRM performs the verification and either allows or denies the request.

4. NIMP Implementation Details

In this section we describe our implementation of NIMP.

4.1. Permission Store

Permissions for each physical page are stored in a special area of memory called the permission store. The permission store is made of individual page permissions stored in a Permission Structure (PS). The PS entry for each physical page specifies the currently active permissions for this page. Separate "read", "write" and "execute" bits are provided for each of the privilege layers (hypervisor, OS and user-level). In addition, the shared (S) and page table (PT) bits are also included. We assume that 2 bytes are needed in memory to store each of the PS entries, with five bits reserved for future use. We include the five reserved bits to make each PS entry byte aligned. Figure 3a shows the PS layout for a single physical page.
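The 2-byte entry holds 11 meaningful bits: S, PT, and R/W/X for each of three layers, with the remaining 5 bits reserved. The exact bit order of Figure 3a cannot be recovered from this text, so the Python sketch below assumes a hypothetical layout. Bit 0 is S, bit 1 is PT, bits 2-10 are Hyp/OS/User R, W, X, and bits 11-15 are reserved as zero. The `PSEntry` class is illustrative only.

```python
from dataclasses import dataclass

LAYERS = ("hyp", "os", "user")
PERMS = ("r", "w", "x")


@dataclass(frozen=True)
class PSEntry:
    """One Permission Structure entry: S, PT and R/W/X bits per layer."""
    s: bool = False
    pt: bool = False
    hyp: str = ""   # subset of "rwx", written in r-w-x order
    os: str = ""
    user: str = ""

    def pack(self) -> int:
        """Encode into 16 bits (hypothetical layout; bits 11-15 reserved = 0)."""
        value = int(self.s) | (int(self.pt) << 1)
        for i, layer in enumerate(LAYERS):
            granted = getattr(self, layer)
            for j, perm in enumerate(PERMS):
                if perm in granted:
                    value |= 1 << (2 + 3 * i + j)
        return value

    @staticmethod
    def unpack(value: int) -> "PSEntry":
        """Decode a 16-bit value produced by pack()."""
        if value >> 11:
            raise ValueError("reserved bits 11-15 must be zero")
        fields = {
            layer: "".join(
                perm for j, perm in enumerate(PERMS)
                if value & (1 << (2 + 3 * i + j))
            )
            for i, layer in enumerate(LAYERS)
        }
        return PSEntry(s=bool(value & 1), pt=bool(value & 2), **fields)
```

Under this layout, the user page `- - - | - - - | R W -` from Section 3.3 is `PSEntry(user="rw")`, which packs to `0x300`.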

The PS entries for each physical page are securely stored in a protected region of memory. Only the hardware in charge of enforcing these new permissions can access it. Neither the OS nor the hypervisor has direct access to this memory, and every request to set up or change the permissions has to go through the NIMP hardware.

Physical memory demand for storing the PS bits is very modest. For example, for a system with 16GB of physical memory and 4KB pages, the PS entries for all pages require only 8MB of memory (2 bytes for each of the 4M pages in the system), which represents 0.05% of the total memory space. Additionally, the PS bits are also cached in the instruction and data TLB entries, just like regular permission bits are cached in traditional systems. Therefore, the access to PS data in memory is only needed following a TLB miss. The PS data is also stored in the regular caches, similar to other system-level data, such as the page tables.
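The storage figure above is easy to re-derive. 16 GiB divided by 4 KiB gives 4Mi pages, and at 2 bytes each that is 8 MiB. 8 MiB out of 16 GiB is about 0.049%, which the text rounds to 0.05%. The small helper below (names are ours) redoes the arithmetic for other configurations, assuming one PS entry per physical page.

```python
KiB, MiB, GiB = 1 << 10, 1 << 20, 1 << 30


def ps_storage_bytes(phys_mem_bytes: int, page_bytes: int, entry_bytes: int = 2) -> int:
    """Bytes of permission store needed for one PS entry per physical page."""
    num_pages = phys_mem_bytes // page_bytes
    return num_pages * entry_bytes


need = ps_storage_bytes(16 * GiB, 4 * KiB)
print(need // MiB, "MiB")                 # 8 MiB
print(f"{100 * need / (16 * GiB):.3f}%")  # 0.049%
```

The fraction is simply `entry_bytes / page_bytes`, so it is independent of memory size. With 2 MiB pages it would shrink by another factor of 512.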

It is important to observe that the permission bits are not modified directly by any software layer. All changes must be approved by the MPM. To facilitate this, we add a new instruction (called PERM_SET) to the ISA. It performs the validity check against the Rule Database and sets up the page permission. This new instruction is described in detail below.

4.2. Memory Permission Manager

In this section, we describe the MPM implementation.

4.2.1. Rule Database and Secure System Boot

To modify

permissions in NIMP, we rely on the use of securely stored

permission modification rules — only the transitions specified

by the rules are allowed, and this is directly controlled by

the MPM hardware. All transitions not specified in the Rule

Database are disallowed. These modification rules are stored

in a dedicated Rule Database (RD), which is located inside a

processor die in a small TCAM structure. Once loaded at boot

time, the contents of the RD never change. Initially, the rules

are stored as part of the system BIOS. At system boot time,

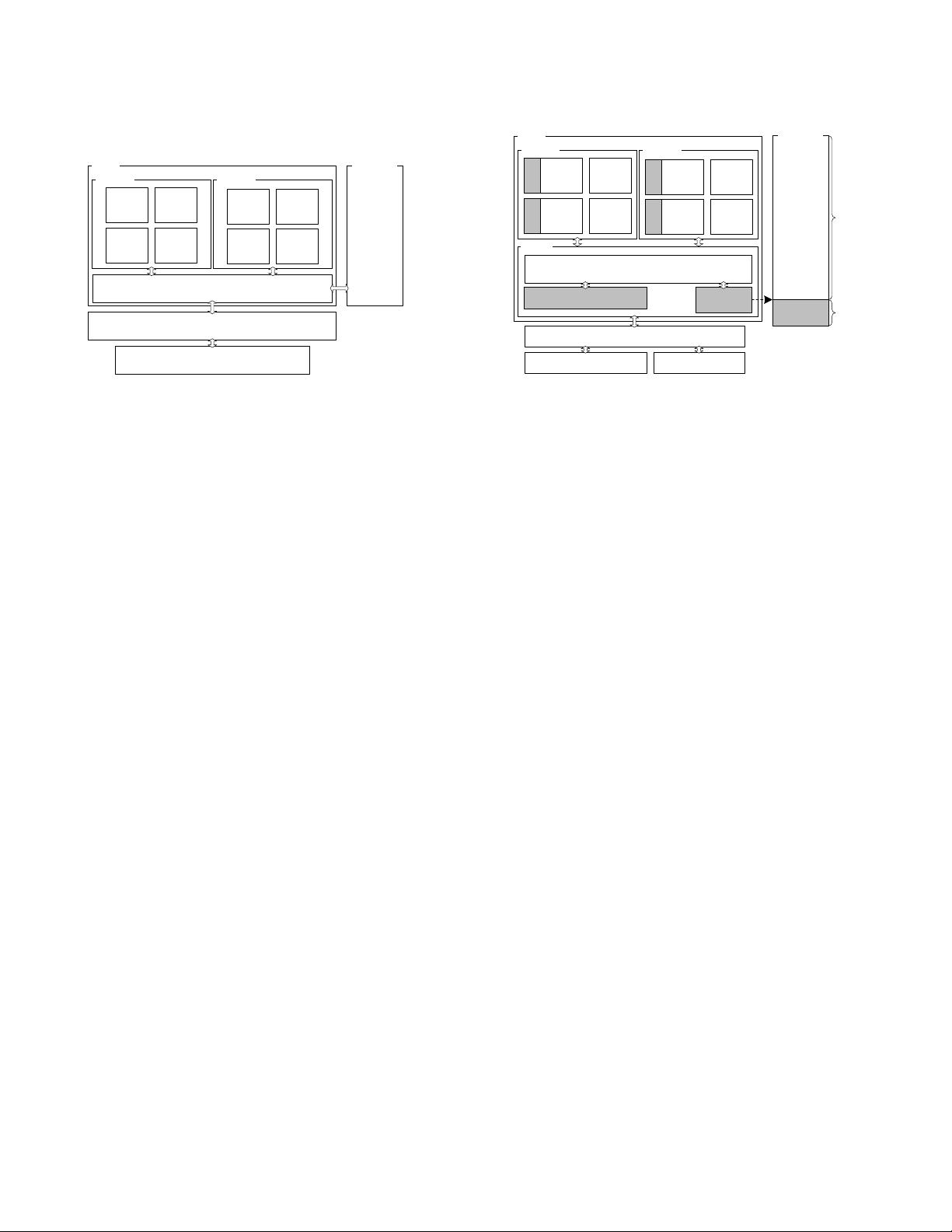

Figure 3: Format of RD and PS Entries

(a) Format of a PS Entry

(b) Format of a RD Entry

New PermissionsCurrent PermissionsRAction

UserOSHyper.

P

T

S

XWRXWRXWR

Reserved

0124 356789101112131415

111128

the integrity of the BIOS is measured by the TPM [

54

] and

then the rules are loaded into the RD.

Each RD entry has the following fields, shown in Figure 3b:

• Current permissions: these store the currently active permissions for a physical page, as specified by its PS entry.

• New permissions: this field has the same format as the current permissions field, but it specifies the requested new permissions. The RD entry dictates whether or not the transition from the current set to the requested set of permissions is allowed.

• The requester of the permission change: this is necessary to distinguish between the hypervisor, the OS kernel and the user-level process. Two bits (we call them the R bits) are needed to differentiate between these three entities.

• Action bits: these specify any special actions needed to be performed on the page for the rule to be upheld, such as zeroing out the page, or encrypting it.

4.2.2. Hardware Support for NIMP

We now describe the hardware support required to realize the MPM. The modified processor is depicted in Figure 4b. First, the processor is augmented with the cache-like structure that implements the RD. The RD is a fully-associative cache implemented as a TCAM, an associatively-addressed memory that supports "don't care" bits in the search key. This takes care of the "don't care" bits in the rules. Its search key is composed of the tuple `<Requester, Current PS, New PS>`. Each RD entry is 4 bytes long. For a system with 7 rules (the rules we use in our evaluation, shown in Table 2), the RD requires 28 bytes of storage plus the logic to implement a fully-associative search in TCAM. We show in the evaluation section that the access delay of such a TCAM is below that of an integer ALU.
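The ternary matching the RD performs can be modeled in software. The Python below is illustrative only. It encodes the seven rules of Table 2 as value/care-mask pairs and assumes the bit order S, PT, Hyp RWX, OS RWX, User RWX (the real hardware layout may differ). It also searches entries one by one, where the TCAM compares all of them in parallel.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

WIDTH = 11  # S, PT, Hyp R/W/X, OS R/W/X, User R/W/X (assumed bit order)


def ternary(pattern: str) -> Tuple[int, int]:
    """Turn a pattern such as '**---******' into a TCAM (value, care-mask) pair.

    '*' = don't care, '-' = bit must be 0, any other character = bit must be 1.
    """
    assert len(pattern) == WIDTH, pattern
    value = mask = 0
    for i, ch in enumerate(pattern):
        if ch != "*":
            mask |= 1 << i
            if ch != "-":
                value |= 1 << i
    return value, mask


def bits(perms: str) -> int:
    """Concrete permissions (no '*') as an integer, e.g. '--------RW-'."""
    value, mask = ternary(perms)
    assert mask == (1 << WIDTH) - 1, "concrete permissions cannot contain '*'"
    return value


@dataclass(frozen=True)
class Rule:
    rule_id: int
    requester: str
    current: str
    new: str
    action: str

    def matches(self, requester: str, cur: int, new: int) -> bool:
        cur_val, cur_mask = ternary(self.current)
        new_val, new_mask = ternary(self.new)
        return (requester == self.requester
                and (cur & cur_mask) == cur_val
                and (new & new_mask) == new_val)


# The seven rules of Table 2.
RULES: List[Rule] = [
    Rule(1, "Hypervisor", "-----------", "***********", "None"),
    Rule(2, "Hypervisor", "***********", "-----------", "Wipe Page"),
    Rule(3, "OS", "-----------", "**---******", "None"),
    Rule(4, "OS", "**---******", "-----------", "Wipe Page"),
    Rule(5, "Hypervisor", "---W-------", "----X------", "None"),
    Rule(6, "OS", "------W----", "-------X---", "None"),
    Rule(7, "OS", "---------W-", "----------X", "None"),
]


def rd_lookup(requester: str, current: str, new: str) -> Optional[Rule]:
    """Return the first matching rule, or None if the transition is disallowed."""
    cur, nxt = bits(current), bits(new)
    for rule in RULES:  # the TCAM would compare every entry at once
        if rule.matches(requester, cur, nxt):
            return rule
    return None
```

The call `rd_lookup("OS", "--------RW-", "-----R--RW-")` returns `None`, because no rule lets the OS grant itself read access on a user page directly. The two-step path from Section 3.3 is accepted, however: rule 4 wipes the page, then rule 3 grants the new permissions. This is the gap that the PRM's permission verification closes.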

In addition, the new hardware includes a 64-bit register (called PS_Base) that points to the beginning address of the PS table stored in memory. This register is protected from all software layers and is securely set up at boot time, along with the initialization of the RD. The index into the PS table to access the permissions associated with a physical page is computed as follows:

$$\text{Index} = \text{PS\_Base} + \text{phys\_page\_number} \times \text{sizeof}(\text{PS})$$
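Evaluated in software, the formula is a single multiply-add. The sketch below makes several assumptions, none of which describe a real system: it uses a hypothetical PS_Base value, 4 KB pages, 2-byte entries (Section 4.1), and little-endian storage of each entry.

```python
PS_ENTRY_SIZE = 2  # bytes per PS entry, as assumed in Section 4.1


def phys_page_number(phys_addr: int, page_size: int = 4096) -> int:
    """Physical page number of a physical address (4 KB pages assumed)."""
    return phys_addr // page_size


def ps_entry_address(ps_base: int, ppn: int) -> int:
    """PS_Base + phys_page_number * sizeof(PS)."""
    return ps_base + ppn * PS_ENTRY_SIZE


def read_ps_entry(memory: bytes, ps_base: int, ppn: int) -> int:
    """Read one PS entry from a simulated memory image (little-endian assumed)."""
    addr = ps_entry_address(ps_base, ppn)
    return int.from_bytes(memory[addr:addr + PS_ENTRY_SIZE], "little")


# Hypothetical example: PS table at 0x8000_0000, access to physical 0x1234_5678.
print(hex(ps_entry_address(0x8000_0000, phys_page_number(0x1234_5678))))  # 0x8002468a
```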

Finally, existing TLB entries (for both instruction and data) need to be augmented with PS entries for each page stored in the TLB. This lets the PS bits be read out directly from the TLB without requiring a memory access on a TLB hit. Existing TLB entries already have 16 bytes (8 bytes each for the cached virtual and physical page numbers). Adding 2 extra bytes of PS data therefore results in an overhead of about 9% in terms of TLB area (peripheral logic is not impacted). Note that the PS bits are added as an extra field to each TLB entry, leaving the traditional protection bits unchanged. If the system uses the traditional way of managing permissions, these permission bits are still available in the TLB and in the page tables and can be used by the processor and the OS.

4.2.3. Initial Page Permission Setup

In a traditional system, a new physical page allocated for processes or virtual machines (VMs) becomes accessible after its physical frame number and protection bits are written into the page tables. In NIMP, the process of initial permission setup is different. We discuss it first for dynamic memory allocation and then for static memory allocation.

When an application requests a dynamic page allocation (for example, through the sbrk system call issued by malloc()), the OS or the hypervisor locates a free physical page and establishes a corresponding page table entry. Once this step has completed, the OS or hypervisor uses the new PERM_SET instruction to assign permissions in a controlled way.

The PERM_SET instruction has the following format:

`PERM_SET <virtual page address, new PS entry>`

The activities triggered by this instruction are as follows. First, it performs an address translation using the virtual page address, TLBs and page tables. Since this page has been recently set up by the OS, the translation is likely to be found in the TLB. Next, the current set of permissions for the corresponding physical page is read from its PS entry. The requester, the current PS entry and the new PS entry specified in the instruction are then combined into a key to search the RD. On a match in the RD rules, the hardware first takes any action specified in the actions field of the matched RD entry, such as zeroing out the physical page. It then sets up the new permission bits both in the TLB and in the protected memory region. If no RD entry matches this transition, the transition is disallowed and a permission violation exception is raised. All these activities are performed by the MPM. The execution of the PERM_SET instruction is illustrated in Figure 5a, and the process of accessing the RD is depicted in Figure 5b.
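The sequence above maps naturally onto a small software model. The Python sketch below is for illustration only, with several simplifications. The `MPM` class, its `perm_set` method and the `toy_rd` rule function are hypothetical. The RD is passed in as a plain function rather than a TCAM, and "Wipe Page" is the only action modeled.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional


class PermissionViolation(Exception):
    """Raised when no Rule Database entry allows the requested transition."""


@dataclass
class MPM:
    """Toy Memory Permission Manager executing PERM_SET (illustrative only)."""
    page_table: Dict[int, int]                           # virtual page -> physical page
    ps_store: Dict[int, int]                             # physical page -> PS entry
    memory: Dict[int, bytearray]                         # physical page -> contents
    rd_lookup: Callable[[str, int, int], Optional[str]]  # returns an action or None
    tlb: Dict[int, tuple] = field(default_factory=dict)  # vpage -> (ppage, PS)

    def perm_set(self, requester: str, vpage: int, new_ps: int) -> None:
        # 1. Translate the virtual page (TLB first, then the page table).
        if vpage in self.tlb:
            ppage = self.tlb[vpage][0]
        else:
            ppage = self.page_table[vpage]
        # 2. Read the current PS entry of the physical page.
        current_ps = self.ps_store.get(ppage, 0)
        # 3. Search the RD with <Requester, Current PS, New PS>.
        action = self.rd_lookup(requester, current_ps, new_ps)
        if action is None:
            raise PermissionViolation(f"{requester}: {current_ps:#x} -> {new_ps:#x}")
        # 4. Perform the rule's action before the new permissions take effect.
        if action == "Wipe Page":
            page = self.memory[ppage]
            page[:] = bytes(len(page))
        # 5. Write the new PS entry to protected memory and to the TLB.
        self.ps_store[ppage] = new_ps
        self.tlb[vpage] = (ppage, new_ps)


def toy_rd(requester: str, cur: int, new: int) -> Optional[str]:
    """Hypothetical stand-in for the RD: the OS may only reset a page to the
    null state (wiping it); every other transition is disallowed."""
    return "Wipe Page" if requester == "OS" and new == 0 else None
```

Ordering the action before the PS write mirrors the text. A page can never carry its new permissions while still holding the old contents.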

For the initial setup of permissions for static memory pages, such as code and static data, a similar approach is used. The steps are:

1. On a system call such as exec() or CreateProcess(), the OS first sets up the necessary amount of memory by creating virtual-to-physical mappings.
2. The OS uses the PERM_SET instruction to assign the initial set of permissions, which allow writing to the page (e.g. by the loader).
3. The OS returns from the system call and lets the loader do its work.
4. Once the loader has finished writing the code and read-only data to these pages, it makes another system call (such as mprotect()) to mark the code pages as executable.
5. The OS uses the PERM_SET instruction again to carry out this operation.

This sequence assumes that the loader is trustworthy, which is why it is part of our TCB.

4.2.4. Permission Changes During Execution

During the normal execution of a system, the permissions of a page may need to be changed, for example to support copy-on-write semantics. Any software layer that needs to change existing page permissions does so through the hardware interface provided by the PERM_SET instruction described above. Regardless of which layer invokes this instruction, it communicates directly with the MPM hardware. Notice that neither the hypervisor nor the OS directly sets the actual PS bits. For this reason, the PERM_SET instruction should not be trappable or emulatable by the hypervisor.

Figure 4: Traditional Hardware vs. NIMP Hardware. (a) Traditional Hardware and System Software; (b) NIMP Hardware and System Software.

We now describe how the various software layers initiate the PERM_SET instruction. To let the OS kernel change page permissions through PERM_SET, the implementation of the paging mechanism is slightly modified to call this new instruction after setting up the page table entry. Note that the existing kernel implementation of managing the protection bits (which stores them as part of the page table entries) can remain intact. When the processor executes in NIMP mode, these page table entry bits can be ignored. Alternatively, they can still be consulted, and the more restrictive of the two permissions (traditional and NIMP) is enforced.
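Enforcing "the more restrictive of the two permissions" amounts to intersecting two bit sets. The minimal sketch below uses a hypothetical 3-bit R/W/X encoding for one layer's rights on one page.

```python
READ, WRITE, EXEC = 0b100, 0b010, 0b001  # hypothetical per-layer encoding


def effective_perms(traditional: int, nimp: int, consult_traditional: bool = True) -> int:
    """Permissions actually enforced for one layer's access to a page.

    With consult_traditional=False the page-table bits are ignored (pure NIMP
    mode); otherwise an access must be allowed by both, so the most
    restrictive combination is the bitwise AND of the two sets.
    """
    return traditional & nimp if consult_traditional else nimp


def allowed(requested: int, traditional: int, nimp: int,
            consult_traditional: bool = True) -> bool:
    """True if every requested permission bit is in the effective set."""
    eff = effective_perms(traditional, nimp, consult_traditional)
    return (requested & eff) == requested
```

For example, with page-table bits R/W and NIMP bits R only, a write is refused when both are consulted. It is allowed only if the OS's page-table bits are ignored and NIMP itself grants W.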

For the hypervisor, the permission changing process is similar to that of the OS kernel described above. Specifically, after the page table entry is set up, PERM_SET is invoked with the appropriate operands. The hypervisor only needs to do this for its own pages, since the pages belonging to the OS or to user-level processes are handled separately, as described above. The addition of the PERM_SET instruction to the paging implementation is the only modification to the hypervisor/OS code required by NIMP.

In the NIMP design, there is currently no distinction between various user-level programs from the standpoint of managing page permissions. In traditional systems, software PIDs managed by the OS play this role. However, the OS is untrusted in our threat model, so we cannot rely on these software PIDs, which a compromised OS can easily forge. In our design, some level of protection is already provided by the S bit, which is part of every PS entry. Specifically, if this bit is not set for a page, the page cannot be mapped in more than one page table at a time, ensuring that it cannot be shared with any other application. It is reasonable to assume that an application needing security for some pages would leave the S bit clear, preventing sharing that could expose critical data.

If a more flexible design is desired, where several applications can securely share data in a controlled fashion, NIMP can easily accommodate it. Hardware-generated PIDs [10, 51] would replace software-maintained ones and be stored as part of the PS entry, somewhat increasing the overhead of the NIMP logic. We leave the detailed quantification of this feature for future work, as this aspect is not central to the NIMP design.
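Beyond the S bit itself, this section does not spell out how the hardware enforces the single-page-table restriction. Section 5.2 notes that mapping changes are observed through writes to PT-marked pages. The Python model below is a hypothetical sketch of just the invariant: a page whose S bit is clear may be mapped by at most one page table at a time. The class and method names are ours.

```python
from collections import defaultdict
from typing import Dict, Set


class SharingError(Exception):
    """A non-shareable page was about to be mapped by a second page table."""


class MappingTracker:
    """Hypothetical model of the S-bit rule for physical pages."""

    def __init__(self) -> None:
        # physical page number -> ids of page tables currently mapping it
        self.mapped_in: Dict[int, Set[int]] = defaultdict(set)

    def map(self, page_table_id: int, ppage: int, s_bit: bool) -> None:
        owners = self.mapped_in[ppage]
        if not s_bit and owners and page_table_id not in owners:
            raise SharingError(f"page {ppage:#x} is not shareable")
        owners.add(page_table_id)

    def unmap(self, page_table_id: int, ppage: int) -> None:
        self.mapped_in[ppage].discard(page_table_id)
```

Remapping the same page into the same page table is tolerated. A second page table is rejected until the first mapping is removed.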

4.3. Permission Reference Monitor

We now describe how the last component of the NIMP system, the Permission Reference Monitor (PRM), is implemented. The PRM enforces the new permissions in hardware, while also allowing lower-privilege software to verify that these permissions have not been tampered with. The PRM's responsibilities are similar to those of a traditional MMU, but some additional actions are also required.

When programs are compiled for the NIMP system, each load and store instruction is augmented with the permission that it expects other software layers to have for this particular section of data or code. This information can be communicated to the PRM hardware in several different ways, depending on the specifics of the instruction set used.

For example, CISC ISAs (such as x86) use variable-length instructions and opcodes, so an additional byte can be added to every memory instruction to specify the permissions of other layers. In this case, 4 bits can be used to check against the permissions of the different privilege layers. Three bits specify the expected permissions of all other layers, and the fourth specifies the expected S bit. We call these the Expected Permission (EP) bits.

Alternatively, if extending instructions is challenging due,

for example, to the memory alignment issues (as would be the

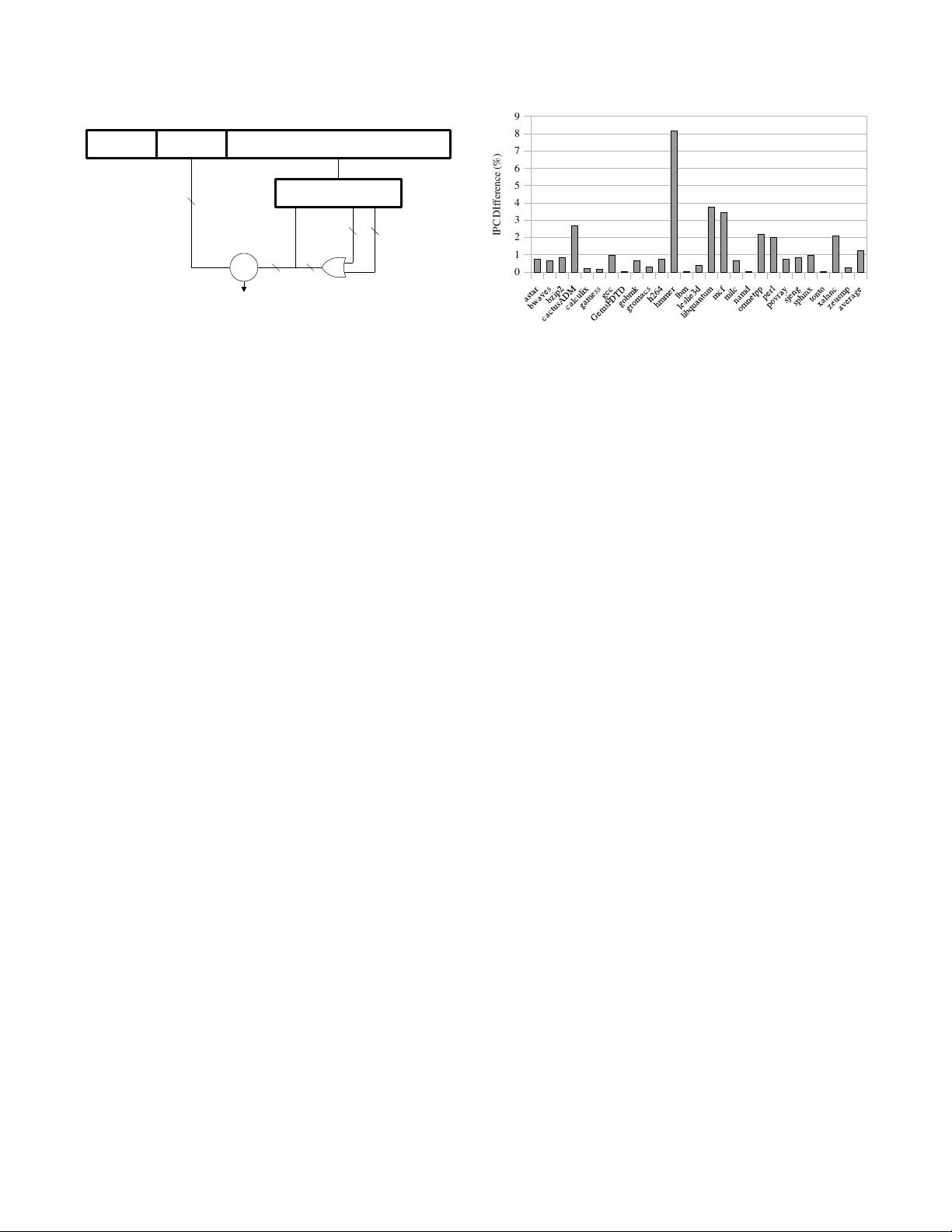

Access

TLB

Access Rule Database

Perform

Action

Exception

Virtual

Address

New

Permissions

Write PS +

TLB

PERM_SET %eax, %ebx

HitMiss

Access Page

Tables

Read PS

Entry

Match

No Match

Current

Permissions

Requester

(Current Privilege Level)

(a) Memory Dataflow Triggered by PERM_SET Instruction

.

.

.

.

.

.

=

=

=

=

Current

Permissions

New

Permissions

Action

11 11

24

8

.

.

.

.

.

.

Current Permission Input New Permission Input

Action

Output

2

Requester Input

Requester

(b) Accessing the Rule Database

Figure 5: Activities Generated by the PERM_SET Instruction

case for fixed-length RISC ISAs) then the EP bits can either

be conveyed through the opcodes (if they are available), or

using compiler annotations, similar to what is used for static

branch prediction.

Regardless of the implementation, a LOAD or STORE instruction is checked when it enters the memory access stage of the pipeline. The PRM unit extracts the EP bits and compares them with the PS bits for the OS/hypervisor access rights. On a match, the memory access is allowed. On a mismatch, the access is not performed and an exception is raised. The exception handling actions depend on the specifics of the mismatch.

Figure 6 shows the details of this process for a user-level verified memory access. The figure assumes that an extra byte in the instruction is used to specify the EP bits. The regular permission check (here, the user-level permission check) is not depicted, since it is similar to traditional checking. Because the loader is trusted in NIMP, the EP bits are as tamper-proof as the rest of the program binary.
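A software model of the comparison in Figure 6 follows. It is a sketch under stated assumptions. The EP byte layout (bits 0-2 for the expected R/W/X of other layers, bit 3 for the expected S bit) is hypothetical. "Match" is interpreted as exact equality: the three expected-permission bits must equal both the OS and hypervisor R/W/X bits, and the expected S bit must equal the page's S bit. The regular user-level check is omitted, as in the figure.

```python
from dataclasses import dataclass


class PRMViolation(Exception):
    """Raised when a load/store's EP bits do not match the page's PS bits."""


@dataclass(frozen=True)
class PageBits:
    """The PS fields the PRM compares against (3-bit R/W/X masks)."""
    s: bool
    hyp: int
    os: int


def encode_ep(expected_rwx: int, expected_s: bool) -> int:
    """Pack EP bits into the extra instruction byte (hypothetical layout)."""
    if not 0 <= expected_rwx <= 0b111:
        raise ValueError("expected_rwx must fit in 3 bits")
    return expected_rwx | (int(expected_s) << 3)


def prm_check(ep_byte: int, page: PageBits) -> None:
    """Allow the access only if the EP bits match the OS, hypervisor and S bits."""
    expected_rwx = ep_byte & 0b111
    expected_s = bool(ep_byte & 0b1000)
    if page.hyp != expected_rwx or page.os != expected_rwx or page.s != expected_s:
        raise PRMViolation(f"EP {ep_byte:#04x} does not match page permissions")
```

Take the Section 3.3 scenario. The application's stores carry EP bits that expect no OS or hypervisor access. Once the OS has re-granted itself read access, the first such store raises `PRMViolation` instead of writing data the OS could read.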

An alternative way to implement permission verification is to augment the context of each process with another register that holds the EP bits that are checked by all memory instructions. In this approach, a new instruction also needs to be added to the ISA to change the contents of this register as the EP bits change. Such a scheme avoids significant changes to the application binaries, but requires more changes to the library code and may have performance implications if the toggling of the new register occurs frequently.

4.4. Other Considerations

To complete the NIMP implementation, a few additional system-level considerations have to be taken into account.

• Secure Context Switches and Interrupts: During context switches and interrupts, the registers of a running entity may be exposed. In order to prevent such an exposure, register contents need to be saved in protected memory, and then wiped before control is transferred to a higher-privilege interrupt handler. The NIMP hardware will then have to be involved in restoring the register state from the protected memory when the process is resumed.

• Secure Page Swapping: NIMP prevents malicious supervisor software from accessing application memory pages. The same functionality, however, also prevents software from reading pages in order to swap them to disk. To support swap-out, the rules can be extended to allow a supervisor to add read permission for itself on a page. The associated action encrypts the page using a key derived from three inputs: a hash of the application's code space, a random nonce generated by the hardware and stored in the application's memory space, and the page's current permissions. The supervisor cannot determine the key without knowing the nonce. Including a hash of the code in the derivation ensures that the page can only be swapped back into the same application. During a swap-in, the encrypted data is read back from disk into memory, the correct permissions are restored to the page, and the hardware performs the decryption.

• Supporting DMA: To handle DMA operations, the NIMP system uses the same set of permissions as those assigned for the software privilege level responsible for initiating DMA requests. It would be possible to assign DMA its own set of permissions under the NIMP framework, but the security benefits of this are not clear.

• Protecting from Multithreaded Attacks: A possible attack avenue exists when a malicious OS spawns a thread that was not requested by the program. In this case, the OS chooses the starting instruction address for that thread. For example, it can make the thread move confidential data to an OS-readable page. To protect against such attacks, the program counter of a newly spawned thread should not be set without the involvement of the application or a user-level library.

Figure 6: Permission Verification by the PRM
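The swap-out key derivation described under Secure Page Swapping can be sketched with standard primitives. The text does not name a key-derivation function. Using HMAC-SHA-256 keyed with the nonce is our assumption, as is the 2-byte encoding of the permissions. The page encryption itself is left out.

```python
import hashlib
import hmac
import os


def swap_key(code_space: bytes, nonce: bytes, permissions: int) -> bytes:
    """Derive a per-page swap key from the code hash, a hardware nonce and the
    page's current permissions (HMAC-SHA-256 as the KDF is an assumption)."""
    code_hash = hashlib.sha256(code_space).digest()
    info = code_hash + permissions.to_bytes(2, "little")
    return hmac.new(nonce, info, hashlib.sha256).digest()


if __name__ == "__main__":
    nonce = os.urandom(32)  # stands in for the hardware-generated nonce
    k_app = swap_key(b"application code", nonce, 0x300)
    k_other = swap_key(b"different code", nonce, 0x300)
    print(k_app != k_other)  # True: another program's code yields another key
```

Binding the code hash into the derivation is what stops a page from being swapped into a different application. A page with different permissions likewise derives a different key.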

5. Attack Mitigation

We now summarize how the new page permissions utilized by NIMP and the supporting infrastructure mitigate the various cross-layer attacks considered in our threat model.

5.1. Mitigating Malicious Supervisor Attacks

To protect against malicious supervisor attacks, some pages can be set up in a way similar to Page 3 shown in the example of Table 1. Such pages are configurable to be readable and writable only by the application layer. Therefore, the compromised supervisor layers will have no access to these pages. Furthermore, the supervisor layers will be incapable of granting themselves permissions for such pages and later accessing these pages with these permissions, because there is no rule in the RD to support such a transition. An attempt to perform such an unspecified page permission change will result in the generation of a security exception by the MPM hardware, as shown in Figure 5a.

5.2. Mitigating Page Remapping Attacks

Page Remapping Attacks can be performed in two styles. In the first attack variation, a target page is remapped within the same address space, but with a different set of permissions. For example, Page 3 shown in Table 1 can be unmapped and then a new page remapped in its place with a new set of permissions that now include the OS or the hypervisor's read/write permissions. While the current data on the page will be wiped out during this process, the new data written to this page by an unsuspecting application would then be accessible to the supervisor layers. The permission verification mechanism described in Section 4.3, however, prevents this attack. In this case, the application would detect the new permissions upon its first attempt to write, as the permission verification would fail.

The second variation of remapping attacks involves remapping a page to a different address space, such as that of another process. To prevent this type of attack, NIMP ensures that when a non-sharable page is unmapped, its contents are zeroed out before a new mapping can be established. Mapping and unmapping events are detected when writes occur to pages marked with the PT bit, which is stored in each PS entry.

Figure 7: IPC Overhead of Caching Permissions (y-axis: IPC difference, %)

5.3. Mitigating Memory Escalation Attacks

In current systems, these attacks exploit the fact that a page marked as executable by a user-level application can also be executed in a hypervisor/OS context. Under NIMP, Rules 1, 2, 3 and 4 in Table 2 can be used to create a page where a higher privilege level has "execute" permission while a lower privilege level has "write" permission. Such a page would create an environment for these attacks. However, this combination of permissions is only possible when the victim layer itself gives the "write" permission to the lower-privileged layer that initiates the attack. The attacking (lower-privileged) layer cannot set up the "execute" permission for a higher-privileged layer.

6. Performance and Complexity Evaluation of NIMP

In this section we evaluate the performance impact and hardware complexity of NIMP.

6.1. Performance Evaluation

NIMP has two main sources of performance overhead, both caused by additional actions this architecture must perform for address translations and memory accesses. First, the permission bits must be accessed, and they are stored separately from the regular page tables. These bits are cached inside the regular TLBs, so they do not affect memory access latency on TLB hits. On a TLB miss, however, an additional memory access is required after the page table walk. Second, some of the new permission changing rules require the hardware to zero out the corresponding physical pages before the new permission settings take effect, which also adds execution cycles.

For estimating the impact of the extra delays due to accessing the new permission bits, we used MARSSx86 [1], a full-system x86-64 simulator. We modified the TLB miss handling code to perform the regular cache lookups and replacements for the PS data to estimate the impact of PS data on the number of cycles needed to execute applications. Since the permission bits are also stored in the cache hierarchy (and therefore, a DRAM access is not always needed to access them), we accurately simulated this impact as well. Our processor configuration is shown in Table 3.
