274 CHINESE OPTICS LETTERS / Vol. 5, No. 5 / May 10, 2007

Feature-based fusion of infrared and visible dynamic images using target detection

Congyi Liu¹, Zhongliang Jing², Gang Xiao², and Bo Yang¹

¹School of Electronic Information and Electrical Engineering, Shanghai Jiao Tong University, Shanghai 200030

²Institute of Aerospace Science & Technology, Shanghai Jiao Tong University, Shanghai 200030

Received September 26, 2006

We employ target detection to improve the performance of feature-based fusion of infrared and visible dynamic images, which forms a novel fusion scheme. First, target detection is used to segment the source image sequences into target and background regions. Then, the dual-tree complex wavelet transform (DT-CWT) is used to decompose all the source image sequences. Different fusion rules are applied in the target and background regions to preserve as much target information as possible. Real-world infrared and visible image sequences are used to validate the proposed scheme. Compared with previous approaches to image sequence fusion, the scheme improves shift invariance, temporal stability and consistency, and computation cost.

OCIS codes: 100.2000, 100.2980, 100.7410, 110.3080.

Multi-source image fusion techniques originated in the military field, which has also been the main driver of their development. Battlefield sensing technology built on multi-source image fusion has become one of the most important advanced military technologies, covering target detection, tracking, recognition, and scene awareness.

Image fusion must satisfy the following requirements [1]: preserve all relevant information (as much as possible) in the composite image; do not introduce any artifacts or inconsistencies; be shift and rotation invariant; and be temporally stable and consistent. The latter two requirements are especially important in the fusion of dynamic images (or image sequences).
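As a small illustration of why shift invariance is listed as a requirement, the sketch below computes the detail coefficients of a single-level decimated Haar transform for a step edge and for the same edge shifted by one sample. The Haar filter, the test signals, and the function name `haar_detail` are assumptions chosen for illustration; they are not taken from the paper.

```python
import numpy as np

def haar_detail(x):
    """Single-level decimated Haar detail coefficients of an even-length 1-D signal."""
    x = np.asarray(x, dtype=float)
    # Difference of each non-overlapping sample pair, scaled for orthonormality.
    return (x[0::2] - x[1::2]) / np.sqrt(2.0)

# Hypothetical test signals: the same step edge at two positions one sample apart.
step = np.array([0, 0, 0, 0, 1, 1, 1, 1], dtype=float)     # edge aligned with a pair boundary
shifted = np.array([0, 0, 0, 0, 0, 1, 1, 1], dtype=float)  # edge falls inside a pair

# Energy of the detail band for each signal.
energy_step = float(np.sum(haar_detail(step) ** 2))
energy_shifted = float(np.sum(haar_detail(shifted) ** 2))
```

Here the detail-band energy is zero for the first signal and about 0.5 for the shifted one, although the underlying edge is identical. In a sequence where a target moves from frame to frame, this kind of variation in the coefficients can show up as flicker in the fused output.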

Image fusion can be performed at different levels of information representation, classified in ascending order of abstraction: signal, pixel, feature, and symbol levels [2]. Pixel-based fusion of static images has been researched extensively [3,4]. However, few researchers have worked on the fusion of dynamic images (or image sequences), and those who have focused only on pixel-based fusion. In this paper, we study feature-based fusion of image sequences using region target detection. It yields both qualitative and quantitative improvements over pixel-level methods: because more intelligent semantic fusion rules can be defined on actual features, the target information can be preserved as much as possible.

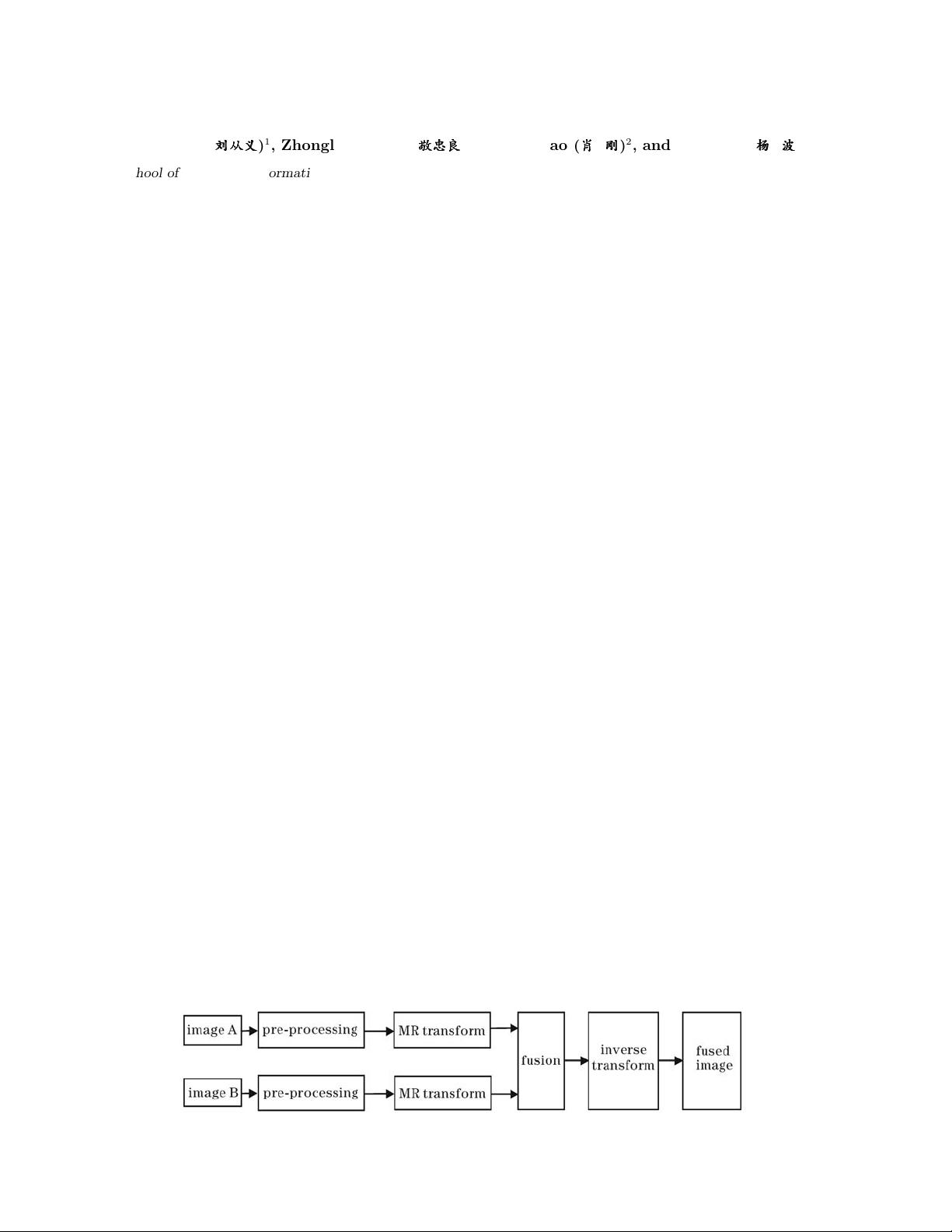

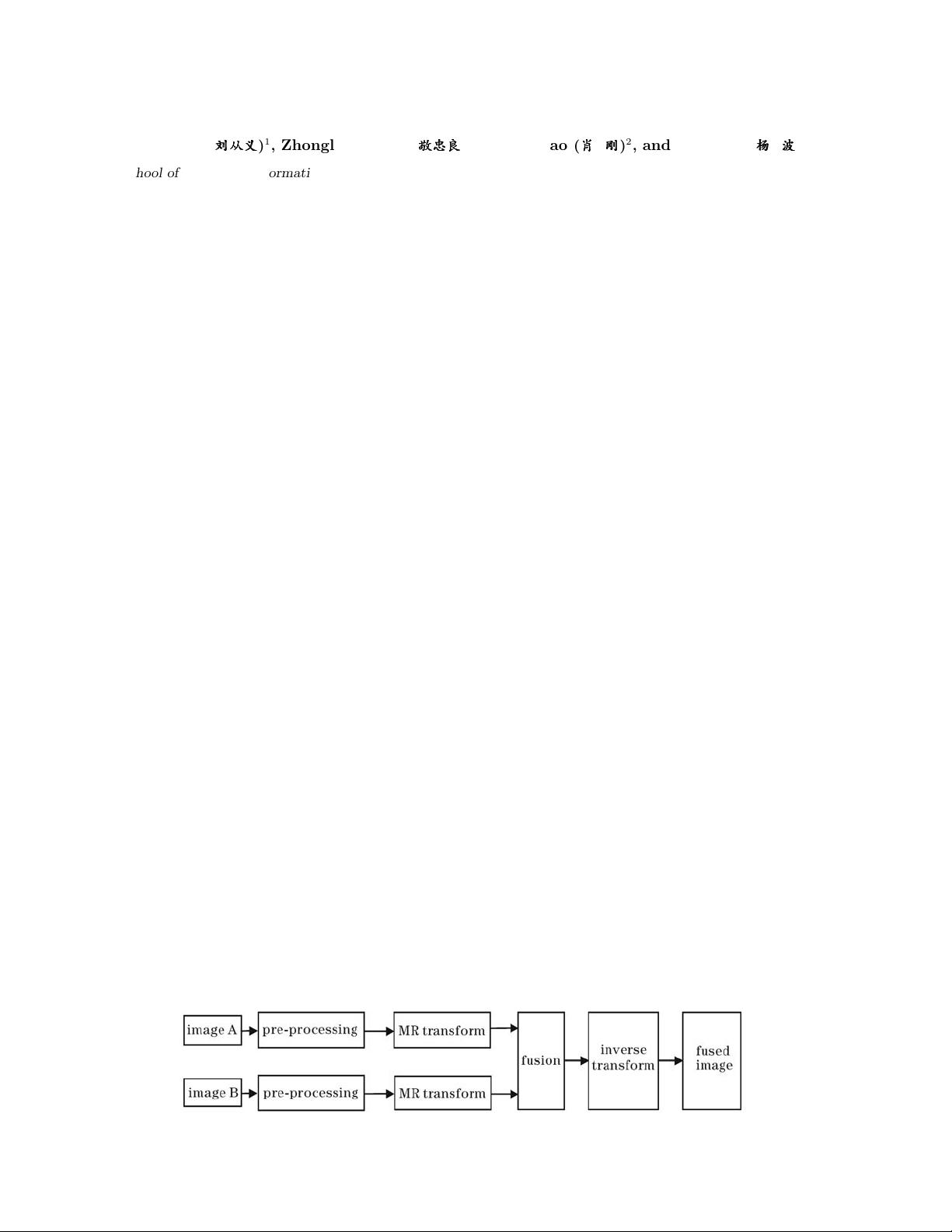

Figure 1 shows the generic pixel-based image fusion method, which can be divided into three steps. First, all source images are decomposed using a multi-resolution (MR) method, such as the discrete wavelet transform (DWT) [5] or discrete wavelet frames (DWF) [1,2,6]. Then, the decomposition coefficients are fused by applying a fusion rule, which can be a point-based maximum selection (MS) rule or a more sophisticated area-based rule. Finally, the fused image is reconstructed using the corresponding inverse transform.
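The three steps above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: it assumes a single-level 2-D Haar DWT as the MR method, applies the MS rule (largest absolute value) to the detail bands, and averages the approximation band, which is a common choice but an assumption here. The function names `haar_dwt2`, `haar_idwt2`, and `fuse_ms` are hypothetical.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)

def haar_dwt2(img):
    """Single-level 2-D Haar DWT. Returns (LL, LH, HL, HH); both image sides must be even."""
    a = np.asarray(img, dtype=float)
    # Filter and decimate along the column axis.
    lo = (a[:, 0::2] + a[:, 1::2]) / SQRT2
    hi = (a[:, 0::2] - a[:, 1::2]) / SQRT2
    # Filter and decimate along the row axis.
    ll = (lo[0::2, :] + lo[1::2, :]) / SQRT2
    lh = (lo[0::2, :] - lo[1::2, :]) / SQRT2
    hl = (hi[0::2, :] + hi[1::2, :]) / SQRT2
    hh = (hi[0::2, :] - hi[1::2, :]) / SQRT2
    return ll, lh, hl, hh

def haar_idwt2(ll, lh, hl, hh):
    """Inverse of haar_dwt2 (perfect reconstruction)."""
    rows, cols = ll.shape
    lo = np.empty((2 * rows, cols))
    hi = np.empty((2 * rows, cols))
    # Undo the row-axis step.
    lo[0::2, :] = (ll + lh) / SQRT2
    lo[1::2, :] = (ll - lh) / SQRT2
    hi[0::2, :] = (hl + hh) / SQRT2
    hi[1::2, :] = (hl - hh) / SQRT2
    # Undo the column-axis step.
    out = np.empty((2 * rows, 2 * cols))
    out[:, 0::2] = (lo + hi) / SQRT2
    out[:, 1::2] = (lo - hi) / SQRT2
    return out

def fuse_ms(img_a, img_b):
    """Decompose, fuse with the point-based MS rule, and reconstruct."""
    ca, cb = haar_dwt2(img_a), haar_dwt2(img_b)
    # Approximation band: simple average (an assumption, not stated in the text).
    fused = [(ca[0] + cb[0]) / 2.0]
    # Detail bands: keep the coefficient with the larger magnitude at each position.
    for da, db in zip(ca[1:], cb[1:]):
        fused.append(np.where(np.abs(da) >= np.abs(db), da, db))
    return haar_idwt2(*fused)
```

Calling `fuse_ms(ir_frame, visible_frame)` on two registered, equally sized grayscale arrays returns a fused array of the same shape. An area-based rule would replace the per-coefficient comparison with a comparison of an activity measure computed over a small window.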

For pixel-based approaches, the MR decomposition coefficients are treated independently (MS rule) or filtered by a small fixed window (area-based rule). However, most applications of a fusion scheme are interested in features within the image, not the actual pixels. Therefore, it seems reasonable to incorporate feature information into the fusion process. Indeed, a number of feature-level fusion schemes have been proposed. However, most of them are designed for static image fusion, and when applied to image sequences they process every frame of each source sequence individually. These methods do not take full advantage of the wealth of inter-frame information within the source sequences. Because adjacent frames in an image sequence change only slightly, the information from the previous frame can be used to guide the processing of the current frame. This not only increases the processing speed but also makes full use of the abundant inter-frame information.
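One way to picture how the previous frame can guide the current one is to restrict the target search to a window around the previous detection. The sketch below is only an illustration under stated assumptions: the detector is a plain intensity threshold standing in for whatever target detection method is actually used, and the function name `detect_target`, its parameters, and the `margin` value are hypothetical.

```python
import numpy as np

def detect_target(frame, threshold, prev_box=None, margin=4):
    """Return a half-open bounding box (r0, r1, c0, c1) of pixels above threshold, or None.

    If prev_box is given, only a window around it (padded by margin) is searched,
    which is cheaper than scanning the whole frame.
    """
    frame = np.asarray(frame)
    h, w = frame.shape
    if prev_box is None:
        r_lo, r_hi, c_lo, c_hi = 0, h, 0, w
    else:
        r0, r1, c0, c1 = prev_box
        r_lo, r_hi = max(r0 - margin, 0), min(r1 + margin, h)
        c_lo, c_hi = max(c0 - margin, 0), min(c1 + margin, w)

    window = frame[r_lo:r_hi, c_lo:c_hi]
    rows, cols = np.nonzero(window > threshold)
    if rows.size == 0:
        # Target not found near the previous position: fall back to a full-frame search.
        if prev_box is not None:
            return detect_target(frame, threshold, None, margin)
        return None
    return (int(r_lo + rows.min()), int(r_lo + rows.max()) + 1,
            int(c_lo + cols.min()), int(c_lo + cols.max()) + 1)

# Hypothetical two-frame sequence with a small bright target that moves slightly.
frame0 = np.zeros((32, 32))
frame0[10:14, 10:14] = 1.0
frame1 = np.zeros((32, 32))
frame1[11:15, 12:16] = 1.0

box0 = detect_target(frame0, 0.5)                 # full-frame search
box1 = detect_target(frame1, 0.5, prev_box=box0)  # search only near box0
```

The resulting boxes can then be turned into a target/background mask for each frame. The fallback to a full-frame search is a design choice of this sketch, so that a fast-moving target is not lost when it leaves the padded window.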

In this paper, we propose a novel scheme for feature-based fusion of infrared (IR) and visible image sequences using target detection, as shown in Fig. 2, where the target detection (TD) technique is introduced to segment target regions intelligently. For convenience, we assume

Fig. 1. Pixel-based image fusion scheme.

1671-7694/2007/050274-04 © 2007 Chinese Optics Letters