Cisco 1240AG Wireless AP Configuration Guide: Quick Start and Detailed Setup

Updated 2024-07-05

667 KB PDF

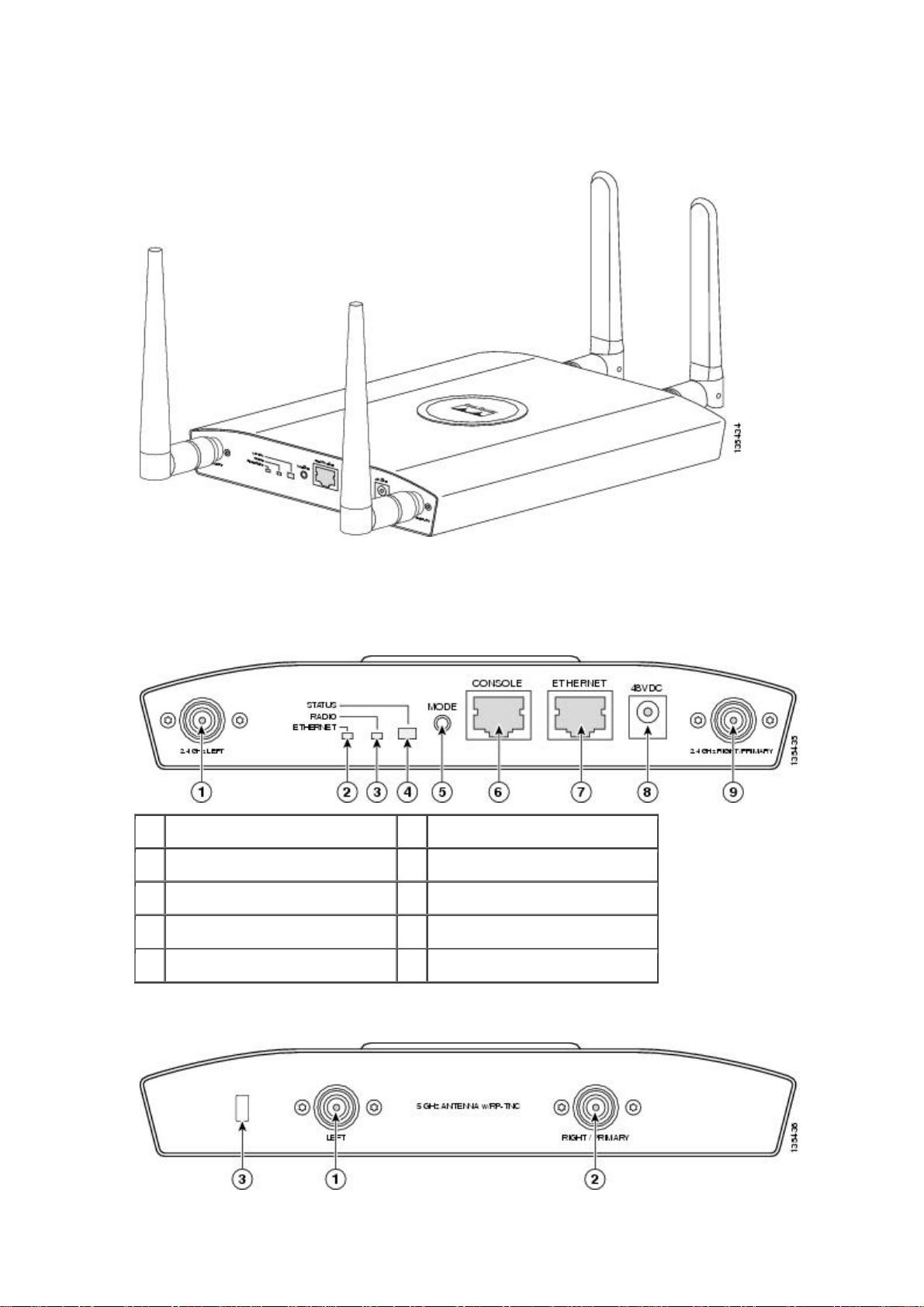

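The Cisco WLN00066 Wireless AP 1240AG Quick Configuration Manual is a detailed guide translated by Han Xiaochen (韩啸晨) and proofread by Ye Jun (叶俊), completed on November 30, 2008. It is based on the English original, QuickStart Guide Cisco Aironet 1240 AG Series Access Point, and gives quick configuration steps for Cisco 1240AG series wireless access points. The manual notes that the 802.11a material does not apply to the 1242G model; users of that AP should follow only the 802.11b and 802.11g content.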

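The configuration section covers the default login (username Cisco, password Cisco) and recommends obtaining the IP address through DHCP. If DHCP is unavailable, the AP has to be configured manually through the console port by setting the SSID (Service Set Identifier), IP address, subnet mask, and gateway. Before installation, have the following ready: the AP's device name, the SSIDs for each radio band (for example 802.11g and 802.11a), the SNMP management information, and, where needed, the AP's MAC address and the IP address setup software.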
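When DHCP is unavailable and the address has to be entered by hand, it can help to check the IP address, subnet mask, and gateway for consistency before typing them into the console. The following is a minimal sketch using only Python's standard `ipaddress` module; the function name `check_static_settings` and the sample addresses are hypothetical and do not come from the manual.

```python
import ipaddress


def check_static_settings(ip, netmask, gateway):
    """Return a list of problems; an empty list means the values look consistent."""
    try:
        # IPv4Interface accepts "address/dotted-netmask" and rejects bad masks.
        iface = ipaddress.IPv4Interface(f"{ip}/{netmask}")
        gw = ipaddress.IPv4Address(gateway)
    except ValueError as exc:
        return [f"invalid value: {exc}"]

    problems = []
    net = iface.network
    # On ordinary subnets the first and last addresses are reserved.
    if net.prefixlen < 31 and iface.ip in (net.network_address, net.broadcast_address):
        problems.append(f"{iface.ip} is the network or broadcast address of {net}")
    if gw not in net:
        problems.append(f"gateway {gw} is outside {net}")
    if gw == iface.ip:
        problems.append("gateway and AP address are identical")
    return problems


if __name__ == "__main__":
    # Hypothetical example values for illustration only.
    for issue in check_static_settings("192.168.1.10", "255.255.255.0", "192.168.2.1"):
        print(issue)
```

This only checks that the three values agree with each other. It does not talk to the AP, and it cannot tell whether the address is already in use on the network.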

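Safety is a major part of the manual. It states that all devices comply with FCC safety standards, under which their RF emissions are considered harmless. The installation warnings stress keeping the antenna away from the body and head, and prohibit placing wireless devices in hazardous locations. The AP supports the IEEE 802.3af power standard, complies with the IEC 60950 international electrical safety standard, and has overload protection; power connections and the choice of mounting location should strictly follow the manual's instructions.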

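The appendix provides safety warnings in multiple languages so that users fully understand and follow the relevant rules during operation. With its complete setup steps and safety notes, the manual is an important reference for anyone configuring a Cisco 1240AG wireless AP for the first time or maintaining one.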
