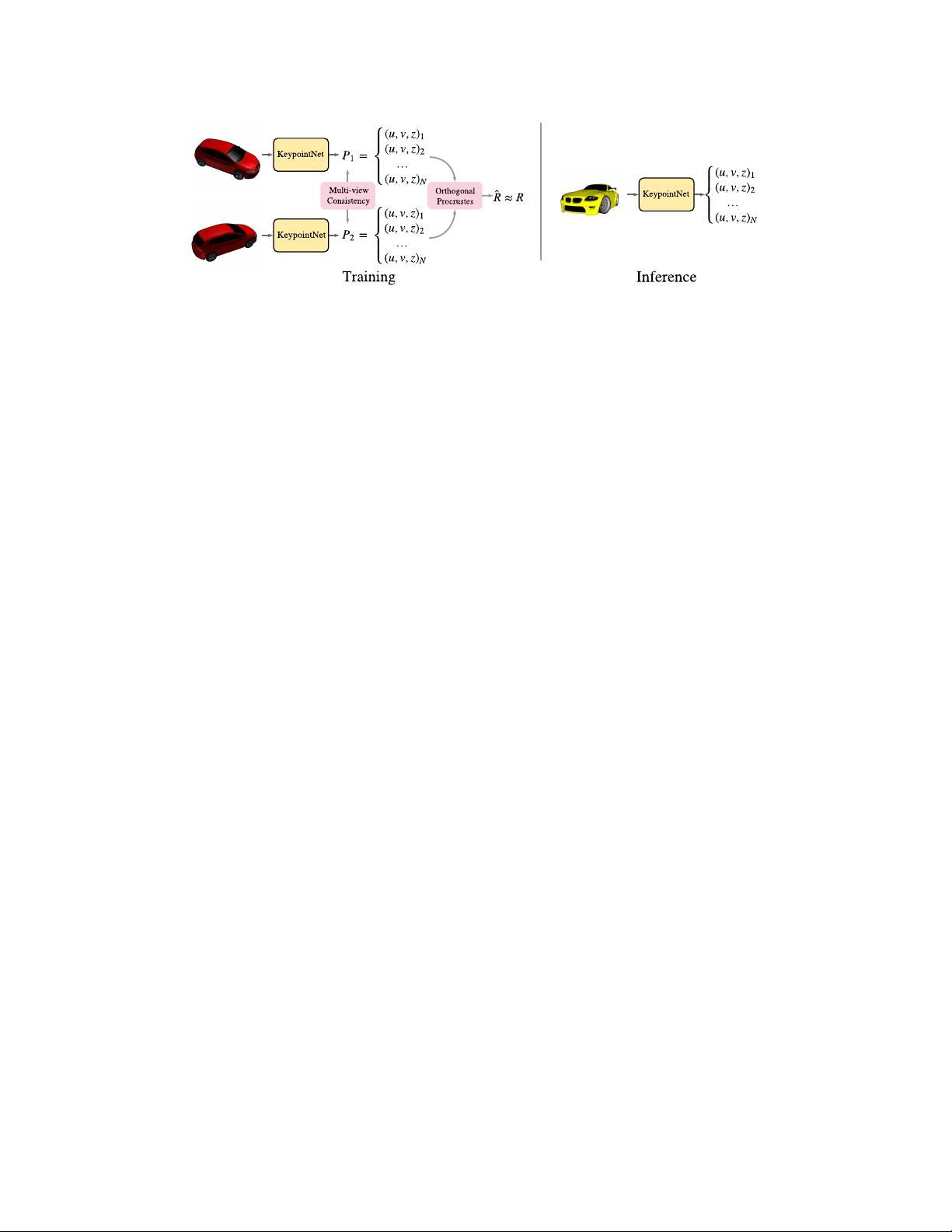

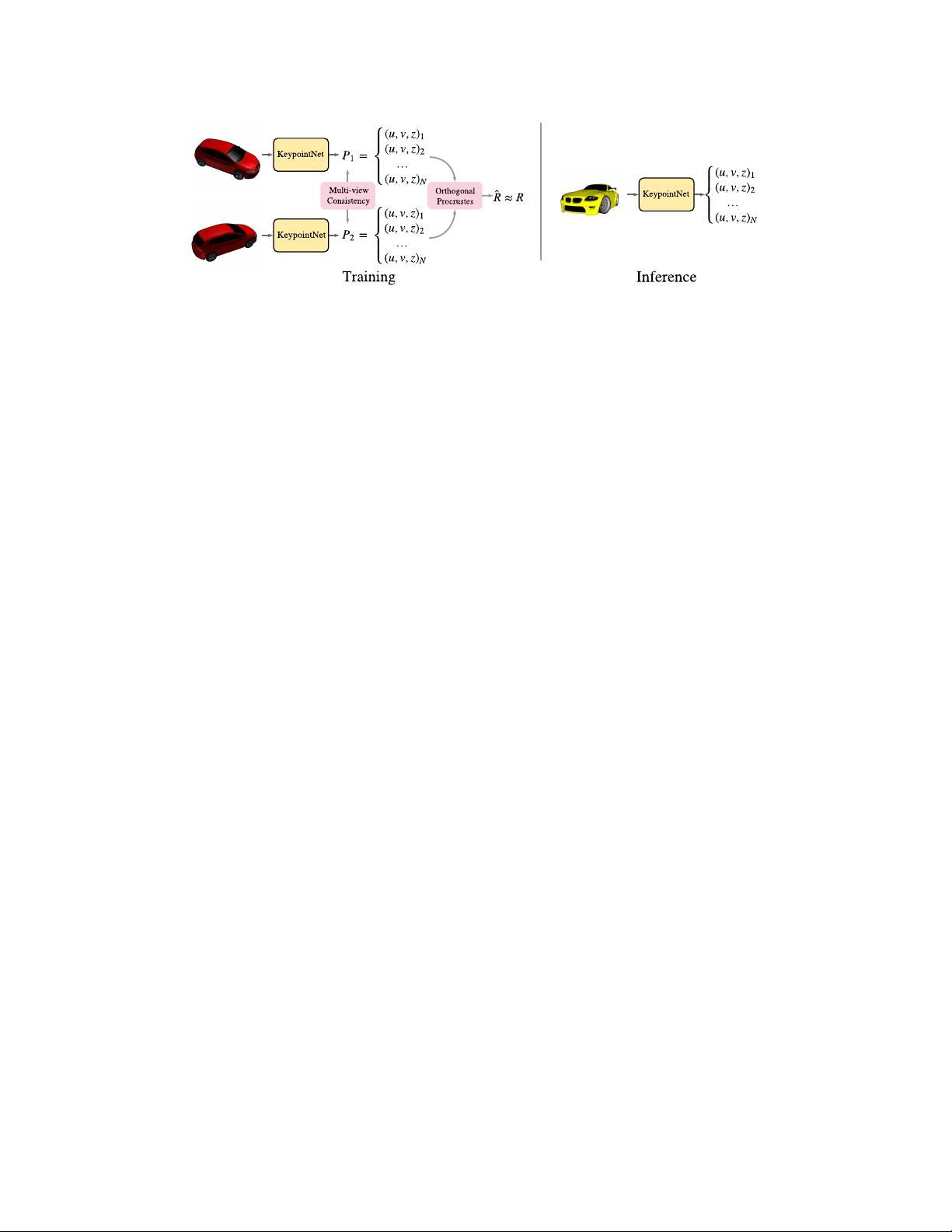

Figure 1: During training, two views of the same object are given as input to the KeypointNet. The known rigid transformation $(R, t)$ between the two views is provided as a supervisory signal. We optimize an ordered list of 3D keypoints that are consistent in both views and enable recovery of the transformation. During inference, KeypointNet extracts 3D keypoints from an individual input image.

by a predefined 3D descriptor. Zhou et al. [61] use view-consistency as a supervisory signal to predict 3D keypoints, although only on depth maps. Similarly, Su et al. [43] leverage synthetically rendered models to estimate object viewpoint by matching them to real-world images via a CNN viewpoint embedding.

3 End-to-end Optimization of 3D Keypoints

Given a single image of a known object category, our model predicts an ordered list of 3D keypoints, defined as pixel coordinates and associated depth values. Such keypoints are required to be geometrically and semantically consistent across different viewing angles and instances of an object category (e.g., see Figure 4). Our KeypointNet has $N$ heads that extract $N$ keypoints, and the same head tends to extract 3D points with the same semantic interpretation. These keypoints will serve as a building block for feature representations based on a sparse set of points, useful for geometric reasoning and pose-aware or pose-invariant object recognition (e.g., [38]).
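The text states only that each of the $N$ heads yields one keypoint given as pixel coordinates plus a depth value. As an illustration, the sketch below assumes (our assumption, not a detail stated in this section) that a head outputs a per-pixel score map and a per-pixel depth map, and reduces them to a single $(u, v, z)$ triple with a differentiable spatial soft-argmax. The function names and array shapes are hypothetical.

```python
import numpy as np


def soft_argmax_keypoint(logits, depth):
    """Reduce one head's outputs to a single keypoint (u, v, z).

    logits: (H, W) unnormalized per-pixel scores (hypothetical head output)
    depth:  (H, W) per-pixel depth predictions (hypothetical head output)
    """
    h, w = logits.shape
    # Spatial softmax: turn scores into a probability distribution over pixels.
    flat = logits.reshape(-1)
    prob = np.exp(flat - flat.max())  # subtract max for numerical stability
    prob = (prob / prob.sum()).reshape(h, w)
    # Coordinate grids: vs holds row indices, us holds column indices.
    vs, us = np.mgrid[0:h, 0:w]
    u = float((prob * us).sum())     # expected column (horizontal) coordinate
    v = float((prob * vs).sum())     # expected row (vertical) coordinate
    z = float((prob * depth).sum())  # expected depth under the same distribution
    return u, v, z


def extract_keypoints(head_logits, head_depths):
    """Apply the soft-argmax per head; head i always yields row i of the output."""
    return np.array(
        [soft_argmax_keypoint(l, d) for l, d in zip(head_logits, head_depths)]
    )
```

Because head $i$ always produces row $i$, the $i$-th keypoints of two views are in correspondence without any explicit matching step, so the two ordered lists can be compared point by point across views.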

In contrast to approaches that learn a supervised mapping from images to a list of annotated keypoint positions, we do not define the keypoint positions a priori. Instead, we jointly optimize keypoints with respect to a downstream task. We focus on the task of relative pose estimation at training time, where, given two views of the same object with a known rigid transformation $T$, we aim to predict optimal lists of 3D keypoints, $P_1$ and $P_2$, in the two views that best match one view to the other (Figure 1). We formulate an objective function $O(P_1, P_2)$, based on which one can optimize a parametric mapping from an image to a list of keypoints. Our objective consists of two primary components:

• A multi-view consistency loss that measures the discrepancy between the two sets of points under the ground truth transformation.

• A relative pose estimation loss, which penalizes the angular difference between the ground truth rotation $R$ and the rotation $\hat{R}$ recovered from $P_1$ and $P_2$ using orthogonal Procrustes.
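A minimal NumPy sketch of the two objective terms, under stated assumptions: the keypoints are taken to be already lifted to 3D camera coordinates as `(N, 3)` arrays whose rows correspond by head index; the consistency term is a simplified mean squared 3D distance, not necessarily the exact loss used in this work; the rotation is recovered with the standard SVD solution to orthogonal Procrustes (the Kabsch algorithm); and the angular difference is measured as $2\arcsin\big(\lVert \hat{R} - R\rVert_F / (2\sqrt{2})\big)$, which equals the rotation angle of $\hat{R}^\top R$. The weight `pose_weight` is hypothetical.

```python
import numpy as np


def procrustes_rotation(p1, p2):
    """Rotation R_hat minimizing sum_i ||R p1_i + t - p2_i||^2 (orthogonal Procrustes / Kabsch).

    p1, p2: (N, 3) arrays of 3D keypoints; row i of p1 corresponds to row i of p2.
    """
    c1 = p1 - p1.mean(axis=0)  # center both point sets so translation drops out
    c2 = p2 - p2.mean(axis=0)
    cov = c1.T @ c2            # 3x3 cross-covariance: sum_i c1_i c2_i^T
    u, _, vt = np.linalg.svd(cov)
    # Flip the last singular direction if needed so det(R_hat) = +1 (no reflection).
    d = np.sign(np.linalg.det(vt.T @ u.T))
    fix = np.diag([1.0, 1.0, d])
    return vt.T @ fix @ u.T


def rotation_angle_error(r_hat, r):
    """Angle (radians) of r_hat^T r, computed as 2*arcsin(||r_hat - r||_F / (2*sqrt(2)))."""
    s = np.linalg.norm(r_hat - r) / (2.0 * np.sqrt(2.0))  # Frobenius norm for 2-D input
    return float(2.0 * np.arcsin(np.clip(s, 0.0, 1.0)))


def consistency_loss(p1, p2, r, t):
    """Simplified consistency term: mean squared distance between (R p1 + t) and p2."""
    p1_in_view2 = p1 @ r.T + t  # map view-1 keypoints into view 2 with the ground truth (R, t)
    return float(np.mean(np.sum((p1_in_view2 - p2) ** 2, axis=1)))


def objective(p1, p2, r, t, pose_weight=1.0):
    """Hypothetical weighted sum of the two terms."""
    r_hat = procrustes_rotation(p1, p2)
    return consistency_loss(p1, p2, r, t) + pose_weight * rotation_angle_error(r_hat, r)
```

The determinant correction keeps $\hat{R}$ a proper rotation rather than a reflection. Since $\hat{R}$ is a closed-form function of $P_1$ and $P_2$, the pose term can in principle be back-propagated to the keypoints through the SVD.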

We demonstrate that these two terms allow the model to discover important keypoints, some of which correspond to semantically meaningful locations that humans would naturally select for different object classes. Note that we do not directly optimize for keypoints that are semantically meaningful, as those may be sub-optimal for downstream tasks or simply hard to detect. In what follows, we first explain our objective function and then describe the neural architecture of KeypointNet.

Notation. Each training tuple comprises a pair of images $(I, I')$ of the same object from different viewpoints, along with their relative rigid transformation $T \in SE(3)$, which transforms the underlying 3D shape from $I$ to $I'$. $T$ has the following matrix form:

$$
T = \begin{bmatrix} R_{3\times 3} & t_{3\times 1} \\ 0 & 1 \end{bmatrix}, \tag{1}
$$

where $R$ and $t$ represent a 3D rotation and translation, respectively. We learn a function $f_\theta(I)$, parametrized by $\theta$, that maps a 2D image $I$ to a list of 3D points $P = (p_1, \ldots, p_N)$, where $p_i \equiv (u_i, v_i, z_i)$, by optimizing an objective function of the form $O(f_\theta(I), f_\theta(I'))$.
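Eq. (1) and the $(u, v, z)$ parametrization can be made concrete with a small sketch. To apply $T$ to keypoints, each $(u_i, v_i, z_i)$ must first be lifted to a 3D point; here we assume a hypothetical pinhole camera with focal length `focal` and principal point `(cx, cy)`, since the camera intrinsics are not given in this section. All helper names are illustrative only.

```python
import numpy as np


def make_se3(r, t):
    """Assemble the 4x4 homogeneous matrix of Eq. (1) from a 3x3 rotation and a 3-vector translation."""
    T = np.eye(4)
    T[:3, :3] = r
    T[:3, 3] = t
    return T


def uvz_to_xyz(uvz, focal, cx, cy):
    """Back-project (N, 3) keypoints (u, v, z) to camera-frame 3D points.

    Assumes a hypothetical pinhole camera: focal length `focal` in pixels,
    principal point (cx, cy). These intrinsics are not specified in the text.
    """
    u, v, z = uvz[:, 0], uvz[:, 1], uvz[:, 2]
    x = (u - cx) * z / focal
    y = (v - cy) * z / focal
    return np.stack([x, y, z], axis=1)


def xyz_to_uvz(xyz, focal, cx, cy):
    """Project camera-frame 3D points back to (u, v, z) under the same pinhole model."""
    x, y, z = xyz[:, 0], xyz[:, 1], xyz[:, 2]
    return np.stack([focal * x / z + cx, focal * y / z + cy, z], axis=1)


def transform_points(T, xyz):
    """Apply a 4x4 SE(3) matrix to (N, 3) points via homogeneous coordinates."""
    homo = np.hstack([xyz, np.ones((xyz.shape[0], 1))])  # append w = 1
    return (homo @ T.T)[:, :3]                            # rows are (T [x; 1])^T
```

For instance, `xyz_to_uvz(transform_points(T, uvz_to_xyz(P1, focal, cx, cy)), focal, cx, cy)` would express the keypoints predicted in $I$ in the pixel frame of $I'$, assuming both views share the same intrinsics.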
