2327-4662 (c) 2017 IEEE. Personal use is permitted, but republication/redistribution requires IEEE permission. See http://www.ieee.org/publications_standards/publications/rights/index.html for more information.

This article has been accepted for publication in a future issue of this journal, but has not been fully edited. Content may change prior to final publication. Citation information: DOI 10.1109/JIOT.2017.2779865, IEEE Internet of Things Journal

IEEE INTERNET OF THINGS JOURNAL 3

[Fig. 2 diagram labels. Components: Multi-Space Feature Extraction; Attention-In-Attention (EAM-CNN, EAM-IS, EAM-VS, FAM); Decoding (Multi-Event Recognition/Video Captioning). Decoding blocks: LSTM, Softmax (example output words: "man", "is", "playing"), Mean Pooling, Sigmoid. Example event labels: walk, turn around, eat/drink, get food/drink, use phone, write, discussion, object handover. Example scores: 0.31, 0.22, 0.55, 0.54, 0.59, 0.27, 0.72, 0.65.]

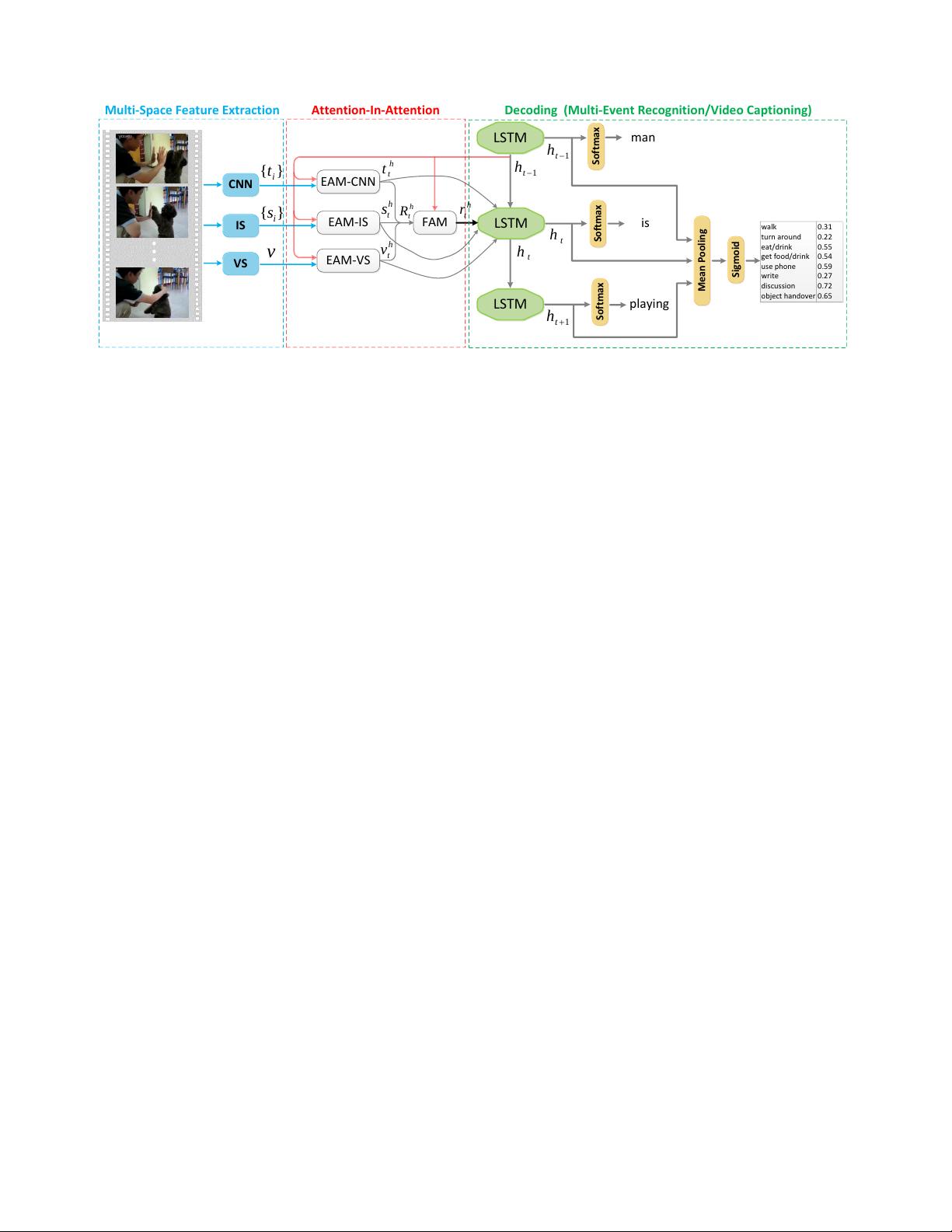

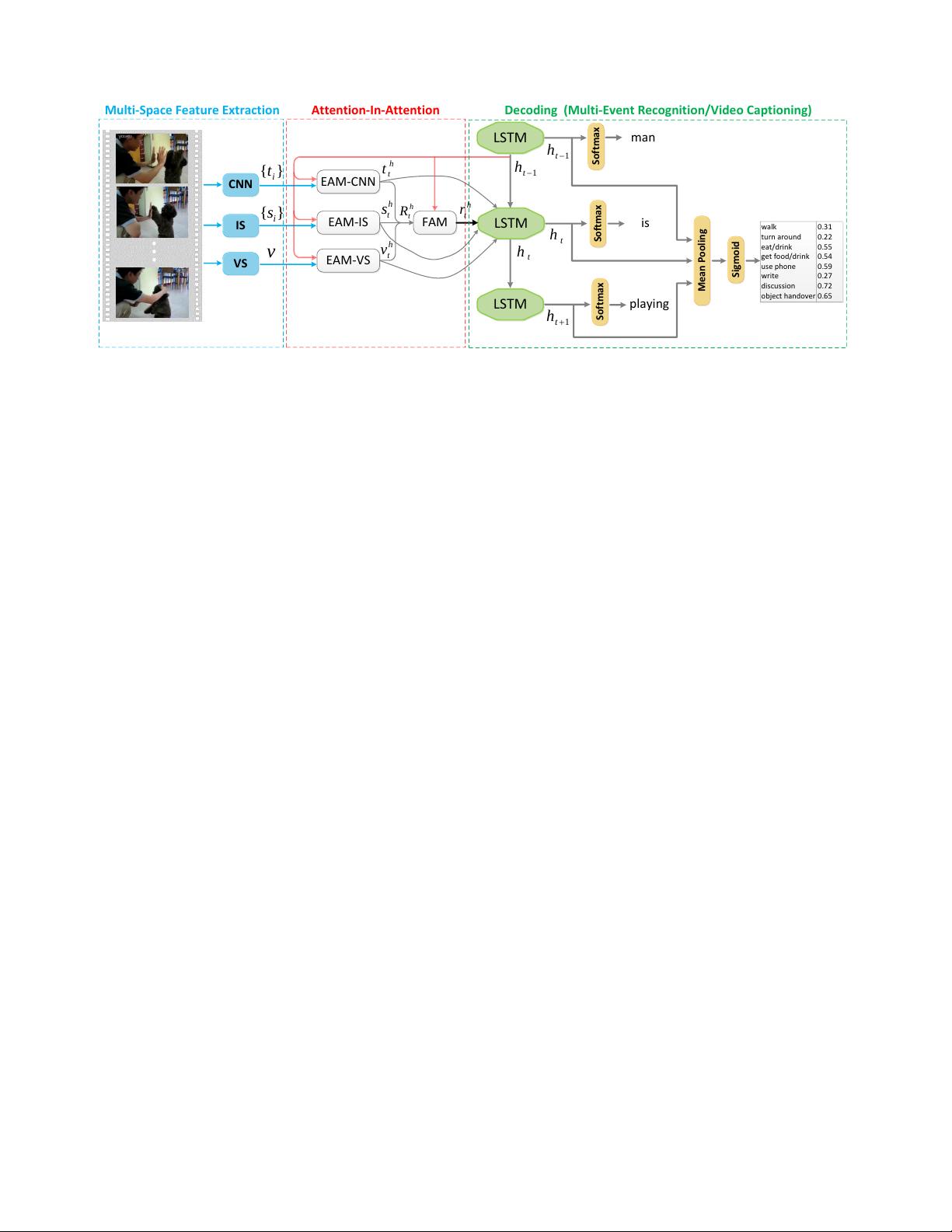

Fig. 2: Illustration of the Attention-In-Attention (AIA) framework, which consists of three components. First, in the Multi-Space Feature Extraction component, the space-specific features are obtained by multiple off-the-shelf feature extraction methods. Second, the Attention-In-Attention component is utilized for feature selection and fusion; it includes multiple EAMs and an FAM to generate the space-specific attentive features and further project them into a space with an identical dimension. Third, in the Decoding component, one LSTM unit is employed to decode the fusion representation and attentive features, where the hidden state is simultaneously fed into both the EAMs and the FAM for sentence-induced feature selection and fusion. In particular, for the multi-event recognition task, we first mean pool the hidden states from the LSTM unit and then assign a probability distribution with the sigmoid layer. For the video captioning task, the LSTM unit sequentially generates the description with the softmax layer. The proposed framework is learned end-to-end, and extra branches can easily be added for additional features. CNN: Convolutional Neural Network; IS: Image-wise Semantic; VS: Video-wise Semantic; EAM: Encoder Attention Module; FAM: Fusion Attention Module.
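The multi-event recognition head described in the caption (mean pooling of the LSTM hidden states followed by a sigmoid layer) can be sketched as follows. This is a minimal illustration rather than the authors' implementation: the linear layer in front of the sigmoid, the names `weights` and `biases`, and all toy values are assumptions introduced here.

```python
import math


def mean_pool(hidden_states):
    """Average a list of equal-length hidden-state vectors over time."""
    steps = len(hidden_states)
    dim = len(hidden_states[0])
    return [sum(h[d] for h in hidden_states) / steps for d in range(dim)]


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def event_probabilities(hidden_states, weights, biases):
    """Score every event independently from the pooled hidden state.

    weights[k] and biases[k] are hypothetical parameters of a linear
    layer for event k (an assumption, not taken from the paper).
    """
    pooled = mean_pool(hidden_states)
    return [
        sigmoid(sum(w * p for w, p in zip(weights[k], pooled)) + biases[k])
        for k in range(len(weights))
    ]


# Toy example: two time steps, two hidden units, two events.
hidden_states = [[0.0, 2.0], [2.0, 0.0]]  # pooled state is [1.0, 1.0]
weights = [[1.0, -1.0], [0.5, 0.5]]
biases = [0.0, 0.0]
probs = event_probabilities(hidden_states, weights, biases)
```

Because each event passes through its own sigmoid, the scores need not sum to one, so several events can be active in the same clip. A softmax output, as used for captioning, would instead select one word per step.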

methods, sequential learning-based methods, and attention-based methods.

The template-based methods predefine specific grammar rules and split sentences into several terms (e.g., subject, verb, and object). With such sentence fragments, each term is aligned with visual content and then the sentence is generated [18], [19]. Guadarrama et al. [18] designed semantic hierarchies to choose an appropriate level of specificity and accuracy for the sentence fragments. Rohrbach et al. [19] learned to model the relationships between different components of the input video for descriptions. The advantage of template-based methods is that the resulting captions are more likely to be grammatically correct. However, they rely heavily on hard-coded visual concepts, which limits the variety of the output.
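As a rough illustration of the template-based idea, the sketch below fills a fixed subject-verb-object template with the highest-scoring concept for each slot. It is not the method of [18] or [19]; the template string, the function name `fill_template`, and the detection scores are hypothetical.

```python
def fill_template(detections, template="A {subject} is {verb} {object}."):
    """Fill each slot of a fixed template with its top-scoring concept."""
    slots = {
        slot: max(candidates, key=candidates.get)
        for slot, candidates in detections.items()
    }
    return template.format(**slots)


# Hypothetical per-slot concept scores from visual classifiers.
detections = {
    "subject": {"man": 0.8, "dog": 0.1},
    "verb": {"playing": 0.7, "eating": 0.2},
    "object": {"a guitar": 0.6, "a ball": 0.3},
}
caption = fill_template(detections)
```

The output is grammatical by construction, but it can only ever contain concepts and sentence shapes that were defined in advance, which is the limitation noted above.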

The sequential learning-based methods have been widely applied to video captioning, where an encoder maps a sequence of video frames to fixed-length feature vectors in the embedding space and a decoder then generates a translated sentence in the target language [3], [21], [22], [40]. This problem is analogous to translating a sequence of words in the input language into a sequence of words in the output language in machine translation. The early video captioning method [22] extended image captioning methods by simply pooling the features of multiple frames to form a single representation. Venugopalan et al. [3] applied the sequence-to-sequence model to transfer the temporal visual information to a natural language description and further extended it by inputting both appearance features and optical flow. However, this strategy only works for short video clips that contain one major event with limited visual variation, and it ignores the rich fine-grained information conveyed by the video stream.
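The decoder side of such an encoder-decoder pipeline can be sketched as a greedy word-by-word loop. In the sketch below, `toy_step` is a hand-written lookup table standing in for a trained recurrent decoder, and `context` stands in for the fixed-length encoder output; both, along with the vocabulary, are assumptions for illustration only.

```python
def greedy_decode(context, step_fn, bos="<bos>", eos="<eos>", max_len=20):
    """Generate words one at a time, always taking the most probable one."""
    words, prev, state = [], bos, context
    for _ in range(max_len):
        probs, state = step_fn(prev, state)
        prev = max(probs, key=probs.get)
        if prev == eos:
            break
        words.append(prev)
    return words


VOCAB = ["man", "is", "playing", "<eos>"]
NEXT_WORD = {"<bos>": "man", "man": "is", "is": "playing", "playing": "<eos>"}


def toy_step(prev_word, state):
    """Stand-in for one decoder step: returns word probabilities and state.

    A real decoder would update `state` from the previous word and the
    encoded video; this toy version leaves it unchanged.
    """
    target = NEXT_WORD[prev_word]
    probs = {w: (0.7 if w == target else 0.1) for w in VOCAB}
    return probs, state


context = [0.2, 0.5]  # placeholder for a pooled video representation
caption = greedy_decode(context, toy_step)
```

Since the whole video is compressed into one `context` vector before decoding starts, the decoder cannot revisit individual frames, which is one way to see why fine-grained detail is lost on longer clips.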

Recently, attention-based methods have employed the soft attention mechanism [27] to weight each temporal feature vector in order to exploit the temporal structure and rich intermediate description of long videos. For instance, Yao et al. [4] proposed to exploit temporal structure with a soft attention mechanism, which allows the model to go beyond local temporal modeling and learn to select the most relevant temporal segments for video captioning. Ballas et al. [41] leveraged a convolutional GRU-RNN to extract visual representations and generated sentences with an LSTM text generator equipped with a soft attention mechanism. Yu et al. [7] further exploited temporal and spatial attention mechanisms to selectively focus on visual elements during generation.
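Soft temporal attention of this kind can be sketched as a softmax-weighted sum over per-segment features. The dot-product scoring used below is a simplifying assumption; the cited methods learn their own scoring functions, and the names `features` and `query` are illustrative.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def soft_attention(features, query):
    """Weight temporal features by relevance to the decoder state.

    Relevance is a plain dot product with `query` (an assumption made
    for brevity); the context vector is the weighted sum of features.
    """
    scores = [sum(f_d * q_d for f_d, q_d in zip(f, query)) for f in features]
    weights = softmax(scores)
    dim = len(features[0])
    context = [
        sum(w * f[d] for w, f in zip(weights, features)) for d in range(dim)
    ]
    return weights, context


# Three temporal segments with two-dimensional features.
features = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
query = [2.0, 0.0]  # stand-in for the current decoder hidden state
weights, context = soft_attention(features, query)
```

Because the weights are recomputed from the decoder state at every generation step, different words can draw on different temporal segments rather than on one pooled vector.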

However, little work has been done to hierarchically learn and integrate the multi-space representations from visual/semantic modalities in a data-driven manner. In this paper, we explore a comprehensive video representation under the multi-space feature condition for video understanding in the Internet of Things (IoT).

III. ATTENTION-IN-ATTENTION NETWORK (AIA)

In this section, we first give an overview of the proposed framework and the details of the computational pipeline in Section III-A. Then, the three key components, namely multi-space feature extraction, the attention-in-attention module, and the decoding module, are detailed in Section III-B, Section III-C, and Section III-D, respectively.

A. Framework Overview

The Attention-In-Attention network (AIA) adaptively and jointly performs space-specific feature selection and multi-space attentive feature fusion. Fig. 2 depicts the AIA framework for video captioning. Given several