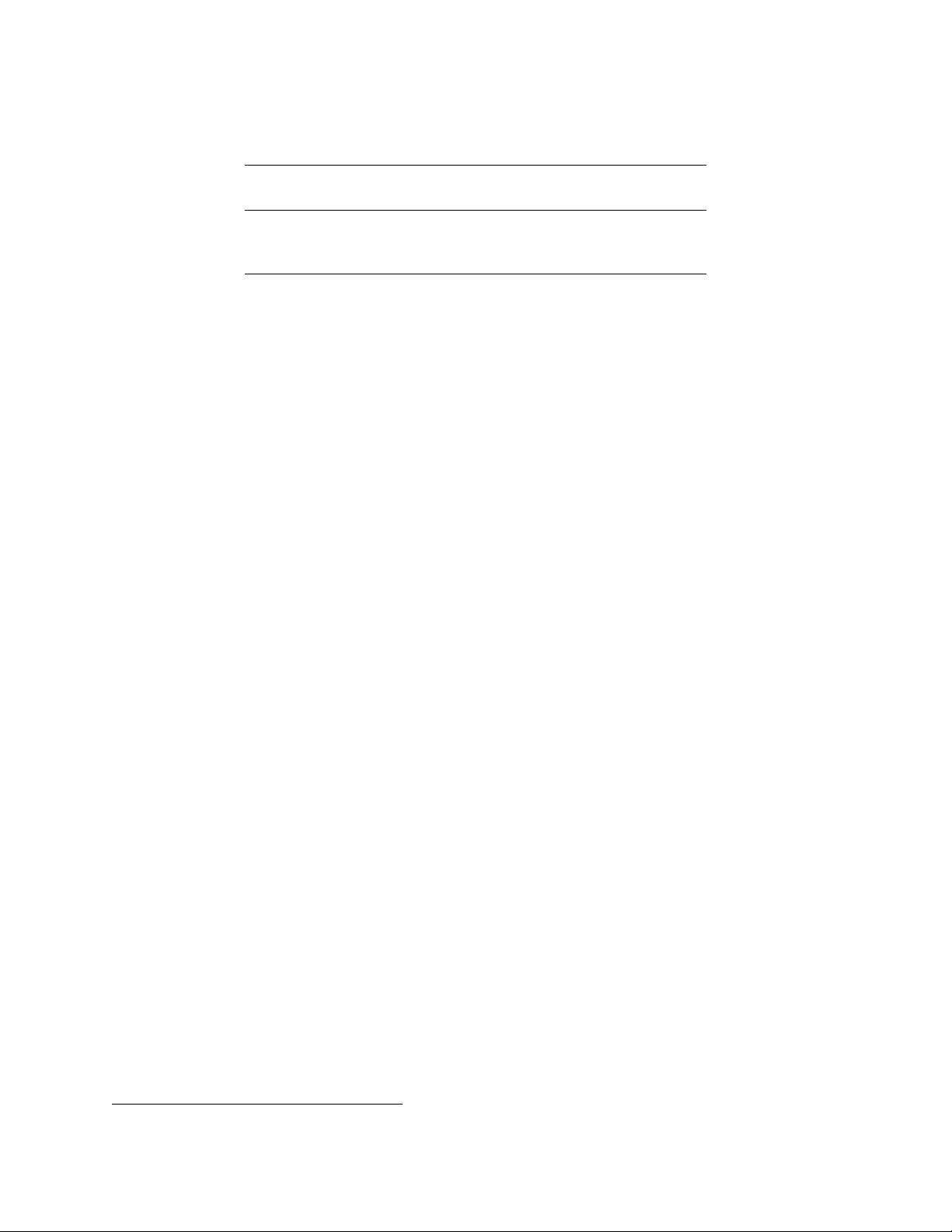

Table 10: Results on the FRMT (Few-shot Regional Machine Translation) benchmark of dialect-specific translation. Inputs are 5-shot exemplars and scores are computed with BLEURT.

| Model | Portuguese (Brazil) | Portuguese (Portugal) | Chinese (Mainland) | Chinese (Taiwan) |
|---|---|---|---|---|
| PaLM | 78.5 | 76.1 | 70.3 | 68.6 |
| Google Translate | 80.2 | 75.3 | 72.3 | 68.5 |
| PaLM 2 | 81.1 | 78.3 | 74.4 | 72.0 |

Regional translation experimental setup

We also report results on the FRMT benchmark (Riley et al., 2023) for Few-shot Regional Machine Translation. By focusing on region-specific dialects, FRMT allows us to measure PaLM 2's ability to produce translations that are most appropriate for each locale, that is, translations that will feel natural to each community. We show the results in Table 10. PaLM 2 improves not only over PaLM but also over Google Translate in all four locales.
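As a quick arithmetic check on this claim, the per-locale margins can be computed directly from Table 10. The dictionary below only transcribes the table; the locale keys (`pt-BR`, `pt-PT`, `zh-CN`, `zh-TW`) and the helper name `margins` are our own illustrative labels.

```python
# BLEURT scores transcribed from Table 10 (5-shot FRMT).
LOCALES = ["pt-BR", "pt-PT", "zh-CN", "zh-TW"]
SCORES = {
    "PaLM": [78.5, 76.1, 70.3, 68.6],
    "Google Translate": [80.2, 75.3, 72.3, 68.5],
    "PaLM 2": [81.1, 78.3, 74.4, 72.0],
}


def margins(model: str, baseline: str) -> dict[str, float]:
    """Per-locale BLEURT difference (model minus baseline), rounded to 1 decimal."""
    return {
        loc: round(m - b, 1)
        for loc, m, b in zip(LOCALES, SCORES[model], SCORES[baseline])
    }


for baseline in ("PaLM", "Google Translate"):
    diff = margins("PaLM 2", baseline)
    # The boolean is True only if PaLM 2 beats the baseline in every locale.
    print(baseline, diff, all(d > 0 for d in diff.values()))
```

Every margin is positive; the smallest is 0.9 BLEURT points, over Google Translate on Brazilian Portuguese.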

Potential misgendering harms

We measure PaLM 2 on failures that can lead to potential misgendering harms in zero-shot translation. When translating into English, PaLM 2's performance is stable relative to PaLM, with small improvements in worst-case disaggregated performance across 26 languages. When translating out of English into 13 languages, we evaluate gender agreement and translation quality with human raters. Surprisingly, we find that even in the zero-shot setting, PaLM 2 outperforms PaLM and Google Translate on gender agreement in three high-resource languages: Spanish, Polish, and Portuguese. However, we observe lower gender agreement scores with PaLM 2 than with PaLM when translating into Telugu, Hindi, and Arabic. See Appendix E.5 for results and analysis.
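To make "worst-case disaggregated performance" concrete, one common reading is: compute the metric separately for each subgroup and report the lowest score. The sketch below assumes that reading, disaggregating by (language, gender) pair with accuracy as the metric. The `Example` record, its fields, and the toy records are hypothetical illustrations, not data from this evaluation; Appendix E.5 describes the actual metrics.

```python
from collections import defaultdict
from typing import Iterable, NamedTuple


class Example(NamedTuple):
    """Hypothetical evaluation record: language, gender subgroup, correctness."""
    language: str
    gender: str
    correct: bool


def disaggregated_accuracy(examples: Iterable[Example]) -> dict[tuple[str, str], float]:
    """Accuracy for each (language, gender) subgroup."""
    hits: dict[tuple[str, str], int] = defaultdict(int)
    totals: dict[tuple[str, str], int] = defaultdict(int)
    for ex in examples:
        key = (ex.language, ex.gender)
        totals[key] += 1
        hits[key] += int(ex.correct)
    return {key: hits[key] / totals[key] for key in totals}


def worst_case(examples: Iterable[Example]) -> tuple[tuple[str, str], float]:
    """Return the lowest-scoring subgroup and its accuracy."""
    scores = disaggregated_accuracy(examples)
    worst = min(scores, key=scores.get)
    return worst, scores[worst]


# Toy records for illustration only.
toy = [
    Example("es", "feminine", True),
    Example("es", "feminine", False),
    Example("es", "masculine", True),
    Example("te", "feminine", False),
    Example("te", "masculine", True),
]
print(worst_case(toy))
```

Reporting the minimum rather than the mean keeps a strong average from hiding a subgroup on which the model consistently fails.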

4.6 Natural language generation

Because large language models are generatively pre-trained, natural language generation (NLG), rather than classification or regression, has become their primary interface. Despite this, their generation quality is rarely evaluated, and NLG evaluations typically focus on English news summarization. Evaluating the potential harms or bias in natural language generation also requires a broader approach, including consideration of dialog uses and adversarial prompting. We evaluate PaLM 2's natural language generation ability on representative datasets covering a typologically diverse set of languages¹⁰:

• XLSum (Hasan et al., 2021), which asks a model to summarize a news article in a single sentence in the article's own language, covering Arabic, Bengali, English, Japanese, Indonesian, Swahili, Korean, Russian, Telugu, Thai, and Turkish.

• WikiLingua (Ladhak et al., 2020), which focuses on generating section headers for step-by-step instructions from WikiHow, in Arabic, English, Japanese, Korean, Russian, Thai, and Turkish.

• XSum (Narayan et al., 2018), which tasks a model with generating a news article’s first sentence, in English.

We compare PaLM 2 to PaLM using a common setup and re-compute PaLM results for this work. We use a custom 1-shot prompt for each dataset, consisting of an instruction, a source document, and its generated summary, sentence, or header. As evaluation metrics, we use ROUGE-2 for English and, for all other languages, SentencePiece-ROUGE-2, an extension of ROUGE that handles non-Latin characters using a SentencePiece tokenizer (in our case, the mT5 tokenizer; Xue et al., 2021).
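ROUGE-2 scores bigram overlap between a candidate and a reference; the SentencePiece variant changes only how text is split into tokens. Below is a minimal sketch of ROUGE-2 F1 with a pluggable tokenizer. The whitespace default is a stand-in: for SentencePiece-ROUGE-2 you would pass a function wrapping a loaded SentencePiece model (for example, `SentencePieceProcessor.encode(text, out_type=str)` with the mT5 vocabulary). The function names are hypothetical, and the sketch omits any text normalization that reference ROUGE implementations may apply.

```python
from collections import Counter
from typing import Callable, Sequence


def bigrams(tokens: Sequence[str]) -> Counter:
    """Multiset of adjacent token pairs."""
    return Counter(zip(tokens, tokens[1:]))


def rouge2_f1(
    candidate: str,
    reference: str,
    tokenize: Callable[[str], list[str]] = str.split,
) -> float:
    """ROUGE-2 F1: clipped bigram overlap between candidate and reference.

    `tokenize` defaults to whitespace splitting (an assumption for this sketch);
    swap in a SentencePiece tokenizer to get SentencePiece-ROUGE-2.
    """
    cand = bigrams(tokenize(candidate))
    ref = bigrams(tokenize(reference))
    overlap = sum((cand & ref).values())  # Counter & keeps the min count per bigram
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)
```

This shows why the tokenizer matters: `rouge2_f1("東京は晴れ", "東京は雨")` returns 0.0, because neither string contains a space and whitespace splitting yields no bigrams, while `tokenize=list` (character bigrams, a crude stand-in for subword pieces) gives 4/7.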

We focus on the 1-shot learning setting because inputs can be long. We truncate extremely long inputs to about half the maximum input length so that instructions and targets always fit within the model's input. We decode a single output greedily and stop at the exemplar separator (a double newline) or, if no separator is produced, at the maximum decode length, which is set to the 99th-percentile target length.
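The input budget and stopping rules above can be sketched as follows. This assumes lengths are measured in tokens, that "about half the max input length" is applied as a hard cap, and that the 99th percentile uses the nearest-rank method. The helper names and the prompt layout in `build_prompt` (instruction, one solved exemplar, then the test document, with blocks separated by double newlines) are hypothetical. Greedy decoding itself is model-specific and not shown; `stop_at_separator` only post-processes a decoded string.

```python
import math
from typing import Sequence

SEPARATOR = "\n\n"  # exemplar separator (double newline), as described in the text


def truncate_tokens(tokens: Sequence[int], max_input_len: int) -> list[int]:
    """Cap an input at half of the model's input budget (assumed hard cap)."""
    return list(tokens[: max_input_len // 2])


def percentile_length(target_lengths: Sequence[int], pct: float = 99.0) -> int:
    """Nearest-rank percentile of target lengths, used as the max decode length."""
    ordered = sorted(target_lengths)
    if not ordered:
        raise ValueError("need at least one target length")
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


def stop_at_separator(decoded: str) -> str:
    """Cut a decoded string at the first exemplar separator, if present."""
    return decoded.split(SEPARATOR, 1)[0]


def build_prompt(instruction: str, exemplar_doc: str, exemplar_target: str, doc: str) -> str:
    """Hypothetical 1-shot layout; the model continues after the final newline."""
    exemplar = f"{exemplar_doc}\n{exemplar_target}"
    return SEPARATOR.join([instruction, exemplar, doc]) + "\n"
```

Tying the decode cap to a high percentile of target lengths bounds decoding cost while still allowing almost every reference-length output to finish.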

¹⁰ We focus on the set of typologically diverse languages also used in TyDi QA (Clark et al., 2020).
