Making Lockless Synchronization Fast:

Performance Implications of Memory Reclamation

Thomas E. Hart

1∗

, Paul E. McKenney

2

, and Angela Demke Brown

1

1

University of Toronto

2

IBM Beaverton

Dept. of Computer Science Linux Technology Center

Toronto, ON M5S 2E4 CAN Beaverton, OR 97006 USA

{tomhart, demke}@cs.toronto.edu paulmck@us.ibm.com

Abstract

Achieving high performance for concurrent app lications

on modern multiprocesso rs remains challenging. Many pro-

grammers av oid locking to improve performance, while o th-

ers replace locks with non-blocking synchronization to pro-

tect against deadlock, priority inversion, and convoying. In

both cases, dynamic data structures that avoid locking, re-

quire a memory reclamation scheme that reclaims nodes

once they are no longer in use.

The performance of existing memory reclamation

schemes has not been thoroughly evaluate d. We conduct

the first fair and comprehen sive comparison of three recent

schemes—quiescent-state-based r e c l a m a t i o n, epoch-based

reclamation, and hazard-pointer-based reclamation—using

a flexible microbenchmark. Our results show that there is

no globally optimal scheme. When evaluating lockless syn-

chronization, programmers and algorithm designers should

thus carefully consider the data structure, the workload,

and the execution environ ment, each of which can dramati-

cally affect memory reclamat i o n performance.

1 Introduction

As multiprocessors become mainstrea m , multithreaded

applications will become more common, increa sing the

need for efficient coordination of concurrent accesses to

shared data struc tures. Traditional locking requires expen-

sive atomic operations, such as com pare-and-swap (CAS),

even when locks are uncontended. For example, acquiring

and releasing an uncontended spinlock req uires over 400

cycles on an IBM

R

!

POWER

TM

CPU. Therefore, many re-

searchers recommend avoiding lock ing [2, 7, 22]. Some

systems, such as Linux

TM

, use c oncurrently-read able syn-

chronization, which uses locks for updates but not for read s.

∗

Supported by an NSERC Canada Graduate Scholarship.

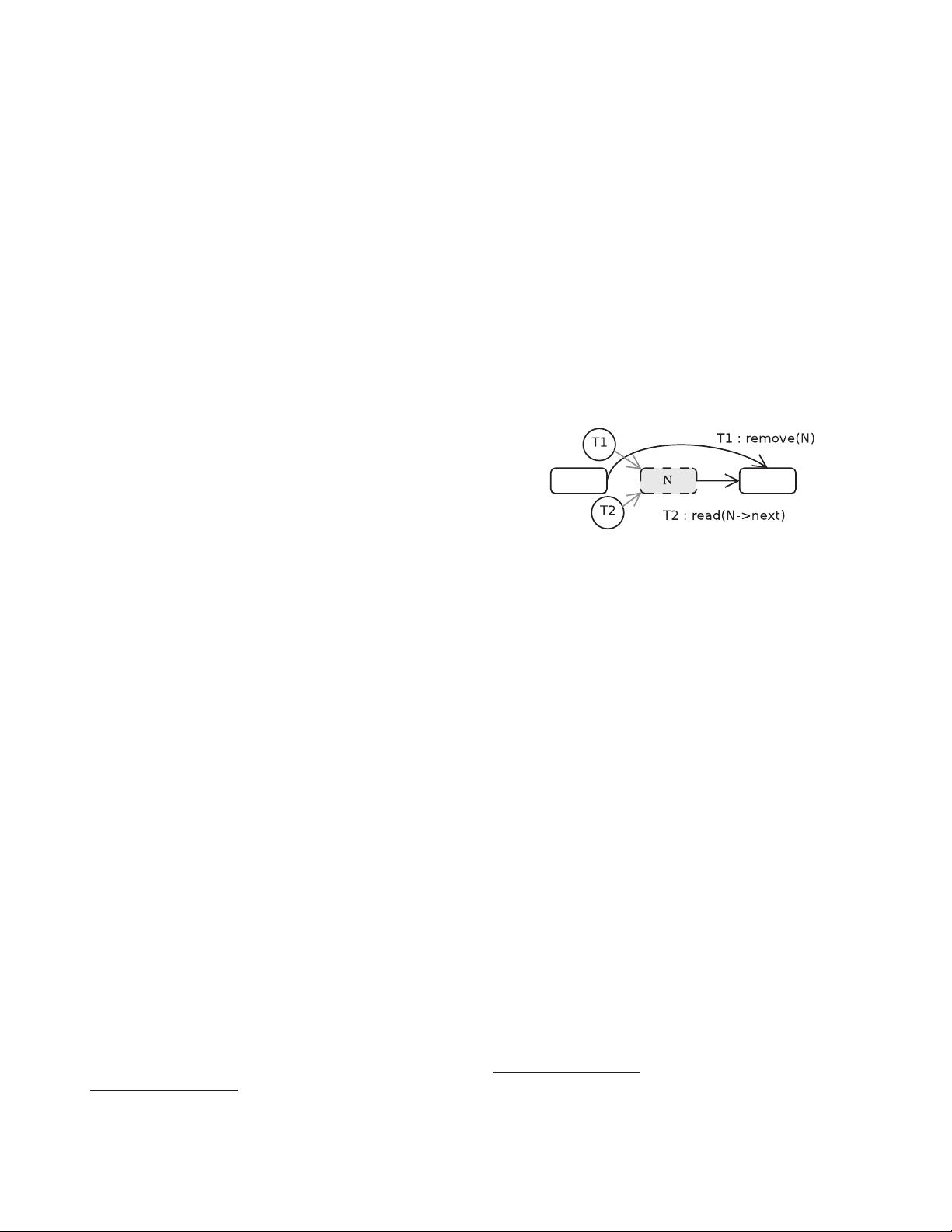

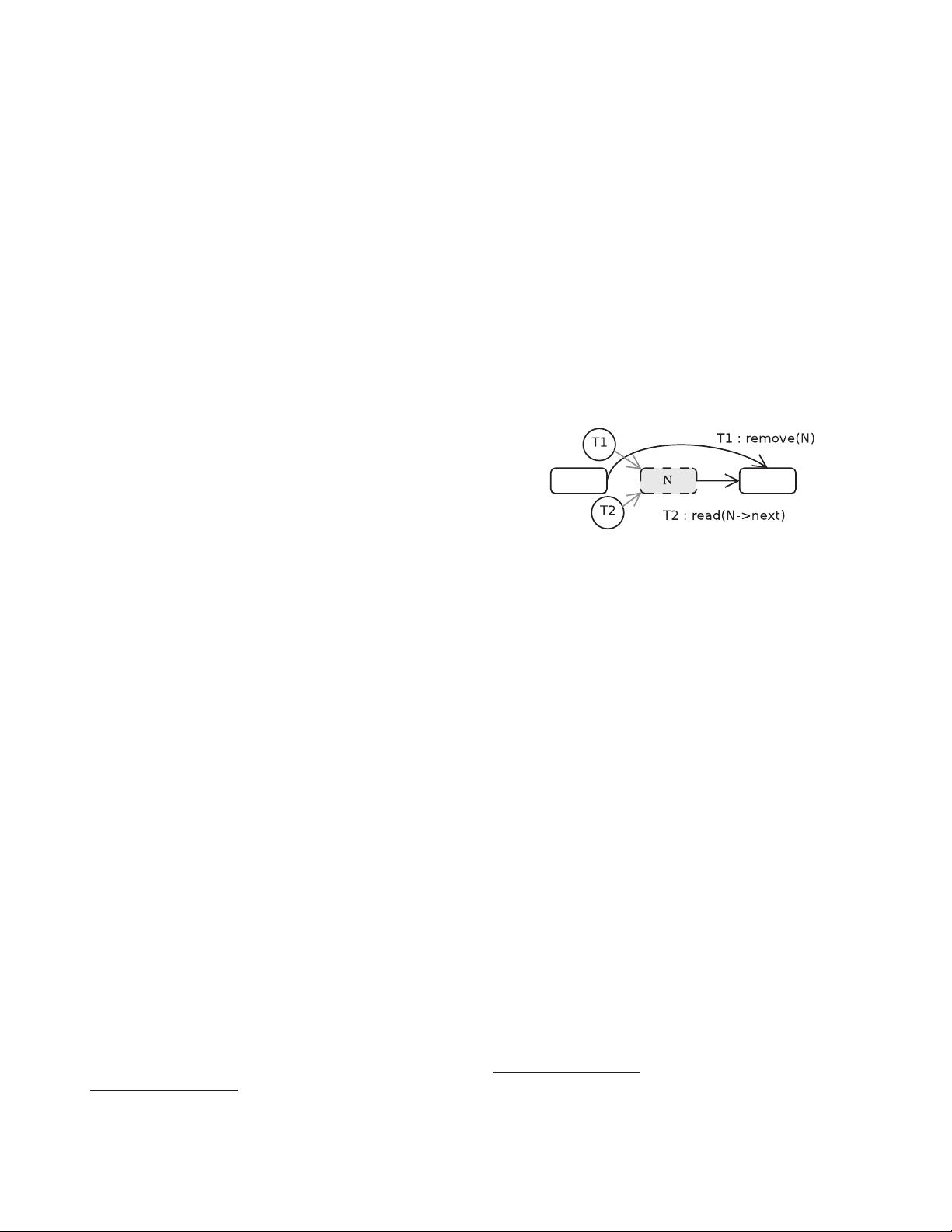

Figure 1. Read/reclaim race.

Locking is also susceptible to priority inversion, convoying,

deadlock , and blocking due to thread failure [3, 10], leading

researchers to pursue non-blocking (or lock-free) synchro-

nization [6, 12, 13, 1 4, 16, 29]. In some cases, lock-free

approaches can bring perform ance benefits [25]. For clar-

ity, we describe all strategies that avoid locks as lockless.

A major challenge for lockless synchroniz ation is han-

dling the read/reclaim races that arise in dynamic data

structures. Fig ure 1 illustrates the problem: thread T1 re-

moves node N fro m a list while thread T2 is referencin g it.

N ’s memory must be rec laimed to allow reuse, lest memory

exhaustion block all threads, but such reuse is unsafe while

T2 continues referencing N . For lan guages like C, where

memory m ust be explicitly recl a i m e d (e.g. via free()),

program mers must combine a memory reclamation scheme

with their lockl e ss d a t a structures to resolve these r ac es .

1

Several such reclamation schemes have been proposed.

Programmers need to understand the semantics and the

performance imp lications of ea ch scheme, since the over-

head of inefficien t recla mation can be worse than that of

locking. For example, reference counting [ 5, 29] has high

overhead in the base case and scales po orly with data-

structure size. Thi s is unacce ptable when performance is

the motivation for lock less synchronization. Unf ortunately,

there is no single optimal scheme and existing work is rela-

tively silent on factors affecting reclamat i on performance.

1

Reclamation is subsumed into automatic garbage collectors in envi-

ronments that provide them, such as Java

TM

.

1-4244-0054-6/06/$20.00 ©2006 IEEE