the feature compared with label $FT for each sentence.

J&L’s method was applied only to nouns and pronouns. To differentiate nouns and pronouns that are not comparators or features, they added a fourth label, $NEF (nonentity-feature). These labels were used as pivots, together with the special tokens \(l_i\) and \(r_j\) (tokens at specific positions; see footnote 1), #start (beginning of a sentence), and #end (end of a sentence), to generate sequence data. Only sequences with a single label and a minimum support greater than 1 percent were retained, and LSRs were then created from them. When the learned LSRs were applied for extraction, LSRs with higher confidence were applied first.
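The windowing step described above can be illustrated with a minimal sketch. It assumes a sentence has already been reduced to a list of POS tags, keywords, and one pivot label; the function name `window_sequence`, the `l2:has` style of encoding a position together with a token, and the rule that #start and #end are emitted only when the window reaches a sentence boundary are all illustrative assumptions, not details taken from J&L [7].

```python
def window_sequence(tokens, pivot_index, radius=4):
    """Build one sequence around the pivot label at tokens[pivot_index].

    Tokens to the left are tagged l1..l<radius> and tokens to the right
    r1..r<radius>, counted outward from the pivot (hypothetical encoding).
    """
    left = max(0, pivot_index - radius)
    right = min(len(tokens), pivot_index + radius + 1)
    seq = []
    if left == 0:
        # Assumption: the boundary marker appears only if the window reaches it.
        seq.append("#start")
    for k in range(left, right):
        offset = k - pivot_index
        if offset < 0:
            seq.append(f"l{-offset}:{tokens[k]}")
        elif offset == 0:
            seq.append(tokens[k])  # the pivot label itself, e.g. $FT
        else:
            seq.append(f"r{offset}:{tokens[k]}")
    if right == len(tokens):
        seq.append("#end")
    return seq


tokens = ["NN", "has", "better", "$FT", "than", "NN"]
print(window_sequence(tokens, pivot_index=3, radius=2))
```

With the default `radius=4` the window covers the range of i and j reported in footnote 1; the smaller radius in the usage line only keeps the printed sequence short.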

J&L’s method has proven effective in their experimental setups. However, it has the following weaknesses:

• The performance of J&L’s method relies heavily on a set of keywords that indicate comparative sentences. These keywords were created manually, and J&L offered no guidelines for selecting keywords for inclusion. It is also difficult to ensure the completeness of the keyword list.

• Users can express comparative sentences or questions in many different ways. To achieve high recall, a large annotated training corpus is necessary, and building one is expensive.

• The example CSRs and LSRs given in Jindal and Liu [7] are mostly combinations of POS tags and keywords. It is surprising that their rules achieved high precision but low recall. They attributed most errors to POS tagging errors. However, we suspect that their rules might be too specific and overfit their small training set (about 2,600 sentences). We would like to increase recall, avoid overfitting, and allow rules to include discriminative lexical tokens to retain precision.

In the next section, we introduce our method to address

these shortcomings.

3 WEAKLY SUPERVISED METHOD FOR COMPARATOR MINING

Our weakly supervised method is a pattern-based approach similar to J&L’s method, but it differs in many aspects. Instead of using separate CSRs and LSRs, our method aims to learn sequential patterns that can be used to identify comparative questions and extract comparators simultaneously.

In our approach, a sequential pattern is defined as a

sequence \(S(s_1 s_2 \ldots s_i \ldots s_n)\), where \(s_i\) can be a word, a POS

tag, or a symbol denoting either a comparator ($C), or the

beginning (#start) or the end of a question (#end). A

sequential pattern is called an indicative extraction pattern

(IEP) if it can be used to identify comparative questions

and extract comparators in them with high reliability. We

will formally define the reliability score of a pattern in the

next section.

Once a question matches an IEP, it is classified as a comparative question, and the token sequences corresponding to the comparator slots in the IEP are extracted as comparators. When a question matches multiple IEPs, the longest IEP is used (see footnote 2). Therefore, instead of manually creating a list of indicative keywords, we create a set of IEPs. In the following sections, we show how to acquire IEPs automatically using a bootstrapping procedure with minimum supervision by taking advantage of a large unlabeled question collection. The evaluations in Section 5 confirm that our weakly supervised method achieves high recall while retaining high precision.
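The matching step can be sketched as follows. This is a minimal illustration under several assumptions that are not stated in the text: a pattern is a list of strings, a question is a list of (word, POS) pairs, a pattern may match any contiguous stretch of the question, a $C slot absorbs one or more tokens (shortest span tried first), and "longest" means the largest number of pattern items. The names `match_iep` and `extract_comparators` are hypothetical.

```python
def match_iep(pattern, tagged):
    """Return the comparator strings extracted by `pattern`, or None."""
    items = [("#start", "#start")] + list(tagged) + [("#end", "#end")]

    def match_from(pi, qi, found):
        if pi == len(pattern):
            return found
        if qi >= len(items):
            return None
        sym = pattern[pi]
        if sym == "$C":
            # A comparator slot takes one or more ordinary tokens.
            for end in range(qi + 1, len(items) + 1):
                if items[end - 1][0] in ("#start", "#end"):
                    break
                span = " ".join(w for w, _ in items[qi:end])
                result = match_from(pi + 1, end, found + [span])
                if result is not None:
                    return result
            return None
        word, pos = items[qi]
        # Any other symbol matches either the lowercased word or the POS tag.
        if sym == word.lower() or sym == pos:
            return match_from(pi + 1, qi + 1, found)
        return None

    for start in range(len(items)):
        result = match_from(0, start, [])
        if result is not None:
            return result
    return None


def extract_comparators(ieps, tagged):
    """Apply every IEP and keep the result of the longest one that matches."""
    matches = [(len(p), match_iep(p, tagged)) for p in ieps]
    matches = [(n, m) for n, m in matches if m is not None]
    if not matches:
        return None  # not classified as a comparative question
    return max(matches, key=lambda nm: nm[0])[1]


question = [("Which", "WDT"), ("city", "NN"), ("is", "VBZ"), ("better", "JJR"),
            (",", ","), ("NYC", "NNP"), ("or", "CC"), ("Paris", "NNP"), ("?", ".")]
ieps = [["$C", "or", "$C", "?", "#end"],
        [",", "$C", "or", "$C", "?", "#end"]]
print(extract_comparators(ieps, question))
```

In this toy run the shorter pattern also matches, but its first slot absorbs "Which city is better , NYC"; the longer pattern pins the slot between the comma and "or", which is one way to see why preferring the most specific pattern is attractive.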

This pattern definition is inspired by the work of Ravichandran and Hovy [14]. Table 1 shows some examples of such sequential patterns. We also allow a POS constraint on comparators, as shown in the pattern “<, $C/NN or $C/NN ? #end>”, which means that a valid comparator must have an NN POS tag.
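A POS constraint on a comparator slot can be checked with a few lines of code. The sketch below assumes that a candidate comparator is a list of (word, POS) pairs and that the constraint must hold for every token in it; the text does not say how multi-token comparators are handled, so that rule and the name `comparator_allowed` are assumptions.

```python
def comparator_allowed(span, required_pos=None):
    """Check a candidate comparator against an optional POS constraint.

    span: list of (word, pos) pairs proposed for one comparator slot.
    required_pos: tag such as "NN", or None for an unconstrained slot.
    """
    if not span:
        return False  # a slot must cover at least one token
    if required_pos is None:
        return True
    # Assumption: every token in the span must carry the required tag.
    return all(pos == required_pos for _, pos in span)


print(comparator_allowed([("Paris", "NN")], required_pos="NN"))
print(comparator_allowed([("is", "VBZ")], required_pos="NN"))
```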

3.1 Mining Indicative Extraction Patterns

Our weakly supervised IEP mining approach is based on

two key assumptions.

• If a sequential pattern can be used to extract many reliable comparator pairs, it is very likely to be an IEP.

• If a comparator pair can be extracted by an IEP, the pair is reliable.
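The way these two assumptions reinforce each other can be sketched as a loop. This is only an illustration: the promotion rule used here (a pattern must extract at least `min_pairs` reliable pairs) is a stand-in and is not the reliability score the paper defines later, and the helpers `extract_pairs` and `candidate_patterns` are toy, hypothetical implementations that work on whitespace-tokenized strings.

```python
import re


def extract_pairs(pattern, question):
    """Toy extractor: a pattern is a string whose $C slots match single words."""
    regex = "^" + re.escape(pattern).replace(re.escape("$C"), r"(\w+)") + "$"
    m = re.match(regex, question)
    return [tuple(m.groups())] if m else []


def candidate_patterns(question, reliable_pairs):
    """Toy generator: replace a known reliable pair in the question with $C."""
    words = question.split()
    out = []
    for a, b in reliable_pairs:
        if a in words and b in words:
            out.append(" ".join("$C" if w in (a, b) else w for w in words))
    return out


def bootstrap(seed_iep, questions, min_pairs=2, max_rounds=10):
    ieps = {seed_iep}
    reliable_pairs = set()
    for _ in range(max_rounds):
        # Second assumption: pairs extracted by an IEP are reliable.
        for q in questions:
            for iep in ieps:
                reliable_pairs.update(extract_pairs(iep, q))
        # First assumption: a pattern that extracts many reliable pairs
        # is likely an IEP (placeholder threshold, not the paper's score).
        support = {}
        for q in questions:
            for pattern in candidate_patterns(q, reliable_pairs):
                hits = set(extract_pairs(pattern, q)) & reliable_pairs
                support.setdefault(pattern, set()).update(hits)
        promoted = {p for p, hits in support.items() if len(hits) >= min_pairs} - ieps
        if not promoted:
            break
        ieps |= promoted
    return ieps, reliable_pairs


questions = [
    "ipod vs zune ?",
    "nikon vs canon ?",
    "which is better ipod or zune ?",
    "which is better nikon or canon ?",
    "which is better emacs or vim ?",
    "what is python ?",
]
ieps, pairs = bootstrap("$C vs $C ?", questions)
print(sorted(ieps))
print(sorted(pairs))
```

In the toy run, the seed pattern yields two pairs, those pairs promote a second pattern, and the second pattern then extracts a pair that the seed alone could not reach.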

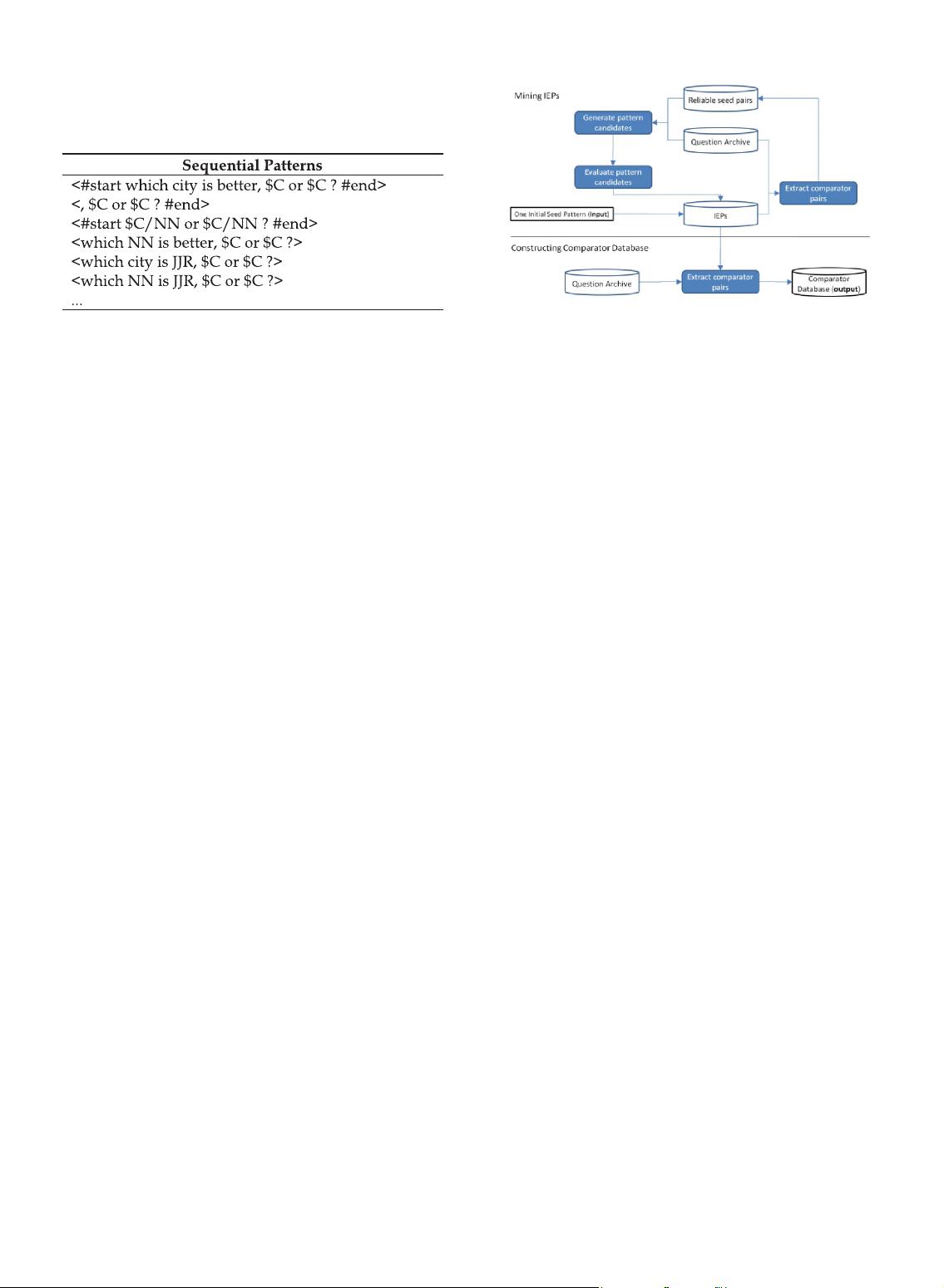

Based on these two assumptions, we design our bootstrapping algorithm as shown in Fig. 1. The bootstrapping process starts with a single IEP. From it, we extract a set of

1500 IEEE TRANSACTIONS ON KNOWLEDGE AND DATA ENGINEERING, VOL. 25, NO. 7, JULY 2013

1. \(l_i\) marks a token at the ith position to the left of the pivot, and \(r_j\) marks a token at the jth position to the right of the pivot, where i and j are between 1 and 4 in J&L [7].

2. This is because the longest IEP is likely to be the most specific and relevant pattern for the given question.

TABLE 1

Candidate Indicative Extraction Pattern (IEP) Examples of the

Question “Which City Is Better, NYC or Paris?”

Fig. 1. Overview of the bootstrapping algorithm.