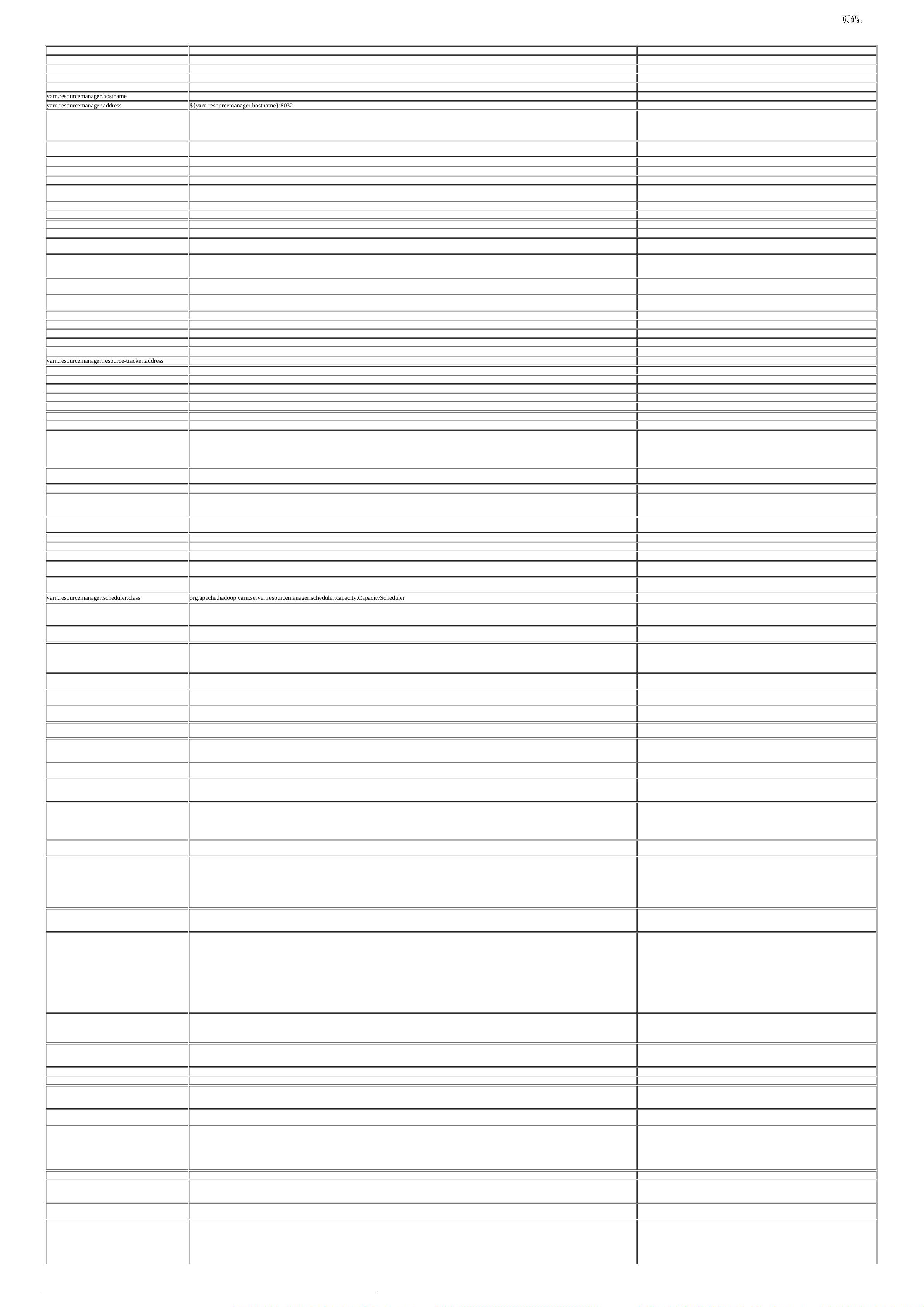

name value description

yarn.ipc.client.factory.class Factory to create client IPC classes.

yarn.ipc.server.factory.class Factory to create server IPC classes.

yarn.ipc.record.factory.class Factory to create serializable records.

yarn.ipc.rpc.class org.apache.hadoop.yarn.ipc.HadoopYarnProtoRPC RPC class implementation

yarn.resourcemanager.hostname 0.0.0.0 The hostname of the RM.

yarn.resourcemanager.address ${yarn.resourcemanager.hostname}:8032 The address of the applications manager interface in the RM.

yarn.resourcemanager.bind-host The actual address the server will bind to. If this optional address is set, the RPC and webapp servers will bind to this address and the port specified in yarn.resourcemanager.address and yarn.resourcemanager.webapp.address, respectively. This is most useful for making the RM listen on all interfaces by setting it to 0.0.0.0.
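The bind-host rule combines two settings: the host comes from yarn.resourcemanager.bind-host and the port from the service address. A minimal sketch, assuming addresses are plain `host:port` strings (IPv6 literals are not handled); the helpers `split_host_port` and `resolve_bind_address` are hypothetical and not part of YARN.

```python
from typing import Optional, Tuple


def split_host_port(address: str) -> Tuple[str, Optional[int]]:
    """Split 'host:port' into (host, port); port is None when only a host is given."""
    host, sep, port = address.rpartition(":")
    if not sep:
        # Only a host was supplied; for the webapp this means a random port.
        return address, None
    return host, int(port)


def resolve_bind_address(address: str, bind_host: Optional[str]) -> Tuple[str, Optional[int]]:
    """Return the (host, port) a server listens on (hypothetical helper).

    If bind_host is set, it replaces the host part of `address`;
    the port always comes from `address`.
    """
    host, port = split_host_port(address)
    return (bind_host if bind_host else host, port)


# RPC server: yarn.resourcemanager.address with bind-host 0.0.0.0
print(resolve_bind_address("rm1.example.com:8032", "0.0.0.0"))  # ('0.0.0.0', 8032)
# Webapp server without bind-host: listens on the configured host
print(resolve_bind_address("rm1.example.com:8088", None))       # ('rm1.example.com', 8088)
```

The host names above are placeholders; the ports are the defaults listed in this table.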

yarn.resourcemanager.auto-update.containers false If set to true, then ALL container updates will be automatically sent to the NM in the next heartbeat.

yarn.resourcemanager.client.thread-count 50 The number of threads used to handle applications manager requests.

yarn.resourcemanager.amlauncher.thread-count 50 Number of threads used to launch/cleanup AM.

yarn.resourcemanager.nodemanager-connect-retries 10 Number of times to retry connecting to the NM.

yarn.dispatcher.drain-events.timeout 300000 Timeout in milliseconds when the YARN dispatcher tries to drain the events. Typically, this happens when a service is stopping; e.g. the RM drains the ATS events dispatcher when stopping.
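To illustrate what a drain timeout bounds, here is a minimal sketch of draining a queue against a deadline. It is not YARN's dispatcher code; `drain_events` and the event names are hypothetical, and the sketch assumes events are handled synchronously on the caller's thread.

```python
import queue
import time
from typing import Any, Callable


def drain_events(events: queue.Queue, handle: Callable[[Any], None], timeout_ms: int) -> bool:
    """Hand queued events to `handle` until the queue is empty or timeout_ms elapses.

    Returns True if the queue was fully drained before the deadline.
    """
    deadline = time.monotonic() + timeout_ms / 1000.0
    while time.monotonic() < deadline:
        try:
            event = events.get_nowait()
        except queue.Empty:
            return True  # nothing left to process
        handle(event)
    return events.empty()  # deadline reached; report whether anything is left


pending = queue.Queue()
for name in ("event-1", "event-2", "event-3"):  # hypothetical event names
    pending.put(name)

processed = []
drained = drain_events(pending, processed.append, timeout_ms=300000)
print(drained, processed)  # True ['event-1', 'event-2', 'event-3']
```

If the deadline passes first, the function returns False and the remaining events are left in the queue, which is the situation the timeout exists to bound during shutdown.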

yarn.am.liveness-monitor.expiry-interval-ms 600000 The expiry interval for application master reporting.

yarn.resourcemanager.principal The Kerberos principal for the resource manager.

yarn.resourcemanager.scheduler.address ${yarn.resourcemanager.hostname}:8030 The address of the scheduler interface.

yarn.resourcemanager.scheduler.client.thread-count 50 Number of threads to handle the scheduler interface.

yarn.resourcemanager.application-master-service.processors Comma separated class names of ApplicationMasterServiceProcessor implementations. The processors will be applied in the order they are specified.

yarn.http.policy HTTP_ONLY This configures the HTTP endpoint for YARN daemons. The following values are supported: HTTP_ONLY (service is provided only on http); HTTPS_ONLY (service is provided only on https).

yarn.resourcemanager.webapp.address ${yarn.resourcemanager.hostname}:8088 The http address of the RM web application. If only a host is provided as the value, the webapp will be served on a random port.

yarn.resourcemanager.webapp.https.address ${yarn.resourcemanager.hostname}:8090 The https address of the RM web application. If only a host is provided as the value, the webapp will be served on a random port.

yarn.resourcemanager.webapp.spnego-keytab-file The Kerberos keytab file to be used for the SPNEGO filter for the RM web interface.

yarn.resourcemanager.webapp.spnego-principal The Kerberos principal to be used for the SPNEGO filter for the RM web interface.

yarn.resourcemanager.webapp.ui-actions.enabled true Adds a button to kill an application in the RM Application view.

yarn.webapp.ui2.enable false Enables the RM web UI2 application.

yarn.webapp.ui2.war-file-path Explicitly provide WAR file path for ui2 if needed.

yarn.resourcemanager.resource-tracker.address ${yarn.resourcemanager.hostname}:8031

yarn.acl.enable false Whether ACLs are enabled.

yarn.acl.reservation-enable false Whether reservation ACLs are enabled.

yarn.admin.acl * ACL of who can be admin of the YARN cluster.

yarn.resourcemanager.admin.address ${yarn.resourcemanager.hostname}:8033 The address of the RM admin interface.

yarn.resourcemanager.admin.client.thread-count 1 Number of threads used to handle RM admin interface.

yarn.resourcemanager.connect.max-wait.ms 900000 Maximum time to wait to establish connection to ResourceManager.

yarn.resourcemanager.connect.retry-interval.ms 30000 How often to try connecting to the ResourceManager.
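Taken together, these two settings bound how long a client keeps retrying: with the defaults, 900000 / 30000 = 30 sleeps, so at most 31 connection attempts. A minimal sketch of such a fixed-interval retry loop, assuming `connect` is any hypothetical callable that raises ConnectionError on failure; YARN's own client builds this from Hadoop's retry-policy classes, whose exact attempt accounting may differ.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def connect_with_retry(connect: Callable[[], T],
                       max_wait_ms: int = 900000,
                       retry_interval_ms: int = 30000,
                       sleep: Callable[[float], None] = time.sleep) -> T:
    """Call connect() until it succeeds or the sleep budget max_wait_ms is used up."""
    waited_ms = 0
    while True:
        try:
            return connect()
        except ConnectionError:
            if waited_ms + retry_interval_ms > max_wait_ms:
                raise  # another sleep would exceed the maximum wait
            sleep(retry_interval_ms / 1000.0)
            waited_ms += retry_interval_ms


# Usage: a hypothetical connection that succeeds on the third attempt.
attempts = []


def flaky_connect() -> str:
    attempts.append(1)
    if len(attempts) < 3:
        raise ConnectionError("ResourceManager not reachable yet")
    return "connected"


result = connect_with_retry(flaky_connect, sleep=lambda seconds: None)  # skip real sleeping
print(result, len(attempts))  # connected 3
```

Injecting `sleep` keeps the example fast; in real use the default `time.sleep` waits the full retry interval.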

yarn.resourcemanager.am.max-attempts 2 The maximum number of application attempts. It's a global setting for all application masters. Each application master can specify its individual maximum number of application attempts via the API, but the individual number cannot be more than the global upper bound. If it is, the ResourceManager will override it. The default is 2, which allows at least one retry for the AM.
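The override rule can be stated as a small function. A minimal sketch, where `effective_max_attempts` is hypothetical and treating a missing or non-positive per-application value as "use the global bound" is an assumption beyond what the description states.

```python
from typing import Optional


def effective_max_attempts(requested: Optional[int], global_max: int = 2) -> int:
    """Return the attempt limit used for one application (hypothetical helper).

    The per-application value from the API is honoured only if it lies in
    1..global_max; otherwise the global yarn.resourcemanager.am.max-attempts wins.
    Treating None or <= 0 as unset is an assumption of this sketch.
    """
    if requested is None or requested <= 0 or requested > global_max:
        return global_max
    return requested


print(effective_max_attempts(1))     # 1: within the global bound
print(effective_max_attempts(5))     # 2: overridden by the global bound
print(effective_max_attempts(None))  # 2: no per-application value
```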

yarn.resourcemanager.container.liveness-monitor.interval-ms 600000 How often to check that containers are still alive.

yarn.resourcemanager.keytab /etc/krb5.keytab The keytab for the resource manager.

yarn.resourcemanager.webapp.delegation-token-auth-filter.enabled true Flag to enable override of the default Kerberos authentication filter with the RM authentication filter to allow authentication using delegation tokens (falling back to Kerberos if the tokens are missing). Only applicable when the HTTP authentication type is Kerberos.

yarn.resourcemanager.webapp.cross-origin.enabled false Flag to enable cross-origin (CORS) support in the RM. This flag requires the CORS filter initializer to be added to the filter initializers list in core-site.xml.

yarn.nm.liveness-monitor.expiry-interval-ms 600000 How long to wait until a node manager is considered dead.
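An expiry interval like this one (and yarn.am.liveness-monitor.expiry-interval-ms above) follows a common pattern: remember the last heartbeat per entity and declare it dead once it has been silent longer than the interval. A minimal sketch; `LivenessMonitor` and the node ids are hypothetical, and time is passed in explicitly rather than read from a clock. The RM's own monitor also decides how often to run this check, which the sketch leaves out.

```python
from typing import Dict, List


class LivenessMonitor:
    """Remembers the last heartbeat of each node and reports those that expired."""

    def __init__(self, expiry_interval_ms: int = 600000) -> None:
        self.expiry_interval_ms = expiry_interval_ms
        self.last_heartbeat_ms: Dict[str, int] = {}

    def heartbeat(self, node_id: str, now_ms: int) -> None:
        self.last_heartbeat_ms[node_id] = now_ms

    def expire(self, now_ms: int) -> List[str]:
        """Return (and stop tracking) nodes silent for longer than the expiry interval."""
        dead = [node for node, last in self.last_heartbeat_ms.items()
                if now_ms - last > self.expiry_interval_ms]
        for node in dead:
            del self.last_heartbeat_ms[node]
        return dead


monitor = LivenessMonitor()
monitor.heartbeat("nm1.example.com:45454", now_ms=0)
monitor.heartbeat("nm2.example.com:45454", now_ms=500000)
expired_now = monitor.expire(now_ms=650000)
print(expired_now)  # ['nm1.example.com:45454']
```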

yarn.resourcemanager.nodes.include-path Path to file with nodes to include.

yarn.resourcemanager.nodes.exclude-path Path to file with nodes to exclude.

yarn.resourcemanager.node-ip-cache.expiry-interval-secs -1 The expiry interval for node IP caching. -1 disables the caching.

yarn.resourcemanager.resource-tracker.client.thread-count 50 Number of threads to handle resource tracker calls.

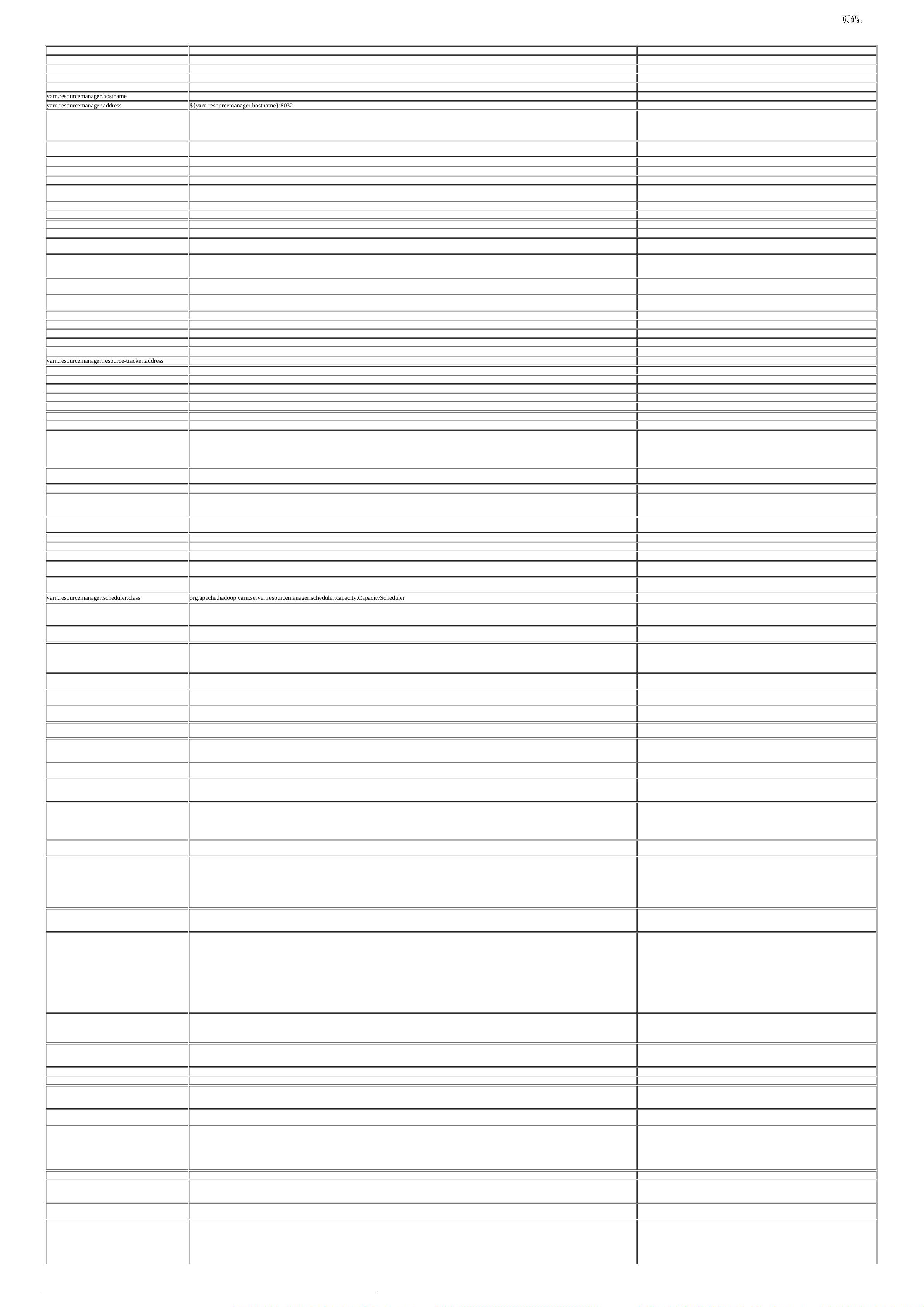

yarn.resourcemanager.scheduler.class org.apache.hadoop.yarn.server.resourcemanager.scheduler.capacity.CapacityScheduler The class to use as the resource scheduler.

yarn.scheduler.minimum-allocation-mb 1024 The minimum allocation for every container request at the RM in MBs. Memory requests lower than this will be set to the value of this property. Additionally, a node manager that is configured to have less memory than this value will be shut down by the resource manager.

yarn.scheduler.maximum-allocation-mb 8192 The maximum allocation for every container request at the RM in MBs. Memory requests higher than this will throw an InvalidResourceRequestException.

yarn.scheduler.minimum-allocation-vcores 1 The minimum allocation for every container request at the RM in terms of virtual CPU cores. Requests lower than this will be set to the value of this property. Additionally, a node manager that is configured to have fewer virtual cores than this value will be shut down by the resource manager.

yarn.scheduler.maximum-allocation-vcores 4 The maximum allocation for every container request at the RM in terms of virtual CPU cores. Requests higher than this will throw an InvalidResourceRequestException.
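The four allocation limits above can be read as one normalization step. A minimal sketch using the default values; `normalize_request`, `node_meets_minimum` and `InvalidResourceRequestError` are hypothetical stand-ins. The sketch applies only the raising and rejecting described in this table; an actual scheduler may apply further rounding that is omitted here.

```python
from typing import Tuple


class InvalidResourceRequestError(ValueError):
    """Stand-in for YARN's InvalidResourceRequestException in this sketch."""


def normalize_request(memory_mb: int, vcores: int,
                      min_mb: int = 1024, max_mb: int = 8192,
                      min_vcores: int = 1, max_vcores: int = 4) -> Tuple[int, int]:
    """Raise requests below the minimum; reject requests above the maximum."""
    if memory_mb > max_mb:
        raise InvalidResourceRequestError(f"memory {memory_mb} MB exceeds maximum {max_mb} MB")
    if vcores > max_vcores:
        raise InvalidResourceRequestError(f"{vcores} vcores exceeds maximum {max_vcores}")
    return max(memory_mb, min_mb), max(vcores, min_vcores)


def node_meets_minimum(node_mb: int, node_vcores: int,
                       min_mb: int = 1024, min_vcores: int = 1) -> bool:
    """False means the RM would shut this node manager down."""
    return node_mb >= min_mb and node_vcores >= min_vcores


print(normalize_request(512, 1))   # (1024, 1)
print(normalize_request(4096, 2))  # (4096, 2)
print(node_meets_minimum(768, 8))  # False
try:
    normalize_request(16384, 2)
except InvalidResourceRequestError as err:
    print("rejected:", err)  # rejected: memory 16384 MB exceeds maximum 8192 MB
```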

yarn.scheduler.include-port-in-node-name false Used by node labels. If set to true, the port should be included in the node name. Only usable if your scheduler supports node labels.

yarn.resourcemanager.recovery.enabled false Enable RM to recover state after starting. If true, then yarn.resourcemanager.store.class must be specified.

yarn.resourcemanager.fail-fast ${yarn.fail-fast} Should the RM fail fast if it encounters any errors. By default, it points to ${yarn.fail-fast}. Errors include: 1) exceptions when state-store write/read operations fail.

yarn.fail-fast false Should YARN fail fast if it encounters any errors. This is a global config for all other components including RM, NM, etc. If no value is set for a component-specific config (e.g. yarn.resourcemanager.fail-fast), this value will be the default.
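The fallback works through `${...}` references: yarn.resourcemanager.fail-fast defaults to the text `${yarn.fail-fast}`, which is expanded from the other property on lookup, just as the addresses above expand `${yarn.resourcemanager.hostname}`. A simplified sketch of that expansion over a plain dict; `resolve` is hypothetical, the depth limit is arbitrary, and Hadoop's Configuration class has additional sources and rules not modelled here.

```python
import re
from typing import Dict, Optional

_VAR = re.compile(r"\$\{([^}$\s]+)\}")


def resolve(conf: Dict[str, str], key: str, max_depth: int = 20) -> Optional[str]:
    """Look up `key` and expand ${other.key} references from the same configuration."""
    value = conf.get(key)
    if value is None:
        return None
    for _ in range(max_depth):  # bound the expansion to stop reference cycles
        match = _VAR.search(value)
        if match is None:
            break
        replacement = conf.get(match.group(1))
        if replacement is None:
            break  # unknown variable: leave the literal ${...} in place
        value = value[:match.start()] + replacement + value[match.end():]
    return value


defaults = {
    "yarn.fail-fast": "false",
    "yarn.resourcemanager.fail-fast": "${yarn.fail-fast}",
    "yarn.resourcemanager.hostname": "0.0.0.0",
    "yarn.resourcemanager.address": "${yarn.resourcemanager.hostname}:8032",
}
print(resolve(defaults, "yarn.resourcemanager.fail-fast"))  # false
print(resolve(defaults, "yarn.resourcemanager.address"))    # 0.0.0.0:8032

# Overriding only the global switch changes the RM default as well.
site = {**defaults, "yarn.fail-fast": "true"}
print(resolve(site, "yarn.resourcemanager.fail-fast"))      # true
```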

yarn.resourcemanager.work-preserving-recovery.enabled true Enable RM work preserving recovery. This configuration is private to YARN for experimenting with the feature.

yarn.resourcemanager.work-preserving-recovery.scheduling-wait-ms 10000 Set the amount of time the RM waits before allocating new containers on work-preserving recovery. Such a wait period gives the RM a chance to settle down resyncing with NMs in the cluster on recovery, before assigning new containers to applications.

yarn.resourcemanager.store.class org.apache.hadoop.yarn.server.resourcemanager.recovery.FileSystemRMStateStore The class to use as the persistent store. If org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore is used, the store is implicitly fenced, meaning only a single ResourceManager is able to use the store at any point in time. More details on this implicit fencing, along with setting up appropriate ACLs, are discussed under yarn.resourcemanager.zk-state-store.root-node.acl.

yarn.resourcemanager.ha.failover-controller.active-standby-elector.zk.retries When automatic failover is enabled, the number of ZooKeeper operation retries in ActiveStandbyElector.

yarn.resourcemanager.state-store.max-completed-applications ${yarn.resourcemanager.max-completed-applications} The maximum number of completed applications the RM state store keeps, which must be less than or equal to ${yarn.resourcemanager.max-completed-applications}. By default, it equals ${yarn.resourcemanager.max-completed-applications}. This ensures that the applications kept in the state store are consistent with the applications remembered in RM memory. Any value larger than ${yarn.resourcemanager.max-completed-applications} will be reset to ${yarn.resourcemanager.max-completed-applications}. Note that this value impacts RM recovery performance. Typically, a smaller value indicates better performance on RM recovery.
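The interaction of the two limits can be sketched as: clamp the state-store limit to the in-memory limit, then drop the excess. A minimal sketch, where `apps_to_remove_from_store` is hypothetical, the application ids are made up, and removing the oldest completed applications first is an assumption.

```python
from typing import List, Optional


def apps_to_remove_from_store(completed_oldest_first: List[str],
                              rm_max_completed: int,
                              store_max_completed: Optional[int] = None) -> List[str]:
    """Return the oldest completed applications that exceed the state-store limit."""
    limit = rm_max_completed
    if store_max_completed is not None:
        limit = min(store_max_completed, rm_max_completed)  # larger values are reset
    excess = len(completed_oldest_first) - limit
    return completed_oldest_first[:excess] if excess > 0 else []


apps = ["application_1_0001", "application_1_0002", "application_1_0003", "application_1_0004"]
print(apps_to_remove_from_store(apps, rm_max_completed=3))
# ['application_1_0001']
print(apps_to_remove_from_store(apps, rm_max_completed=3, store_max_completed=2))
# ['application_1_0001', 'application_1_0002']
print(apps_to_remove_from_store(apps, rm_max_completed=3, store_max_completed=9))
# ['application_1_0001']  (9 is reset to 3)
```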

yarn.resourcemanager.zk-state-store.parent-path /rmstore Full path of the ZooKeeper znode where RM state will be stored. This must be supplied when using org.apache.hadoop.yarn.server.resourcemanager.recovery.ZKRMStateStore as the value for yarn.resourcemanager.store.class.

yarn.resourcemanager.zk-state-store.root-node.acl ACLs to be used for the root znode when using ZKRMStateStore in an HA scenario for fencing. ZKRMStateStore supports implicit fencing to allow a single ResourceManager write-access to the store. For fencing, the ResourceManagers in the cluster share read-write-admin privileges on the root node, but the Active ResourceManager claims exclusive create-delete permissions. By default, when this property is not set, we use the ACLs from yarn.resourcemanager.zk-acl for shared admin access and rm-address:random-number for username-based exclusive create-delete access. This property allows users to set ACLs of their choice instead of using the default mechanism. For fencing to work, the ACLs should be carefully set differently on each ResourceManager such that all the ResourceManagers have shared admin access and the Active ResourceManager takes over (exclusively) the create-delete access.

yarn.resourcemanager.fs.state-store.uri ${hadoop.tmp.dir}/yarn/system/rmstore URI pointing to the location of the FileSystem path where RM state will be stored. This must be supplied when using org.apache.hadoop.yarn.server.resourcemanager.recovery.FileSystemRMStateStore as the value for yarn.resourcemanager.store.class.

yarn.resourcemanager.fs.state-store.retry-policy-spec 2000, 500 HDFS client retry policy specification. HDFS client retry is always enabled. Specified in pairs of sleep-time and number-of-retries, (t0, n0), (t1, n1), ...: the first n0 retries sleep t0 milliseconds on average, the following n1 retries sleep t1 milliseconds on average, and so on.
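The pair format can be decoded mechanically. A minimal sketch that parses the spec and reports the average sleep before a given retry; `parse_retry_spec` and `average_sleep_ms` are hypothetical, the spec `"1000, 2, 5000, 3"` is a made-up example, and because the description says "on average", a real client presumably varies each individual sleep around that value, which this sketch does not model.

```python
from typing import List, Optional, Tuple


def parse_retry_spec(spec: str) -> List[Tuple[int, int]]:
    """Parse 't0, n0, t1, n1, ...' into [(t0, n0), (t1, n1), ...]."""
    numbers = [int(part) for part in spec.split(",")]  # int() tolerates surrounding spaces
    if len(numbers) % 2 != 0:
        raise ValueError(f"expected sleep-time/number-of-retries pairs, got {spec!r}")
    return list(zip(numbers[0::2], numbers[1::2]))


def average_sleep_ms(pairs: List[Tuple[int, int]], retry: int) -> Optional[int]:
    """Average sleep before retry number `retry` (0-based), or None when retries run out."""
    remaining = retry
    for sleep_ms, count in pairs:
        if remaining < count:
            return sleep_ms
        remaining -= count
    return None


default_pairs = parse_retry_spec("2000, 500")
print(default_pairs)                         # [(2000, 500)]
print(average_sleep_ms(default_pairs, 499))  # 2000
print(average_sleep_ms(default_pairs, 500))  # None: all 500 retries used

custom = parse_retry_spec("1000, 2, 5000, 3")
print([average_sleep_ms(custom, i) for i in range(6)])  # [1000, 1000, 5000, 5000, 5000, None]
```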

yarn.resourcemanager.fs.state-store.num-retries 0 The number of retries to recover from IOException in FileSystemRMStateStore.

yarn.resourcemanager.fs.state-store.retry-interval-ms 1000 Retry interval in milliseconds in FileSystemRMStateStore.

yarn.resourcemanager.leveldb-state-store.path ${hadoop.tmp.dir}/yarn/system/rmstore Local path where the RM state will be stored when using org.apache.hadoop.yarn.server.resourcemanager.recovery.LeveldbRMStateStore as the value for yarn.resourcemanager.store.class.

yarn.resourcemanager.leveldb-state-store.compaction-interval-secs 3600 The time in seconds between full compactions of the leveldb database. Setting the interval to zero disables the full compaction cycles.
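The recovery entries above pair each store class with the property that "must be supplied" for it. A minimal sketch of a pre-flight check over a flat dict of effective settings (defaults not filled in); `missing_recovery_settings` is a hypothetical helper, not an RM API, and the RM performs its own validation at startup.

```python
from typing import Dict, List

PKG = "org.apache.hadoop.yarn.server.resourcemanager.recovery."

# Store class -> property that must be supplied with it (per the entries above).
REQUIRED_PATH_KEY = {
    PKG + "ZKRMStateStore": "yarn.resourcemanager.zk-state-store.parent-path",
    PKG + "FileSystemRMStateStore": "yarn.resourcemanager.fs.state-store.uri",
    PKG + "LeveldbRMStateStore": "yarn.resourcemanager.leveldb-state-store.path",
}


def missing_recovery_settings(conf: Dict[str, str]) -> List[str]:
    """List properties that recovery needs but the configuration lacks."""
    if conf.get("yarn.resourcemanager.recovery.enabled", "false").lower() != "true":
        return []  # recovery disabled: nothing is required
    store = conf.get("yarn.resourcemanager.store.class")
    if not store:
        return ["yarn.resourcemanager.store.class"]
    path_key = REQUIRED_PATH_KEY.get(store)
    if path_key is not None and not conf.get(path_key):
        return [path_key]
    return []


zk_conf = {
    "yarn.resourcemanager.recovery.enabled": "true",
    "yarn.resourcemanager.store.class": PKG + "ZKRMStateStore",
}
print(missing_recovery_settings(zk_conf))  # ['yarn.resourcemanager.zk-state-store.parent-path']
zk_conf["yarn.resourcemanager.zk-state-store.parent-path"] = "/rmstore"
print(missing_recovery_settings(zk_conf))  # []
```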

yarn.resourcemanager.ha.enabled false Enable RM high-availability. When enabled: (1) the RM starts in Standby mode by default, and transitions to Active mode when prompted to; (2) the nodes in the RM ensemble are listed in yarn.resourcemanager.ha.rm-ids; (3) the id of each RM either comes from yarn.resourcemanager.ha.id, if it is explicitly specified, or is figured out by matching yarn.resourcemanager.address.{id} with the local address; (4) the actual physical addresses come from configs of the pattern {rpc-config}.{id}.
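Steps (2) to (4) amount to a lookup over suffixed keys. A minimal sketch, assuming `local_hosts` is a hypothetical stand-in for this machine's host names and IPs (the real RM resolves addresses and checks local interfaces), and that an ambiguous or missing match yields None; `resolve_rm_ha_id`, `ha_address` and the host names are hypothetical.

```python
from typing import Dict, Optional, Set


def resolve_rm_ha_id(conf: Dict[str, str], local_hosts: Set[str]) -> Optional[str]:
    """Pick this RM's id: explicit yarn.resourcemanager.ha.id, else the single rm-id
    whose yarn.resourcemanager.address.{id} host is one of this machine's names."""
    explicit = conf.get("yarn.resourcemanager.ha.id")
    if explicit:
        return explicit
    rm_ids = [i.strip() for i in conf.get("yarn.resourcemanager.ha.rm-ids", "").split(",") if i.strip()]
    matches = []
    for rm_id in rm_ids:
        address = conf.get(f"yarn.resourcemanager.address.{rm_id}")
        if address and address.rsplit(":", 1)[0] in local_hosts:
            matches.append(rm_id)
    return matches[0] if len(matches) == 1 else None  # no match or ambiguous


def ha_address(conf: Dict[str, str], rpc_config: str, rm_id: str) -> Optional[str]:
    """Physical address from the {rpc-config}.{id} pattern."""
    return conf.get(f"{rpc_config}.{rm_id}")


conf = {
    "yarn.resourcemanager.ha.enabled": "true",
    "yarn.resourcemanager.ha.rm-ids": "rm1,rm2",
    "yarn.resourcemanager.address.rm1": "master1.example.com:8032",
    "yarn.resourcemanager.address.rm2": "master2.example.com:8032",
}
my_id = resolve_rm_ha_id(conf, local_hosts={"master2.example.com", "10.0.0.2"})
print(my_id)                                                   # rm2
print(ha_address(conf, "yarn.resourcemanager.address", my_id))  # master2.example.com:8032
```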

yarn.resourcemanager.ha.automatic-failover.enabled true Enable automatic failover. By default, it is enabled only when HA is enabled.

yarn.resourcemanager.ha.automatic-failover.embedded true Enable embedded automatic failover. By default, it is enabled only when HA is enabled. The embedded elector relies on the RM state store to handle fencing, and is primarily intended to be used in conjunction with ZKRMStateStore.

yarn.resourcemanager.ha.automatic-failover.zk-base-path /yarn-leader-election The base znode path to use for storing leader information, when using ZooKeeper based leader election.

yarn.resourcemanager.zk-appid-node.split-index 0 Index at which the last section of the application id (with each section separated by _ in the application id) will be split so that the application znode stored in the ZooKeeper RM state store will be stored as two different znodes (parent-child). The split is done from the end. For instance, with no split, the appid znode will be of the form application_1352994193343_0001. If the value of this config is 1, the appid znode will be broken into two parts, application_1352994193343_000 and 1 respectively, with the former being the parent node. application_1352994193343_0002 will then be


2018/4/2http://hadoop.apache.org/docs/r2.9.0/hadoop-yarn/hadoop-yarn-common/yarn-default.xml