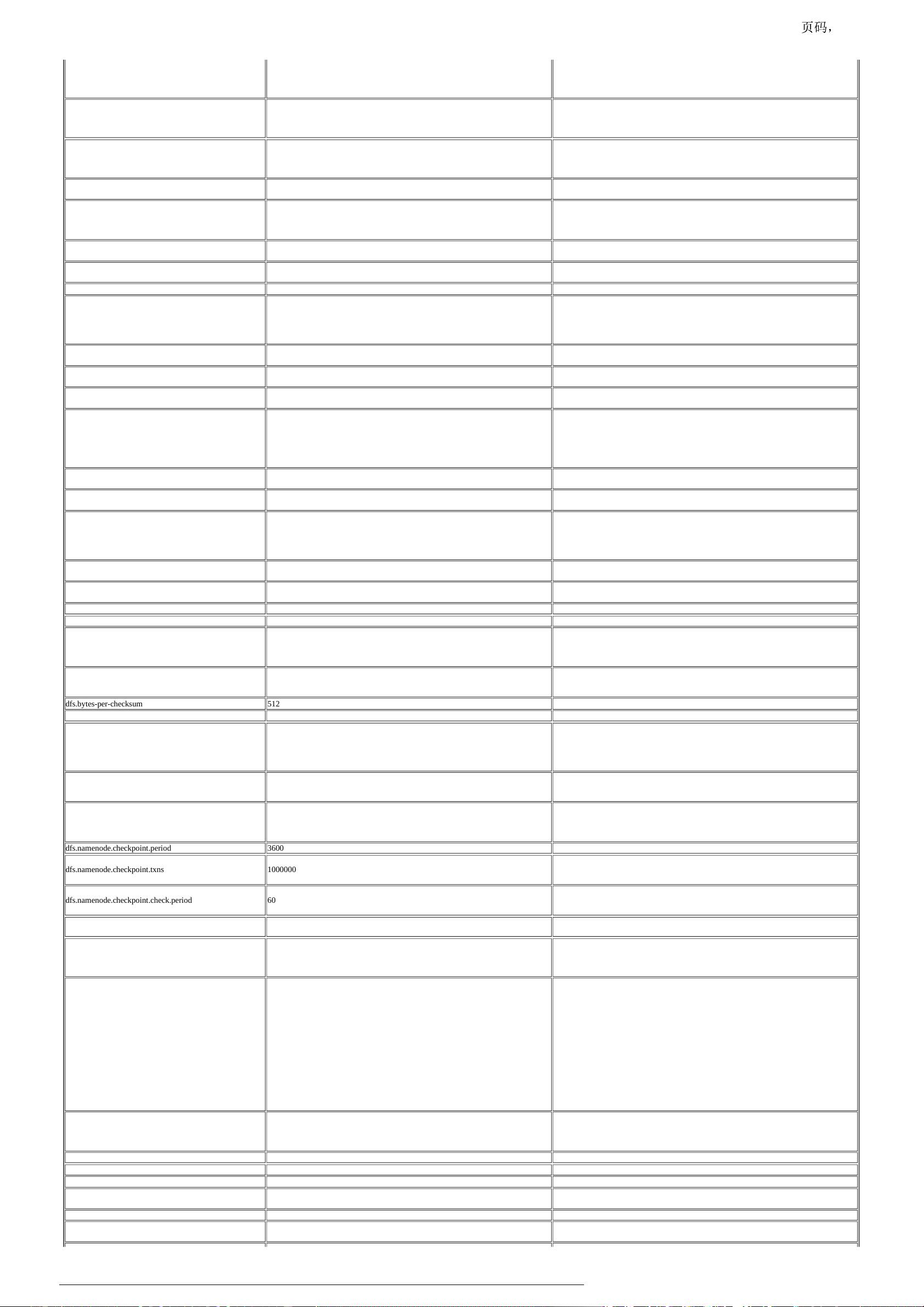

dfs.namenode.lifeline.handler.count Sets an absolute number of RPC server threads the NameNode runs for handling the

DataNode Lifeline Protocol and HA health check requests from ZKFC. If this property is

defined, then it overrides the behavior of dfs.namenode.lifeline.handler.ratio. By default, it is

not defined. This property has no effect if dfs.namenode.lifeline.rpc-address is not defined.

dfs.namenode.safemode.threshold-pct 0.999f

Specifies the percentage of blocks that should satisfy the minimal replication requirement

defined by dfs.namenode.replication.min. Values less than or equal to 0 mean not to wait for

any particular percentage of blocks before exiting safemode. Values greater than 1 will make

safe mode permanent.

dfs.namenode.safemode.min.datanodes 0

Specifies the number of datanodes that must be considered alive before the name node exits

safemode. Values less than or equal to 0 mean not to take the number of live datanodes into

account when deciding whether to remain in safe mode during startup. Values greater than

the number of datanodes in the cluster will make safe mode permanent.

dfs.namenode.safemode.extension 30000

Determines extension of safe mode in milliseconds after the threshold level is reached.

Supports multiple time unit suffixes (case insensitive), as described in dfs.heartbeat.interval.
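
As an illustration of how the three safemode properties above combine, here is a minimal hdfs-site.xml sketch. The values are hypothetical and chosen only to show the syntax; they are not recommendations.

```xml
<configuration>
  <!-- Illustrative values only (assumptions, not tuning advice). -->
  <property>
    <name>dfs.namenode.safemode.threshold-pct</name>
    <!-- Must stay at or below 1; larger values make safe mode permanent. -->
    <value>0.99</value>
  </property>
  <property>
    <name>dfs.namenode.safemode.min.datanodes</name>
    <!-- Must not exceed the number of datanodes in the cluster. -->
    <value>3</value>
  </property>
  <property>
    <name>dfs.namenode.safemode.extension</name>
    <!-- Milliseconds to remain in safe mode after the threshold is reached. -->
    <value>60000</value>
  </property>
</configuration>
```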

dfs.namenode.resource.check.interval 5000

The interval in milliseconds at which the NameNode resource checker runs. The checker

calculates the number of NameNode storage volumes whose available space is more

than dfs.namenode.resource.du.reserved, and enters safemode if the number becomes lower

than the minimum value specified by dfs.namenode.resource.checked.volumes.minimum.

dfs.namenode.resource.du.reserved 104857600

The amount of space to reserve/require for a NameNode storage directory in bytes. The

default is 100MB.

dfs.namenode.resource.checked.volumes

A list of local directories for the NameNode resource checker to check in addition to the

local edits directories.

dfs.namenode.resource.checked.volumes.minimum 1 The minimum number of redundant NameNode storage volumes required.
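
The resource checker properties above could be overridden together as in the following sketch. The directory `/data/nn-extra` is a hypothetical path, and the reserved size (200 MB expressed in bytes) is only an example.

```xml
<configuration>
  <property>
    <name>dfs.namenode.resource.check.interval</name>
    <!-- Run the checker every 5 seconds (the default). -->
    <value>5000</value>
  </property>
  <property>
    <name>dfs.namenode.resource.du.reserved</name>
    <!-- 200 MB in bytes; illustrative value. -->
    <value>209715200</value>
  </property>
  <property>
    <name>dfs.namenode.resource.checked.volumes</name>
    <!-- Hypothetical extra directory, checked in addition to the local edits directories. -->
    <value>/data/nn-extra</value>
  </property>
  <property>
    <name>dfs.namenode.resource.checked.volumes.minimum</name>
    <value>1</value>
  </property>
</configuration>
```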

dfs.datanode.balance.bandwidthPerSec 10m

Specifies the maximum amount of bandwidth that each datanode can utilize for the

balancing purpose in terms of the number of bytes per second. You can use the following

suffixes (case insensitive): k(kilo), m(mega), g(giga), t(tera), p(peta), e(exa) to specify the size

(such as 128k, 512m, 1g, etc.). Or provide complete size in bytes (such as 134217728 for

128 MB).
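
For example, the two notations described above can express the same limit. The sketch below uses a hypothetical 50 MB per second cap. Going by the 128 MB = 134217728 example above, `50m` would correspond to 52428800 bytes.

```xml
<configuration>
  <property>
    <name>dfs.datanode.balance.bandwidthPerSec</name>
    <!-- Suffix form; the plain byte count 52428800 would be the equivalent. -->
    <value>50m</value>
  </property>
</configuration>
```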

dfs.hosts

Names a file that contains a list of hosts that are permitted to connect to the namenode. The

full pathname of the file must be specified. If the value is empty, all hosts are permitted.

dfs.hosts.exclude

Names a file that contains a list of hosts that are not permitted to connect to the namenode.

The full pathname of the file must be specified. If the value is empty, no hosts are excluded.
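
A minimal sketch of both properties set in hdfs-site.xml follows. The file locations are hypothetical. Only the requirement that they be full pathnames comes from the descriptions above.

```xml
<configuration>
  <property>
    <name>dfs.hosts</name>
    <!-- Hypothetical full path to the file listing permitted hosts. -->
    <value>/etc/hadoop/conf/dfs.hosts</value>
  </property>
  <property>
    <name>dfs.hosts.exclude</name>
    <!-- Hypothetical full path to the file listing excluded hosts. -->
    <value>/etc/hadoop/conf/dfs.hosts.exclude</value>
  </property>
</configuration>
```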

dfs.namenode.max.objects 0

The maximum number of files, directories and blocks dfs supports. A value of zero indicates

no limit to the number of objects that dfs supports.

dfs.namenode.datanode.registration.ip-hostname-check true

If true (the default), then the namenode requires that a connecting datanode's address must

be resolved to a hostname. If necessary, a reverse DNS lookup is performed. All attempts to

register a datanode from an unresolvable address are rejected. It is recommended that this

setting be left on to prevent accidental registration of datanodes listed by hostname in the

excludes file during a DNS outage. Only set this to false in environments where there is no

infrastructure to support reverse DNS lookup.

dfs.namenode.decommission.interval 30

How often, in seconds, the namenode checks whether decommission or maintenance is complete.

Supports multiple time unit suffixes (case insensitive), as described in dfs.heartbeat.interval.

dfs.namenode.decommission.blocks.per.interval 500000

The approximate number of blocks to process per decommission or maintenance interval, as

defined in dfs.namenode.decommission.interval.

dfs.namenode.decommission.max.concurrent.tracked.nodes 100

The maximum number of decommission-in-progress or entering-maintenance datanodes

that will be tracked at one time by the namenode. Tracking these datanodes consumes

additional NN memory proportional to the number of blocks on the datanode. Having a

conservative limit reduces the potential impact of decommissioning or maintenance of a

large number of nodes at once. A value of 0 means no limit will be enforced.
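
The three decommission properties above are typically tuned together. The following sketch shows a more conservative setup than the defaults. All values are illustrative assumptions.

```xml
<configuration>
  <property>
    <name>dfs.namenode.decommission.interval</name>
    <!-- Check every 60 seconds instead of the default 30. -->
    <value>60</value>
  </property>
  <property>
    <name>dfs.namenode.decommission.blocks.per.interval</name>
    <!-- Approximate number of blocks processed per interval. -->
    <value>250000</value>
  </property>
  <property>
    <name>dfs.namenode.decommission.max.concurrent.tracked.nodes</name>
    <!-- Lower cap to limit extra NameNode memory use; 0 would mean no limit. -->
    <value>50</value>
  </property>
</configuration>
```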

dfs.namenode.replication.interval 3

The periodicity in seconds with which the namenode computes replication work for

datanodes.

dfs.namenode.accesstime.precision 3600000

The access time for an HDFS file is precise up to this value, in milliseconds. The default value is 1 hour. Setting

a value of 0 disables access times for HDFS.

dfs.datanode.plugins Comma-separated list of datanode plug-ins to be activated.

dfs.namenode.plugins Comma-separated list of namenode plug-ins to be activated.

dfs.namenode.block-placement-policy.default.prefer-local-node true

Controls how the default block placement policy places the first replica of a block. When

true, it will prefer the node where the client is running. When false, it will prefer a node in

the same rack as the client. Setting to false avoids situations where entire copies of large

files end up on a single node, thus creating hotspots.
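
To steer the first replica toward a node in the client's rack rather than the client's own node, as described above, the property can be overridden like this (a minimal sketch):

```xml
<configuration>
  <property>
    <name>dfs.namenode.block-placement-policy.default.prefer-local-node</name>
    <!-- false: prefer a node in the same rack as the client. -->
    <value>false</value>
  </property>
</configuration>
```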

dfs.stream-buffer-size 4096

The size of the buffer used to stream files. The size of this buffer should probably be a multiple of

hardware page size (4096 on Intel x86), and it determines how much data is buffered during

read and write operations.

dfs.bytes-per-checksum 512 The number of bytes per checksum. Must not be larger than dfs.stream-buffer-size.

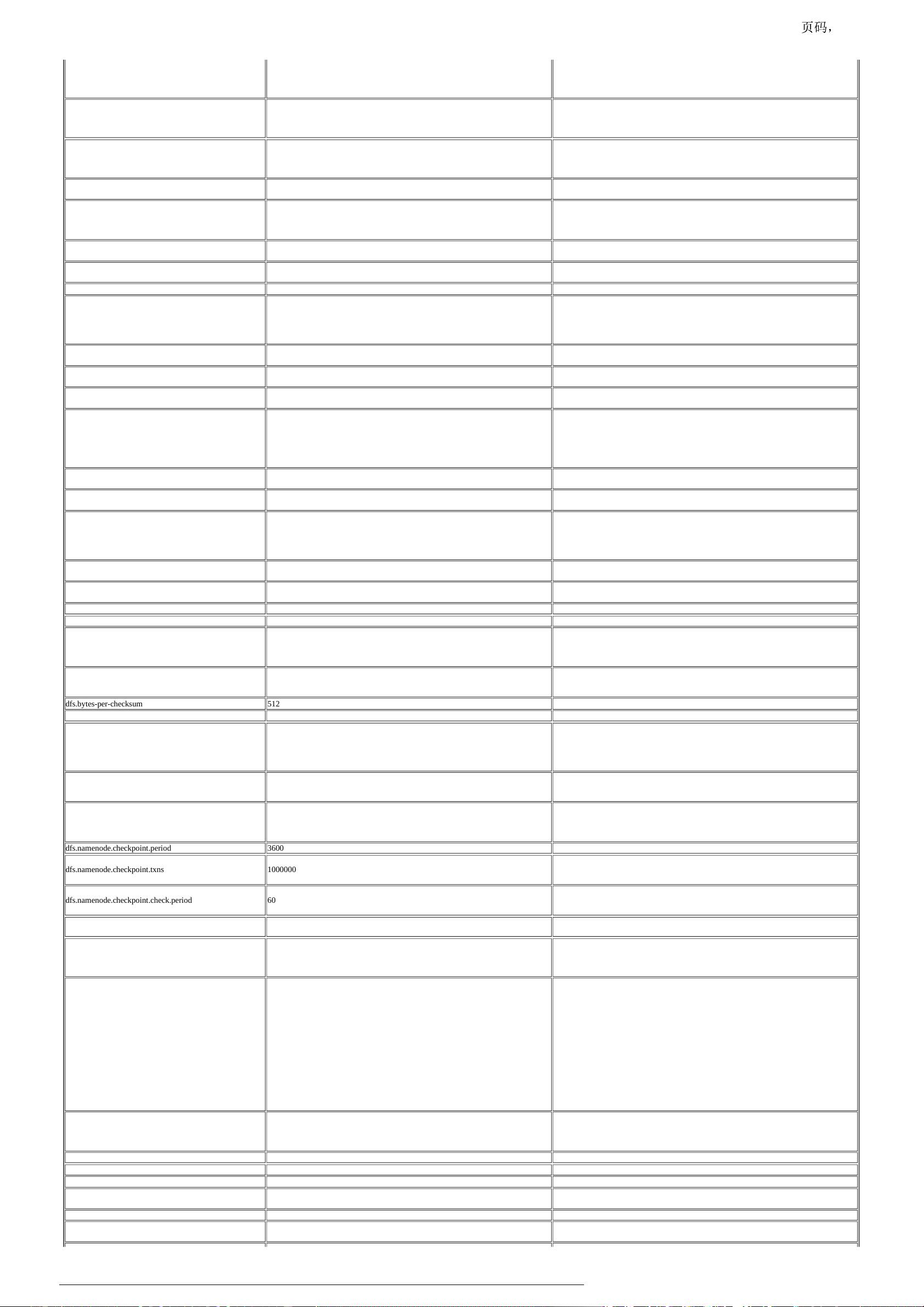

dfs.client-write-packet-size 65536 Packet size for clients to write.

dfs.client.write.exclude.nodes.cache.expiry.interval.millis 600000

The maximum period to keep a DN in the excluded nodes list at a client. After this period, in

milliseconds, the previously excluded node(s) will be removed automatically from the cache

and will be considered good for block allocations again. Useful to lower or raise in situations

where you keep a file open for very long periods (such as a Write-Ahead-Log (WAL) file) to

make the writer tolerant to cluster maintenance restarts. Defaults to 10 minutes.
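
For a long-lived writer such as the WAL case mentioned above, a client-side override might look like the following sketch. The 5-minute value is a hypothetical choice, not a recommendation.

```xml
<configuration>
  <property>
    <name>dfs.client.write.exclude.nodes.cache.expiry.interval.millis</name>
    <!-- 5 minutes in milliseconds; the default is 600000 (10 minutes). -->
    <value>300000</value>
  </property>
</configuration>
```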

dfs.namenode.checkpoint.dir file://${hadoop.tmp.dir}/dfs/namesecondary

Determines where on the local filesystem the DFS secondary name node should store the

temporary images to merge. If this is a comma-delimited list of directories then the image is

replicated in all of the directories for redundancy.

dfs.namenode.checkpoint.edits.dir ${dfs.namenode.checkpoint.dir}

Determines where on the local filesystem the DFS secondary name node should store the

temporary edits to merge. If this is a comma-delimited list of directories then the edits are

replicated in all of the directories for redundancy. The default value is the same as

dfs.namenode.checkpoint.dir.

dfs.namenode.checkpoint.period 3600 The number of seconds between two periodic checkpoints.

dfs.namenode.checkpoint.txns 1000000

The Secondary NameNode or CheckpointNode will create a checkpoint of the namespace

every 'dfs.namenode.checkpoint.txns' transactions, regardless of whether

'dfs.namenode.checkpoint.period' has expired.

dfs.namenode.checkpoint.check.period 60

The SecondaryNameNode and CheckpointNode will poll the NameNode every

'dfs.namenode.checkpoint.check.period' seconds to query the number of uncheckpointed

transactions.
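
The period, transaction, and polling properties above interact: a checkpoint is triggered by whichever of the first two limits is hit first, and the polling interval determines how promptly the transaction limit is noticed. A sketch with illustrative values:

```xml
<configuration>
  <property>
    <name>dfs.namenode.checkpoint.period</name>
    <!-- Checkpoint at least every 30 minutes (value in seconds). -->
    <value>1800</value>
  </property>
  <property>
    <name>dfs.namenode.checkpoint.txns</name>
    <!-- Or sooner, once this many uncheckpointed transactions accumulate. -->
    <value>500000</value>
  </property>
  <property>
    <name>dfs.namenode.checkpoint.check.period</name>
    <!-- Poll the NameNode for the transaction count every 60 seconds. -->
    <value>60</value>
  </property>
</configuration>
```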

dfs.namenode.checkpoint.max-retries 3

The SecondaryNameNode retries failed checkpointing. If the failure occurs while loading

fsimage or replaying edits, the number of retries is limited by this variable.

dfs.namenode.num.checkpoints.retained 2

The number of image checkpoint files (fsimage_*) that will be retained by the NameNode

and Secondary NameNode in their storage directories. All edit logs (stored on edits_* files)

necessary to recover an up-to-date namespace from the oldest retained checkpoint will also

be retained.

dfs.namenode.num.extra.edits.retained 1000000

The number of extra transactions which should be retained beyond what is minimally

necessary for a NN restart. It does not translate directly to a file's age, or the number of files

kept, but to the number of transactions (here "edits" means transactions). One edit file may

contain several transactions (edits). During checkpoint, NameNode will identify the total

number of edits to retain as extra by checking the latest checkpoint transaction value,

subtracted by the value of this property. Then, it scans edits files to identify the older ones

that don't include the computed range of retained transactions that are to be kept around, and

purges them subsequently. This retention can be useful for audit purposes or for an HA

setup where a remote Standby Node may have been offline for some time and needs to have a

longer backlog of retained edits in order to start again. Typically each edit is on the order of

a few hundred bytes, so the default of 1 million edits should be on the order of hundreds of

MBs or low GBs. NOTE: Fewer extra edits may be retained than the value specified for this

setting if doing so would mean that more segments would be retained than the number

configured by dfs.namenode.max.extra.edits.segments.retained.

dfs.namenode.max.extra.edits.segments.retained 10000

The maximum number of extra edit log segments which should be retained beyond what is

minimally necessary for a NN restart. When used in conjunction with

dfs.namenode.num.extra.edits.retained, this configuration property serves to cap the number

of extra edits files to a reasonable value.
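
As a sketch of how the two retention properties work together, the fragment below doubles the transaction backlog while keeping the segment cap at its default. The values are assumptions for illustration only.

```xml
<configuration>
  <property>
    <name>dfs.namenode.num.extra.edits.retained</name>
    <!-- Keep 2 million extra transactions, e.g. for a Standby that may be offline longer. -->
    <value>2000000</value>
  </property>
  <property>
    <name>dfs.namenode.max.extra.edits.segments.retained</name>
    <!-- Cap on extra edit log segments; this limit takes precedence over the count above. -->
    <value>10000</value>
  </property>
</configuration>
```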

dfs.namenode.delegation.key.update-interval 86400000 The update interval for master key for delegation tokens in the namenode in milliseconds.

dfs.namenode.delegation.token.max-lifetime 604800000 The maximum lifetime in milliseconds for which a delegation token is valid.

dfs.namenode.delegation.token.renew-interval 86400000 The renewal interval for delegation token in milliseconds.

dfs.datanode.failed.volumes.tolerated 0

The number of volumes that are allowed to fail before a datanode stops offering service. By

default any volume failure will cause a datanode to shut down.
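
For instance, to let a datanode keep serving after a single volume failure, the default could be overridden as follows (an illustrative value):

```xml
<configuration>
  <property>
    <name>dfs.datanode.failed.volumes.tolerated</name>
    <!-- Tolerate one failed volume before the datanode stops offering service. -->
    <value>1</value>
  </property>
</configuration>
```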

dfs.image.compress false Should the dfs image be compressed?

dfs.image.compression.codec org.apache.hadoop.io.compress.DefaultCodec

If the dfs image is compressed, how should it be compressed? This has to be a codec

defined in io.compression.codecs.
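
A minimal sketch enabling image compression follows. The choice of GzipCodec is an assumption for illustration. Whichever codec is used must also be listed in io.compression.codecs, as noted above.

```xml
<configuration>
  <property>
    <name>dfs.image.compress</name>
    <value>true</value>
  </property>
  <property>
    <name>dfs.image.compression.codec</name>
    <!-- Assumed codec choice; must be defined in io.compression.codecs. -->
    <value>org.apache.hadoop.io.compress.GzipCodec</value>
  </property>
</configuration>
```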


2018/3/15file:///F:/Hadoop/hadoop-2.9.0/share/doc/hadoop/hadoop-project-dist/hadoop-hdfs/hdfs-default.xml