+

...

...

... ...

Victim Page Cache Miss

-

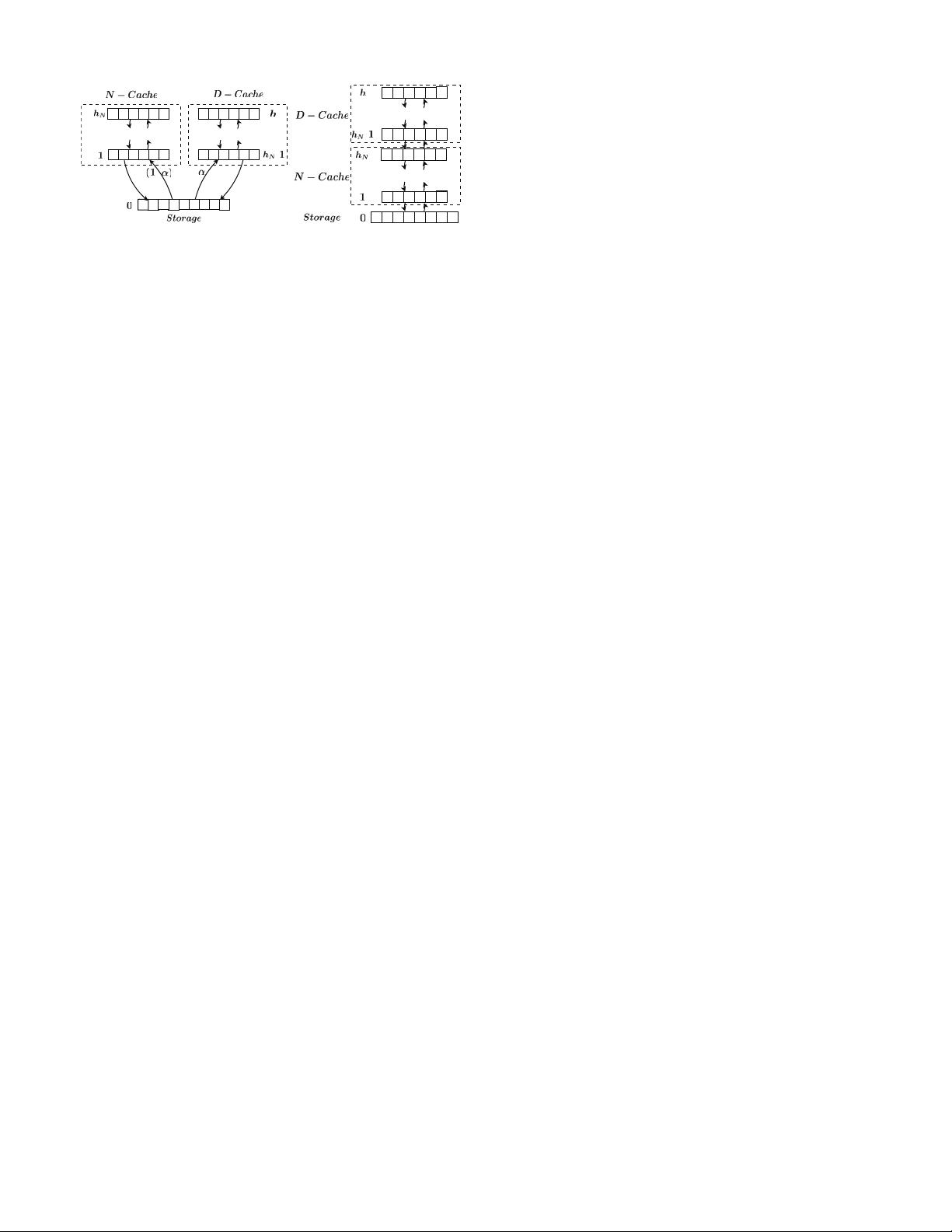

(a) Flat Architecture

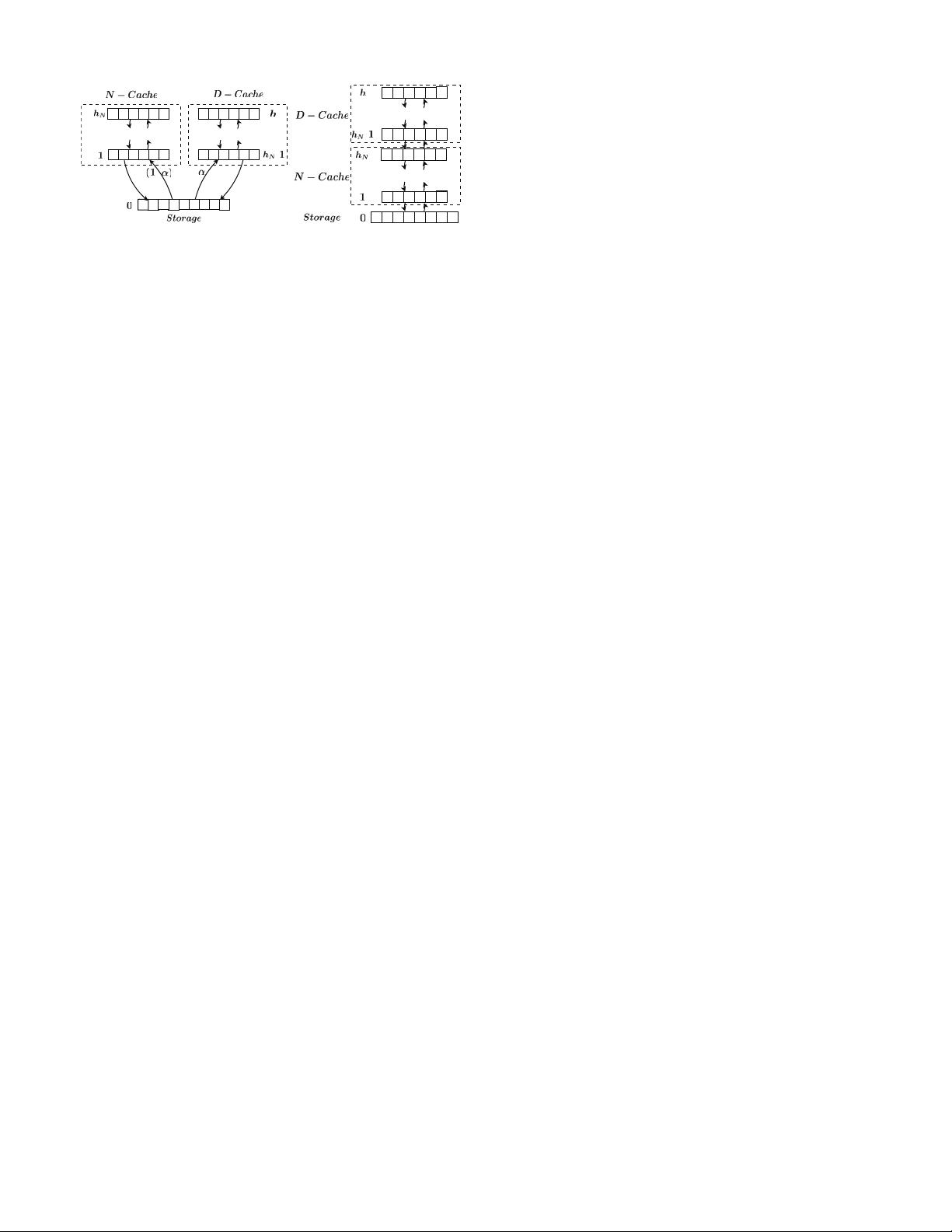

(b) Layered Architecture

Fig. 1. Architecture of hybrid cache.

probability 1−α. Note that α is a tunable parameter, and

increasing it implies that D-Cache is more preferred to

be used. In the flat architecture, pages are never migrated

between the two types of caches.

Note that both D-Cache and N-Cache contain multiple

lists. To exploit workload locality, we let pages be

first buffered in the list with the smallest label in the

corresponding cache, and then upgrade to the larger-

numbered lists when they become hot (e.g., when cache

hit happens). That is, lists in the same cache device are

organized in a layered structure.

• Layered architecture: In this design, we use D-Cache as a

caching layer for N-Cache as shown in Figure 1(b). Par-

ticularly, new data page is directly buffered in N-Cache

first, and when page in the list of the largest label in N-

Cache is accessed, it is upgraded to D-Cache. Similarly,

we also organize lists in both D-Cache and N-Cache in

a layered structure. Note that data migration between D-

Cache and N-Cache happens here, and usually, data in

D-Cache is considered to be hotter than data in N-Cache.

B. Cache Replacement Algorithm

For cache replacement, we follow the list-based algorithm

introduced in [6], and extend it to hybrid cache with different

architectures. Roughly speaking, a new data page enters into

a cache through the first list and moves to the upper list by

exchanging with a randomly selected data page whenever a

cache hit occurs. Specifically, when a data page k is requested

at time t, one of the three events below happens:

• Cache miss: Page k is not in D-Cache nor N-Cache. In

this case, page k enters into the first list in D-Cache

(i.e., list l

h

N

+1

) with probability α or into the first list

in N-Cache (i.e., list l

1

) with probability (1 − α) under

the flat architecture. For the layered architecture, page k

enters into the first list of N-Cache (i.e., list l

1

). For both

architectures, the position in the list for writing page k is

chosen uniformly at random. Meanwhile, the victim page

in the position moves back to list 0.

• Cache hit in list l

i

where l

i

6= l

h

N

and l

i

6= l

h

: In this

case, page k moves to a randomly selected position v of

list l

i+1

, meanwhile, the victim page in position v of list

l

i+1

takes the former position of page k.

• Cache hit in list l

i

where l

i

= l

h

N

or l

i

= l

h

: In this

case, page k remains at the same position under the flat

architecture. However, for the layered architecture with

l

i

= l

h

N

, page k moves to a random position in list l

i+1

as in the second case.

Figure 1 shows the data flow under flat and layered archi-

tectures. Note that data migration happens between lists of the

same type of cache, while the migration between D-Cache and

N-Cache happens only in the case of layered architecture.

C. Design Issues

Note that the overall performance of a hybrid cache system

may depend on various factors, such as system architecture,

capacity allocation between DRAM and NVM, as well as

the configuration parameters like the number of lists in each

cache device. Thus, it poses a wide range of design choices

for hybrid cache, which makes it very difficult to explore

the full design space and optimize the cache performance.

To understand the impact of hybrid cache design on system

performance, in this work, we aim to address the following

issues by developing mathematical models.

• For each architecture (flat or layered), what is the impact

of the list-based hierarchical design, and how to set the

best parameters so as to optimize the overall performance,

including the numbers of lists h

D

and h

N

, as well as the

preference parameter α for the flat architecture?

• Which architecture should be used when considering both

DRAM and NVM into a hybrid design?

• Under a fixed budget C, what is the best capacity

allocation of each cache type for better performance?

III. SYSTEM MODEL

In this section, we first describe the workload model, then

characterize the dynamics of data pages in hybrid cache, and

finally derive the cache content distribution in steady state.

After that, we define a latency-based performance metric based

on the cache content distribution so as to quantify the overall

cache performance.

A. Workload Model

In this work, we focus on cache-effective applications like

web search and database query [22], [11], in which memory

and I/O latency are critical to system performance. Thus,

caching files in main memory becomes necessary to provide

sufficient throughput for these applications. To provide high

data reliability, we assume to use the write-through policy, in

which data is also written to the storage tier once it is buffered

in the page cache. With this policy, all data pages in cache

should have a copy in the secondary storage.

In this paper, we focus on the independent reference model

[6] in which requests in a workload are independent of each

other. Since cache mainly benefits the read performance, we

focus on read requests only, while we can also extend our

model to write requests. Suppose that we have n total data

pages in the system. In each time slot, one read request arrives,

and it accesses data pages according to a particular distribution

where page k (k = 1, 2, ..., n) is accessed with probability p

k

.

Clearly, we have

P

n

k=1

p

k

= 1. Without loss of generality,

we assume that pages are sorted in the decreasing order of