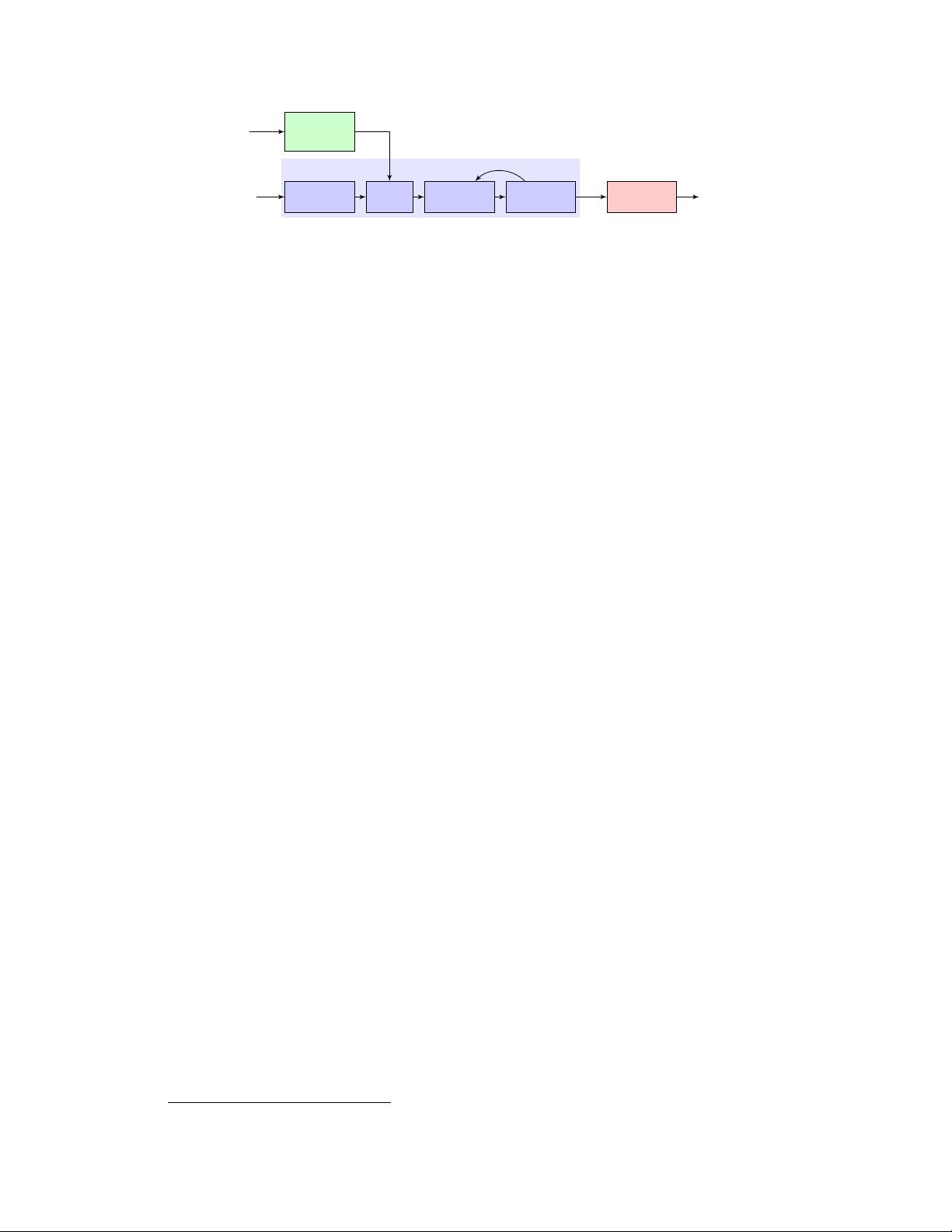

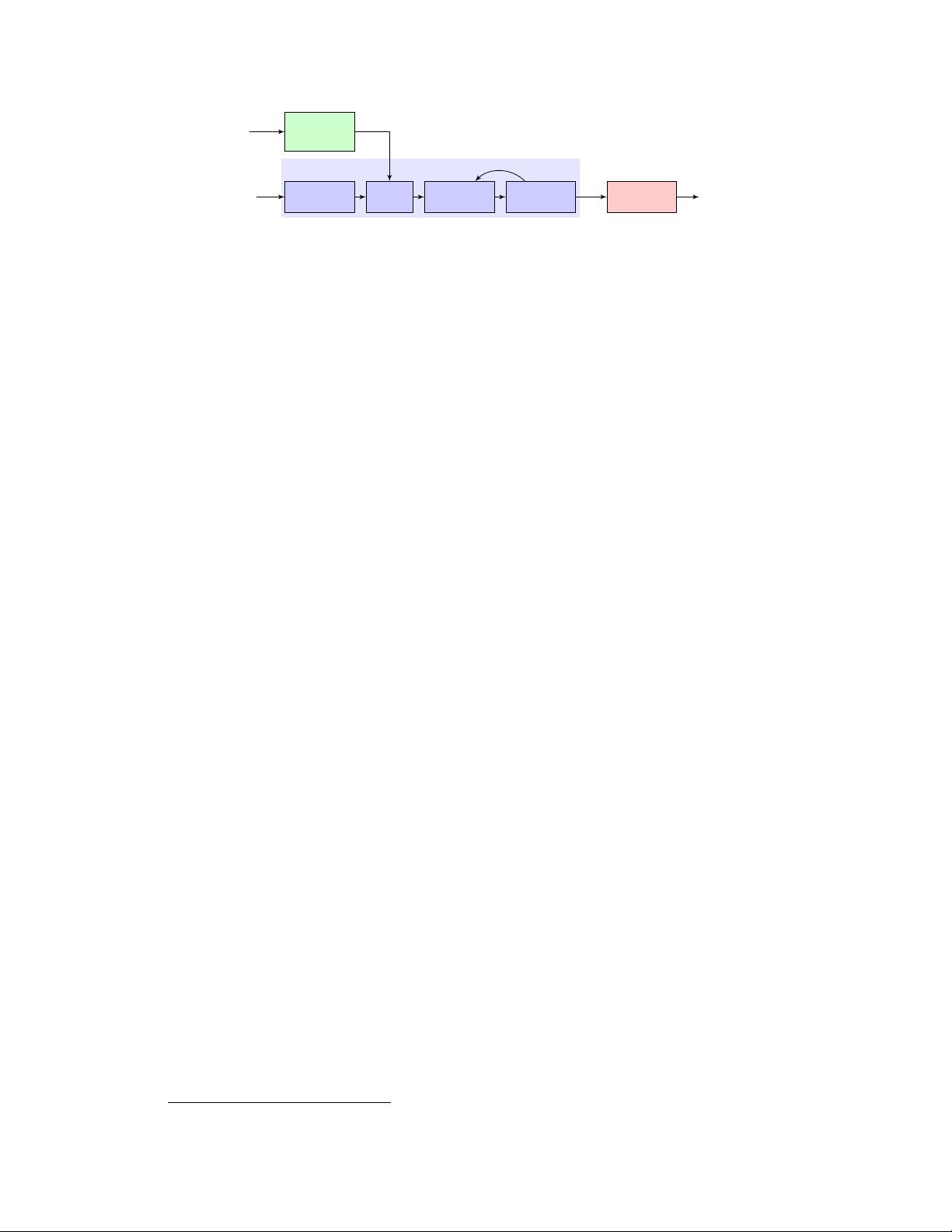

[Figure 1 diagram: speaker reference waveform → Speaker Encoder → speaker embedding; grapheme or phoneme sequence → Encoder → concat (with speaker embedding) → Attention Decoder (together forming the Synthesizer) → log-mel spectrogram → Vocoder → waveform]

Figure 1: Model overview. Each of the three components is trained independently.

signal, (2) a sequence-to-sequence synthesizer, based on [15], which predicts a mel spectrogram from a sequence of grapheme or phoneme inputs, conditioned on the speaker embedding vector, and (3) an autoregressive WaveNet [19] vocoder, which converts the spectrogram into time-domain waveforms.¹
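The three-stage data flow can be sketched as plain function composition. This is a minimal sketch, not the authors' implementation: the function names and the stub components are hypothetical placeholders, and only the dimensions (a 256-dimensional speaker embedding and 80-channel mel frames) come from this paper. The samples-per-frame figure assumes a 24 kHz sample rate, which is not stated in this section. Because each stage is trained independently, each is just a callable here and can be swapped without touching the others.

```python
import math
from typing import Callable, List, Sequence

Embedding = List[float]          # one speaker embedding (d-vector)
MelFrames = List[List[float]]    # [time][mel channel]
Waveform = List[float]           # audio samples


def synthesize(
    reference_wav: Waveform,
    phonemes: Sequence[str],
    speaker_encoder: Callable[[Waveform], Embedding],
    synthesizer: Callable[[Sequence[str], Embedding], MelFrames],
    vocoder: Callable[[MelFrames], Waveform],
) -> Waveform:
    """Compose the three independently trained components (hypothetical API)."""
    embedding = speaker_encoder(reference_wav)  # (1) reference audio -> speaker embedding
    mel = synthesizer(phonemes, embedding)      # (2) phonemes + embedding -> log-mel frames
    return vocoder(mel)                         # (3) log-mel frames -> time-domain waveform


# Toy stubs with the paper's dimensions; they do no real modelling.
def stub_encoder(wav: Waveform) -> Embedding:
    v = [1.0] * 256
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]  # unit-norm, like an L2-normalized d-vector


def stub_synthesizer(phonemes: Sequence[str], emb: Embedding) -> MelFrames:
    return [[0.0] * 80 for _ in range(5 * len(phonemes))]  # hypothetical: 5 frames per phoneme


def stub_vocoder(mel: MelFrames) -> Waveform:
    return [0.0] * (300 * len(mel))  # hypothetical: 12.5 ms hop at an assumed 24 kHz = 300 samples


audio = synthesize([0.0] * 16000, ["HH", "AH0", "L", "OW1"],
                   stub_encoder, stub_synthesizer, stub_vocoder)
print(len(audio))  # 4 phonemes * 5 frames * 300 samples = 6000
```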

2.1 Speaker encoder

The speaker encoder is used to condition the synthesis network on a reference speech signal from the desired target speaker. Critical to good generalization is a representation that captures the characteristics of different speakers, together with the ability to identify these characteristics using only a short adaptation signal, independent of its phonetic content and background noise. A speaker-discriminative model trained on a text-independent speaker verification task satisfies these requirements.

We follow [22], which proposed a highly scalable and accurate neural network framework for speaker verification. The network maps a sequence of log-mel spectrogram frames, computed from a speech utterance of arbitrary length, to a fixed-dimensional embedding vector known as a d-vector [20, 9]. The network is trained to optimize a generalized end-to-end speaker verification loss, so that embeddings of utterances from the same speaker have high cosine similarity, while those of utterances from different speakers are far apart in the embedding space. The training dataset consists of speech audio examples segmented into 1.6-second clips, each with an associated speaker identity label; no transcripts are used.
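To make the training objective concrete, here is a pure-Python sketch of the softmax variant of the generalized end-to-end (GE2E) loss as we understand it from [22]; it is illustrative, not the reference implementation. A batch holds N speakers with M utterances each. Every embedding is compared, through a scaled cosine similarity `w * cos(e, c) + b`, with each speaker centroid `c` (its own speaker's centroid leaves the embedding itself out), and a softmax cross-entropy pushes the similarity to the correct centroid up and to the others down. In [22] the scale `w` and offset `b` are learned; here they are fixed at illustrative values.

```python
import math
from typing import List

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def mean(vectors: List[Vector]) -> Vector:
    """Element-wise mean of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def ge2e_softmax_loss(batch: List[List[Vector]], w: float = 10.0, b: float = -5.0) -> float:
    """batch[j][i] is utterance i of speaker j; needs N >= 2 speakers and M >= 2 utterances each."""
    centroids = [mean(utts) for utts in batch]
    total = 0.0
    for j, utts in enumerate(batch):
        for i, e in enumerate(utts):
            logits = []
            for k, centroid in enumerate(centroids):
                if k == j:
                    # Leave e out of its own speaker's centroid.
                    centroid = mean([u for m, u in enumerate(utts) if m != i])
                logits.append(w * cosine(e, centroid) + b)
            # Softmax cross-entropy with speaker j as the target class (stable log-sum-exp).
            top = max(logits)
            log_sum_exp = top + math.log(sum(math.exp(s - top) for s in logits))
            total += log_sum_exp - logits[j]
    return total
```

With well-separated speakers the loss approaches zero; if utterances from different speakers are mixed together, it grows.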

Input 40-channel log-mel spectrograms are passed to a network consisting of a stack of 3 LSTM layers of 768 cells, each followed by a projection to 256 dimensions. The final embedding is created by $L_2$-normalizing the output of the top layer at the final frame. During inference, an arbitrary-length utterance is broken into 800 ms windows with 50% overlap. The network is run independently on each window, and the outputs are averaged and normalized to create the final utterance embedding.
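The windowed inference procedure can be sketched as follows. This sketch assumes a 10 ms frame hop for the 40-channel log-mel features, which this section does not state, so an 800 ms window is 80 frames and 50% overlap gives a 40-frame step. `embed_window` is a hypothetical stand-in for the trained LSTM network (which returns the $L_2$-normalized top-layer output at the window's final frame). Dropping trailing frames that do not fill a window, and treating a short utterance as one short window, are likewise assumptions.

```python
import math
from typing import Callable, List

Frame = List[float]   # one 40-channel log-mel frame
Vector = List[float]


def l2_normalize(v: Vector) -> Vector:
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def utterance_embedding(
    frames: List[Frame],
    embed_window: Callable[[List[Frame]], Vector],
    frames_per_window: int = 80,  # 800 ms at an assumed 10 ms hop
    overlap: float = 0.5,
) -> Vector:
    step = max(1, int(frames_per_window * (1 - overlap)))  # 40 frames for 50% overlap
    # Start offsets of every full window; trailing frames that do not fill a window
    # are dropped, and a shorter-than-one-window utterance yields one short window.
    last_start = max(len(frames) - frames_per_window, 0)
    starts = list(range(0, last_start + 1, step))
    window_embeddings = [embed_window(frames[s:s + frames_per_window]) for s in starts]
    # Average the per-window embeddings element-wise, then L2-normalize the mean.
    mean = [sum(col) / len(window_embeddings) for col in zip(*window_embeddings)]
    return l2_normalize(mean)
```

For a 2-second utterance (200 frames under the assumed hop), the network runs on four windows starting at frames 0, 40, 80 and 120.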

Although the network is not optimized directly to learn a representation that captures speaker characteristics relevant to synthesis, we find that training on a speaker discrimination task leads to an embedding which is directly suitable for conditioning the synthesis network on speaker identity.

2.2 Synthesizer

We extend the Tacotron 2 architecture [15], a recurrent sequence-to-sequence model with attention, to support multiple speakers following a scheme similar to [8]. An embedding vector for the target speaker is concatenated with the synthesizer encoder output at each time step. In contrast to [8], we find that simply passing embeddings to the attention layer, as in Figure 1, converges across different speakers.

We compare two variants of this model: one that computes the embedding using the speaker encoder, and a baseline that optimizes a fixed embedding for each speaker in the training set, essentially learning a lookup table of speaker embeddings similar to [8, 13].
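The conditioning scheme amounts to tiling one speaker vector across time and appending it to every encoder output frame before attention. The sketch below shows that concatenation and the two ways of obtaining the vector compared here: from the speaker encoder, or from a per-speaker lookup table as in the baseline. The function names and toy dimensions are hypothetical; in the real model the encoder outputs come from the Tacotron 2 text encoder and the table entries are trained parameters.

```python
from typing import Callable, Dict, List

Vector = List[float]


def condition_on_speaker(encoder_outputs: List[Vector], speaker_embedding: Vector) -> List[Vector]:
    """Concatenate the same speaker embedding onto every encoder time step."""
    return [frame + speaker_embedding for frame in encoder_outputs]  # new lists; inputs untouched


def embedding_from_encoder(reference_audio: List[float],
                           speaker_encoder: Callable[[List[float]], Vector]) -> Vector:
    # Variant 1: compute the embedding from reference audio, so any speaker with audio works.
    return speaker_encoder(reference_audio)


def embedding_from_table(speaker_id: str, table: Dict[str, Vector]) -> Vector:
    # Variant 2 (baseline): look up a learned vector; only defined for training-set speakers.
    return table[speaker_id]


enc = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]  # 2 time steps of a toy 3-dim encoder output
table = {"spk_a": [1.0, 0.0]}             # toy 2-dim speaker embedding table
conditioned = condition_on_speaker(enc, embedding_from_table("spk_a", table))
print(conditioned)  # [[0.1, 0.2, 0.3, 1.0, 0.0], [0.4, 0.5, 0.6, 1.0, 0.0]]
```

Both variants feed the same concatenation; they differ only in where the speaker vector comes from.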

The synthesizer is trained on pairs of text transcripts and target audio. At the input, we map the text to a sequence of phonemes, which leads to faster convergence and improved pronunciation of rare words and proper nouns. The network is trained in a transfer learning configuration, using a pretrained speaker encoder (whose parameters are frozen) to extract a speaker embedding from the target audio; i.e., the speaker reference signal is the same as the target speech during training. No explicit speaker identifier labels are used during training.
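The transfer-learning setup can be sketched as building each training example from a (transcript, target audio) pair alone: the transcript is mapped to phonemes, and the frozen encoder extracts the speaker embedding from the target audio itself, so no speaker ID is ever read. The toy lexicon (ARPAbet-style symbols), the function names, and the word-level lookup are hypothetical illustrations; a real front end would need a full pronunciation dictionary or a grapheme-to-phoneme model.

```python
from typing import Callable, Dict, List, NamedTuple


class TrainingExample(NamedTuple):
    phonemes: List[str]             # synthesizer input
    speaker_embedding: List[float]  # frozen encoder output, computed on the target audio
    target_audio: List[float]       # also serves as the speaker reference signal


def to_phonemes(text: str, lexicon: Dict[str, List[str]]) -> List[str]:
    """Word-by-word dictionary lookup (hypothetical toy front end)."""
    phonemes: List[str] = []
    for word in text.lower().split():
        phonemes.extend(lexicon[word])
    return phonemes


def make_example(
    transcript: str,
    target_audio: List[float],
    frozen_encoder: Callable[[List[float]], List[float]],
    lexicon: Dict[str, List[str]],
) -> TrainingExample:
    # The reference signal IS the target speech, so no speaker label is needed.
    # The encoder is only run forward here; its parameters are never updated.
    embedding = frozen_encoder(target_audio)
    return TrainingExample(to_phonemes(transcript, lexicon), embedding, target_audio)


lexicon = {"hello": ["HH", "AH0", "L", "OW1"], "world": ["W", "ER1", "L", "D"]}
ex = make_example("Hello world", [0.0] * 16000, lambda audio: [0.0] * 256, lexicon)
print(ex.phonemes)  # ['HH', 'AH0', 'L', 'OW1', 'W', 'ER1', 'L', 'D']
```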

Target spectrogram features are computed from 50 ms windows with a 12.5 ms step, passed through an 80-channel mel-scale filterbank, and followed by log dynamic range compression. We extend [15] by augmenting the $L_2$ loss on the predicted spectrogram with an additional $L_1$ loss. In practice,

¹ See https://google.github.io/tacotron/publications/speaker_adaptation for samples.
