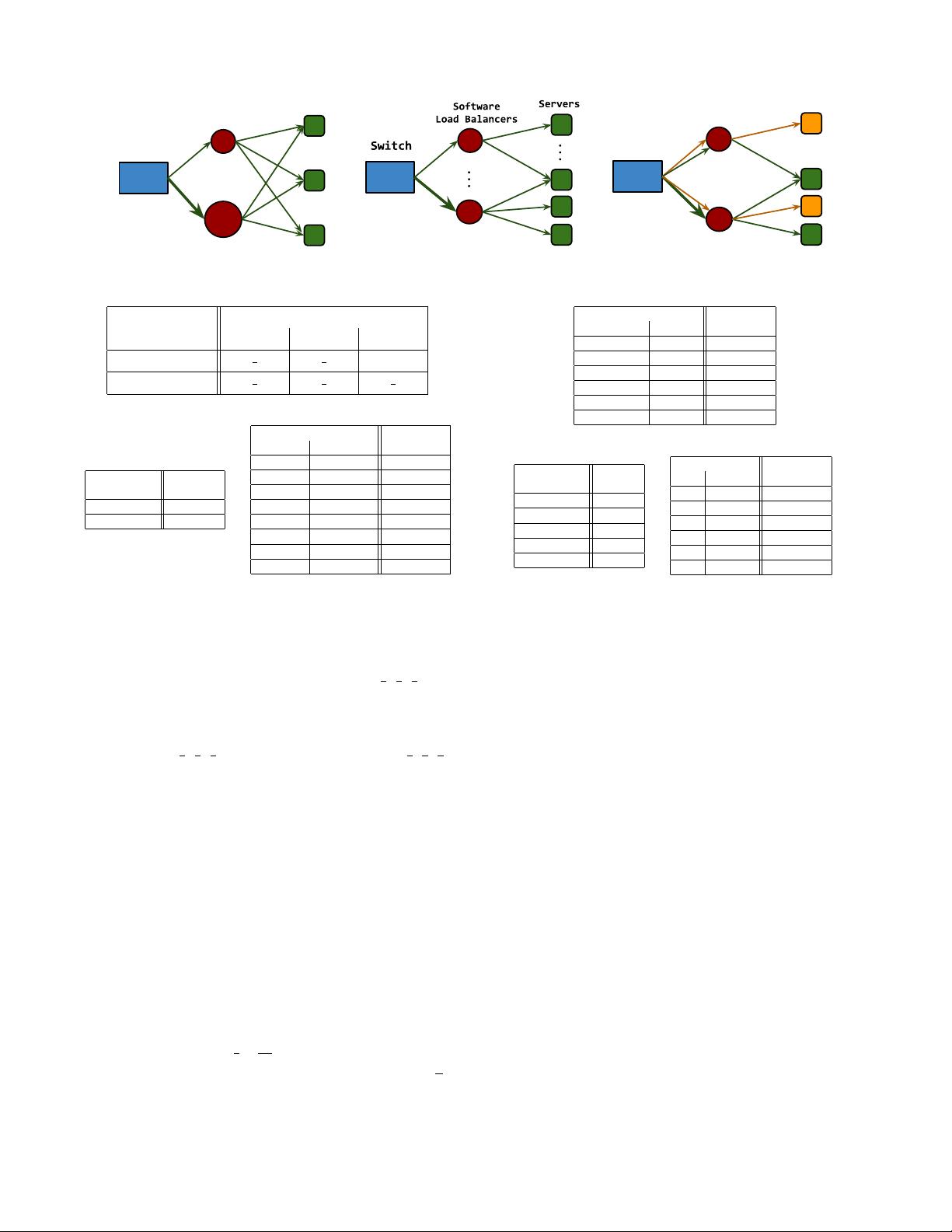

(a) Some SLBs are more powerful (b) SLBs handle different servers

(c) Services require different split

Figure 1: Weighted server load balancing examples.

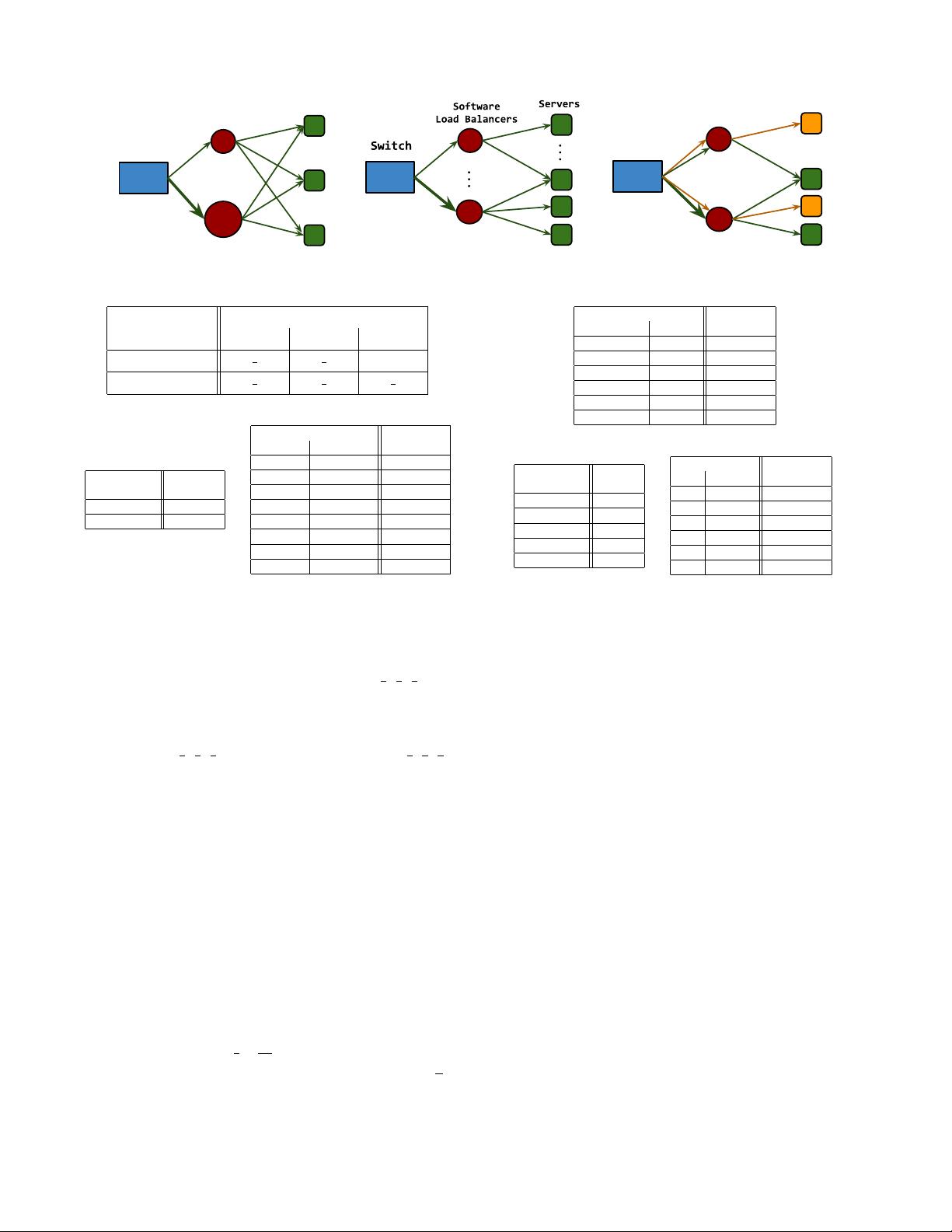

| DIP (service VIP) | Next-hop (SLB) 17.12.11.1 | Next-hop (SLB) 17.12.12.1 | Next-hop (SLB) 17.12.13.1 |
|---|---|---|---|
| 63.12.28.42 | $\frac{1}{2}$ | $\frac{1}{2}$ | $0$ |
| 63.12.28.34 | $\frac{1}{6}$ | $\frac{1}{3}$ | $\frac{1}{2}$ |

(a) Weights for load balancing two services.

Forwarding table:

| Match: DIP | Action: Group id |
|---|---|
| 63.12.28.42 | 1 |
| 63.12.28.34 | 2 |

⇒

Multipath table:

| Match: group id | Match: hash value | Action: next-hop |
|---|---|---|
| 1 | 0 | 17.12.11.1 |
| 1 | 1 | 17.12.12.1 |
| 2 | 0 | 17.12.11.1 |
| 2 | 1 | 17.12.12.1 |
| 2 | 2 | 17.12.12.1 |
| 2 | 3 | 17.12.13.1 |
| 2 | 4 | 17.12.13.1 |
| 2 | 5 | 17.12.13.1 |

(b) The forwarding table (left) directs packets to an ECMP group; the multipath table (right) forwards packets based on the ECMP group and the hash value.

Figure 2: Hash-based approaches for load balancing.
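The two-stage lookup of Figure 2(b) can be sketched as follows. This is a minimal illustration, not a switch implementation: the tables are copied from the figure, while the function name `next_hop`, the string `flow_key`, and the use of CRC32 as the hash are assumptions made for the example (real switches use proprietary hash functions).

```python
import zlib

# Forwarding table from Figure 2(b): DIP -> ECMP group id.
FORWARDING = {"63.12.28.42": 1, "63.12.28.34": 2}

# Multipath table from Figure 2(b): group id -> next-hops indexed by hash value.
MULTIPATH = {
    1: ["17.12.11.1", "17.12.12.1"],
    2: ["17.12.11.1", "17.12.12.1", "17.12.12.1",
        "17.12.13.1", "17.12.13.1", "17.12.13.1"],
}


def next_hop(dip: str, flow_key: str) -> str:
    """Pick a next-hop for a packet (hypothetical helper).

    flow_key stands in for the header fields a switch would hash;
    CRC32 is an assumed stand-in for the switch's hash function.
    """
    group = FORWARDING[dip]                  # stage 1: DIP -> group id
    members = MULTIPATH[group]               # entries of that ECMP group
    hash_value = zlib.crc32(flow_key.encode()) % len(members)
    return members[hash_value]               # stage 2: (group, hash) -> next-hop
```

Because group 2 lists 17.12.13.1 in three of its six entries, a uniform hash sends about half of the flows for 63.12.28.34 to that next-hop.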

UCMP and WCMP [3] handle unequal splits by repeating next-hops in an ECMP group. UCMP achieves the $(\frac{1}{6}, \frac{1}{3}, \frac{1}{2})$ split for service 63.12.28.34 with six rules (Figure 2(b)). Given the constrained size of the multipath table (i.e., TCAM), WCMP [3] approximates the desired split with fewer rules. For example, $(\frac{1}{6}, \frac{1}{3}, \frac{1}{2})$ can be approximated with $(\frac{1}{4}, \frac{1}{4}, \frac{1}{2})$, which takes only four rules because only the last next-hop is repeated (twice).
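The rule counts in this example follow from replicating each next-hop in proportion to its weight. The sketch below shows only that replication step; it is not WCMP's algorithm for choosing an approximation, and the name `replicated_entries` is hypothetical. It assumes Python 3.9+ for `math.lcm`.

```python
from fractions import Fraction
from math import lcm  # available in Python 3.9+


def replicated_entries(weights: dict[str, Fraction]) -> list[str]:
    """Expand exact fractional weights into repeated ECMP group entries.

    The group size is the least common multiple of the denominators,
    so each next-hop appears weight * size times.
    """
    size = lcm(*(w.denominator for w in weights.values()))
    entries: list[str] = []
    for hop, weight in weights.items():
        entries.extend([hop] * int(weight * size))
    return entries


# Exact split for 63.12.28.34 (Figure 2(a)).
exact = {"17.12.11.1": Fraction(1, 6),
         "17.12.12.1": Fraction(1, 3),
         "17.12.13.1": Fraction(1, 2)}

# The coarser split used as the approximation example in the text.
approx = {"17.12.11.1": Fraction(1, 4),
          "17.12.12.1": Fraction(1, 4),
          "17.12.13.1": Fraction(1, 2)}

print(len(replicated_entries(exact)))   # 6 entries
print(len(replicated_entries(approx)))  # 4 entries
```

The approximation saves two entries at the cost of shifting $\frac{1}{12}$ of the traffic from the second next-hop to the first.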

The hash-based approaches incur several drawbacks.

Accuracy and Scalability. While ECMP only handles equal weights, the accuracy and scalability of UCMP and WCMP are restricted by the size of the multipath table, which typically holds from a few hundred to a few thousand entries on commodity switches [3]. UCMP and WCMP are infeasible when the number of flow aggregates exceeds the table size. Given an 8-weight distribution (i.e., 8 SLBs), WCMP takes 74 rules on average and 288 rules in the worst case. In other words, with 4,000 rules, WCMP can only handle up to $4000/74 \approx 54$ services, which is too few for load balancing in data centers.

Churn. Updating an ECMP group unnecessarily shuffles packets among next-hops. When a next-hop is removed from an $N$-member group (or a next-hop is added to an $(N-1)$-member group), at least $\frac{1}{4} + \frac{1}{4N}$ of the flow space is shuffled to different next-hops [11], while the minimum churn is $\frac{1}{N}$.
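The $\frac{1}{4} + \frac{1}{4N}$ figure can be reproduced under one specific assumption: that the group uses a hash-threshold mapping, in which the hash space $[0, 1)$ is cut into equal consecutive regions, one per next-hop, and the regions are re-cut after an update. The text does not say which mapping [11] analyzes, so the sketch below is an illustration under that assumption, and the function names are hypothetical.

```python
from fractions import Fraction


def regions(next_hops: list[int]) -> dict[int, tuple[Fraction, Fraction]]:
    """Split [0, 1) into equal consecutive regions, one per next-hop."""
    n = len(next_hops)
    return {hop: (Fraction(i, n), Fraction(i + 1, n))
            for i, hop in enumerate(next_hops)}


def churn_after_removal(n: int, removed: int) -> Fraction:
    """Fraction of the hash space that changes next-hop when `removed`
    leaves an n-member group (assumed hash-threshold mapping)."""
    old = regions(list(range(n)))
    new = regions([hop for hop in range(n) if hop != removed])
    unchanged = Fraction(0)
    for hop, (lo, hi) in new.items():
        old_lo, old_hi = old[hop]
        # Overlap between the hop's old and new regions stays put.
        unchanged += max(Fraction(0), min(hi, old_hi) - max(lo, old_lo))
    return 1 - unchanged


n = 5
best = min(churn_after_removal(n, k) for k in range(n))
print(best)            # 3/10, i.e. 1/4 + 1/(4*5)
print(Fraction(1, n))  # 1/5, the minimum possible churn
```

For $N = 5$ the smallest churn occurs when the middle next-hop is removed, and it is still larger than the $\frac{1}{N}$ of flows that actually had to move.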

Visibility. In ECMP, hash functions and mappings from hash values to next-hops are proprietary, preventing effective analysis of forwarding behaviors. Network operators have to examine all next-hops when debugging a flow. Furthermore, making this information public does not make the problem much easier. Recently proposed debugging and verification frameworks [24, 25] rely on the assumption that consecutive chunks of flow space are given the same actions to reduce their complexity. ECMP deviates from this assumption by pseudo-randomly casting flows to next-hops.

| Match: DIP | Match: SIP | Action: Next-hop |
|---|---|---|
| 63.12.28.42 | ∗0 | 17.12.11.1 |
| 63.12.28.42 | ∗ | 17.12.12.1 |
| 63.12.28.34 | ∗00100 | 17.12.11.1 |
| 63.12.28.34 | ∗000 | 17.12.11.1 |
| 63.12.28.34 | ∗0 | 17.12.12.1 |
| 63.12.28.34 | ∗ | 17.12.13.1 |

(a) Load balancing two services.

| Match: DIP | Action: Tag |
|---|---|
| 63.12.28.34 | 1 |
| 63.12.28.53 | 1 |
| 63.12.28.27 | 1 |
| 63.12.28.42 | 2 |
| 63.12.28.43 | 2 |

⇒

| Match: Tag | Match: SIP | Action: Next-hop |
|---|---|---|
| 1 | ∗0 | 17.12.11.1 |
| 1 | ∗ | 17.12.12.1 |
| 2 | ∗00100 | 17.12.11.1 |
| 2 | ∗000 | 17.12.11.1 |
| 2 | ∗0 | 17.12.12.1 |
| 2 | ∗ | 17.12.13.1 |

(b) Grouping and load balancing five services.

Figure 3: Example wildcard rules for load balancing.

SDN-based approaches. SDN supports programming rule-tables in switches, enabling finer-grained control and more accurate splitting. Unlike hash-based approaches, the programmable rule table offers full visibility into how packets are forwarded. A simple solution [14, 17] is to direct the first packet of each client request to a controller, which then installs rules for forwarding the remaining packets of the connection. This approach incurs extra delay for the first packet of each flow, and controller load and hardware rule-table capacity quickly become bottlenecks. A more scalable alternative is to proactively install coarse-grained rules that direct a consecutive chunk of flows to a common next-hop. A preliminary exploration of using wildcard rules is discussed in [5]. Niagara follows the same high-level approach, but presents more sophisticated algorithms for optimizing rule-table size, while also addressing churn under updates. We discuss [5] in detail in §4.1.
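To see how a handful of coarse-grained wildcard rules yields a weighted split, the sketch below evaluates the four rules for 63.12.28.34 from Figure 3(a). It assumes that a pattern such as ∗0 matches the low-order bits of the source IP, that rules are checked in the listed priority order, and that traffic is uniform over those bits; the function name `split_of` is hypothetical.

```python
from fractions import Fraction

# Rules for DIP 63.12.28.34 from Figure 3(a), highest priority first.
# Each pattern is written as the suffix that follows the wildcard.
RULES = [
    ("00100", "17.12.11.1"),
    ("000", "17.12.11.1"),
    ("0", "17.12.12.1"),
    ("", "17.12.13.1"),   # a bare wildcard matches everything
]


def split_of(rules: list[tuple[str, str]], width: int = 5) -> dict[str, Fraction]:
    """Share of the suffix space each next-hop receives, assuming
    uniform traffic over the last `width` source-IP bits."""
    counts: dict[str, int] = {}
    for value in range(2 ** width):
        bits = format(value, f"0{width}b")
        for suffix, hop in rules:
            if bits.endswith(suffix):      # first matching rule wins
                counts[hop] = counts.get(hop, 0) + 1
                break
    return {hop: Fraction(c, 2 ** width) for hop, c in counts.items()}


for hop, share in split_of(RULES).items():
    print(hop, share)
# 17.12.11.1 5/32
# 17.12.12.1 11/32
# 17.12.13.1 1/2
```

Under these assumptions the four rules give $(\frac{5}{32}, \frac{11}{32}, \frac{1}{2})$, close to the target $(\frac{1}{6}, \frac{1}{3}, \frac{1}{2})$, and every rule covers a contiguous, inspectable chunk of the flow space.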

3. NIAGARA OVERVIEW
