COCO-Text: Dataset and Benchmark for Text Detection and Recognition in Natural Images

Andreas Veit¹,², Tomáš Matera², Lukáš Neumann³, Jiří Matas³, Serge Belongie¹,²

¹ Department of Computer Science, Cornell University
² Cornell Tech
³ Department of Cybernetics, Czech Technical University, Prague

¹ {av443,sjb344}@cornell.edu, ² tomas@matera.cz, ³ {neumalu1,matas}@cmp.felk.cvut.cz

Abstract

This paper describes the COCO-Text dataset. In recent years, large-scale datasets like SUN and ImageNet drove the advancement of scene understanding and object recognition. The goal of COCO-Text is to advance the state of the art in text detection and recognition in natural images. The dataset is based on the MS COCO dataset, which contains images of complex everyday scenes. The images were not collected with text in mind and thus contain a broad variety of text instances. To reflect the diversity of text in natural scenes, we annotate text with (a) location in terms of a bounding box, (b) fine-grained classification into machine printed text and handwritten text, (c) classification into legible and illegible text, (d) script of the text and (e) transcriptions of legible text. The dataset contains over 173k text annotations in over 63k images. We provide a statistical analysis of the accuracy of our annotations. In addition, we present an analysis of three leading state-of-the-art photo Optical Character Recognition (OCR) approaches on our dataset. While scene text detection and recognition have seen strong advances in recent years, we identify significant shortcomings motivating future work.
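The five annotation attributes listed above, (a) through (e), can be sketched as a small record type. This is a minimal sketch under stated assumptions, not the official COCO-Text file format or API: the names `TextAnnotation`, `TextClass`, `Legibility` and `select`, the `(x, y, width, height)` box layout, and the rule that illegible text carries no transcription are all hypothetical choices made for illustration. The transcriptions and attributes in the example list come from Figure 1; the image ids and box coordinates are made-up placeholders.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class TextClass(Enum):
    # (b) fine-grained class of the text instance
    MACHINE_PRINTED = "machine printed"
    HANDWRITTEN = "handwritten"


class Legibility(Enum):
    # (c) legible vs. illegible
    LEGIBLE = "legible"
    ILLEGIBLE = "illegible"


@dataclass(frozen=True)
class TextAnnotation:
    """One text instance; field names are hypothetical, not the dataset's schema."""
    image_id: int
    bbox: tuple[float, float, float, float]  # (a) assumed (x, y, width, height) in pixels
    text_class: TextClass                    # (b)
    legibility: Legibility                   # (c)
    script: str                              # (d) e.g. "English", as shown in Figure 1
    transcription: Optional[str] = None      # (e) provided for legible text

    def __post_init__(self) -> None:
        # Assumption of this sketch: only legible text is transcribed.
        if self.legibility is Legibility.ILLEGIBLE and self.transcription is not None:
            raise ValueError("illegible text should not carry a transcription")
        _, _, w, h = self.bbox
        if w <= 0 or h <= 0:
            raise ValueError("bounding box must have positive width and height")


def select(annotations, *, text_class=None, legibility=None):
    """Return the annotations matching every attribute filter that is not None."""
    return [
        a for a in annotations
        if (text_class is None or a.text_class is text_class)
        and (legibility is None or a.legibility is legibility)
    ]


# Figure 1 annotations; image ids and boxes are placeholders, not real data.
examples = [
    TextAnnotation(1, (0, 0, 30, 10), TextClass.MACHINE_PRINTED, Legibility.LEGIBLE, "English", "mins"),
    TextAnnotation(1, (0, 20, 60, 12), TextClass.MACHINE_PRINTED, Legibility.LEGIBLE, "English", "Transport"),
    TextAnnotation(2, (5, 5, 80, 15), TextClass.HANDWRITTEN, Legibility.LEGIBLE, "English", "sweet potato"),
    TextAnnotation(2, (5, 30, 25, 10), TextClass.HANDWRITTEN, Legibility.LEGIBLE, "English", "2.20"),
    TextAnnotation(2, (40, 30, 25, 10), TextClass.HANDWRITTEN, Legibility.LEGIBLE, "English", "1.50"),
]

handwritten = select(examples, text_class=TextClass.HANDWRITTEN)
print([a.transcription for a in handwritten])  # ['sweet potato', '2.20', '1.50']
```

Storing the attributes as enums rather than free strings keeps per-category filtering to a simple identity check, which is what isolating a subset such as the handwritten price tags in Figure 1 requires.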

1. Introduction

The detection and recognition of scene text in natural images with unconstrained environments remains a challenging problem in computer vision. The ability to robustly read text in unconstrained scenes can significantly help with numerous real-world applications, e.g., assistive technology for the visually impaired, robot navigation and geo-localization. The problem of detecting and recognizing text in scenes has received increasing attention from the computer vision community in recent years [1, 6, 14, 19]. Evaluation is typically performed on datasets that consist of images with mostly iconic text and contain only hundreds of images at best.

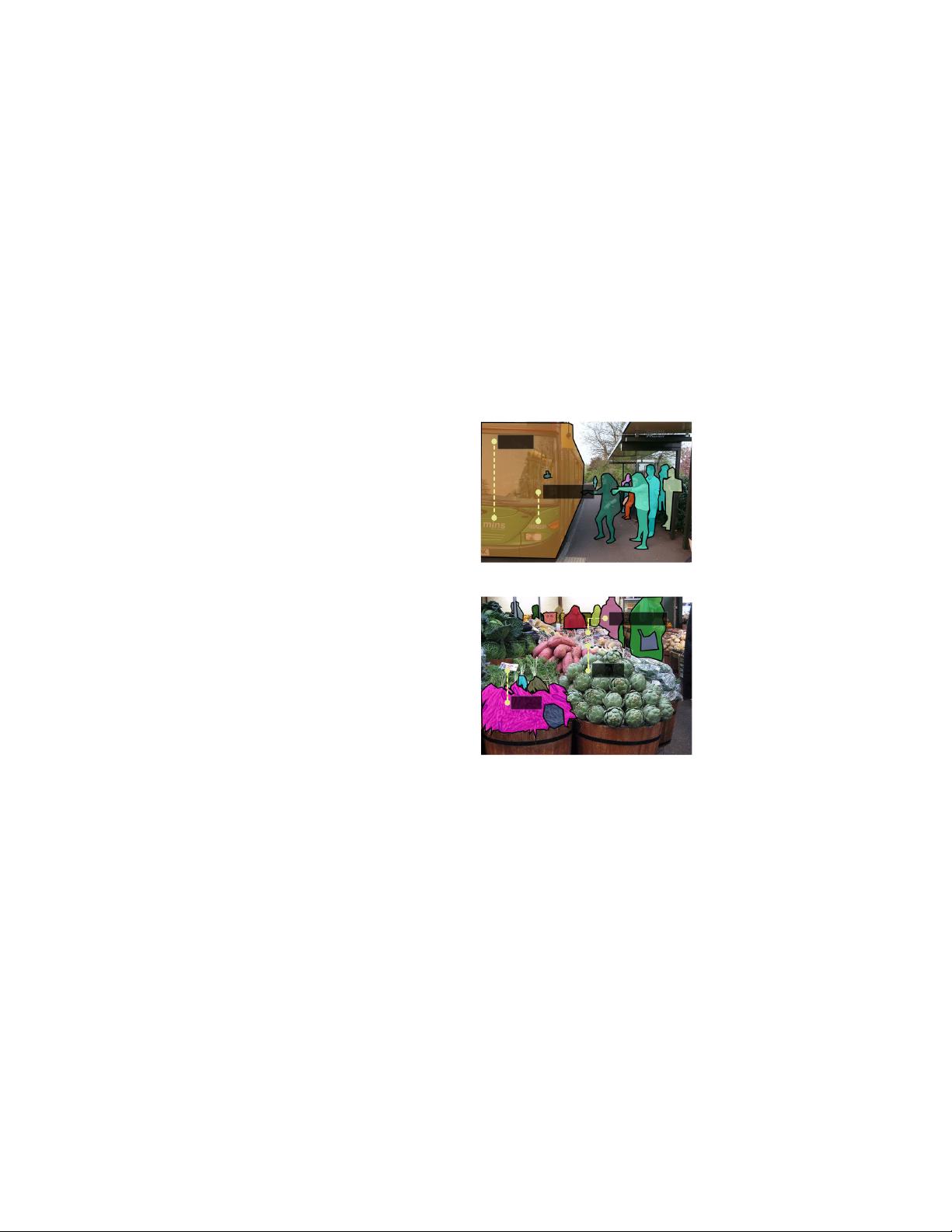

a group of people point towards a green bus.

MS COCO image and annotations

COCO-Text annotations

1) 'mins',

legible

machine printed

English

2) 'Transport'

legible

machine printed

English

OCR results:

CORRECT

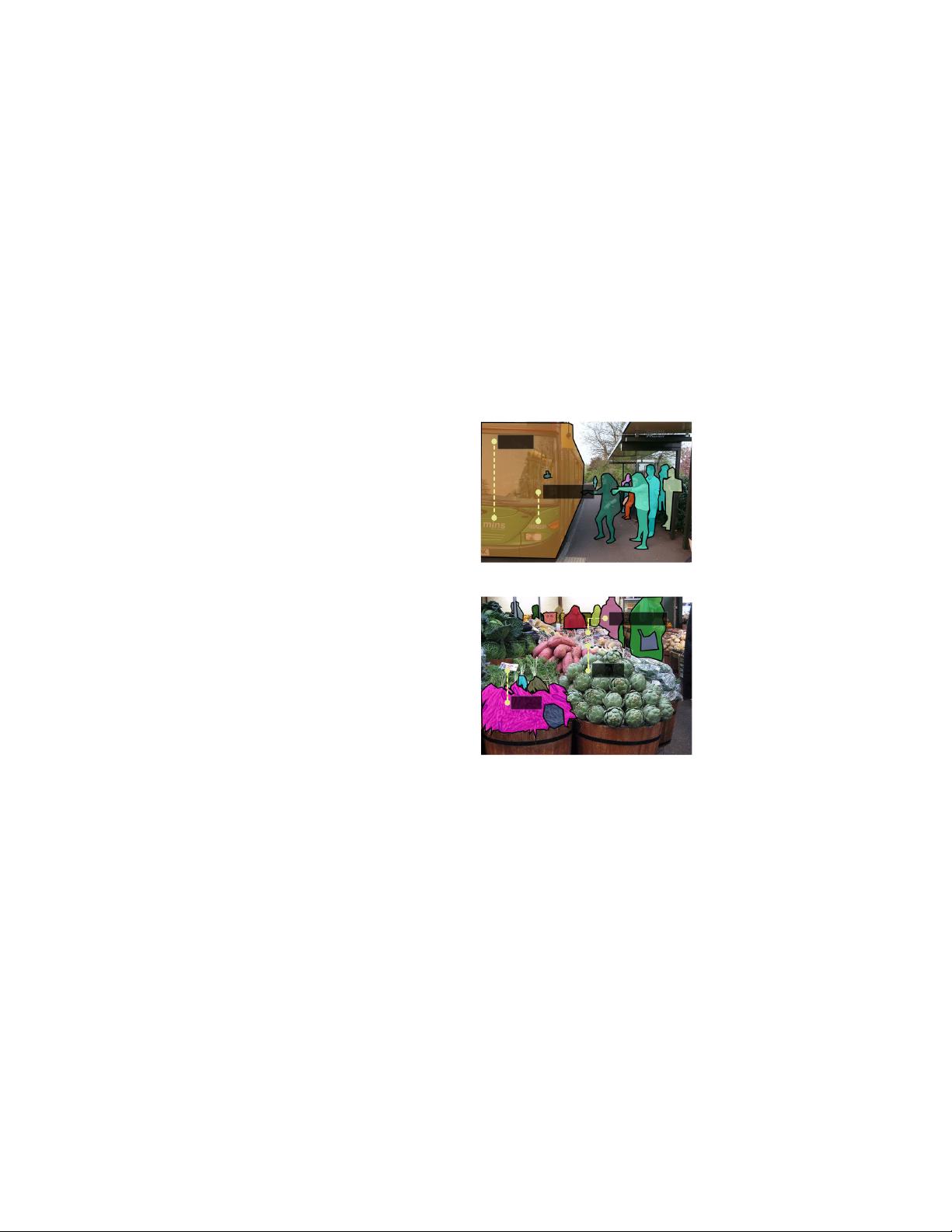

1) 'sweet potato',

legible

handwritten

English

2) '2.20'

legible

handwritten

English

3) '1.50'

legible

handwritten

English

...

OCR results:

INCORRECT

huge barrels of vegetables with people near them.

1) ‘mins’

1) ‘sweet potato’

2) ‘2.20’

3) ‘1.50’

2) ‘Transport’

Figure 1. Left: Example MS COCO images with object segmen-

tation and captions. Right: COCO-Text annotations. For the top

image, the photo OCR finds and recognizes the text printed on the

bus. For the bottom image, the OCR does not recognize the hand-

written price tags on the fruit stand.

To advance the understanding of text in unconstrained scenes, we present a new large-scale dataset for text in natural images. The dataset is based on the Microsoft COCO dataset [10], which annotates common objects in their natural contexts. Combining rich text annotations and object annotations in natural images provides a great opportunity for research in scene text detection and recognition. MS COCO was not collected with text in mind and is thus potentially less biased towards text. Further, combining text with object annotations allows for contextual reasoning about scene text and objects. During a pilot study of state-of-the-art photo OCR methods on MS COCO, we made two key observations: First, text in natural scenes is very diverse, ranging

arXiv:1601.07140v2 [cs.CV] 19 Jun 2016