Method Entity/Relation embeddings Energy function

TransE (Bordes et al., 2013) e, r ∈ R

d

f(e

i

, r

k

, e

j

) = ∥e

i

+ r

k

−e

j

∥

ℓ

1

/ℓ

2

SME (lin) (Bordes et al., 2014) e, r ∈ R

d

f(e

i

, r

k

, e

j

) =

(

W

u1

r

k

+ W

u2

e

i

+ b

u

)

T

W

v1

r

k

+ W

v2

e

j

+ b

v

SME (bilin) (Bordes et al., 2014) e, r ∈ R

d

f(e

i

, r

k

, e

j

) =

W

u

¯

×

3

r

k

e

i

+ b

u

T

W

v

¯

×

3

r

k

e

j

+ b

v

SE (Bordes et al., 2011)

e

∈

R

d

,

R

u

,

R

v

∈

R

d×d

f

(

e

i

, r

k

, e

j

) = ∥R

u

k

e

i

−R

v

k

e

j

∥

ℓ

1

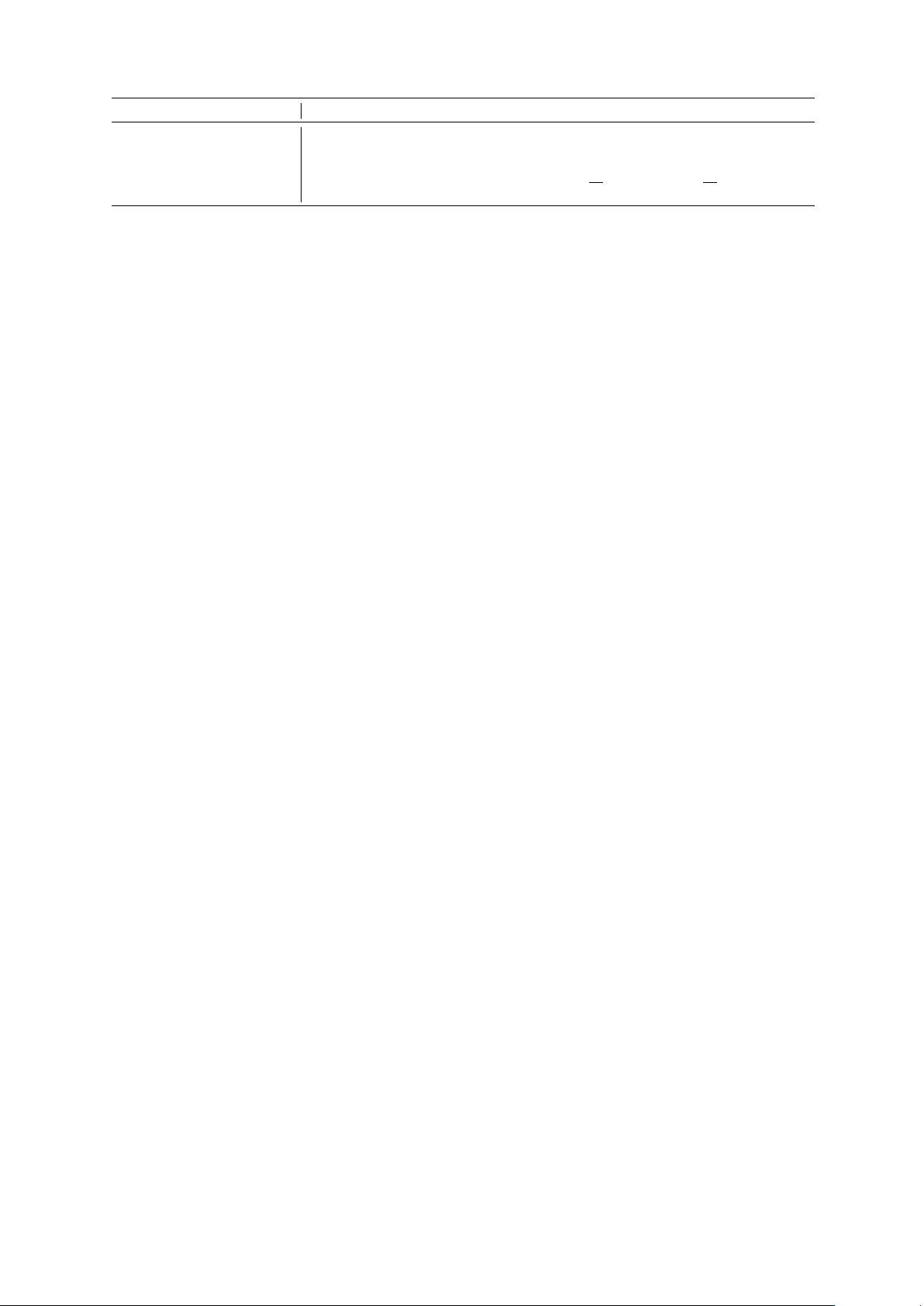

Table 1: Existing KG embedding models.

function definition. Three state-of-the-art embed-

ding models, namely TransE (Bordes et al., 2013),

SME (Bordes et al., 2014), and SE (Bordes et al.,

2011), are detailed below. Please refer to (Jenat-

ton et al., 2012; Socher et al., 2013; Wang et al.,

2014b; Lin et al., 2015) for other methods.

TransE (Bordes et al., 2013) represents both en-

tities and relations as vectors in the embedding s-

pace. For a given triple ⟨e

i

, r

k

, e

j

⟩, the relation is

interpreted as a translation vector r

k

so that the

embedded entities e

i

and e

j

can be connected by

r

k

with low error. The energy function is defined

as f (e

i

, r

k

, e

j

) = ∥e

i

+ r

k

− e

j

∥

ℓ

1

/ℓ

2

, where

∥

·

∥

ℓ

1

/ℓ

2

denotes the ℓ

1

-norm or ℓ

2

-norm.

SME (Bordes et al., 2014) also represents enti-

ties and relations as vectors, but models triples in

a more expressive way. Given a triple ⟨e

i

, r

k

, e

j

⟩,

it first employs a function g

u

(

·, ·

)

to combine r

k

and e

i

, and g

v

(

·, ·

)

to combine r

k

and e

j

. Then,

the energy function is defined as matching g

u

(

·, ·

)

and g

v

(

·, ·

)

by their dot product, i.e., f (e

i

, r

k

, e

j

) =

g

u

(r

k

, e

i

)

T

g

v

(r

k

, e

j

). There are two versions of

SME, linear and bilinear (denoted as SME (lin)

and SME (bilin) respectively), obtained by defin-

ing different g

u

(

·, ·

)

and g

v

(

·, ·

)

.

SE (Bordes et al., 2011) represents entities as

vectors but relations as matrices. Each relation is

modeled by a left matrix R

u

k

and a right matrix R

v

k

,

acting as independent projections to head and tail

entities respectively. If a triple ⟨e

i

, r

k

, e

j

⟩ holds,

R

u

k

e

i

and R

v

k

e

j

should be close to each other. The

energy function is f (e

i

, r

k

, e

j

) = ∥R

u

k

e

i

− R

v

k

e

j

∥

ℓ

1

.

Table 1 summarizes the entity/relation representa-

tions and energy functions used in these models.

3 Semantically Smooth Embedding

The methods introduced above perform the em-

bedding task based solely on observed facts. The

only requirement is that the learned embeddings

should be compatible within each individual fact.

However, they fail to discover the intrinsic geo-

metric structure of the embedding space. To deal

with this limitation, we introduce Semantically S-

mooth Embedding (SSE) which constrains the em-

bedding task by incorporating geometrically based

regularization terms, constructed by using addi-

tional semantic categories of entities.

3.1 Problem Formulation

Suppose we are given a KG consisting of n entities

and m relations. The facts observed are stored as

a set of triples O =

⟨e

i

, r

k

, e

j

⟩

. A triple ⟨e

i

, r

k

, e

j

⟩

indicates that entity e

i

and entity e

j

are connected

by relation r

k

. In addition, the entities are classi-

fied into multiple semantic categories. Each entity

e is associated with a label c

e

indicating the cate-

gory to which it belongs. SSE aims to embed the

entities and relations into a continuous vector s-

pace which is compatible with the observed facts,

and at the same time semantically smooth.

To make the embedding space compatible with

the observed facts, we make use of the triple set O

and follow the same strategy adopted in previous

methods. That is, we define an energy function

on each candidate triple (e.g. the energy functions

listed in Table 1), and require observed triples to

have lower energies than unobserved ones (i.e. the

margin-based ranking loss defined in Eq. (1)).

To make the embedding space semantically s-

mooth, we further leverage the entity category in-

formation

{

c

e

}

, and assume that entities within the

same semantic category should lie close to each

other in the embedding space. This smoothness

assumption is similar to the local invariance as-

sumption exploited in manifold learning theory

(i.e. nearby points are likely to have similar em-

beddings or labels). So we employ two manifold

learning algorithms Laplacian Eigenmaps (Belkin

and Niyogi, 2001) and Locally Linear Embed-

ding (Roweis and Saul, 2000) to model such se-

mantic smoothness, termed as LE and LLE for

short respectively.

3.2 Modeling Semantic Smoothness by LE

Laplacian Eigenmaps (LE) is a manifold learning

algorithm that preserves local invariance between