Browser

Web

Server

CDN

1 2

3

4

7

8

Photo Store

Server

Photo Store

Server

5

6

NFS

NASNASNAS

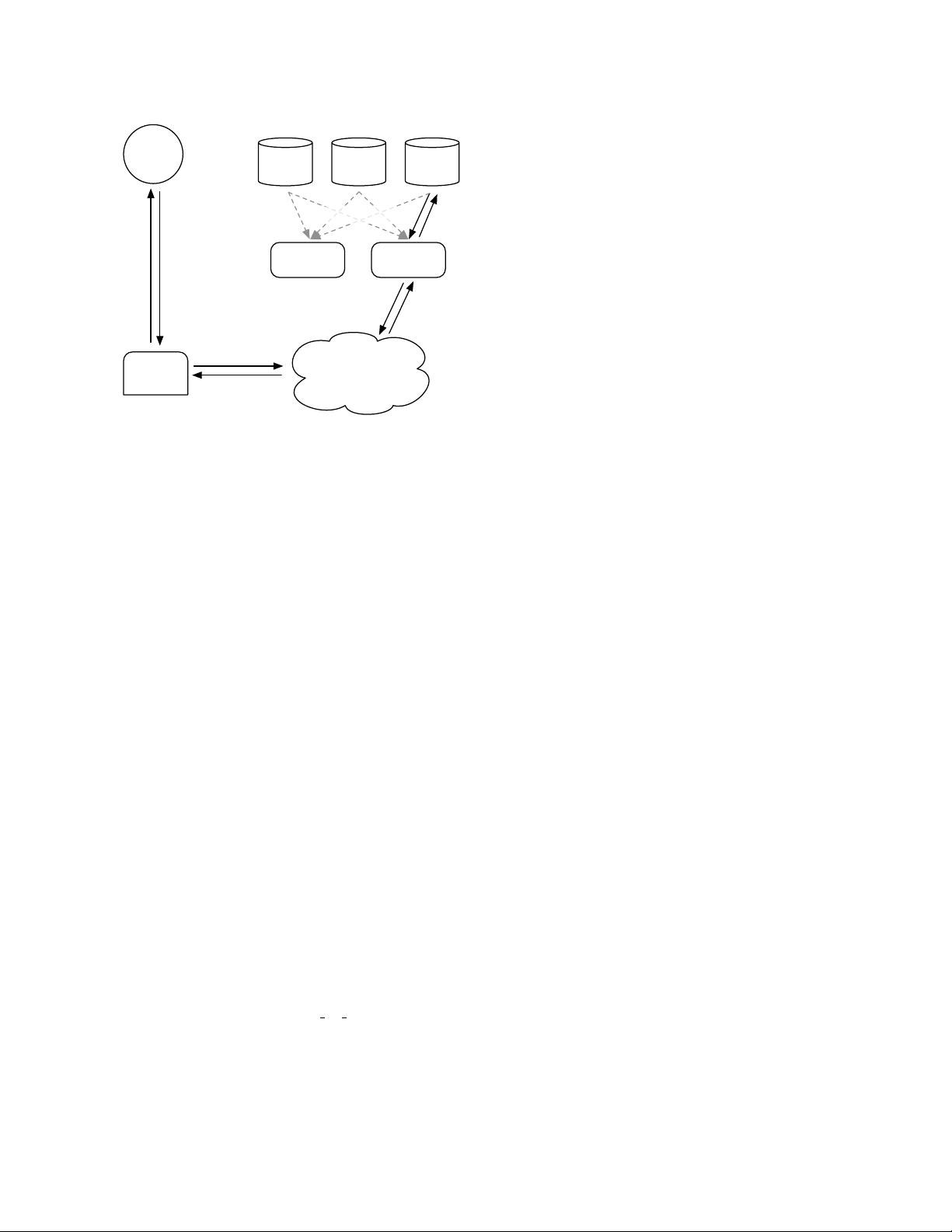

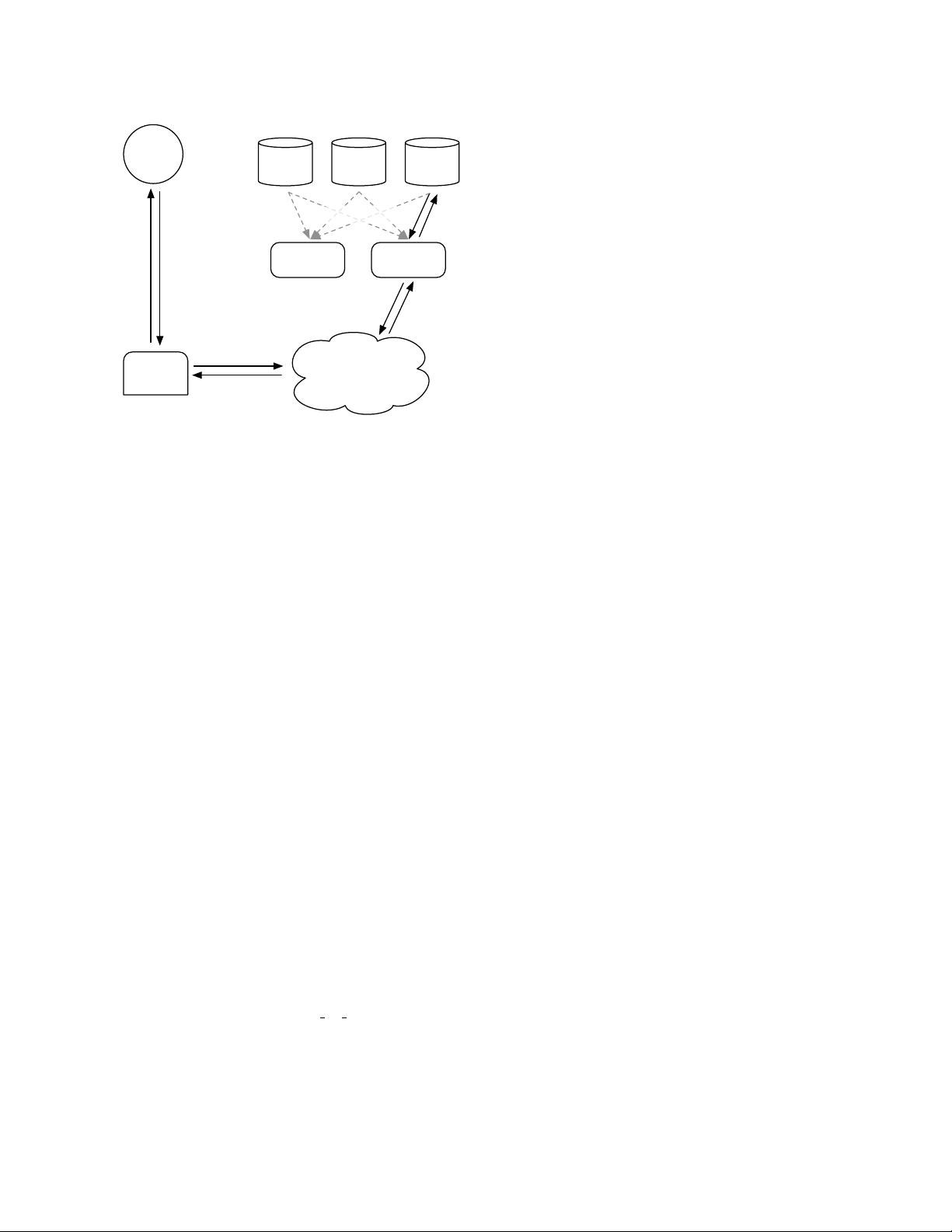

Figure 2: NFS-based Design

machines, Photo Store servers, then mount all the volumes exported by these NAS appliances over NFS. Figure 2 illustrates this architecture and shows Photo Store servers processing HTTP requests for images. From an image’s URL a Photo Store server extracts the volume and full path to the file, reads the data over NFS, and returns the result to the CDN.
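The URL-to-file step above can be sketched as follows. This is a minimal illustration, not the Photo Store implementation: the URL layout (`/<volume>/<path...>`), the mount root `/mnt/nas`, and the function name `resolve_photo_path` are all assumptions made for the example, since the text does not specify them.

```python
import posixpath
from urllib.parse import urlparse


def resolve_photo_path(url, mount_root="/mnt/nas"):
    """Map a photo URL to (volume, full path on the NFS mount).

    Assumes a hypothetical URL layout of /<volume>/<path...>; the real
    layout is not given in the text.
    """
    segments = [s for s in urlparse(url).path.split("/") if s]
    if len(segments) < 2:
        raise ValueError("URL must name a volume and a file path")
    # Reject relative segments so a request cannot escape the mount root.
    if any(s in (".", "..") for s in segments):
        raise ValueError("relative path segments are not allowed")
    volume = segments[0]
    full_path = posixpath.join(mount_root, *segments)
    return volume, full_path
```

A server following this sketch would then open `full_path` on the mounted volume and stream the bytes back to the CDN.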

We initially stored thousands of files in each directory of an NFS volume, which led to an excessive number of disk operations to read even a single image. Because of how the NAS appliances manage directory metadata, placing thousands of files in a directory was extremely inefficient, as the directory’s blockmap was too large to be cached effectively by the appliance. Consequently, it was common to incur more than 10 disk operations to retrieve a single image. After reducing directory sizes to hundreds of images per directory, the resulting system would still generally incur 3 disk operations to fetch an image: one to read the directory metadata into memory, a second to load the inode into memory, and a third to read the file contents.

To further reduce disk operations we let the Photo Store servers explicitly cache file handles returned by the NAS appliances. When reading a file for the first time a Photo Store server opens the file normally but also caches the filename to file handle mapping in memcache [18]. When requesting a file whose file handle is cached, a Photo Store server opens the file directly using a custom system call, open_by_filehandle, that we added to the kernel. Regrettably, this file handle cache provides only a minor improvement, as less popular photos are less likely to be cached to begin with. One could argue that an approach in which all file handles are stored in memcache might be a workable solution. However, that only addresses part of the problem, as it relies on the NAS appliance having all of its inodes in main memory, an expensive requirement for traditional filesystems. The major lesson we learned from the NAS approach is that focusing only on caching (whether the NAS appliance’s cache or an external cache like memcache) has limited impact for reducing disk operations. The storage system ends up processing the long tail of requests for less popular photos, which are not available in the CDN and are thus likely to miss in our caches.
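To illustrate why the file handle cache helps so little on long-tail traffic, the following toy model counts disk operations per read. It is a sketch under stated assumptions, not the production code: a bounded in-process LRU map stands in for memcache, a miss is charged the 3 disk operations described above, and a hit is charged 2 (inode plus data, assuming the appliance does not already hold the inode in memory). The names `HandleCache`, `read_photo`, and `total_disk_ops` are hypothetical.

```python
from collections import OrderedDict


class HandleCache:
    """Bounded LRU map from filename to file handle (stand-in for memcache)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self._entries = OrderedDict()

    def get(self, filename):
        handle = self._entries.get(filename)
        if handle is not None:
            self._entries.move_to_end(filename)  # mark as recently used
        return handle

    def put(self, filename, handle):
        self._entries[filename] = handle
        self._entries.move_to_end(filename)
        if len(self._entries) > self.capacity:
            self._entries.popitem(last=False)  # evict least recently used


def read_photo(filename, cache):
    """Return the disk operations charged for one read in the toy model."""
    if cache.get(filename) is not None:
        return 2  # handle cached: skip the directory lookup, pay inode + data
    cache.put(filename, "handle:" + filename)  # placeholder handle value
    return 3  # directory metadata + inode + data


def total_disk_ops(requests, capacity):
    cache = HandleCache(capacity)
    return sum(read_photo(name, cache) for name in requests)
```

In this model a repeated request saves one operation per hit, while a stream of distinct photos, which is what the storage tier mostly sees once the CDN has absorbed popular content, pays the full 3 operations every time regardless of cache size.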

2.3 Discussion

It would be difficult for us to offer precise guidelines for when or when not to build a custom storage system. However, we believe it is still helpful for the community to gain insight into why we decided to build Haystack.

Faced with the bottlenecks in our NFS-based design, we explored whether it would be useful to build a system similar to GFS [9]. Since we store most of our user data in MySQL databases, the main use cases for files in our system were the directories engineers use for development work, log data, and photos. NAS appliances offer a very good price/performance point for development work and for log data. Furthermore, we leverage Hadoop [11] for the extremely large log data. Serving photo requests in the long tail represents a problem for which neither MySQL, NAS appliances, nor Hadoop is well suited.

One could phrase the dilemma we faced as follows: existing storage systems lacked the right RAM-to-disk ratio. However, there is no right ratio. The system just needs enough main memory so that all of the filesystem metadata can be cached at once. In our NAS-based approach, one photo corresponds to one file and each file requires at least one inode, which is hundreds of bytes large. Having enough main memory in this approach is not cost-effective. To achieve a better price/performance point, we decided to build a custom storage system that reduces the amount of filesystem metadata per photo so that having enough main memory is dramatically more cost-effective than buying more NAS appliances.
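The memory argument above comes down to simple arithmetic, sketched below. All figures in the usage note are illustrative assumptions rather than numbers from this section: the text says only that an inode is hundreds of bytes, and it does not state a photo count or a target metadata size here. The function names are hypothetical.

```python
def metadata_bytes(num_photos, bytes_per_photo):
    """Main memory needed to hold the metadata for every photo at once."""
    return num_photos * bytes_per_photo


def memory_reduction_factor(inode_bytes, compact_bytes):
    """How many times less RAM a compact per-photo record needs than an inode."""
    return inode_bytes / compact_bytes
```

For instance, with an assumed 1 billion photos and an assumed 500-byte inode, `metadata_bytes(10**9, 500)` gives 500 GB of metadata to keep resident. If a custom format needed only an assumed 10 bytes per photo, the same call gives 10 GB, a factor of `memory_reduction_factor(500, 10)`, or 50, less memory.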

3 Design & Implementation

Facebook uses a CDN to serve popular images and leverages Haystack to respond to photo requests in the long tail efficiently. When a web site has an I/O bottleneck serving static content the traditional solution is to use a CDN. The CDN shoulders enough of the burden so that the storage system can process the remaining tail. At Facebook a CDN would have to cache an unrea-