Fusion-Aware Point Convolution for Online Semantic 3D Scene Segmentation

Jiazhao Zhang (1,*), Chenyang Zhu (1,*), Lintao Zheng (1), Kai Xu (1,2,†)

(1) National University of Defense Technology

(2) SpeedBot Robotics Ltd.

Abstract

Online semantic 3D segmentation alongside real-time RGB-D reconstruction poses special challenges, such as how to perform 3D convolution directly over the progressively fused 3D geometric data and how to fuse information effectively from frame to frame. We propose a novel fusion-aware 3D point convolution that operates directly on the geometric surface being reconstructed and effectively exploits inter-frame correlation for high-quality 3D feature learning. This is enabled by a dedicated dynamic data structure that organizes the online-acquired point cloud with global-local trees. Globally, we compile the online reconstructed 3D points into an incrementally growing coordinate interval tree, enabling fast point insertion and neighborhood query. Locally, we maintain the neighborhood information for each point using an octree whose construction benefits from the fast query of the global tree. Both levels of trees update dynamically and help the 3D convolution exploit temporal coherence for effective information fusion across RGB-D frames. Through evaluation on public benchmark datasets, we show that our method achieves state-of-the-art semantic segmentation accuracy with online RGB-D fusion at 10 FPS.
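To make the global level of the data structure more concrete, the following is a minimal sketch of incremental point insertion and neighborhood query over per-axis coordinate intervals. It is an illustration under stated assumptions, not the implementation described in this paper: the class name `CoordinateIntervalIndex`, the `interval` width, and the use of hash maps in place of balanced trees are all hypothetical choices made for brevity.

```python
import math
from collections import defaultdict


class CoordinateIntervalIndex:
    """Hypothetical, simplified stand-in for a per-axis coordinate interval index.

    Assumption: each axis is cut into fixed-width intervals, and every interval
    stores the ids of the points whose coordinate falls inside it. A hash map
    replaces the balanced tree a real implementation would likely use.
    """

    def __init__(self, interval=0.05):
        self.interval = interval
        self.points = []  # point id -> (x, y, z)
        self.axes = [defaultdict(set) for _ in range(3)]

    def _key(self, value):
        # Index of the interval that contains this coordinate value.
        return math.floor(value / self.interval)

    def insert(self, point):
        """Add a point incrementally and return its id."""
        pid = len(self.points)
        self.points.append(tuple(point))
        for axis in range(3):
            self.axes[axis][self._key(point[axis])].add(pid)
        return pid

    def query(self, center, radius):
        """Return the sorted ids of all points within `radius` of `center`."""
        candidates = None
        for axis in range(3):
            lo = self._key(center[axis] - radius)
            hi = self._key(center[axis] + radius)
            ids = set()
            for k in range(lo, hi + 1):
                ids |= self.axes[axis].get(k, set())
            # Intersect the per-axis candidate sets.
            candidates = ids if candidates is None else candidates & ids
            if not candidates:
                return []
        # Exact Euclidean check on the surviving candidates.
        return sorted(
            pid for pid in candidates
            if math.dist(self.points[pid], center) <= radius
        )


index = CoordinateIntervalIndex(interval=0.05)
index.insert((0.0, 0.0, 0.0))
index.insert((0.03, 0.0, 0.0))
index.insert((1.0, 1.0, 1.0))
neighbors = index.query((0.0, 0.0, 0.0), radius=0.05)
```

The point of the sketch is that insertion touches only one interval per axis, and a neighborhood query inspects only the intervals overlapping the search range before intersecting the three candidate sets, so neither operation requires rebuilding the structure as new frames arrive.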

1. Introduction

Semantic segmentation of 3D scenes is a fundamental task in 3D vision. Recent state-of-the-art methods mostly apply deep learning to either 3D geometric data alone [25] or the fusion of 2D and 3D data [20]. These approaches, however, are usually offline, working with already reconstructed 3D scene geometry [5, 14]. Online scene understanding associated with real-time RGB-D reconstruction [13, 22], on the other hand, is more appealing because of its potential applications in robotics and AR. Technically, online analysis can also fully exploit the spatial-temporal information available during RGB-D fusion.

For the task of semantic scene segmentation in company

with RGB-D fusion, deep-learning-based approaches com-

monly adopt the frame feature fusion paradigm. Such meth-

*

Joint first authors

†

Corresponding author: kevin.kai.xu@gmail.com

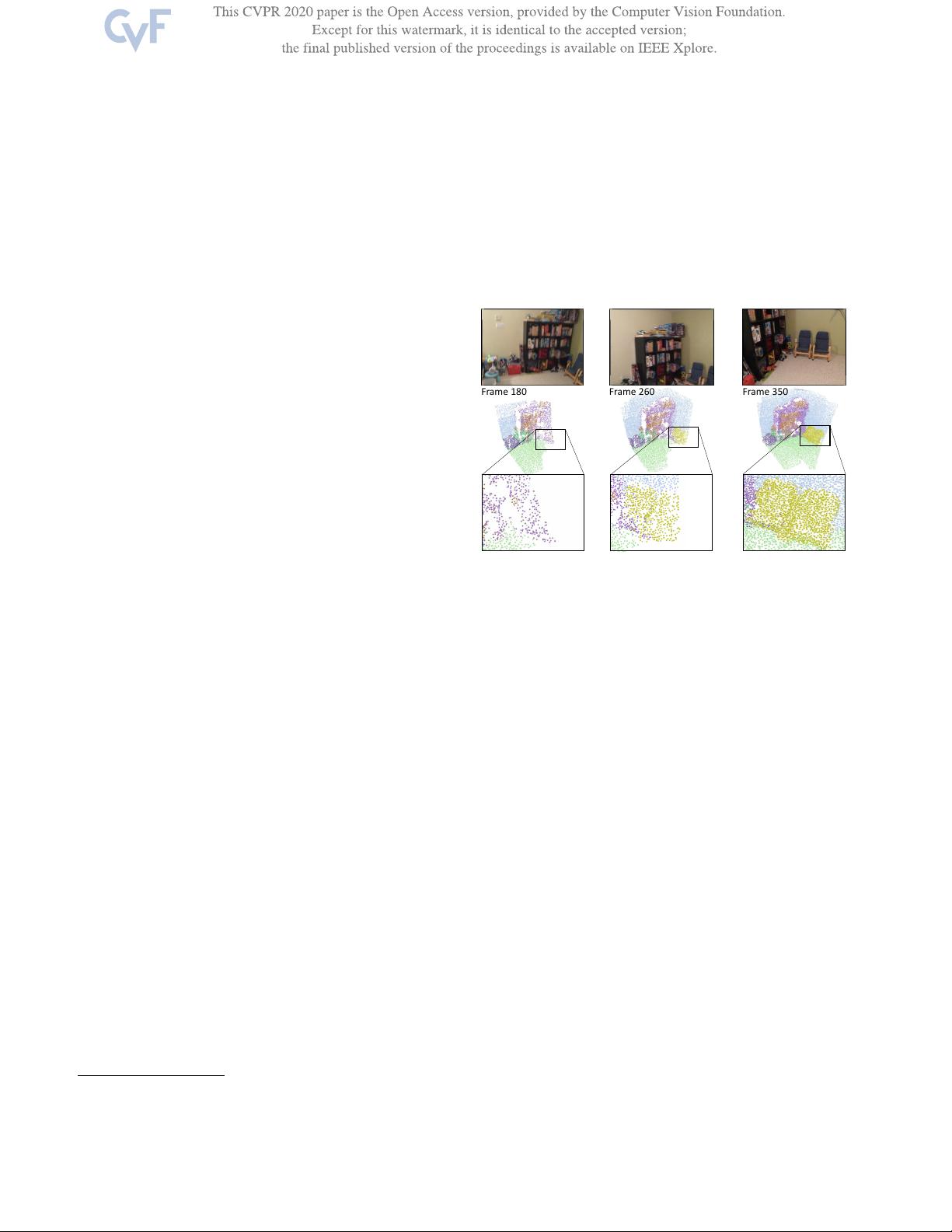

Frame 180

Frame 350Frame 260

Figure 1: We present fusion-aware 3D point convolution

which operates directly over the progressively acquired and

online reconstructed scene surface. We show the point-wise

labeling is being gradually improved (the chairs are recog-

nized) as more and more frames (first row) are fused in.

ods first perform 2D convolution in the individual RGB-D

frames and then fuse the extracted 2D features across con-

secutive frames. Previous works conduct such feature fu-

sion through either max-pooling operation [

14] or Bayesian

probability updating [

20]. We advocate the adoption of di-

rect convolution over 3D surfaces for frame feature fusion.

3D convolution on surfaces learns features of the intrinsic

structure of the geometric surfaces [

2] that cannot be well-

captured by view-based convolution and fusion. During on-

line RGB-D fusion, however, the scene geometry changes

progressively with the incremental scanning and reconstruc-

tion. It is difficult to perform 3D convolution directly over

the time-varying geometry. Besides, to attain a powerful

3D feature learning, special designs are needed to exploit

the temporal correlation between adjacent frames.
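For reference, the two prior frame-feature fusion schemes mentioned above can be sketched per 3D point as follows. This is an illustrative sketch rather than the code of the cited works: the function names `fuse_max_pool` and `fuse_bayesian` are hypothetical, and the Bayesian variant is assumed to be the common recursive update that multiplies the running class distribution by the new frame's prediction and renormalizes.

```python
def fuse_max_pool(fused, frame_feat):
    """Element-wise max of the running feature and the new frame's feature."""
    return [max(a, b) for a, b in zip(fused, frame_feat)]


def fuse_bayesian(prior, frame_prob):
    """Recursive Bayesian update of per-point class probabilities.

    Assumption: posterior is proportional to prior times the new frame's
    predicted class probabilities, followed by renormalization.
    """
    unnormalized = [p * q for p, q in zip(prior, frame_prob)]
    total = sum(unnormalized)
    if total == 0.0:
        # Degenerate case: the new frame contradicts the prior everywhere,
        # so keep the prior unchanged instead of dividing by zero.
        return list(prior)
    return [u / total for u in unnormalized]


# Usage: fuse two frames' observations of the same 3D point.
feature = fuse_max_pool([0.1, 0.9], [0.5, 0.2])
belief = fuse_bayesian([0.5, 0.5], [0.8, 0.2])
belief = fuse_bayesian(belief, [0.6, 0.4])
```

Both schemes combine per-frame 2D predictions point by point and do not convolve over the 3D surface itself, which is the limitation the direct 3D convolution advocated here is meant to address.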

In this work, we argue that a fast and powerful 3D convolution for online segmentation necessitates an efficient and versatile in-memory organization of dynamic 3D geometric data. To this end, we propose a tree-based global-local dynamic data structure to enable efficient data maintenance and 3D convolution of time-varying geometry. Globally, we organize the online fused 3D points with an incrementally growing coordinate interval tree, which enables fast point
