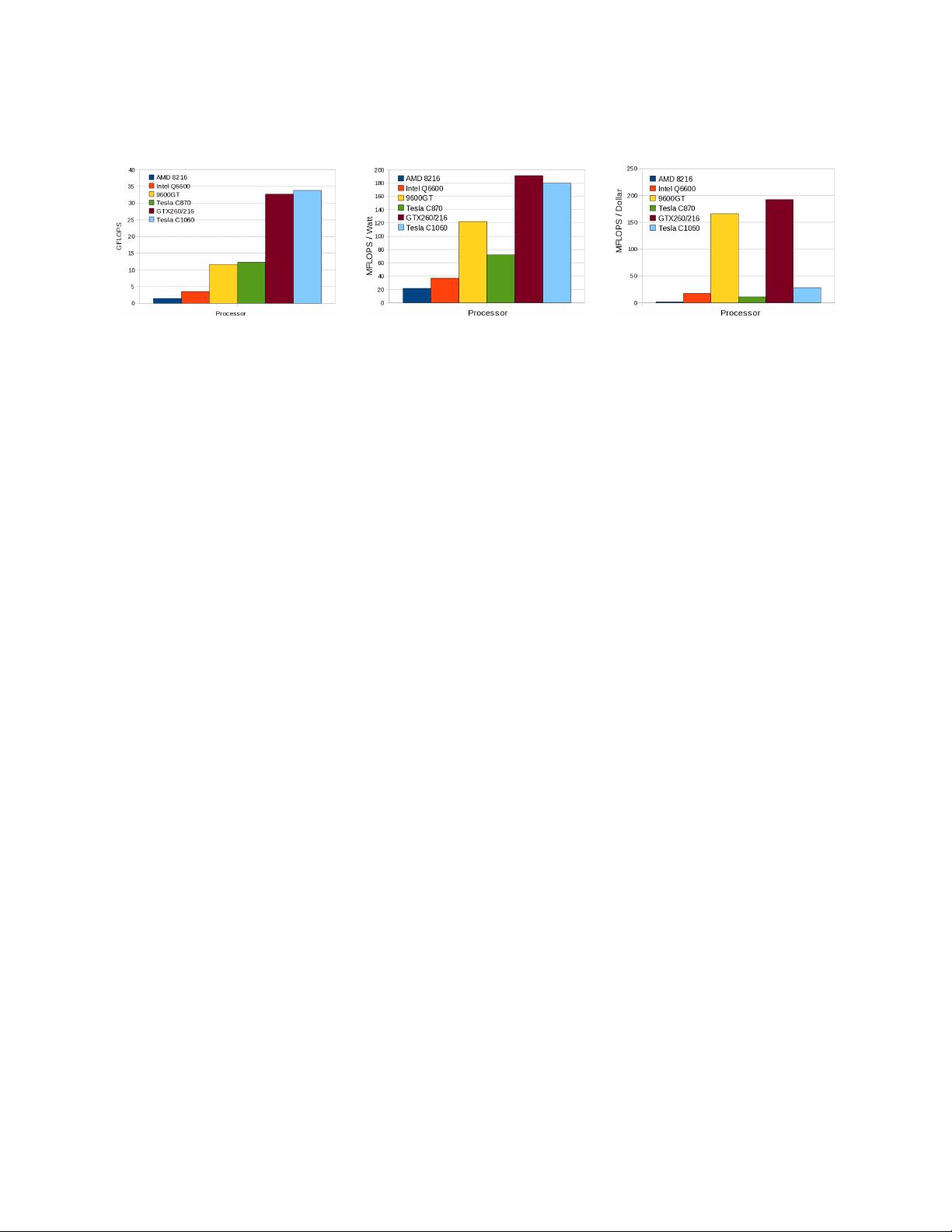

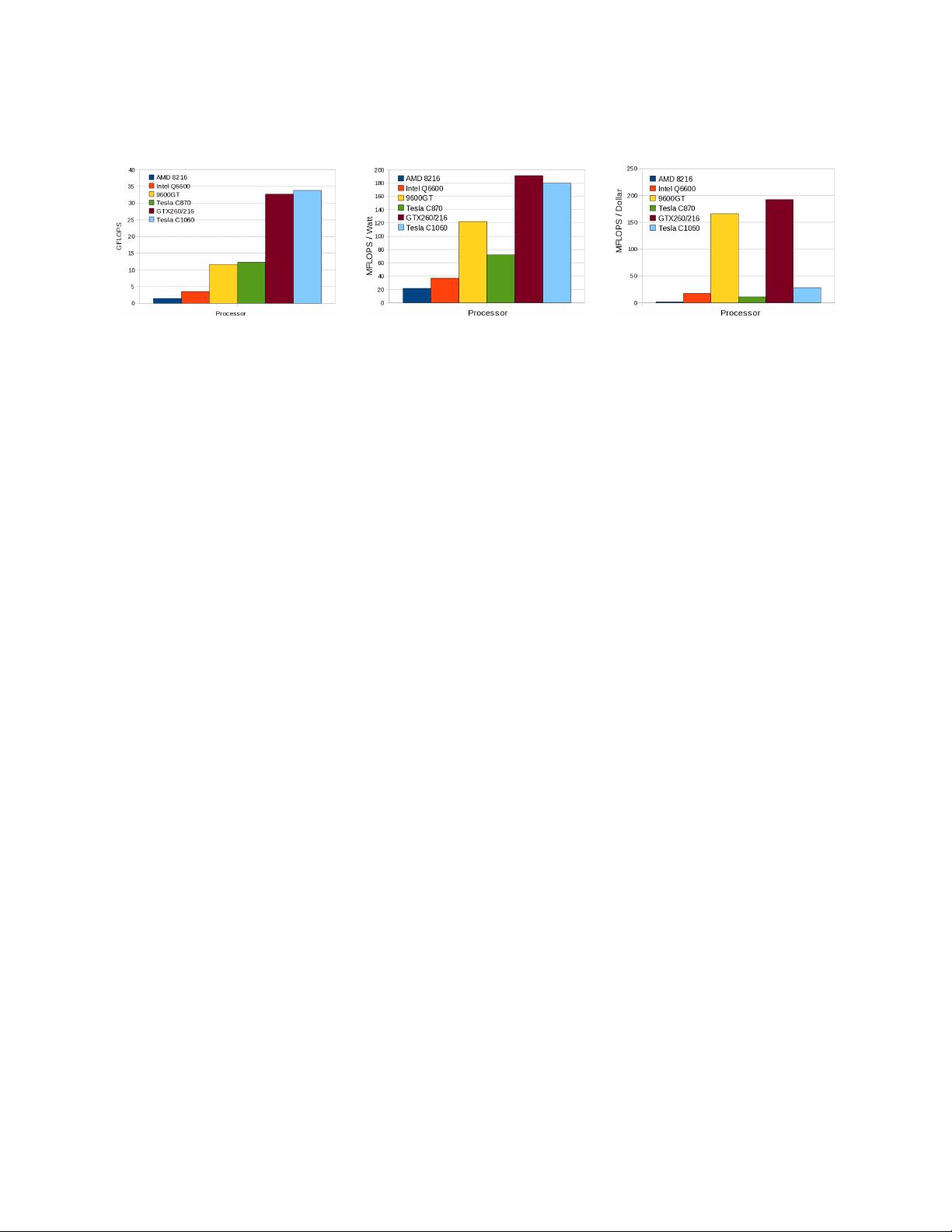

Figure 1. Three performance metrics on six selected CPU and GPU devices based on incompressible flow computations on a single device. Actual sustained performance is used rather than peak device performance. a) Sustained GFLOPS, b) MFLOPS/Watt, c) MFLOPS/Dollar.

Figure 1 shows three performance metrics on each platform using an incompressible flow CFD code. The GPU version of the CFD code clearly outperforms the Pthreads shared-memory parallel CPU version. Both implementations are written in C and use identical numerical methods⁹. The largest gain in single-GPU performance came with the introduction of compute capability 1.3, whose relaxed memory coalescing rules greatly reduce memory latency in some computing kernels⁴. Compute capability 1.3 also added support for double precision, which is important in many applications.
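The following Python sketch makes the effect of the relaxed coalescing rules concrete. It counts the global memory transactions issued for one half-warp of 16 threads reading 4-byte words, using a simplified model of the rules NVIDIA's CUDA C Programming Guide describes for compute capability 1.0/1.1 and for 1.2/1.3. The 1.3 devices inherit the relaxed rules introduced with 1.2. The model assumes that all 16 threads are active and that addresses are 4-byte aligned. It ignores 1-, 2-, 8- and 16-byte words. The function names are illustrative and are not part of any NVIDIA API.

```python
WORD_BYTES = 4   # assumed: each thread reads one aligned 4-byte word (e.g. a float)
HALF_WARP = 16   # compute capability 1.x coalesces global accesses per half-warp


def transactions_cc10(addrs):
    """CC 1.0/1.1 model: one 64-byte transaction only if thread k reads the
    k-th word of a 64-byte-aligned segment; otherwise one 32-byte
    transaction per thread."""
    base = addrs[0]
    in_order = all(a == base + WORD_BYTES * k for k, a in enumerate(addrs))
    if base % (HALF_WARP * WORD_BYTES) == 0 and in_order:
        return [HALF_WARP * WORD_BYTES]
    return [32] * len(addrs)


def transactions_cc12(addrs):
    """CC 1.2/1.3 model: take the 128-byte segment holding the word of the
    lowest-numbered pending thread, shrink it to 64 or 32 bytes while only
    one half is used, issue it, and repeat until every thread is serviced."""
    pending = list(addrs)  # kept in thread order
    sizes = []
    while pending:
        size = 128
        start = pending[0] - pending[0] % size
        hits = [a for a in pending if start <= a < start + size]
        while size > 32:
            half = size // 2
            if all(a + WORD_BYTES <= start + half for a in hits):
                size = half  # only the lower half is touched
            elif all(a >= start + half for a in hits):
                start, size = start + half, half  # only the upper half is touched
            else:
                break
        sizes.append(size)
        pending = [a for a in pending if a not in hits]
    return sizes


if __name__ == "__main__":
    aligned = [WORD_BYTES * k for k in range(HALF_WARP)]
    shifted = [WORD_BYTES * (k + 1) for k in range(HALF_WARP)]  # misaligned by one word
    print("aligned:", transactions_cc10(aligned), transactions_cc12(aligned))
    print("shifted:", transactions_cc10(shifted), transactions_cc12(shifted))
```

Suppose the half-warp is shifted by one word, as happens when a stencil kernel reads a neighboring grid point. In this model the older rules fall back to 16 separate 32-byte transactions, while the 1.2/1.3 rules issue a single 128-byte transaction.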

Figure 1b shows performance relative to the peak electrical power of each device. The GPUs show a definite advantage over the CPUs in energy efficiency. The consumer video cards have a slight advantage over the Tesla series, partly because they have significantly less active global memory. A recent paper by Kindratenko et al.¹² details the measured power use of two clusters built with NVIDIA Tesla S1070 accelerators. They find that intensive global memory access draws significant power. This implies that CUDA kernels using shared memory can not only achieve higher performance but also use less power. Approximately 70% of the S1070's peak power is used while running the molecular dynamics program NAMD. Figure 1c shows performance relative to the street price of each device, which sheds light on the cost effectiveness of GPU computing. The consumer GPUs fare better by this measure, although the comparison ignores other factors such as the additional memory on the compute server GPUs.
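The efficiency metrics in Figure 1b and 1c are simple ratios: sustained throughput divided by peak electrical power, and sustained throughput divided by street price. The Python sketch below computes both for a single device. The `Device` type is an illustrative assumption. The sample numbers are hypothetical and are not the measurements plotted in Figure 1.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    sustained_gflops: float  # measured on the application, not peak FLOPS
    peak_watts: float        # rated peak electrical power of the device
    street_price_usd: float


def mflops_per_watt(d: Device) -> float:
    """Sustained MFLOPS per watt of peak electrical power."""
    return d.sustained_gflops * 1000.0 / d.peak_watts


def mflops_per_dollar(d: Device) -> float:
    """Sustained MFLOPS per dollar of street price."""
    return d.sustained_gflops * 1000.0 / d.street_price_usd


if __name__ == "__main__":
    # Hypothetical device: the numbers only illustrate the arithmetic.
    gpu = Device("example-gpu", sustained_gflops=20.0, peak_watts=200.0, street_price_usd=400.0)
    print(f"{gpu.name}: {mflops_per_watt(gpu):.1f} MFLOPS/W, "
          f"{mflops_per_dollar(gpu):.1f} MFLOPS/$")
```

Because the denominator is rated peak power, substituting measured power would raise the MFLOPS/Watt figure of any device that draws less than its peak while running the code.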

The rationale for GPU clusters is the same as for CPU clusters: larger problems can be solved and total performance increases. Figure 1c indicates that clusters of commodity hardware can offer compelling price/performance benefits. Spreading a model over a cluster with multiple GPUs in each node overcomes per-device memory limits, which makes inexpensive GPUs practical for large computational problems. Today's motherboards can accommodate up to 8 GPUs in a single node⁶, enabling large-scale compute power in small to medium size clusters. However, the resulting heterogeneous architecture, with its deep memory hierarchy, creates challenges in developing scalable and efficient simulation applications. In the following sections, we focus on maximizing performance on a multi-GPU cluster through a series of mixed MPI-CUDA implementations.
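One common way to spread a model over several GPUs is a one-dimensional slab decomposition. Neighboring subdomains exchange ghost planes. The Python sketch below estimates the device memory each GPU would need under that layout. It also binds MPI ranks to the GPUs of a node round-robin. Several choices here are illustrative assumptions rather than details from the implementations described later: the slab direction (z), one ghost plane per neighbor, the number of arrays, 8-byte values, and consecutive placement of ranks on each node. The helper names are hypothetical.

```python
def slab_decomposition(nz, num_gpus):
    """Split nz z-planes into num_gpus contiguous slabs, returned as
    (start, end) pairs with end exclusive. The first nz % num_gpus slabs
    receive one extra plane."""
    base, extra = divmod(nz, num_gpus)
    slabs, start = [], 0
    for rank in range(num_gpus):
        count = base + (1 if rank < extra else 0)
        slabs.append((start, start + count))
        start += count
    return slabs


def bytes_per_gpu(nx, ny, nz, num_gpus, num_arrays, bytes_per_value=8):
    """Device memory per GPU: owned planes plus one ghost plane for each
    neighboring slab (interior slabs have two neighbors, end slabs one)."""
    sizes = []
    for rank, (z0, z1) in enumerate(slab_decomposition(nz, num_gpus)):
        ghosts = int(rank > 0) + int(rank < num_gpus - 1)
        sizes.append(nx * ny * (z1 - z0 + ghosts) * num_arrays * bytes_per_value)
    return sizes


def gpu_for_rank(rank, gpus_per_node):
    """Round-robin binding; assumes ranks are placed consecutively on each node."""
    return rank % gpus_per_node


if __name__ == "__main__":
    # Hypothetical case: 256^3 grid, 8 double-precision arrays.
    whole = bytes_per_gpu(256, 256, 256, num_gpus=1, num_arrays=8)[0]
    split = max(bytes_per_gpu(256, 256, 256, num_gpus=16, num_arrays=8))
    print(f"one GPU: {whole / 2**20:.0f} MiB, largest of 16 slabs: {split / 2**20:.0f} MiB")
```

In this hypothetical case the largest of 16 slabs needs 72 MiB, including its two ghost planes. The whole grid on one GPU would need 1024 MiB. This shows how decomposition lets cards with modest memory share a problem too large for any one of them.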

III. Related Work

GPU computing has evolved from hardware rendering pipelines that were not amenable to non-rendering tasks to the modern General Purpose Graphics Processing Unit (GPGPU) paradigm. Owens et al.¹³ survey the early history as well as the state of GPGPU computing in 2007. Early work on GPU computing is extensive. It relied on custom programming to reshape each problem so that a rendering pipeline could process it, often a pipeline without 32-bit floating point support. The advent of DirectX 9 hardware in 2003 with floating point support, combined with early work on high-level language support such as BrookGPU, Cg, and Sh, led to a rapid expansion of the field¹⁴⁻¹⁹.

Brandvik and Pullan²⁰ implement 2D and 3D Euler solvers on a single GPU, reporting a 29× speedup for the 2D solver and a 16× speedup for the 3D solver. A distinctive feature of their paper is that the solvers are implemented in both BrookGPU and CUDA. Elsen et al.²¹ show the conversion of a subset of an existing Navier-Stokes


American Institute of Aeronautics and Astronautics