8 W.-H. Cheng et al.

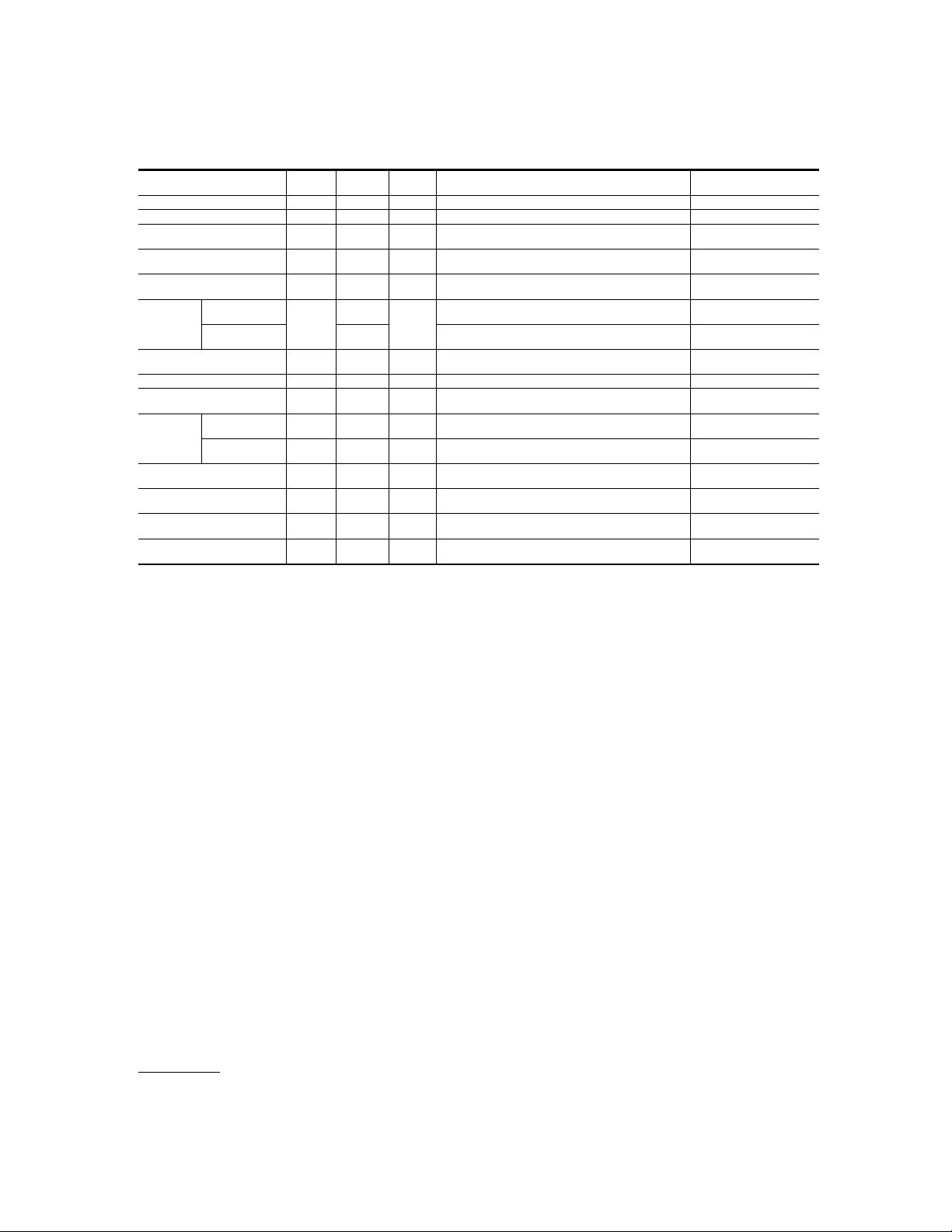

the overlapping area of the ground-truth and predicted regions to their total area, (4) mean accuracy, (5) average precision, (6) average recall, (7) average F-1 score over pixels, and (8) foreground accuracy, defined as the number of correctly labeled pixels on the body over the total number of ground-truth pixels on the body.
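To make these pixel-level metrics concrete, the following is a minimal sketch that computes them from a confusion matrix. It assumes flattened integer label maps of equal length, that label 0 (the hypothetical `background` parameter) marks non-body pixels, and that per-class scores are averaged only over classes present in the ground truth. These are illustrative conventions, not the exact protocol of any particular benchmark.

```python
from typing import Dict, List, Sequence


def confusion_matrix(gt: Sequence[int], pred: Sequence[int], num_classes: int) -> List[List[int]]:
    """cm[i][j] counts pixels whose ground-truth label is i and predicted label is j."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for g, p in zip(gt, pred):
        cm[g][p] += 1
    return cm


def _div(num: float, den: float) -> float:
    # Returns 0.0 instead of raising when a denominator is empty.
    return num / den if den else 0.0


def parsing_metrics(gt: Sequence[int], pred: Sequence[int], num_classes: int,
                    background: int = 0) -> Dict[str, float]:
    cm = confusion_matrix(gt, pred, num_classes)
    tp = [cm[c][c] for c in range(num_classes)]
    gt_count = [sum(cm[c]) for c in range(num_classes)]                   # row sums
    pred_count = [sum(row[c] for row in cm) for c in range(num_classes)]  # column sums
    # Assumed convention: average only over classes that occur in the ground truth.
    present = [c for c in range(num_classes) if gt_count[c] > 0]

    recall = [_div(tp[c], gt_count[c]) for c in present]  # per-class accuracy
    precision = [_div(tp[c], pred_count[c]) for c in present]
    f1 = [_div(2 * p * r, p + r) for p, r in zip(precision, recall)]
    # IoU: overlap of ground truth and prediction over their union.
    iou = [_div(tp[c], gt_count[c] + pred_count[c] - tp[c]) for c in present]

    # Foreground accuracy: correctly labeled body pixels / all ground-truth body pixels.
    body = [i for i, g in enumerate(gt) if g != background]
    fg_correct = sum(1 for i in body if pred[i] == gt[i])

    k = len(present)
    return {
        "pixel_accuracy": _div(sum(tp), len(gt)),
        "mean_iou": _div(sum(iou), k),
        "mean_accuracy": _div(sum(recall), k),
        "avg_precision": _div(sum(precision), k),
        "avg_recall": _div(sum(recall), k),
        "avg_f1": _div(sum(f1), k),
        "foreground_accuracy": _div(fg_correct, len(body)),
    }
```

For example, `parsing_metrics([0, 0, 1, 1, 2, 2], [0, 1, 1, 1, 2, 0], num_classes=3)` gives a pixel accuracy of 4/6, a mean IoU of 0.5, and a foreground accuracy of 0.75. Under these definitions, mean accuracy and average recall coincide, because per-class accuracy is per-class recall.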

In particular, most parsing methods are evaluated on the Fashionista dataset [206] in terms of accuracy, average precision, average recall, and average F-1 score over pixels. We report the performance comparisons in Table 1 of the Supplementary Material.

2.3 Item Retrieval

As fashion e-commerce has grown over the years, there has been high demand for innovative solutions that help customers find preferred fashion items with ease. Although many online fashion shopping sites support keyword-based search, many visual traits of fashion items are not easily translated into words. This has attracted tremendous attention from many research communities to develop cross-scenario, image-based fashion retrieval methods that match real-world fashion items to online shopping images. Given a fashion image query, the goal of image-based fashion item retrieval is to find similar or identical items in the gallery.

2.3.1 State-of-the-art methods. The notable early work on automatic image-based clothing retrieval was presented by Wang and Zhang [190]. To date, extensive research has been devoted to the problem of cross-scenario clothing retrieval, because there is a large domain discrepancy between daily photos of people captured in general environments and clothing images taken under ideal conditions (i.e., the embellished photos used in online clothing shops). Liu et al. [122] proposed an unsupervised transfer learning method based on part-based alignment and features derived from sparse reconstruction. Kalantidis et al. [86] approached clothing retrieval from the perspective of human parsing. A prior probability map of the human body was obtained through pose estimation to guide clothing segmentation, and the segments were then classified through locality-sensitive hashing. Visually similar items were retrieved by summing up the overlap similarities. Notably, [86, 122, 190] are all based on hand-crafted features.
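The hashing-then-summing pipeline attributed to Kalantidis et al. [86] can be sketched as follows. This is not their implementation: the hash family is swapped in as generic random-hyperplane LSH, cosine similarity stands in for their overlap similarity, and segment features are assumed to be precomputed vectors. The class and function names (`HyperplaneLSH`, `rank_items`) are hypothetical.

```python
import math
import random
from collections import defaultdict
from typing import DefaultDict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class HyperplaneLSH:
    """Random-hyperplane LSH: each bit records which side of a random hyperplane a
    vector falls on, so vectors separated by a small angle tend to share a bucket."""

    def __init__(self, dim: int, num_bits: int = 8, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.planes = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(num_bits)]
        self.buckets: DefaultDict[Tuple[int, ...], List[Tuple[str, Sequence[float]]]] = defaultdict(list)

    def _key(self, vec: Sequence[float]) -> Tuple[int, ...]:
        return tuple(int(sum(w * x for w, x in zip(plane, vec)) >= 0.0) for plane in self.planes)

    def add(self, item_id: str, segment_feature: Sequence[float]) -> None:
        self.buckets[self._key(segment_feature)].append((item_id, segment_feature))

    def candidates(self, vec: Sequence[float]) -> List[Tuple[str, Sequence[float]]]:
        # dict.get avoids creating empty buckets for keys that were never indexed.
        return self.buckets.get(self._key(vec), [])


def rank_items(index: HyperplaneLSH, query_segments: Sequence[Sequence[float]]) -> List[Tuple[str, float]]:
    """Sum the similarities of every colliding (query segment, gallery segment) pair per item."""
    scores: DefaultDict[str, float] = defaultdict(float)
    for q in query_segments:
        for item_id, feat in index.candidates(q):
            scores[item_id] += cosine_similarity(q, feat)
    return sorted(scores.items(), key=lambda pair: pair[1], reverse=True)
```

Only segments that collide in a bucket are compared, which is what keeps the search cheap; an item that matches several query segments accumulates a higher total score.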

With the advances of deep learning, there has been a trend toward building deep neural network architectures to solve the clothing retrieval task. Huang et al. [69] developed a Dual Attribute-aware Ranking Network (DARN) that learns in-depth feature representations through attribute-guided learning. DARN simultaneously embedded semantic attributes and visual similarity constraints into the feature learning stage while also modeling the discrepancy between domains. Li et al. [103] presented a hierarchical super-pixel fusion algorithm for obtaining the intact query clothing item and used sparse coding to improve accuracy. More specifically, an over-segmentation hierarchical fusion algorithm combined with human pose estimation was used to extract the query clothing items and to retrieve similar images from the product clothing dataset.
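Ranking networks such as DARN [69] enforce visual similarity constraints through a ranking objective. The sketch below shows a generic margin-based triplet ranking loss of that kind; the Euclidean distance, the margin of 0.3, and the street/shop reading of anchor, positive, and negative are assumptions for illustration, and the attribute branches and dual-network structure of DARN are omitted.

```python
import math
from typing import Iterable, Sequence, Tuple

Vector = Sequence[float]


def euclidean(a: Vector, b: Vector) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_ranking_loss(anchor: Vector, positive: Vector, negative: Vector,
                         margin: float = 0.3) -> float:
    """Hinge loss that becomes zero once the negative is at least `margin`
    farther from the anchor than the positive is."""
    return max(0.0, margin + euclidean(anchor, positive) - euclidean(anchor, negative))


def mean_triplet_loss(triplets: Iterable[Tuple[Vector, Vector, Vector]],
                      margin: float = 0.3) -> float:
    # Average over a batch of (anchor, positive, negative) embeddings.
    losses = [triplet_ranking_loss(a, p, n, margin) for a, p, n in triplets]
    return sum(losses) / len(losses) if losses else 0.0
```

Here the anchor could be a street-photo embedding, the positive the embedding of the matching shop image, and the negative a different shop item. A triplet whose negative is already far enough away, such as `([0, 0], [0, 1], [0, 2])`, contributes zero loss, while `([0, 0], [0, 1], [0, 1.1])` still contributes about 0.2 and pushes the embeddings apart.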

The abovementioned studies are designed for similar fashion item retrieval. More often, however, people want to find the same fashion item, as illustrated in Fig. 4(a). The first attempt at this task was made by Kiapour et al. [45], who developed three different methods for retrieving, from online shops, the same fashion item that appears in a real-world image, i.e., the street-to-shop retrieval task. The three methods comprised two deep learning baselines and one method that learns the similarity between two different domains, the street and shop domains. With the same goal of learning a deep feature representation, Wang et al. [188] adopted a Siamese network containing two copies of the Inception-6 network with shared weights. They also introduced a robust contrastive loss to alleviate over-fitting caused by positive pairs (containing the same product) that are visually different, and used a multi-task fine-tuning approach to learn a better feature representation by tuning the parameters of the Siamese network with product images and general images from ImageNet [27]. Further, Jiang et al. [83] extended the one-way problem, street-to-shop retrieval task,

, Vol. 1, No. 1, Article . Publication date: January 2021.